M1 Max killer

You are right… but let's put this in context, because the devil is in the details!

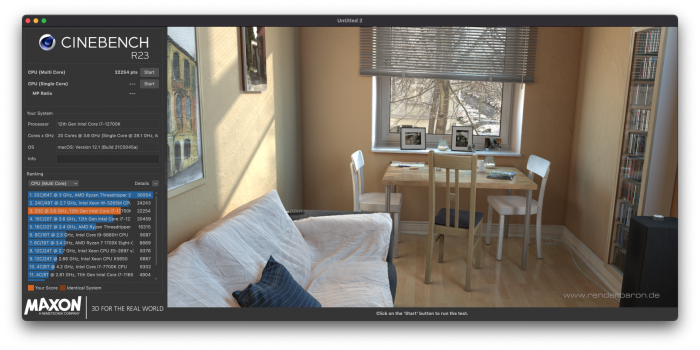

Today, that top-of-the-line x86-64, 16-core, 272 Watt desktop/workstation monster from Intel is an M1 Max laptop killer, against the M1 Max's smaller 10-core count, on software that is not optimized for M1.

For comparison, a hypothetical 200+ Watt M1 would likely scale to 28-30 M1 cores, maybe more!

It's probably worth revisiting the accuracy of this "killer" statement in 6 months to 1 year (once Cinebench has been optimized for Apple Silicon) to see whether it remains true for users of Cinebench and Cinema 4D.

In the short term, on most other apps, I'd still expect the ADL (Alder Lake) desktop, with its 200W+ power footprint under heavy workstation load, to show its 5-10% performance advantage over an M1 Pro/Max on unoptimized synthetic benchmarks, games and legacy code. Of course, apps natively optimized for Apple Silicon show a significant performance advantage the other way in DaVinci Resolve, Final Cut Pro, Logic, etc.

That being said, the fixation on Cinebench has got me thinking...

Clearly Maxon Cinema 4D is very important to a number of our friends on this thread. Would the next person who is dissatisfied with M1 Max performance in Cinebench be willing to share the types of professional 3D workloads they actually run in Cinema 4D (not pre-canned benchmark scenes)? That would help me understand the nature of your workload, where you are limited by the performance the M1 Max offers in a portable laptop, and where you would realize greater value by jumping to a desktop workstation configuration.

I really want to calibrate my understanding of the performance expectations here…

For example, @Leifi / @bombardier10: is the Cinebench test scene you are using representative of the types of scenes you build in your spare time, at your job, or in your professional lives?

If so, would you care to share samples of your 3D work (appreciating copyright considerations with your employer, I'd be grateful just to see a personal project)? Perhaps the community here on this Apple forum can then help evaluate the level of performance you are unhappy with when comparing an M1 Pro/Max against Alder Lake.

You may be better served by other software that is better optimized for your workflow; have you looked at alternatives?

I look forward to seeing what all you talented, creative people are able to show and share, and to seeing whether I can help you find an alternative that better meets your needs.

Happy Holidays,

Tom.