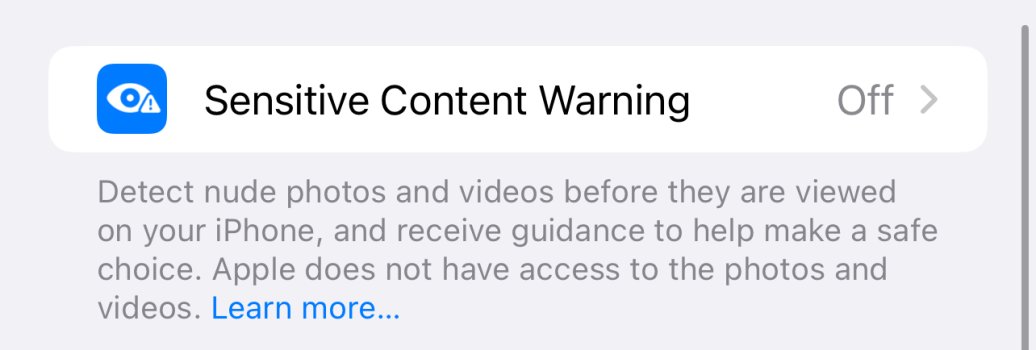

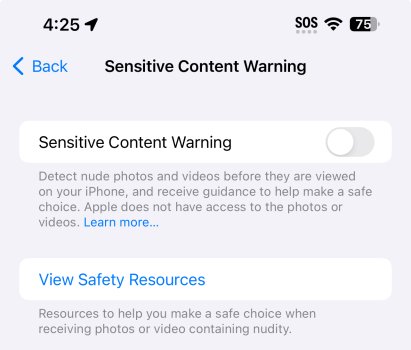

With iOS 17, Apple is adding a feature designed to automatically block incoming messages and files that may contain sensitive content such as nudity.

Opt-in blurring can be applied to sensitive images sent in Messages, AirDrop, Contact Posters for the Phone app, FaceTime messages, and third-party apps. The feature is meant to keep adult iPhone users from being subjected to unwanted imagery. Detected nudity is blurred by default, but can be viewed by tapping the "Show" button.

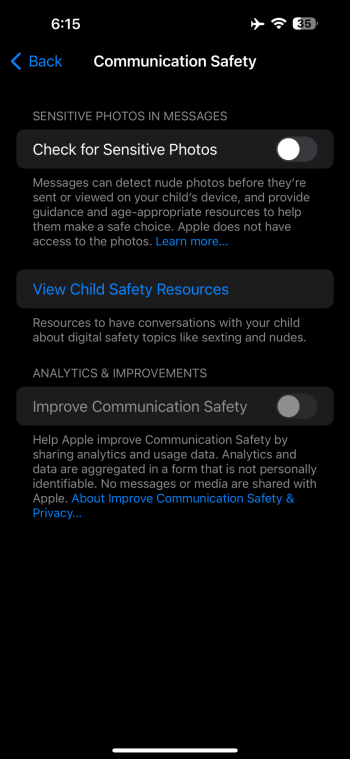

Sensitive Content Warnings are an expansion of the Communication Safety options that Apple introduced for children last year, and they work the same way: all detection is done on device, so Apple does not see the content that's being shared.

Communication Safety detects and blocks nude images before children can view them, and with iOS 17, this too will expand to encompass AirDrop, the systemwide photo picker, FaceTime messages, and third-party apps.

Article Link: iOS 17 Can Automatically Block Unsolicited Nude Photos With 'Sensitive Content Warnings'
