the SAS cable is so short that I would not be able to connect it to any other slot except the top one anyway.

Yeah, this has been a problem before as well, and Maxupgrades has an adapter for that too (here).

The number of adapters, plus the need for more externals than a case with more bays would have required, was the cost factor that played into my not keeping a 2008.

and, yes I am pretty sure I rebooted while the RAID5 was building, because a bunch of programs froze and would not force quit.

Ouch. That didn't help matters either.

On the plus side though, the card will pick back up where it left off (this applies to initialization, recovery, online expansion, and online migration), assuming the boot process is completed properly.

I have multiple copies of everything right now. I was planning on having this second RAID5 as a backup, in the same enclosure, would that be foolish? as the two raids would use the same controller?

No, it's not the worst way to go in your situation, as a blown card is very rare. It always comes down to mitigating failure conditions in regard to usage requirements and costs, as there's no such thing as a fool-proof, 100% covered storage system, no matter how well it is put together.

But having it on a separate controller (i.e. an eSATA + Port Multiplier based enclosure) is more cost effective. Not only are the card and enclosure cheaper, but you can also use consumer grade drives, and Greens on top of that (best cost/GB ratio available). It just happens to cover you in the event of a dead RAID card as well.

Where this differs of course, is with very critical data, such as what would require a SAN (i.e. think banking records, gov. records such as IRS data,...). These types of setups incorporate redundancy on the computers, installed cards, how the arrays are configured, networking gear, ...

originally it was on the Apple RAID card, I copied that boot drive onto a FW drive, then was planning on copying that onto a drive attached to the logic board, however that was problematic, so its back running off the Apple Raid.

There is a way to connect the OS drive to the logic board without using the MiniSAS (SFF-8087) connector. You'll physically have to install the boot drive elsewhere, and the empty ODD bay (optical disk drive) is the easiest place to do this.

As for cables, a Molex to SATA power cable will handle supplying the juice, and a standard SATA data cable is used to connect to one of the ODD_SATA ports found on the logic board (upper left IIRC, near the fan; they're a bit buried, but they're there). The only caveat is that you may not be able to boot any other OS from that port (i.e. 2008's won't boot Windows without a hack). Routing isn't exactly fun according to those who have done it, but it's possible.

It's in here somewhere, and it has pics on where the ports are located and how they routed the data cable. So it would be worth the time to search (rather old, so you'll need to be willing to put in some time to find it - could be more than an hour just to locate it).

As far as I can access...

There are things that can be done, which are listed above.

BTW, the image link isn't showing anything.

that's interesting, the drive is Quad interface, so I will run it through usb then; however I have a hard time thinking Mac will ever give a problem with FW800 until Thunderbolt is perfected.

No way to be sure with Apple, but in general, USB is the safer way to go when working on multiple, independent systems (i.e. on different, unconnected networks or stand-alone systems).

Cost wise, it's also inexpensive, and a good way to go for independents/SMB's (and I stress small) that don't have the networking infrastructure or require an OS image that will be used on multiple machines.

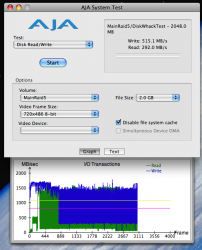

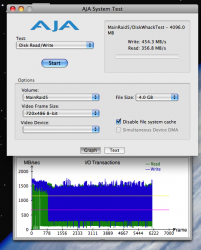

FW800 isn't the fastest thing out there, so ~80MB/s is realistic (theoretically it would be 100MB/s sustainable, but there's latency to deal with that reduces the figure in the real world).
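To put rough numbers on that, here is a quick back-of-the-envelope sketch. It assumes the 100MB/s figure comes from FW800's nominal 800Mbit/s rate divided by 8 bits per byte, and uses the ~80MB/s real-world figure from above; the variable names are just for illustration.

```python
# Back-of-the-envelope FireWire 800 throughput.
# Assumption: the 100 MB/s ceiling is the nominal 800 Mbit/s divided by 8.
FW800_NOMINAL_MBIT_S = 800   # nominal rate, megabits per second
BITS_PER_BYTE = 8
REAL_WORLD_MB_S = 80         # the "realistic" figure quoted above

# Convert the nominal bit rate into megabytes per second.
theoretical_mb_s = FW800_NOMINAL_MBIT_S / BITS_PER_BYTE

# Fraction of the theoretical ceiling lost to latency and overhead.
overhead_fraction = 1 - REAL_WORLD_MB_S / theoretical_mb_s

print(f"Theoretical ceiling: {theoretical_mb_s:.0f} MB/s")
print(f"Lost to latency/overhead: {overhead_fraction:.0%}")
```

So seeing roughly a fifth less than the theoretical figure on FW800 would not, by itself, point to a fault.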

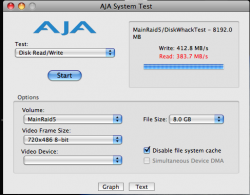

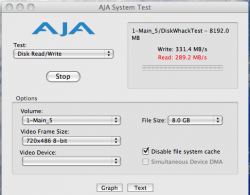

Or did you mean this is all you're getting off of the Apple RAID Pro?

At any rate, it's possible to remove the Apple RAID Pro altogether (it really is that bad), and I'd recommend doing so to regain a PCIe slot.

For example, the following slot config is both possible and desirable if you've only a single GPU card:

- Slot 1 = GPU

- Slot 2 = Areca RAID Card

- Slot 3 = eSATA card for a backup system

- Slot 4 = empty

I kept all my enterprise drives in my external enclosure, and I was not running raid on my apple raid card, just JBOD, I didn't think this would be an issue, I was planning on not running through my RAID card at all and using the logic board, which is why I was going to fill my internal slots with consumer rated drives, and have one be boot and the other three on a software RAID0.

If consumer drives are connected to the logic board, that's fine. But they don't do well on a proper hardware RAID card such as an Areca (this is why you do not find consumer disk P/N's on the HDD Compatibility List on their support site).

You'll be able to get the HDD bay cable back on the logic board (it was designed to fit). Perhaps not the easiest task, but it's possible, and worth the effort in order to get the configuration you're after.

Most users, however, attach the internal HDD bays to the RAID card, as it costs less than an external solution for every single disk attached to the card (i.e. even with say a 12 port card, 4x are internal in the MP, and up to 8x in an external enclosure).

Look at it this way: an internal adapter is ~$130USD or so, while a 4 bay enclosure with an SFF-8088 port on the rear goes for $300USD or so. Even if an internal to external cable is needed ($60USD), it's still a savings of $110, which is particularly attractive on a tight budget.
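The arithmetic behind that savings figure, as a minimal sketch. The prices are the approximate ones quoted above, not current quotes, and the constant names are made up for illustration.

```python
# Rough cost comparison: internal bays on the RAID card vs. an external enclosure.
# Prices are the approximate USD figures quoted above.
INTERNAL_ADAPTER_USD = 130      # adapter to attach the internal HDD bays to the card
INT_TO_EXT_CABLE_USD = 60       # internal to external cable, only if one is needed
EXTERNAL_ENCLOSURE_USD = 300    # 4 bay enclosure with an SFF-8088 port

# Worst case for the internal route: the adapter plus the extra cable.
internal_total_usd = INTERNAL_ADAPTER_USD + INT_TO_EXT_CABLE_USD

# What is saved by using the internal bays for four of the disks.
savings_usd = EXTERNAL_ENCLOSURE_USD - internal_total_usd

print(f"Internal route: ${internal_total_usd}, savings: ${savings_usd}")
```

If the cable turns out not to be needed, the gap widens by the cable's price.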

I had changed the boot location, I was running off the external.

Assuming the settings had taken properly (I'll assume for the moment this is the case, and not lost due to a weak CMOS battery in the MP), the consumer drives could easily have caused all that aggravation/mess.

when I said get rid of the card, I meant the Apple card, the Areca seems to be fine.

Ah, OK. Your post was a bit confusing (this one is too), so I'm wading through them as best as I can.

RAID is a difficult undertaking, and the details are critical. So a misunderstanding is quite an easy occurrence, unfortunately. This is why either email or phone support can be so arduous.

The only reason I was using consumer level drives is because I was not planning on using a hardware raid card with them.

This is fine.

But I'm under the impression they ended up connected to the Areca, which would easily cause the mess you encountered (seen this before with those that didn't know any better, or worse, ignored the advice given prior to their equipment purchases).

There are other things that could have caused it as well, such as a duplicated LUN, but the information you've made available leads me to think that the consumer disks somehow ended up attached to the Areca (and they'll do this even if not in a RAID set).

BTW, in the case of a RAID card, you won't be able to run JBOD and RAID simultaneously. If you dig, it's in one of the card's settings, so it's one or the other, but never both. A second card is needed for that, or you can run it from Disk Utility for a lower cost solution (which is fine, and is a nice way to save on funds too). Great for backups when a single volume is desired with less risk than RAID 0 (JBOD's risk level is that of a single disk).

However, although it's possible to run the drives off the logic board, it does not seem to be worth my time, as I have other work I need to get to and I need my computer functional and stable.

It won't take that long (an hour should be sufficient for the actual work). Figuring in research and really taking your time, you should still be able to get it all done in a day (physical installation + initialization processes + at least beginning the data restoration).

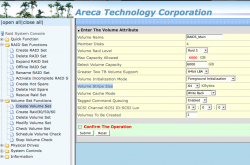

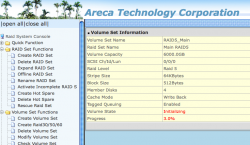

Am I better off using the "expand raid set" function in the Areca Raid Storage Manager, or should I copy everything to another drive, then delete the 4 drive array, and rebuild as a six?

I'd recommend you always make a backup prior to changing the array in any manner.

Now once that's done, it's your choice if you want to use the Online Expansion method, or do it manually (there are advantages to both).

Online Expansion can allow you to access the data while the expansion process is underway, but it's slower as the card's processing is divided between both the expansion function and providing data simultaneously (also, make sure the settings will allow this, as Foreground under Initialization could prevent the system from gaining access to the data during the Expansion process).

Manually is faster, but you won't be able to access the data until it's completed.