Question: Have you wondered why Apple is rumored to launch the M4 series by the end of the year? This round, Apple is expected to launch M4 from top to bottom, including the Mac Mini, within a single quarter. Why?

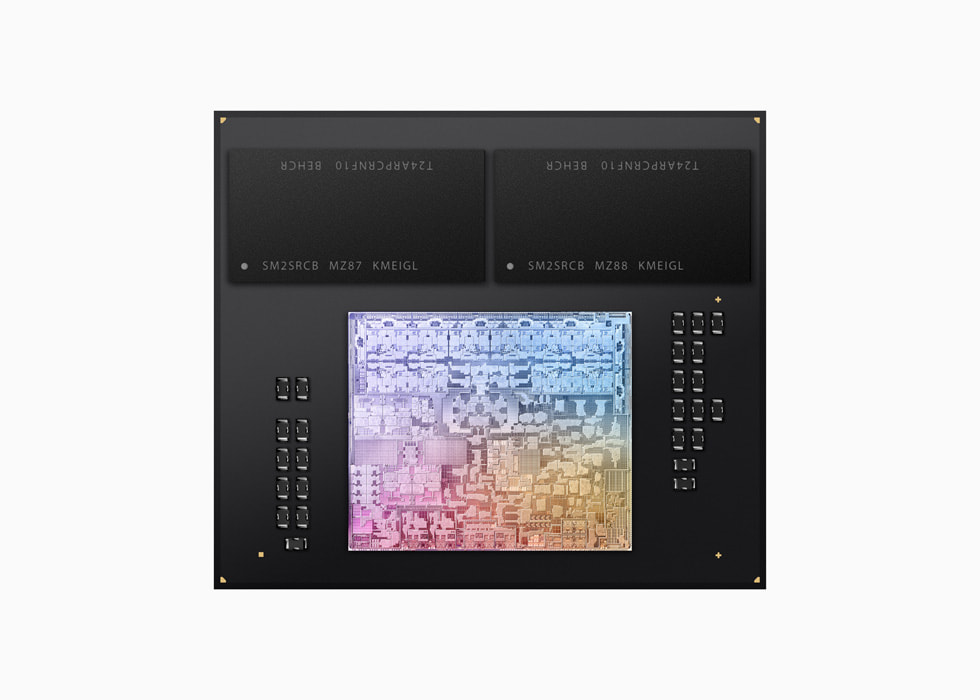

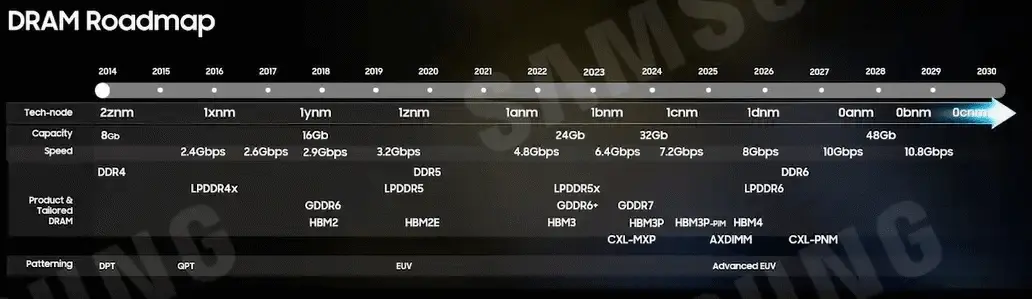

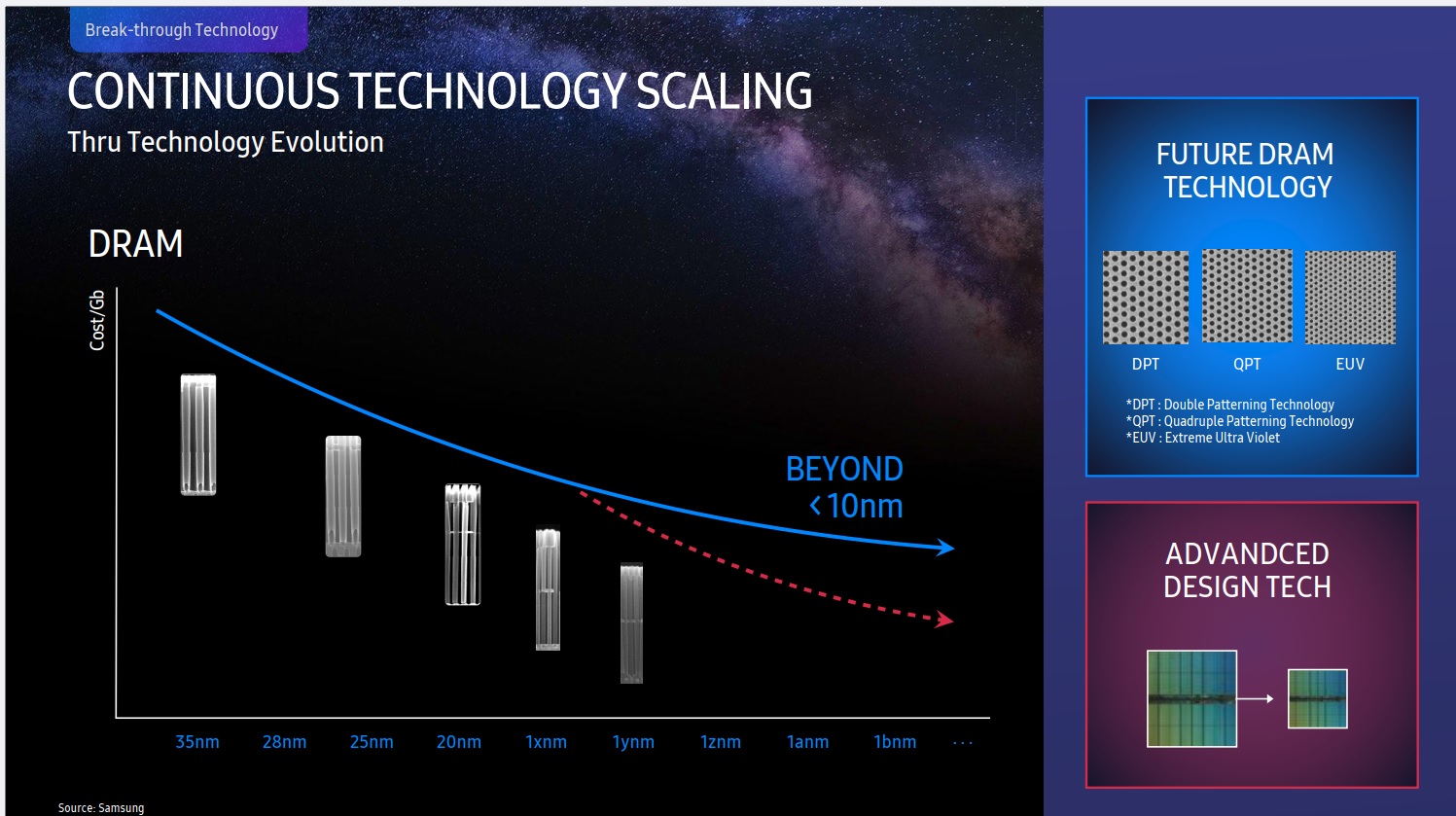

Mark Gurman can't tell you, but I can. The key ingredient missing is LPDDR6.

Apple will reset the whole Mac lineup with LPDDR6 to stay competitive. Next year, we should see at least 4 more players entering the ARM PC market with Cortex-X5, which has higher IPC than M3.

Before I explain why the upcoming M4 series will support the LPDDR6 standard and raise the maximum amount of RAM to 512GB, let me show you the current LPDDR5 RAM configuration so that you guys can prepare for what is coming later this year:

| Memory Density 16Gb (2GB) | M3 | M3 Pro | M3 Max |
|---|---|---|---|
| LPDDR5 Memory Bus | 128-bit | 192-bit | 512-bit |
| Memory BW | 100 GB/s | 150 GB/s | 410 GB/s |
| Memory Chips | 2 pcs | 3 pcs | 4 pcs |
| x2 = 4GB (S: 32-Gb) | 8 GB | | |
| x3 = 6GB (M: 48-Gb) | | 18 GB | |
| x4 = 8GB (S: 64-Gb) | 16 GB | | |
| x6 = 12GB (S: 96-Gb) | 24 GB | 36 GB | 48 GB |
| x8 = 16GB (S: 128-Gb) | | | 64 GB |
| x16 = 32GB (256-Gb) | | | 128 GB |

- Apple reduced the memory bus of the M3 Pro from 256-bit to 192-bit. You may ask why. Cost cutting? Nah, Apple actually increased the RAM from 16GB to 18GB by ordering 48-Gb memory chips from Micron.

- Apple could have used two 48-Gb chips to make 12GB the standard in M3, but it didn't. Why? One reason is that Apple is waiting for LPDDR6; another is that the 12-16-24 GB steps of LPDDR5 are not evenly spaced.

- M3 Max is a different SoC, with a 128-bit memory bus connecting to each memory chip compared to 64-bit on M3 and M3 Pro. That's why Apple is able to support a 512-bit memory bus with only 4 memory chips.
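For anyone who wants to check the bandwidth numbers in the table, here is a rough sketch of the usual peak-bandwidth arithmetic: bus width in bytes times the transfer rate. The function name and the dictionary are my own for illustration, and I am assuming all three chips run their memory at the LPDDR5-6400 rate quoted for the M3, so treat the output as theoretical peaks rather than measured figures.

```python
# Theoretical peak memory bandwidth from bus width and transfer rate.
# Assumption: every M3 variant uses LPDDR5-6400 (6400 MT/s per pin).

def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: int) -> float:
    """Return peak bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8        # bits -> bytes moved per transfer
    return bytes_per_transfer * data_rate_mtps / 1000  # MB/s -> GB/s

LPDDR5_RATE_MTPS = 6400

# Bus widths taken from the table above.
M3_BUS_WIDTH_BITS = {"M3": 128, "M3 Pro": 192, "M3 Max": 512}

bandwidths = {
    chip: peak_bandwidth_gbs(width, LPDDR5_RATE_MTPS)
    for chip, width in M3_BUS_WIDTH_BITS.items()
}

for chip, bw in bandwidths.items():
    print(f"{chip}: {bw:.1f} GB/s")
```

The results land at roughly 102, 154 and 410 GB/s, which is where the rounded 100 / 150 / 410 GB/s figures in the table come from.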

Apple's LPDDR6 Solution

Shown above is the die shot of the M3 SoC from Apple. The M3 is connected to 128-bit LPDDR5-6400 with 102GB/s of bandwidth.

LPDDR6 Standard: 24-bit channel width

Apple is going to implement something that looks weird yet makes sense once you understand the logic behind it. For the upcoming M4 series, Apple is going to introduce a 96-bit LPDDR6 memory bus per memory chip (four 24-bit channels), as shown below:

| Memory Density 32Gb (4GB) | M5 | M5 Pro | M5 Max |
|---|---|---|---|
| LPDDR6 Memory Bus | 96-bit | 192-bit | 384-bit |
| Memory BW | ? GB/s | ? GB/s | ? GB/s |
| + % | | | |
| Memory Chips | 1 pc | 2 pcs | 4 pcs |
| x3 = 12GB | 12 GB | 24 GB | 48 GB |
| x4 = 16GB | 16 GB | | 64 GB |
| x6 = 24GB | 24 GB | 48 GB | |
| x8 = 32GB | 32 GB | | 128 GB |
| x12 = 48GB | | | 192 GB |
| x16 = 64GB | | | 256 GB |
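The totals in this table follow one simple rule: number of memory chips times capacity per chip. Below is a small sanity check of that rule. The names are hypothetical, and the placement of each total under a particular SoC column is my reading of the table (each value assigned to the column where chips times per-chip capacity matches), not something confirmed elsewhere.

```python
# Sanity check: total RAM = number of memory chips x capacity per chip.
# Chip counts come from the "Memory Chips" row of the table.
CHIPS_PER_SOC = {"M5": 1, "M5 Pro": 2, "M5 Max": 4}

# Filled cells of the table: per-chip capacity (GB) -> {SoC: listed total (GB)}.
# Assumption: column placement inferred from the arithmetic.
LISTED_TOTALS = {
    12: {"M5": 12, "M5 Pro": 24, "M5 Max": 48},
    16: {"M5": 16, "M5 Max": 64},
    24: {"M5": 24, "M5 Pro": 48},
    32: {"M5": 32, "M5 Max": 128},
    48: {"M5 Max": 192},
    64: {"M5 Max": 256},
}

def total_gb(soc: str, gb_per_chip: int) -> int:
    """Total RAM in GB for a given SoC and per-chip capacity."""
    return CHIPS_PER_SOC[soc] * gb_per_chip

# Collect every cell where the listed total disagrees with the rule.
mismatches = [
    (gb_per_chip, soc)
    for gb_per_chip, row in LISTED_TOTALS.items()
    for soc, listed in row.items()
    if total_gb(soc, gb_per_chip) != listed
]

print("mismatches:", mismatches)
```

An empty mismatch list just means the speculated configurations are internally consistent; it says nothing about whether Apple will actually ship them.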

First of all, you have to understand that Samsung, the biggest memory maker, is making a 24Gb (3GB) die as its standard LPDDR6 die. Therefore, every LPDDR6 memory chip will contain multiple layers of memory dies; the most common stack is x4, which equals 12GB.

Update: Mark Gurman mentioned that the M4 series is going to support up to 512GB, so it seems Apple is going even bigger with LPDDR6. They are going to ask Samsung to manufacture 32Gb (4GB) dies. I have updated the table to show the new memory sizes.
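To see what moving from 24Gb to 32Gb dies does to a single memory chip, here is a quick sketch of the gigabit-to-gigabyte conversion for a stack of dies. The function name is made up for illustration, and the stack heights are simply the ones that appear in the tables (x3 to x16); whether every height is actually manufactured at each density is an assumption.

```python
# Capacity of one memory chip built from a stack of identical dies.
# Die density is given in gigabits (Gb); 8 Gb = 1 GB.

def stack_capacity_gb(dies: int, die_density_gbit: int) -> float:
    """Return the capacity in GB of a chip made of `dies` stacked dies."""
    return dies * die_density_gbit / 8

# Stack heights used in the tables above (assumed available at both densities).
STACK_HEIGHTS = (3, 4, 6, 8, 12, 16)

for dies in STACK_HEIGHTS:
    with_24gb = stack_capacity_gb(dies, 24)
    with_32gb = stack_capacity_gb(dies, 32)
    print(f"x{dies}: {with_24gb:g} GB with 24Gb dies, {with_32gb:g} GB with 32Gb dies")
```

The x4 stack goes from 12GB to 16GB per chip, and an x16 stack of 32Gb dies reaches 64GB per chip.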

The Advantages of LPDDR6

The roadmap states that LPDDR6 will be available in 2026, not by the end of 2024. So why do I think Apple will ship LPDDR6 by the end of 2024? Because the standard will be certified by Q3 this year, and Samsung has been manufacturing 24Gb, 6.4 Gbps memory dies using EUV since 2023. Samsung is also making 32Gb memory dies in 2024.

Unless Mark Gurman's leak about the 512GB memory size is wrong and the Mac Mini will not be updated within the same timeframe, Apple is gearing up for a super cycle of the Mac lineup.

Do you think Apple, among the first to release a MacBook Pro with 512-bit LPDDR5, doesn't know the advantages of LPDDR6?

If my speculated RAM configuration is correct, Apple will be working closely with Samsung to build 32Gb memory dies with a 96-bit memory bus.

Mac volume is relatively small compared to iPhone sales volume. That's why I suspect Apple might reserve LPDDR6 for the Pro lineup, most likely using the 24Gb memory die that has been manufactured since 2023.

PS: There is already news about Qualcomm's upcoming 8G4 using LPDDR6 by the end of this year. Do you think Apple will miss the biggest change in RAM?
