
M4+ Chip Generation - Speculation Megathread [MERGED]

- Thread starter GoetzPhil


Seems we should start talking about M4 and M5 now

Some findings from other

So leman was right some time ago that we could start seeing quite early M5 variation


Guess that would be M5 MacBook Air. Possibly even the same timeline as the H1 2022 launches: M1 Ultra in March, M2 MacBook Air in June. So M4 Ultra in March 2025, followed by M5 MacBook Air in June 2025.

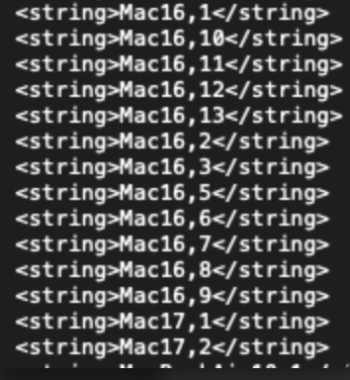

There are twelve M4 Macs identified there (Mac16,4 is missing), so, let's see, I count [1] M4 iMac [2] M4 Mini [3] M4 Pro Mini [4] M4 MacBook Pro 14" [5] M4 Pro MacBook Pro 14" [6] M4 Max MacBook Pro 14" [7] M4 Pro MacBook Pro 16" [8] M4 Max MacBook Pro 16" [9] M4 Max Mac Studio [10] M4 Ultra Mac Studio [11] M4 Ultra Mac Pro.

That's eleven, so there's room for one more thing... [12] M4 Extreme Mac Pro!

EDIT: My count is incorrect, there are two current iMac identifiers (2-port and 4-port), not one 😳 🙈

So we’re left hanging our new-product hats on the missing Mac16,4 identifier…


I think with the M4 family it's time for Apple to do an option that works just for the Mac Pro alone, with proper high cooling compared to a Mac Studio or anything else in the Mac family.

"...there's room for one more thing..."

or iMac Pro?

or iMac Plus? or iMac 6K?

More likely than “iMac Pro,” in my view. I don’t see them undermining the Studio, but on the other hand there is demand for a larger iMac. Could have M4 Pro in it, I suppose, but I don’t think they would call it iMac Pro — it would be heir to the original 5K iMac, not the later iMac Pro.

So leman was right some time ago that we could start seeing quite early M5 variation

I hoped we would jump straight to M5 for the entire Mac lineup. If they do the M4 Pro/Max in the fall and M5 MBA in the spring, my hopes would be proven misplaced.

So you thought the M4 would be just for the iPad Pro? And M5 in the fall?

Now it depends on what M5 would bring. M4 over M3 seems a good improvement overall.

So you thought the M4 would be just for the ipad pro?

That and maybe the MBA. I was hoping that M5 will introduce a new system approach that is better suitable for high-performance applications.

When you get a chance, I'd be interested to hear what you had in mind.

Which got me wondering who might be using CoWoS-R, or be the motivation for this approach?


Following on . . . when CoWoS-R arrives it will bring a notable difference to the general Advanced versus Standard Packaging discussion that has occurred around interconnect PHY. The division between Advanced and Standard Packaging has defaulted around whether a Silicon Interposer / Bridge is used or not, because they provided the trace density and the signal path conditions existing interconnect PHYs needed.

The difference after CoWoS-R becomes available will be that TSMC and Apple’s patent are aiming at an RDL interposer with a signal carrying capacity (on a mm / mm2 basis) that can equal or rival Silicon Interposers along their Die-to-Die beachfronts. TSMC has noted a 4 µm Wire/Space in the RDL layers and, I’m guessing from Apple’s Patent, they will be able to go down to a 25 µm bump pitch along the die edges if and when required.

From Apple’s 2022 Patent:

[0026] . . . The routing substrates 130 in accordance with embodiments can also be formed using thin film techniques. For example, the insulation layer(s) 134 may be formed of a photoimageable dielectric material including polymers (e.g. polyimide, epoxy, epoxy blends, etc.) or inorganic materials (e.g. oxide, nitride), while the metal routing layers 132 and vias 136 may be formed of a suitable metal, including copper. In such an embodiment the metal routing layers 132 result in a smooth surface, lowering conductor losses. Routing substrates formed using thin film techniques may have finer wiring patterns, such as 5 μm W/S, and 25 μm bump pitch (landing pad pitch) compared to more traditional MCM substrates with 10 μm W/S, and 90-110 μm bump pitch (landing pad pitch).

[0030] In accordance with some embodiments, the dies 110 and components 120 may be surface mounted onto the routing substrate 130 in a die-last approach. In such an embodiment, the surface mounting may be a flip chip connection, for example, with solder bumps 150 and optional micro-bumps 151 onto landing pads 152 of the routing substrate 130. Micro-bumps 151 may be smaller than solder bumps 150, and may be used in particular for the die-to-die routing 140 for finer pad pitch and wiring. In the illustrated embodiment micro-bumps 151 may be substantially smaller (less volume) than solder bumps 150 (e.g. for regular flip chip). . . .

All this means is, when we were comparing the relative Tbps/mm from the Next Platform chart (below) earlier in this thread, the more relevant Tbps/mm value for CoWoS-R would have been the >10 Tbps/mm from the Advanced Packaging side of the chart, coupled with the power and latency on the Standard Packaging side. (Theoretically ~x10+ Apple's original UltraFusion.)

Eliyan rates its SBD PHY at 4.55 Tbps/mm off of a 100 µm pitch. Combined with CoWoS-R their Interconnect Standard should be able to do multiples of that figure going all the way down to a 25 µm pitch. If Apple is the client Eliyan has and TSMC has been developing CoWoS-R for Apple, they are getting everything in place for a very long, expansive and unconstrained (beyond silicon interposers) chiplet design future.
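To put rough numbers on "multiples of that figure", here is a back-of-envelope sketch. The scaling laws are my assumption, not anything Eliyan or TSMC has published: the first case assumes bandwidth per mm of die edge grows only with the number of bumps along the edge (1/pitch), the second assumes the bump rows behind the edge tighten as well (1/pitch squared).

```python
# Back-of-envelope only: both scaling laws are assumptions for illustration.
BASE_TBPS_PER_MM = 4.55   # Eliyan's rated figure at a 100 um bump pitch
BASE_PITCH_UM = 100.0


def beachfront_bandwidth(pitch_um: float, exponent: int = 1) -> float:
    """Scale the base Tbps/mm figure by (base_pitch / pitch) ** exponent.

    exponent=1: only the bump count along the die edge grows (assumed).
    exponent=2: bump rows behind the edge tighten as well (assumed).
    """
    return BASE_TBPS_PER_MM * (BASE_PITCH_UM / pitch_um) ** exponent


for pitch in (100.0, 50.0, 25.0):
    edge_only = beachfront_bandwidth(pitch, 1)
    edge_and_depth = beachfront_bandwidth(pitch, 2)
    print(f"{pitch:5.0f} um pitch: {edge_only:6.2f} Tbps/mm (edge only), "
          f"{edge_and_depth:6.2f} Tbps/mm (edge + depth)")
```

Even under the conservative edge-only assumption, a 25 µm pitch lands above the >10 Tbps/mm figure from the Advanced Packaging side of the chart.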


When you get a chance, I'd be interested to hear what you had in mind.

One main idea is splitting the SoC into two or more dies using a (partially) stacked approach. This would improve compute density and permit more cost- and yield-efficient utilization of cutting-edge manufacturing processes. Another one is improving the memory subsystem, either by utilizing faster RAM or larger caches (a stacked die approach would make it possible to build something like multi-tier RAM, for example).

I am quite certain that something like this is coming, the big question is the timeline. It is possible that one needs backside power delivery and other advancements to land first.

Of course, more basic architectural improvements (like doubling FP32 pipes in the GPU etc.) are independent of platform improvements.

[...] Which got me wondering who might be using CoWoS-R, or be the motivation for this approach?

Seems to meet the primary aspirations outlined in Apple's x4 approach:

- Die Last ✅

- RDL Interposer (Not limited to the Reticle Limits of Silicon Interposers) ✅

Basically a combination of the CoWoS Die Last approach with a RDL Fan-Out (InFO) interposer suited to building large chiplet SiP's. All that's needed from there is an interconnect that will meet their bandwidth, latency and power spec's without the constraint of needing a silicon interposer [...]

I don't know, but if you're trying to track the movement in advanced packaging, there's also GUC (Global Unichip), which is practically a subsidiary of TSMC (their only customer, who holds a 30% stake). If you're talking TSMC's advanced packaging, you're talking GUC. The only reason I'm aware of them is because they are featured on the TSMC site in the "Chronicle of InFO/CoWoS/SoIC" timelines. But TSMC doesn't integrate those links until much later, long after the actual news release, a year or more. So here are three interesting GUC news releases that are not yet found on the TSMC site:

2024/01/10 :: GUC Taped Out UCIe 32G IP using TSMC's 3nm and CoWoS Technology :: "The chip uses TSMC’s N3P silicon process and CoWoS advanced packaging technology."

This may be related to two other joint press releases:

The first dates to 2023/04/26 :: Cadence Collaborates with GUC on AI, HPC and Networking in Advanced Packaging Technologies

The second dates to 2024/01/10 :: GUC Tapes Out Complex 3D Stacked Die Design on Advanced FinFET Node Using Cadence Integrity 3D-IC Platform

It's probably a safe guess to say the "advanced FinFET node" in question is N3P, given GUC's own news release on the same day.

This could point to big changes in M5 (which is likely to be on N3P) in line with @leman's thinking, just not quite as soon as he had hoped...


For people still interested in the INT8 ANE business, here are some interesting items to read and think about.


First we have

Machine, Think!

Matthijs Hollemans is an independent machine learning consultant, app developer, and author of Machine Learning by Tutorials and Core ML Survival Guide.

https://machinethink.net/blog/mobile-architectures/

which discusses multiple mobile vision networks as implemented on iOS.

Big points are how different the world was just a few years ago (most of these networks run faster on CPU than GPU), and if they can run on ANE they are very fast -- but many cannot, or cannot run well (sometimes HW limitations, but often also SW limitations).

Along with these we have

iOS 11: Machine Learning for everyone

Overview and opinions about the new machine learning APIs announced for iOS 11

A peek inside Core ML

Reverse engineering Core ML and the compute kernels it uses under the hood

An in-depth look at Core ML 3

What is new in Core ML 3, including all the new neural network layer types

Apple machine learning in 2020: What’s new?

A look at what has changed in Apple’s machine learning APIs for iOS and macOS

One reason I bring this up is to show that while things evolve, they evolve on a scale of years, not months; the new shiny will not be in Apple's APIs next year. OTOH what you can also see (though the author is more polite about this than I would be!) is that Apple is evolving the HW and tools WELL. They add new functionality as required (moving from ONNX and TensorFlow to Python and PyTorch as the community moved; adding new layers as required; dropping obsolete functionality so they aren't slowed down by backward compatibility) and they're also aggressively evolving the hard stuff that's useful but not sexy and not obvious -- changing how models are packaged, changing how they are expressed, designing MIL to improve the compiler, adding non-obvious layer types to support things like mixture of models.

This is a lot of reading (the posts are long and sometimes technical) but IMHO well worth it if you want to be informed on this stuff.

With all that in mind, now look at

especially

and

Releases · apple/coremltools

Core ML tools contain supporting tools for Core ML model conversion, editing, and validation. - apple/coremltools

Note that these are the tools that convert an existing model (written in eg PyTorch) into something that can be compiled by Apple's compiler into binaries that run on CPU/GPU/ANE.

Thus, in terms of time, first the HW has to ship. Then the Apple ML Compiler has to add functionality to support the HW. Then internal Apple APIs take advantage of the new compiler functionality. Finally CoreMLTools can define new layers (and new mappings of existing layers) for a particular (eg PyTorch) model.

So essentially CoreMLTools is both long-delayed relative to HW in terms of what's available, but ALSO ahead of existing 3rd party software in terms of what's coming up.

So relevant to us, we care about INT8 functionality. What do we see in the release notes?

As expected (and I'm discussing primarily for ANE) we see that in the early days (like 2020) quantization was supported, no surprise since quantization is part of the ANE hardware. BUT the specifics are what matter.

Quantization at this time is only for WEIGHTS and is lookup of an FP16 value. In other words the primary execution model (certainly for 3rd parties) is W16A16 - FP16 weights multiplying FP16 activations. The weights in memory may be 8 or 4 bits, but they're bounced through a lookup table (in hardware, on ANE) to look up an FP16 value.

It's only in mid-2021 we switch from DEFAULT math in FP32 to FP16... Point is that's the default. Crazy high-end stuff like palletization to reduce your memory footprint is leading edge – Apple's still trying to get people to understand that FP16 (or more precisely BF16) is probably what they should be using, not FP32.

It's only in mid-2022 that they talk a lot about reducing the size of weights. I described the stuff in 2020, but that seems to be maybe mostly internally targeted? Or maybe they had very quick and dirty palletization code, no fancy optimization schemes?

Anyway, mid-2022 is when reducing the size of weights (beyond the obvious FP32->FP16 of 2021) becomes a real focus. So now we have three options (and again these are all for WEIGHTS)

- pruning (replace values close to zero w/ zero; a bitmask says where the skipped zero values are in an array of weights)

- palletization, ie lookup-table quantization (so a 1, 2, 4, 6 or 8-bit index into a table of FP16s)

- linear quantization (so weight of interest is m*x+k where m and k are FP16 constants, and x is an INT8)
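To make the three options concrete, here is a toy sketch in plain Python. It only illustrates the bookkeeping described in the list; the threshold, the palette values and the helper names are all made up for the example and say nothing about how Apple's compiler or the ANE actually implement any of this.

```python
# Toy illustration of the three weight-compression options; not Apple's implementation.
weights = [0.02, -0.75, 0.40, -0.01, 0.90, 0.00, -0.35, 0.10]

# 1. Pruning: zero out small values, store a bitmask plus only the survivors.
THRESHOLD = 0.05  # hypothetical cutoff
mask = [abs(w) >= THRESHOLD for w in weights]
kept = [w for w, keep in zip(weights, mask) if keep]


def unprune(mask, kept):
    """Rebuild the dense array: masked-out slots become 0.0."""
    survivors = iter(kept)
    return [next(survivors) if keep else 0.0 for keep in mask]


# 2. Palletization: each weight becomes a small index into a lookup table (LUT).
lut = [-0.75, -0.25, 0.25, 0.75]  # 2-bit palette, hypothetical values


def palletize(ws, lut):
    """Replace each weight by the index of the nearest LUT entry."""
    return [min(range(len(lut)), key=lambda i: abs(lut[i] - w)) for w in ws]


def depalletize(indices, lut):
    return [lut[i] for i in indices]


# 3. Linear quantization: w ~= m * x + k, with x an INT8 in [-128, 127].
def linear_quantize(ws):
    lo, hi = min(ws), max(ws)
    m = (hi - lo) / 255.0      # scale
    k = lo + 128.0 * m         # offset, chosen so that x = -128 maps to lo
    xs = [max(-128, min(127, round((w - k) / m))) for w in ws]
    return xs, m, k


def linear_dequantize(xs, m, k):
    return [m * x + k for x in xs]


print("pruned      :", unprune(mask, kept))
print("palletized  :", depalletize(palletize(weights, lut), lut))
xs, m, k = linear_quantize(weights)
print("linear INT8 :", xs)
```

Note how the first two schemes need a decode step (mask expansion or table lookup) before any arithmetic, while the third leaves you with plain INT8 values plus two constants.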

What are the consequences?

All three shrink the size of the weights, in flash, in DRAM, and even in ANE L2. They are only decompressed just before the multiply-adds occur (and pruned values are skipped, so no multiply-add for those values).

BUT with linear quantization, even though the weight is larger (8 bits rather than maybe 4 bits or 6 bits for palletization lookup) the weight can be multiplied directly in the FMAC! As long as you have FMAC hardware that can handle a mixed FP16 and INT8 input, which ANE can.

So with linear quantization you have the option now to do the math in INT8 (wrapping up the scaling by m and offset by k into generic layer postprocessing).
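The reason the scale and offset can be pushed into postprocessing is just algebra: with w_i = m*x_i + k, the dot product sum(w_i * a_i) equals m * sum(x_i * a_i) + k * sum(a_i). A small sketch to check that (the function names and numbers are hypothetical, purely for illustration):

```python
# Checks that scaling by m and offsetting by k can be applied once, after the
# multiply-accumulate, instead of per weight. Values are arbitrary examples.
xs = [-128, 0, 127, 5]            # INT8 weights
m, k = 0.01, 0.3                  # FP16-style scale and offset (plain floats here)
acts = [0.5, -1.0, 2.0, 0.25]     # activations


def dot_dequantize_first(xs, m, k, acts):
    """Expand every weight to m*x + k, then multiply-accumulate."""
    return sum((m * x + k) * a for x, a in zip(xs, acts))


def dot_int8_then_fixup(xs, m, k, acts):
    """Multiply-accumulate on the raw INT8 weights, fix up scale/offset once."""
    raw = sum(x * a for x, a in zip(xs, acts))
    return m * raw + k * sum(acts)


print(dot_dequantize_first(xs, m, k, acts))
print(dot_int8_then_fixup(xs, m, k, acts))
```

The two results agree up to floating-point rounding, which is why the inner loop never needs to see m or k.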

This presumably gives you a slight power win in moving the weights around (INT8 rather than FP16)?

But

- activations are still FP16.

- apparently at this stage (2022) you can choose to prune a set of weights, or to palletize/linear quantize them, but not BOTH (even though the ANE HW supports both).

2023 seems to have been (publicly...) mainly about tweaking all of the above.

But get to 2024 (ie now!) and things get interesting. Looking at the notes for coremltools 8.0b1 we see:

First is more of the same. We now have a 3bit palletized lookup.

And we have that the lookup table can produce an INT8 rather than just an FP16. So we can get such power advantages as exist by moving INT8 weights around inside the ANE rather than FP16 weights.

but more interesting is the non-same:

"A new API to support activation quantization using calibration data, which can be used to take a W16A16 Core ML model and produce a W8A8 model"

What does this mean?

I've stressed repeatedly that till now activations have been FP16. In other words the data that flows through the network, although maybe multiplied by INT8s, is FP16. The reason for this is that we know the weights before we run the model, and we can palletize them or linearly quantize them based on that knowledge - weights don't change. But the data that flows into the net (the particular image, or speech, or text) we don't know. So we can't be sure of the data range of the intermediate data that flows through each layer -- we use an FP16 format to be sure that, whether those values are large or small, they "fit".

What coreMLTools 8 gives us is a way to profile our network on "representative" data so that coreMLTools can learn, for each layer, the expected range of input and output values. IF you have this range, THEN you can also represent the activations as m*x+k, where m and k again are FP16, while x is INT8, and you can mostly move the overall scaling and bias of the activation into some cleanup stage (normalization, pooling, activation function, whatever). So this allows you to perform many layers as W8A8 (ie both weights and activations are INT8).
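Here is a minimal sketch of what calibration buys you, in plain Python rather than the actual coremltools API (which I am deliberately not reproducing here). The helper names and the sample data are hypothetical. The idea: observe the activation range on representative inputs, derive m and k from it, then do the multiply-accumulate purely on integers and apply the scale/offset terms once at the end.

```python
# Toy W8A8 dot product driven by a calibration pass. Not the coremltools API.

def calibrate(samples):
    """Return (m, k) so that value ~= m * x + k with x an INT8 in [-128, 127]."""
    lo = min(min(s) for s in samples)
    hi = max(max(s) for s in samples)
    m = (hi - lo) / 255.0 or 1.0   # fall back to 1.0 if the range is degenerate
    k = lo + 128.0 * m
    return m, k


def quantize(values, m, k):
    return [max(-128, min(127, round((v - k) / m))) for v in values]


def w8a8_dot(wq, wm, wk, aq, am, ak):
    """Dot product of (wm*wq + wk) and (am*aq + ak) with integer accumulation."""
    n = len(wq)
    s_wa = sum(w * a for w, a in zip(wq, aq))   # the INT8*INT8 inner loop
    s_w, s_a = sum(wq), sum(aq)                 # cheap integer side sums
    return wm * am * s_wa + wm * ak * s_w + wk * am * s_a + n * wk * ak


# "Representative" activations seen during calibration (made-up numbers).
calibration_runs = [[0.0, 1.2, 3.4], [0.1, 2.2, 5.1]]
am, ak = calibrate(calibration_runs)

weights = [0.3, -0.2, 0.8]
wm, wk = calibrate([weights])      # weights are known ahead of time
wq = quantize(weights, wm, wk)

new_input = [0.5, 4.0, 2.5]        # unseen data, inside the calibrated range
aq = quantize(new_input, am, ak)

exact = sum(w * a for w, a in zip(weights, new_input))
approx = w8a8_dot(wq, wm, wk, aq, am, ak)
print(f"FP reference {exact:.4f}, W8A8 estimate {approx:.4f}")
```

If an input falls outside the calibrated range it simply gets clamped, which is exactly why the calibration data has to be representative.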

So again why bother? This mostly doesn't affect flash or DRAM storage. It probably affects local storage in the ANE L2, so effectively makes the L2 larger, which is nice! But what we all care about is does it affect COMPUTE?

Option one is that the data path from activation storage on the ANE to the FMAC hardware is 16 bits wide. So you could imagine things like loading two INT8 activations, then, over two cycles, performing the first W8A8 multiply-accumulate, then the second one. This saves us some power in moving data to the FMAC hardware, and means that we can avoid the FMAC shifting hardware for the add part of the operation. (The multiply is much the same for FP16*INT8, but the add part for FP16 is a lot messier than the add of an INT8*INT8.) So we get some power savings, and this probably holds for every ANE from A12 on (the first real ANE; the "ANE" on the A11/iPhone X is basically for FaceID and nothing else).

But another possibility opens up. What if we added at some point (say on the A17/M4) a second INT8*INT8 multiplier? So the hardware consists of two (more or less identical) multipliers, both of which can handle INT8*INT8 (and at least one can handle the very similar multiplies of BF16*INT8 and BF16*BF16), one (trivial) int adder, and the messy and complicated FP16 shifter/adder? This would be a way to reconcile all the various points we have heard claimed:

- it gives us double the FMAC throughput for INT8*INT8

- it doesn't cost *that* much in hardware - sure it costs some, but substantially less than doubling the size of the ANE. We get to reuse the FP16 wide activation storage and path into the FMAC, and the existing FMAC hardware, without having to double the messy part of that hardware.

- it explains why Apple shifted to saying the ANE hardware had double the TOPs (while being vague as to what that meant).

But why doesn't anything show up in the benchmarks? This functionality has only been added to the public-facing coreML tools in the last month or so. Although the hardware supported it, no SW could use it. I am guessing that GB6 is basically some sort of PyTorch neural nets that are run through coreMLTools to generate the final Mac/iPhone binaries...

In fact elsewhere in the docs we see

| iOS17 / macOS14 | * Quantization: * activation quantization (W8A8 mode), accelerated on NE for A17 pro/M4 chips |

So this functionality is in some sense available already (but likely only for Apple's networks, until they tested that everything seemed to work OK and moved it to coreML Tools for 3rd party networks)?

One final thing. The docs also refer to 4bit linear quantization available for weights. There is a strange patent,

https://patents.google.com/patent/US20210279557A1

that describes how to fiddle the existing multiplier in the ANE to perform two 4-bit wide multiplies. I didn't know what to make of this, but maybe it's real in some sense, present in hardware (at least for A17/M4)? But, like we've seen with the INT8 story, multiple steps are necessary before you get a visible performance boost from INT4 multiplies... For a year or two we may just see this available as an option that slightly reduces power, or that's used by Apple internal networks before we maybe get a W4A4 model with doubled speed.

There's also an interesting statement in

where they refer to "vector palettization". Maybe I'm misinterpreting this, but to me this looks like what used to be called vector quantization for folks familiar with video compression. Point is, it opens up the possibility of even more extreme quantization. You might think that quantizing say Llama down to an average of ~3.5 bits per weight is about as good as it gets (4 bits per weight for "important" layers, maybe 2 bits for "unimportant" layers). But that assumes you palettize one weight at a time. What if you use a single index to specify the value of two weights? Whether this works well or not depends on the structure of your weights, but it certainly seems plausible that there are cases like this.
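A toy Python sketch of "one index specifies two weights", as I read it. The codebook values and function names are made up for illustration, and this is not how coreMLTools actually stores anything.

```python
# Hypothetical codebook: each entry is a PAIR of weights, so one
# 2-bit index (4 entries) decodes to two weights at once.
codebook = [(0.0, 0.0), (0.5, -0.5), (-1.0, 1.0), (0.25, 0.75)]
indices = [2, 0, 3, 1]  # the compressed weight stream


def depalettize(indices, codebook):
    """Expand each index into the group of weights it stands for."""
    weights = []
    for i in indices:
        weights.extend(codebook[i])
    return weights


def bits_per_weight(codebook):
    """Index bits divided by weights per entry (codebook storage ignored)."""
    index_bits = (len(codebook) - 1).bit_length()
    return index_bits / len(codebook[0])


weights = depalettize(indices, codebook)
```

With four pair entries, each 2-bit index covers two weights, so the stream costs 1 bit per weight where a scalar 4-entry palette would cost 2. Whether the reconstruction is any good depends entirely on how well pairs of real weights cluster.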

And don't forget that a big nVidia win is their use of "structured sparsity" so that half the weights are by definition zero. Apple has so far not been able to use that (either you can exploit sparsity or palettization, but not both) but with coreMLTools 8 that changes. So maybe these large models, already palettized to ~3.5 bits per weight, can shrink by another 2x or so if we toss the zeros? This is a win on the storage side (flash, DRAM, and in ANE L2) and also on the computation side, in that zeros that are explicitly marked as such in the weight stream will be skipped in compute.
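A rough Python sketch of combining the two ideas: a bitmask marks which weights are nonzero, only the nonzero ones get a palette index, and the zeros are skipped in the dot product. The mask-plus-index layout is purely my assumption for illustration; the real weight stream format is not something I know.

```python
def sparse_palettized_dot(mask, indices, lut, activations):
    """Dot product over a sparse, palettized weight stream.

    mask[i] is 1 where weight i is nonzero; indices holds one LUT index
    per nonzero weight, in order. Returns the result and the number of
    multiplies actually performed.
    """
    acc = 0.0
    multiplies = 0
    nonzero = iter(indices)
    for keep, x in zip(mask, activations):
        if not keep:
            continue  # zero weight: no index stored, no multiply issued
        acc += lut[next(nonzero)] * x
        multiplies += 1
    return acc, multiplies


lut = [-1.0, -0.5, 0.5, 1.0]          # hypothetical 2-bit palette
mask = [1, 0, 0, 1, 0, 1, 1, 0]       # half the weights are zero
indices = [3, 0, 2, 1]                # one entry per nonzero weight
activations = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]

acc, multiplies = sparse_palettized_dot(mask, indices, lut, activations)
```

Eight weights, four multiplies: the compute saving tracks the zero fraction directly, while the storage saving also depends on what the mask itself costs.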


> Does anyone think the Media Engine will get an upgrade? I know with the M4 they added AV1 decode, but for video professionals the engine seems relatively unchanged from the M1 Pro.

I believe the M4 is faster at encoding. iPad Pro reviews showed a speed increase.

> Does anyone think the Media Engine will get an upgrade? I know with the M4 they added AV1 decode, but for video professionals the engine seems relatively unchanged from the M1 Pro.

What would you want? My impression is that professionals don't use hardware encoders. Decode is there for everything it's likely to support (there are some formats not supported, but you know they're never going to). What else do you want it to do?

> What would you want? My impression is that professionals don't use hardware encoders. Decode is there for everything it's likely to support (there are some formats not supported, but you know they're never going to). What else do you want it to do?

Not sure who gets to be a professional, but plenty of people making money with fcp/resolve etc are using hardware encoding.

I don't know, but if you're trying to track the movement in advanced packaging, . . .

C.C. Wei’s (seemingly annoyed) response in the earnings transcript made me curious, that’s all. And CoWoS-R just seemed to fit with Apple’s x4 objectives (again), so I got interested again. Nothing using it has hit the market, so not much has been speculated either. A couple recent papers were published in June, one by TSMC . . .

The CoWoS-R technology is currently in production with high assembly yields. We build a large package (97 x 95 mm²) with 4 large SoC and 12 HBMs on a 5.5X CoWoS-R interposer.

. . . which, at the very least, is a promising indication of where the process is at.

> C.C. Wei’s (seemingly annoyed) response in the earnings transcript made me curious, that’s all. And CoWoS-R just seemed to fit with Apple’s x4 objectives (again), so I got interested again. Nothing using it has hit the market, so not much has been speculated either. A couple recent papers were published in June, one by TSMC . . .
>
> . . . which, at the very least, is a promising indication of where the process is at.

Excellent find. Admirable restraint on your part. Easy to leap to conclusions, especially when combined with the two January 10 news releases, which appear to line up with this. Could just be science news, and not directly related to their customers, but the specifics, like you say, are "promising."

We've got three key ingredients: [1] news of N3P tape outs in January and likely risk production in June; [2] news of collaborations with Cadence IP for interposer and 3D designs; [3] TSMC publishing a successful experiment with a quad SoC design using CoWoS-R advanced packaging.

All of this, together, could mean in 2025 we will once again be treated to Apple being a couple of years ahead of everyone else. UltraFusion 2.0, here we come?

What that would mean for M4 Ultra is hard to say. If my equation of TSMC N3P + Apple M5 = 2025 holds true, then all bets are off. It may or may not be easier to say after September/October...


> Excellent find. Admirable restraint on your part. Easy to leap to conclusions, especially when combined with the two January 10 news releases, which appear to line up with this. Could just be science news, and not directly related to their customers, but the specifics, like you say, are "promising."
>
> We've got three key ingredients: [1] news of N3P tape outs in January and likely risk production in June; [2] news of collaborations with Cadence IP for interposer and 3D designs; [3] TSMC publishing a successful experiment with a quad SoC design using CoWoS-R advanced packaging.
>
> All of this, together, could mean in 2025 we will once again be treated to Apple being a couple of years ahead of everyone else. UltraFusion 2.0, here we come?
>
> What that would mean for M4 Ultra is hard to say. If my equation of TSMC N3P + Apple M5 = 2025 holds true, then all bets are off. It may or may not be easier to say after September/October...

If we get new macs in oct they may as well be M5 series? And then M5 Ultra for Studio and Pro next summer?

> If we get new macs in oct they may as well be M5 series? And then M5 Ultra for Studio and Pro next summer?

Yeah exactly, that would be worth the wait for the Ultra and Studio Max & Pro. Give it to the underwhelming stuff to shine for a few months to boost sales like they did with the M4 for iPads.
