Hi there

I recently purchased an MSI 980 Ti for my Mac Pro 4,1, which I flashed to 5,1.

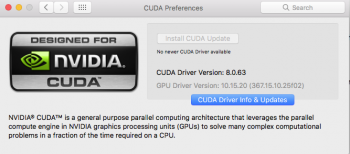

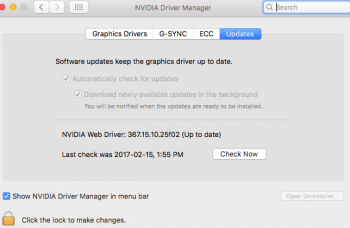

I have the most recent Nvidia web drivers and CUDA installed.

I bought 2x 6-pin to 8-pin adapters (https://tinyurl.com/huewm22) to supply power to the card from the motherboard. It booted up just fine.

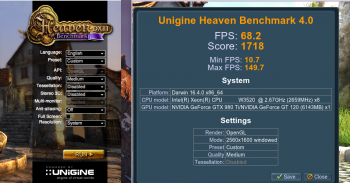

I tried playing a game (Rust) and had horrible FPS. I ran Cinebench R15 and got the same FPS as with my old GTX 760, and Geekbench gave the same scores as well.

Does anyone see a problem with this setup? I'm not sure if I need an external PSU to get the full benefit of the card.

Could it be underpowered?
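To put rough numbers on that, here is a back-of-the-envelope sketch of the power budget. It assumes the nominal PCIe figures (75 W from the slot, 75 W per 6-pin connector) and the 250 W reference TDP for a 980 Ti. My MSI card may draw more than the reference design, so treat every constant as an assumption, not a measurement.

```python
# Rough power budget for the card. All wattages are nominal ratings
# (assumptions), not values measured on this machine.
PCIE_SLOT_W = 75    # nominal limit for a PCIe x16 slot
SIX_PIN_W = 75      # nominal rating of one 6-pin PCIe power connector
CARD_TDP_W = 250    # assumption: reference GTX 980 Ti TDP; MSI's may differ


def power_budget(num_six_pin: int) -> int:
    """Nominal watts available from the slot plus the 6-pin feeds."""
    return PCIE_SLOT_W + num_six_pin * SIX_PIN_W


available = power_budget(2)                 # two 6-pin feeds from the board
shortfall = max(0, CARD_TDP_W - available)  # how far short of the TDP we are

print(f"available: {available} W, card TDP: {CARD_TDP_W} W, shortfall: {shortfall} W")
```

On paper the two adapters leave the card slightly short of its rated draw at full load, although that alone may not explain identical benchmark scores.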

Any help would be greatly appreciated.

Extras:

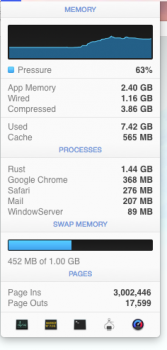

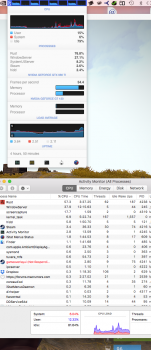

I have attached a screenshot of iStat taken while the GPU is under load (PCI slot 1).

Cinebench: GTX 760 = 47 fps, whereas 980 Ti = 45 fps.

According to https://www.tonymacx86.com/threads/graphics-testing-benchmarking-chart.177227/ I should be getting 99 fps.

As for the Nvidia drivers, I've just kept updating them since the 760. I simply installed the driver plus CUDA and nothing else (not sure if the issue lies somewhere in there). I haven't used Clover for any settings modifications.

I tried the method below and still get 45 fps.
