I’m creating this thread as a place for discussion and observation on Apple Silicon graphics performance with MetalFX Upscaling.

(I’m posting here in the Apple Silicon forum, rather than the Mac & PC Gaming forum, because I believe the discussion, in the long run, is much broader than just how it performs in one or two specific games and has implications beyond gaming.)

As of right now, Resident Evil Village is the only application using MetalFX Upscaling so it will be the point of reference until others arrive.

First a TLDR (as this is a long post!):

MetalFX Upscaling is pretty good for a 1.0 release, but is currently a story of two modes.

Quality Mode looks and runs great, giving a 40~50% performance boost at 4K with very little perceptible difference vs native resolution. It also looks very good at sub 4K resolutions, including both 1440P and 1080P.

Performance Mode, on the other hand, looks quite poor, and although it delivers over 2x the performance of native resolution, it isn't quite ready for prime time. (In Village, Quality Mode at 1521P delivers a higher quality resolve with slightly better performance.)

Now, on to my detailed initial observations:

Test setup:

14” M1 Max MacBook Pro

32 Core GPU

32GB Memory

42” LG C2 OLED

*Note on the Interlaced Mode in Resident Evil Village*

Interlaced mode = Checkerboard Rendering, a temporal reconstruction technique that debuted with the PS4 Pro in 2016 and renders at ~1512P internally when targeting 4K. It's the only temporal upscaling option available on PC ATM.

Resident Evil Village Benchmarking methodology:

Settings:

Preset: None (Custom settings based on Digital Foundry's optimized settings with minor tweaks)

Resolution: 4K

Scaling: 1.0

Mesh Quality: Max

Texture Quality: High (4GB) (tested this setting at various levels; High (8GB) gives performance within 1-2FPS but seems to give less consistent frametimes on my machine)

Shadow Quality: High (Max seems to perform basically the same but I didn't feel like retesting - also should benefit VRAM limited cards like the 3070 a bit)

Volumetric Lighting Quality: Mid (large performance uplift vs higher settings, basically same visuals as confirmed by DF)

SSAO: SSAO (CACAO seems to have an outsized performance hit on M1 Max)

Film Grain: Off

All other effects (Contact Shadows, Subsurface Scattering, Bloom, DoF, etc): On

*This exercise started out as a test to reconfirm DF's findings on settings and see if there were any particular settings that might have an unusually large impact on Mac. Therefore, I did not use a preconfigured settings preset. For those curious, CACAO seems more costly than on PC, while significant performance can be gained by disabling Bloom.

Scenes:

Title screen

Upstairs hallway near railing holding Rose

*Haven’t had time to play farther into the game at the moment, so testing is limited to initial areas (I’ve beaten the game previously on PS5)

Type of upscaling in RE Village: Temporal Antialiased Upscaling

Reasoning:

1. The images don’t show the kind of artifacts/over sharpening you’d expect to see with spatial upscaling.

2. When using Performance Mode on a large high-res screen, you can see the characteristics of the image change in motion in ways that don’t happen at native res. Image stability, both standing still and while in motion, is “variable.”

3. The aliasing in Performance Mode tends to present in a way that, again, suggests it is being temporally reconstructed on the fly (it can flicker when resolving certain types of materials, objects and edges).

4. Reconstruction quality seems to scale to some degree with framerate (Quality Mode at 30~45FPS has flickering artifacts that don’t appear when the framerate is closer to 60).

Upscaling Quality at 4K (hallway & title screen):

Quality Mode: Very impressive visual result almost indistinguishable from native as long as FPS is >50. Significantly higher quality resolve than Interlaced Mode with much less (almost no) artifacting/flicker.

Performance mode: Very poor results even at 4K, delivering noticeably worse image quality than not only Interlaced Mode (let alone native) but also quality mode running at a significantly lower post reconstruction resolution. Honestly speaking, from an image quality standpoint, this just isn’t ready for prime time.

Interlaced mode: A reasonable 4K-ish image that resolves less detail, is more aliased, and is less stable in motion than MetalFX Quality Mode.

Upscaling Performance at 4K (hallway):

Quality Mode: ~44% performance uplift over native resolution

Performance mode: ~2.25x uplift over native resolution

*Quality Mode (1521P) : ~2.35x performance uplift over native resolution (looks better than Performance Mode)

Interlaced Mode: ~38% performance uplift over native resolution (this isn’t using MetalFX it’s just for comparison)
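For anyone who prefers to think in frame times rather than framerates, the uplift figures above can be converted with some quick arithmetic. This is just a small Python sketch: the multipliers are the ones listed above, while the helper name `frame_time_fraction` is made up for illustration.

```python
# Framerate multipliers reported above for the hallway scene at 4K
# (native resolution = 1.0).
uplift = {
    "Quality Mode": 1.44,
    "Performance Mode": 2.25,
    "Quality Mode (1521P)": 2.35,
    "Interlaced Mode": 1.38,
}


def frame_time_fraction(speedup: float) -> float:
    """Fraction of the native frame time that remains after a given speedup."""
    return 1.0 / speedup


for mode, speedup in uplift.items():
    saved_pct = (1.0 - frame_time_fraction(speedup)) * 100
    print(f"{mode}: {speedup:.2f}x framerate -> {saved_pct:.0f}% less time per frame")
```

For example, a ~44% framerate uplift works out to roughly 31% less time spent on each frame.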

Initial thoughts on MetalFX Upscaling & internal resolution speculation:

Quality Mode: Incredibly impressive, delivering near native image quality while improving performance by >40% with very little, if any, perceptible loss of detail or artifacting from what I have seen so far.

Performance Mode: Image quality is a bit of a dumpster fire at the moment, and thus I really don't feel this is ready for prime time, although the results are tolerable on a smaller screen.

Internal Resolutions: I’m not Digital Foundry, but from testing various resolutions and comparing performance to MetalFX Upscaling, I'd guess that at 4K, Quality Mode renders internally at ~1440P-1521P while Performance Mode could be ~1080P (considering the frame time cost of upscaling).

Honestly speaking, Performance Mode really needs more work, as upscaling from quarter res is much more helpful from a performance standpoint, and DLSS, FSR 2.1, and XeSS all deliver much more convincing quarter res (1080P -> 4K) reconstruction (in other games).
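To put the resolution guesses above in perspective, here is a rough Python sketch of how many pixels each internal resolution shades relative to a 4K target. It assumes a 16:9 aspect ratio throughout, and the internal resolutions are only my guesses from above, not confirmed figures; the `pixels` helper is purely illustrative.

```python
def pixels(height: int, aspect: tuple[int, int] = (16, 9)) -> int:
    """Pixel count for a given vertical resolution, assuming a 16:9 frame."""
    width = height * aspect[0] // aspect[1]
    return width * height


target = pixels(2160)  # 3840 x 2160

# Guessed internal render heights (assumptions, not measured values).
for height in (1080, 1440, 1521):
    share = pixels(height) / target
    print(f"{height}P: {pixels(height):,} pixels, {share:.1%} of 4K")
```

Under those assumptions, 1080P is exactly a quarter of the 4K pixel count, 1440P is about 44%, and 1521P is about 50%, which is why a good quarter res mode matters so much for performance.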

At this point it'd be better if they just enabled the resolution scaler for Quality Mode (like it is for normal and interlaced mode) so that you could have the UI render at native res, while the graphics are rendered at X% of the target output resolution.

MetalFX Upscaling Tentative Conclusion:

Quality Mode is extremely impressive and, from what I've seen so far, competes well with other prominent upscaling tech (DLSS 2.x, FSR 2.x, XeSS, etc), although I imagine closer examination may reveal aspects where it's still very "1.0." Performance Mode, however, feels more like a beta that just needs more time in the oven (unless RE Village just has a bad implementation). Hopefully it will get better in time, but right now it can't hold a candle to other reconstruction techniques.

Overall, despite the Performance Mode stumbles, the tech is incredibly impressive, especially for a 1.0 release (from Apple no less), and really opens the door for all Apple Silicon Macs (not just M1 Pro/Max) to have a long, bright future ahead for graphics/gaming.

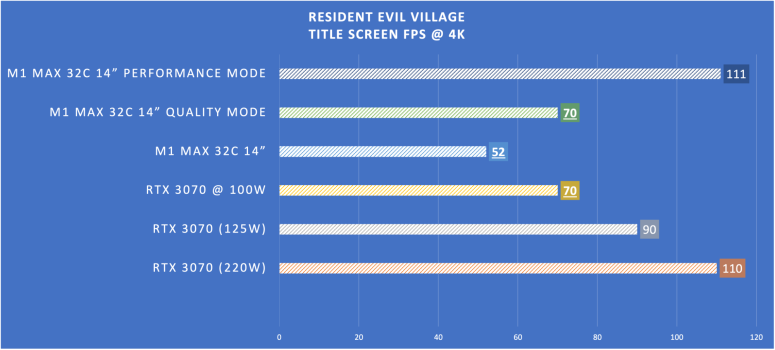

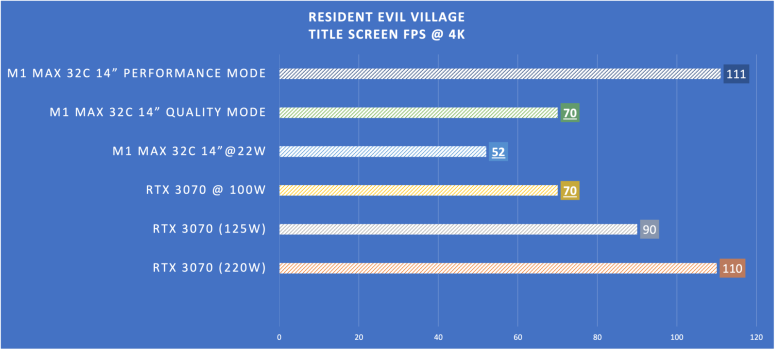

Cross platform comparison:

Comparison Platforms:

Windows PC: R9 3900X with RTX 3070

(*RTX 3070 @ 45% power (100W) is the lowest my GPU can go. This should be somewhat similar to the fastest RTX 3070 Laptop edition, although those ALSO have ~15% fewer CUDA cores on top of being power limited, so they're likely a bit slower. @125W it should be similar to how a lot of 3080 Laptops perform.)

PlayStation 5 (I may add this later if time permits, but TLDR should be a locked Interlaced 4K60 without RT)

Important Observations about 14" M1 Max Power/Thermal Throttling in Resident Evil Village:

The GPU is basically 100% loaded here all the time. Running at high resolutions causes the 32 core M1 Max in the 14" MBP to throttle significantly under load. While the rated clockspeed is 1292MHz, I've seen it briefly dip as low as 850MHz during actual gameplay at 4K, with it normally hovering around 950~1075MHz. It is quite likely that the 16" MBP and Mac Studio variants of the M1 Max could be 20% faster or more (although I have no way to test).

Of note, the GPU starts out fast and then slows down as it hits 99°C and becomes heatsoaked. Performance then drops until the temperature stabilizes around 88°C. (I'd recommend a custom fan curve here.)

Interestingly, decreasing the internal rendering resolution results in noticeably higher GPU clockspeeds.

On the title screen for example:

Native 4K: ~1000MHz - 1050MHz

Quality Mode 4K: 1050MHz - 1150MHz

Quality Mode 1440P: 1125MHz - 1225MHz

Quality Mode 1080P: 1225MHz - 1292MHz

This is another reason it's REALLY important for Apple to improve Performance Mode. It could dramatically increase the performance of more power / thermally constrained Macs.
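The clock ranges listed above can be turned into a rough estimate of how much clockspeed is being left on the table in each mode. A minimal Python sketch follows, using the midpoint of each observed range against the rated clock. Note the big assumption: it treats performance as scaling linearly with GPU clock, which is a simplification (memory bandwidth and other bottlenecks don't scale with it). The `headroom` helper is just for illustration.

```python
RATED_MHZ = 1292  # rated GPU clock quoted above

# Title screen clock ranges observed above, in MHz (low, high).
observed = {
    "Native 4K": (1000, 1050),
    "Quality Mode 4K": (1050, 1150),
    "Quality Mode 1440P": (1125, 1225),
    "Quality Mode 1080P": (1225, 1292),
}


def headroom(low: float, high: float, rated: float = RATED_MHZ) -> float:
    """How far the rated clock sits above the midpoint of an observed range."""
    midpoint = (low + high) / 2
    return rated / midpoint - 1.0


for mode, (low, high) in observed.items():
    print(f"{mode}: rated clock is {headroom(low, high):.1%} above the observed midpoint")
```

With these numbers, the rated clock is roughly 26% above the midpoint of the native 4K range, versus about 3% for Quality Mode at 1080P, which lines up with the guess that a better cooled M1 Max could be 20% or more faster at 4K.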

Thoughts on Apple's initial positioning of the M1 Max:

During the initial reveal of the M1 Max, Apple compared the GPU to the 3060 Laptop Edition, 3070 Laptop Edition, and 3080 Laptop Edition. It's important to note these ARE NOT the same as the desktop cards. The 3070 Laptop Edition is much slower than the desktop 3070, while the 3080 Laptop is still slower than a desktop 3070 (220W), albeit much closer at >125W and with more CUDA cores.

Assuming the M1 Max in the 16" MBP and Mac Studio is indeed ~20% faster, it is entirely possible that the M1 Max can, with appropriate cooling & power, manage to come close to or match a 3070 Laptop Edition in this game. In that case I think it's fair to say that performance is in line with the middle of the expectations (3070 Laptop) Apple set for the 16" MBP and Mac Studio, and likely similar to or slightly faster than the lower end of expectations (3060 Laptop) for the 14" MBP.

However, it's also possible this game will not run much faster on the 16" MBP / Mac Studio due to being limited by the TLB (translation lookaside buffer), the tile-based rendering architecture of the Apple Silicon GPU, some other aspect of the Apple Silicon GPU, drivers, or just being plain slower in this particular game, so more data is needed.

In summary, if performance does scale up with the 16" MBP and Mac Studio, I'd call it a very good result (~3070 Laptop equivalent) vs expectations set, whereas if it doesn't it's still an acceptable result, but at the lower end of the expectations Apple set and honestly a bit disappointing.

The comparison with the 3080 Laptop, however, is unlikely to hold up very well in this game without using MetalFX Upscaling.

Nonetheless, particularly when making use of MetalFX Upscaling, all configurations of the M1 Max become very competitive against their PC counterparts.

Furthermore, the Mac can do all of this at just 22W total system power, so gaming on the go is actually viable on battery. You can even get a nice looking 30 or even 60FPS experience using Low Power Mode at only 10W(!)
