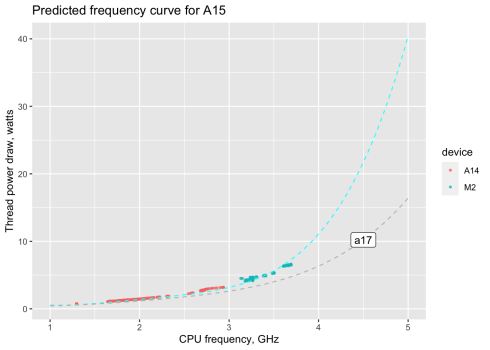

And here is a fitted prediction curve for the A17 (using a fourth-degree polynomial; going higher doesn't improve the fit):

View attachment 2282345

Take this with a grain of salt, obviously, but given how good the fit is, I'd say we have even more evidence that the A17 is designed with desktop use in mind. I think 4.5 GHz at 10 watts should at least be achievable, and that would give a desktop M3 a GB6 single-core score of > 3600.
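For anyone who wants to try this kind of fit on their own numbers, here is a rough sketch in Python with NumPy. It assumes power is modeled as a polynomial in frequency, which may not match how the curve above was produced. The data points come from a made-up placeholder curve plus noise and are not A17 measurements. The names (`freq_ghz`, `power_w`, `rms_residual`) are just for illustration.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Made-up placeholder curve, NOT A17 measurements: power (W) vs. frequency (GHz).
rng = np.random.default_rng(0)
freq_ghz = np.linspace(1.0, 3.78, 12)
true_curve = Polynomial([0.05, 0.1, 0.05, 0.0, 0.02])  # coefficients, lowest degree first
power_w = true_curve(freq_ghz) + rng.normal(0.0, 0.01, freq_ghz.size)


def rms_residual(model, x, y):
    """Root-mean-square error of the fitted model on the sample points."""
    return float(np.sqrt(np.mean((model(x) - y) ** 2)))


# Least-squares fits of degree 4 and degree 5 to the same points.
fit4 = Polynomial.fit(freq_ghz, power_w, deg=4)
fit5 = Polynomial.fit(freq_ghz, power_w, deg=5)

rms4 = rms_residual(fit4, freq_ghz, power_w)
rms5 = rms_residual(fit5, freq_ghz, power_w)
print(f"RMS residual, degree 4: {rms4:.4f} W")
print(f"RMS residual, degree 5: {rms5:.4f} W")

# Extrapolate beyond the sampled range; this is the shaky part of any such fit.
predicted_w = float(fit4(4.5))
print(f"Predicted power at 4.5 GHz: {predicted_w:.2f} W")
```

If the degree 5 residual is barely lower than the degree 4 one, the extra term is mostly fitting noise. Keep in mind that polynomials can swing wildly outside the sampled range, so the extrapolated value deserves the same grain of salt.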

P.S. Looking at this graph, I can't help but wonder whether there is some intent behind some of the crucial points. Is it a coincidence that the A17 Pro is clocked to peak exactly at 5 GHz, or that the curve hits 4.5 GHz exactly at 10 watts and 5 GHz at approx. 15 watts? These are all important psychological points.