@PowerMac G4 MDD hey, is your card new or used? Did you flash it with a new BIOS? Under Windows, is it crashing with the default Wattman settings?

Are you watching it in Windows with Wattman (or a similar app) to see why it crashes: heat, power, or stability?

It would be really odd if it's a new card and it's crashing with no changes made to it.

@h9826790 have you modded the power limits of the card in a new way? Thanks for the GPU monitor thing, I'll try it now.

Oh, and if anyone who has not used liquid metal before tries it, be very careful; it's not like normal thermal compounds.

It has much better heat transfer, but it is also electrically conductive and attacks some metals (aluminium in particular), so you have to make sure not to spill any. Follow the instructions carefully and maybe watch a good video to see how it's done.

If you're new to it, a good branded thermal paste that is not conductive is fairly safe (and may well be an upgrade over the factory paste); as long as you don't use too little, you're fine.

Either way, it will void the Sapphire warranty (at least in the UK/EU).

@calmasacow look at the first two pages of this topic; they have all you need. But don't forget that not all cards are the same: my card has a low ASIC quality, so it doesn't perform as well as some. It was also used to mine crypto, and I suspect the memory controller may be a tad sad from that or something.

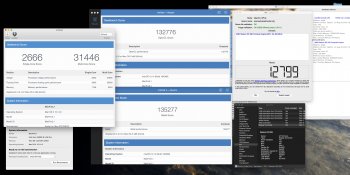

For a high LuxMark score, just use the one-click memory timing button and you're done; that will lift your score.

For low fan noise, drop the clock down to something close to 1300 MHz.

Voltage seems to depend on ASIC quality: with better quality the card looks like it automatically uses a lower voltage (I'm not 100% sure, but I think so, at least up to a point), while low ASIC quality means higher voltage (that's my card).

Edit: thanks h98, that script, with its self-updating, is super easy compared to using Terminal.
