So what are the firepro d300's?

- Thread starter Zellio

It's absolutely down to the drivers!

When you're getting down to professional software running in a business environment, it's all about getting the optimised driver for your key software. This is part of the uplift cost for the pro card: the back-office work to certify and optimise. Not something I would expect a soft modder to take into account. Take a look at this site and the driver options that AMD offers for the sheer amount of software. I know it's CAD/Win related, but it's just an example. Accuracy of the model in the modelling environment is also key, not the frame rate that rules in the game/benchmark world. I'm not sure how this will fit with OSX drivers and software requirements, as I have no experience with this.

http://support.amd.com/en-us/download/workstation/certified#k=#s=101

The lower link is also quite interesting for the power issues in the W cards. Look at the table at the bottom of the second page. Again, I know we don't yet know how or if these relate to the D series, or whether they are 7xxx series mods as per the Tom's Hardware discovery in a hackintosh. Have we discovered any more on whether it's a 450 W PSU across the range, or whether it's staggered depending on expected system loading?

And I'm not supping any sort of Kool-Aid!

http://www.fireprographics.com/resources/AMD_FirePro_Solidworks_BenchMarking_Sheet_A4.pdf

PS - just noticed the max res on the W series in the above table. Is this an indicator of the genesis of the D series cards?

I'm guessing what you're saying is: If the only difference between the W9000 and 7970 is drivers, then someone would've soft-modded the 7970 to run at W9000 speeds.

The fact that the above has not happened yet doesn't prove anything. The W9000 runs identically--and I mean +/- one FPS--to the 7970 in gaming. Clearly the W9000 performs multiples better in professional tasks, but if there were substantive differences in the hardware itself, wouldn't the gaming benchmarks differ? ATI was thoroughly embarrassed when their previous models were soft-modded and performed at the same level as their pro cards. What's more likely: They changed the way they make the cards or that they just got smarter at preventing soft-modding?

As we saw with Glide, CUDA, and [soon] Mantle, software designed to take advantage of different aspects of the hardware can make things run multiples better. Even apps ported from CUDA to OpenGL perform ridiculously better on Nvidia than on AMD. This is all software.

I am highly skeptical of the real differences between the hardware; nothing I've seen posted here and elsewhere does anything to change my mind. This could easily all be smoke and mirrors done with drivers, and by the way: they've done it before.

CUDA 6 released. OpenCL falls even farther behind. Not that it matters much here. NO CUDA FOR YOU!

http://www.anandtech.com/show/7515/nvidia-announces-cuda-6-unified-memory-for-cuda

Based on that article, CUDA 6 seems to be mostly about developer convenience rather than improving performance.

True but developer convenience is not unimportant. They are laying the groundwork for the Maxwell chips which do this in hardware. A big weak spot in OpenCL is memory management and this isn't going to help.

True but developer convenience is not unimportant.

agree.. in fact, that's the main reason (as far as i understand) many developers have chosen cuda over openCL up to this point.

the cuda API is more robust and/or easier for a developer to get working in their applications.. it's not that cuda is inherently better/faster/more beneficial than openCL.

looking into the future a bit, it's not unlikely that these two languages will eventually merge or that nvidia will contribute instead to the open source language.. it seems going that route will actually pay off better for them instead of continuing to push a proprietary language which other major software/hardware players refuse to support.

[G5]Hydra said: Just out of curiosity, what about a GPU determines how the OS decides what it is under OSX? The device ID, chipset specifically?

Also the D300 is an R9 270X and the D700 is an R9 280X (7970)? What is the D500? An R9 280 with 512 Stream Processors disabled? It has the 384-bit memory bus etc.

I would also like to know. It will be interesting to see how much Apple will try to charge for the D700 BTO, considering the R9 280X prices. I'll be annoyed if they are having a laugh.

CUDA 6 released. OpenCL falls even farther behind. Not that it matters much here. NO CUDA FOR YOU!

http://www.anandtech.com/show/7515/nvidia-announces-cuda-6-unified-memory-for-cuda

Four months before this, AnandTech had another article...

"... The biggest addition here is that OpenCL 2.0 introduces support for shared virtual memory, the basis of exploiting GPU/CPU integrated processors. Kernels will be able to share complex data, including memory pointers, executing and using data without the need to explicitly transfer it from host to device and vice versa. ... "

http://www.anandtech.com/show/7161/...pengl-44-opencl-20-opencl-12-spir-announced/3

Some AMD GPUs (and APUs) already have the foundation to make this operational. That is exactly why a "one vendor only" solution is both limited and not particularly novel.

OpenCL 2.0 will be released a bit later, but it won't pose porting and availability problems either.

Aren't the new R9 parts just 7xxx series GPUs binned for higher clocks?

The nMP is likely using the same binned R9 silicon, but based on rated TFlop performance, the nMP GPUs are definitely running at lower core clocks than their 7xxx and R9 counterparts (and similar memory clocks to the 7xxx series).

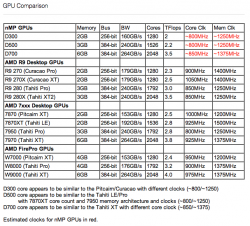

Here's a table comparing all the relevant AMD desktop GPUs with the nMP offerings (with my best guess for clocks in red):
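For anyone who wants to check the clock guesses, here is a rough sketch of the arithmetic behind "based on rated TFlop performance". It assumes the usual peak figure of 2 floating-point operations (one fused multiply-add) per stream processor per cycle. The TFLOPS and stream processor counts in it are assumptions taken from my recollection of Apple's published spec page, not from this thread, so double-check them before relying on the result. The function name is just for illustration.

```python
# Peak single-precision FLOPS = 2 (one fused multiply-add per cycle)
#                               x stream processors x core clock
# so the implied core clock = FLOPS / (2 x stream processors).

def implied_core_clock_mhz(tflops: float, stream_processors: int) -> float:
    """Core clock in MHz implied by a rated peak single-precision TFLOPS figure."""
    flops = tflops * 1e12
    return flops / (2 * stream_processors) / 1e6


# ASSUMPTION: (rated TFLOPS, stream processors) as recalled from Apple's
# spec page for the nMP options. These numbers are not from this thread.
NMP_GPUS = {
    "D300": (2.0, 1280),
    "D500": (2.2, 1526),
    "D700": (3.5, 2048),
}

for name, (tflops, sps) in NMP_GPUS.items():
    print(f"{name}: ~{implied_core_clock_mhz(tflops, sps):.0f} MHz")
```

If those inputs are right, the implied core clocks come out at roughly 781, 721 and 854 MHz for the D300, D500 and D700 respectively.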

Aren't the new R9 parts just 7xxx series GPUs binned for higher clocks?

Technically, no. A subset, but there are new variants that have the Audio DSP in them. Additionally, the Hawaii parts are a new graphics configuration. For the rest, the graphics pipeline doesn't have major or minor changes, but most of the "X" models aren't the same. Likewise, the "X" models with the audio subset clipped off are technically different from old models that didn't have it at all.

Parts of the layout are likely tweaked slightly also to help with speed bumps, but it is not a redesign or major upgrade. (Again, the Hawaii gets improved PowerTune management, but the others, from reports, are still the same.)

The nMP is likely based on R9 silicon,

maybe, maybe not. There has been no movement on the other FirePro cards to R9 variants.

but based on rated TFlop performance, the nMP GPUs are definitely running at lower core clocks

Variants tweaked for a higher clock bump are an odd-duck choice for a deployment that is slower than either one. Unless Apple is looking to "uncork" the Audio DSP later with OS X 10.10 or later, the R9 variants aren't buying much.

I believe you're incorrect… the R9 270/280 cores are just binned or slight mods to previous Pitcairn/Tahiti chips. Only the 290x is Hawaii (which includes the audio features) and it's definitely not the basis for any of the nMP parts so I didn't include it in the table.

The 290x is the R9 290x. You assigned "just binned" to the whole R9 series. But yes currently in the rest of the R9 series TrueAudio isn't present as a feature 'X' or not. [ It is down in R7 260X where it actively shows back up. ]

TrueAudio has been buried before though. When Bonaire, the basis for 260X, first showed up it wasn't a named feature.
