Some Benchmarks Seem Totally Bogus!

- Thread starter Tesselator

Late to the party, but better late than never. In my 1,1:

Uni seems to be CPU bound, as you've all said.

Mine finished Furry in native res without issues. But I have seen with my own eyes how the GF110 power limiter works.

It has beaten your 8800 at least, Tesselator

How are you powering your 570? I use non-standard PCIe power cables (about 15 AWG).

Yeah, looks close enough. I guess folks can see what I mean about being able to turn on more stuff but getting the same FPS and score, just by comparing yours and mine.

I'm powering mine from the motherboard's dual mini-6-pin connectors, but I'm kinda wondering if I should use an aux PSU anyway. I dunno if it's a bug in FurMark 0.2.1 and 0.3.0 or not (I guess not), but at 1920x1080 with AA turned off I can only run the test for about 15 to 20 seconds before the system powers off completely with a click. I'm assuming the power overdraw trips some kind of protection relay that shuts everything off. With AA turned on, it slows things down enough that it doesn't overdraw and the test completes fine. <shrug> 'Tis a mystery...

Also, I'm not sure what a "GF110 power limiter" is.

Tesselator, I have posted about this elsewhere. I can shut off my 3,1 like a LIGHT by running FurMark with a 7970, a GTX 580, or even a GTX 570. The PC GPU drivers were written to detect this app and keep this from happening, but OS X contains no such protection. My 4,1 can take much more punishment.

Would be interesting to get the paid version of Hardware Monitor and see EXACTLY how many watts it takes to engage the "Big Brother" feature.

Oh, that's interesting! No, I didn't know that. Thanks for the info bro!

And then I ran FurMark with 8X MSAA and it only consumed about 60% (a little over half) of what PixMark Volplosion shows. It locked up on me afterwards, so all I have is this interim grab. Of course, if I were to run it without 8X MSAA it would switch off the machine, which, by the way, the 8800 didn't (for those wondering). Also note that I believe there are no "PCI E" sensor points on the MP1,1 and 1,2, so you have to subtract the obvious idle reference value shown between the four tests.

It's interesting that the FurMark test both took so little power and also caused a lock-up after escaping the window.

Uni seems to be CPU bound, as you've all said.

How do you differentiate between a CPU bound and a PCIe-version bound in this case? When I run the Heaven benchmark on my computer (a 2008 one), it never puts the CPU near its limit. I would rather assume that the pre-2008 Mac Pros are limited by their PCIe version (being 1.0 instead of 2.0, if I have understood it correctly).
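For reference, here is a minimal sketch of the theoretical link bandwidth behind the 1.0 versus 2.0 question. It uses only the published per-lane line rates and the 8b/10b encoding that both generations share. The function name and the x16 default are illustrative assumptions, and real-world throughput will be lower than these ceilings.

```python
# Theoretical one-way PCIe bandwidth from the published line rates.
# PCIe 1.x and 2.0 both use 8b/10b encoding (8 payload bits per 10 line bits).
GT_PER_S = {"1.x": 2.5, "2.0": 5.0}  # giga-transfers per second, per lane


def pcie_bandwidth_mb_s(version: str, lanes: int = 16) -> float:
    """Return the theoretical one-way bandwidth in MB/s for a PCIe 1.x/2.0 link."""
    line_rate_bits = GT_PER_S[version] * 1e9   # raw bits per second, per lane
    payload_bits = line_rate_bits * 8 / 10     # remove the 8b/10b overhead
    return payload_bits / 8 / 1e6 * lanes      # bits -> bytes -> MB/s, all lanes


for version in GT_PER_S:
    print(f"PCIe {version} x16: {pcie_bandwidth_mb_s(version):.0f} MB/s")
```

A benchmark that keeps its assets resident in video memory may never come close to either ceiling, which is one reason the bus version alone does not settle the question.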

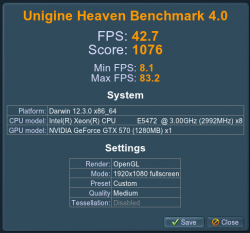

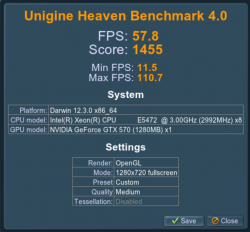

Compare 4.0 scores between comparable machines:

Tesselator's 1,1 2.66 GHz (post #1), GermanyChris 1,1 2.33 GHz (post #17), me 1,1 3.0 GHz (this post).

As you can see, the score increases quite linearly with CPU frequency.

Here is my 4.0 score:

PCIe bandwidth matters too, no doubt, but a comparison between similar machines is more meaningful.
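One way to check the "quite linearly" claim is to divide each score by its clock speed and see how far the ratios drift apart. The sketch below is only an illustration: the clock speeds are the ones quoted in this post, but the scores are synthetic placeholders, because the real numbers exist only in the screenshots. The helper names are hypothetical.

```python
def score_per_ghz(results):
    """Map each machine label to its score divided by its clock speed in GHz."""
    return {label: score / ghz for label, (ghz, score) in results.items()}


def relative_spread(values):
    """(max - min) / mean; a value near 0 means the score scales with the clock."""
    vals = list(values)
    mean = sum(vals) / len(vals)
    return (max(vals) - min(vals)) / mean


# Clock speeds come from the thread. The scores are PLACEHOLDERS chosen to be
# exactly proportional to the clock; they are NOT the posted results.
placeholder_results = {
    "1,1 @ 2.33 GHz": (2.33, 233.0),
    "1,1 @ 2.66 GHz": (2.66, 266.0),
    "1,1 @ 3.00 GHz": (3.00, 300.0),
}

ratios = score_per_ghz(placeholder_results)
print(ratios)
print("relative spread:", relative_spread(ratios.values()))
```

With the real scores substituted in, a small spread would support the CPU-bound reading, while a large one would point to some other limit.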

Ah, thanks for showing me what I already should have read from the images. But yes, for Unigine the processor speed seems to matter for the 1,1 at least.

Right, totally. Being CPU bound in this case might actually imply that it's limited by the CPU code rather than the CPU itself. To oversimplify with an example, imagine program code that spent time on the CPU between every frame update on the GPU. Even if the CPU code were only using 2% of a single core to execute, the frame rates for the OpenGL updates would get trashed. And as a result of getting trashed in specifically that way, it would render the application useless as a benchmark for anything to do with GPU testing. This is what I meant to imply by using "bogus" in the thread title.

It's of course not as simple as in my example but IMO the Unigine engine is extremely poor in design - for whatever reasons.
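A toy model of the oversimplified example above, assuming each frame costs a fixed amount of CPU time and a fixed amount of GPU time. The function names and the millisecond figures are hypothetical, and this is not a description of how the Unigine engine actually schedules its work.

```python
def fps_serialized(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when the CPU and GPU work for each frame run back to back."""
    return 1000.0 / (cpu_ms + gpu_ms)


def fps_overlapped(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when the CPU prepares the next frame while the GPU draws."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical per-frame costs in milliseconds (illustrative, not measured).
cpu_ms = 20.0
for gpu_ms in (10.0, 5.0):  # the second value models a GPU twice as fast
    print(
        f"gpu={gpu_ms} ms  "
        f"serialized={fps_serialized(cpu_ms, gpu_ms):.1f} fps  "
        f"overlapped={fps_overlapped(cpu_ms, gpu_ms):.1f} fps"
    )
```

In both variants, once the CPU portion dominates the frame, halving the GPU time moves the frame rate only a little or not at all, which is the behaviour being complained about in the thread title.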

Ah, thanks for showing me what I already should have read from the images. But yes, for Unigine the processor speed seems to matter for the 1,1 at least.

I would think this would hold true for all the iterations of Mac Pros, whether 1,1 or 5,1.

The examples given here have both the 1,1 and the 570 in common. I would think that if the model were a 5,1 and the graphics card a 570, with three differing CPU speeds, you would still see the pattern. Even if the PCIe speeds were higher, it's still comparing the same Mac Pro models.

For what it's Worth.....

Around 30 years ago I worked for McDonnell Douglas and was in charge of many Macintosh computers. I remember pulling a NIC card from one machine and installing it in another. The network speed slowed to a crawl. I complained to one of our IT guys (an IBM contractor) and he basically said, "Duhhhhhh. Why do you think that is?" I said, "I have no idea." He said that because the machine I moved the card to had a much slower processor, it was limiting the speed of the NIC card. That was a revelation to me.....

I of course get what you're saying but then the Uni people should be slating this as a CPU test and not a GPU test. And actually in this case they should slate it as a "How crappy unbalanced code works on your computer" test.

Tesselator..... You have a good Night...... =)

Hehe... Thx bro. Even though it's only 1:30 in the afternoon here.

BTW, I noticed your location... I used to live near Bushard and Atlanta. Peace!

OMG.....

I owned a house at Bushard and Adams.........

Now I'm at 8th & Walnut. By the pier. =) Peace +

Awesome! By the Pier is better. I lived over Jacks for a bit in the late 70's - Grew up in Seal, 6th & Central.

Getting back to the benchmarking question, a couple of observations.

First, the Heaven benchmark is meant for Mountain Lion only under OS X. I don't know how much difference this might make but it could be very significant. If you're looking at the scores in Lion, then perhaps it would be more appropriate for everyone to run it under Windows?

Secondly, when I ran it under Windows, I noticed that the details in the scenes are far superior to those in OS X. The cobbles and brickwork; the ropes, conduits and screws on the gun carriages are all an order of magnitude more complex. It makes comparisons across operating systems very misleading since the OS X benchmark would seem to be a comparatively easy task.

My Mac Pro is a 4,1/5,1 hybrid with a 3.33 GHz hex, 24 GB of RAM and a GTX 680.

Here is the result of the extreme preset (1600 x 900, windowed, 8 x AA) under 10.8.3:

Under Windows 7 64-bit, using the same preset with the OpenGL renderer, I get this:

And with the DX11 renderer, here's the result:

I would guess that another reason for the drop in performance is that Windows 7 runs flashed cards at PCIe 1.1 speeds but it's apparent from the frame rates that the Windows version of the benchmark is giving the graphics card a much tougher job to do. If this is the case, then differences between the hardware that is feeding data to the cards should make less difference than the benchmarks under OS X.

*edit* It's been pointed out that the lack of tessellation in OS X's implementation of OpenGL is the real reason.

Are any of you guys with 2,1 and 3,1 Mac Pros able to check this out under Windows?

Your images are not showing

Secondly, when I ran it under Windows, I noticed that the details in the scenes are far superior to those in OS X. The cobbles and brickwork; the ropes, conduits and screws on the gun carriages are all an order of magnitude more complex. It makes comparisons across operating systems very misleading since the OS X benchmark would seem to be a comparatively easy task.

It's called "tessellation". Windows supports DX11 and OpenGL 4, which enable tessellation, while Mac OS X is stuck on OpenGL 3.2, which does not have tessellation shaders.

But yes, it does kind of invalidate comparisons between the two OSes, unless you force DX11/GL4 off on the Windows side.

Does this make Tesselator a very detailed and shady character?

Thanks. Should be sorted now. Post is a bit pointless without them...

cal6n said: I would guess that another reason for the drop in performance is that Windows 7 runs flashed cards at PCIe 1.1 speeds but it's apparent from the frame rates that the Windows version of the benchmark is giving the graphics card a much tougher job to do. If this is the case, then differences between the hardware that is feeding data to the cards should make less difference than the benchmarks under OS X.

Properly flashed cards run at 2.0 in Windows. It is unflashed cards that run at the slower speed in Windows. And this only affects 3,1 and later Mac Pros.

For a comparison, do you have Windows-based Heaven benchmark scores for any of your flashed cards?

*edit* MacVidCards is correct. It's running at 2.0 speeds.
