The absence of Bootcamp is preventing me from switching to M1 or M2... Will there ever be a solution?

- Thread starter Aedant

I actually understand that distinction, but really thought Apple had disabled that. I haven't seen it discussed anywhere, and I don't do any development on macOS (or ARM, for that matter).

Yeah, it’s not widely known. Apple uses some very obscure APIs and concepts (local descriptor tables) from even before modern 32-bit x86 computing for this.

Actually that first sentence is wrong: it emulates x64 Mac software, not x86 (32-bit) software. Windows on Arm emulates both x86 and x64 Windows software, which makes it quite a bit more complex.

Fine, I should have said x64. Same difference, though. Windows software is x64 code, just like Mac software emulated with Rosetta 2.

If you want to get really technical, the underlying instruction set is still x86, which is why 64-bit was initially known as x86-64. Now we have AMD64 and EM64T, but the instruction set is still x86; 64-bit is just an extension of it. Likewise, Apple Silicon's underlying instruction set and design is Arm, but Apple is free to add cores and engines and build on that instruction set.

It depends on what you need. If you're looking for bare-metal Apple Silicon support from Microsoft, you'll be waiting a long time. Insert mythological example of a long time here.

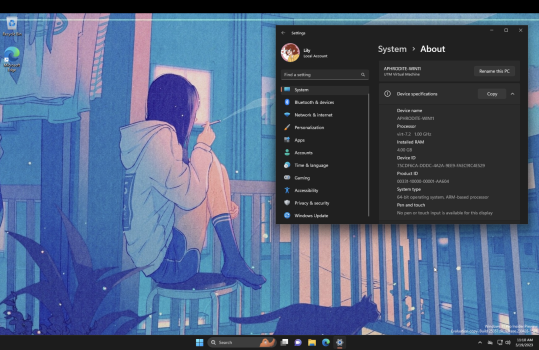

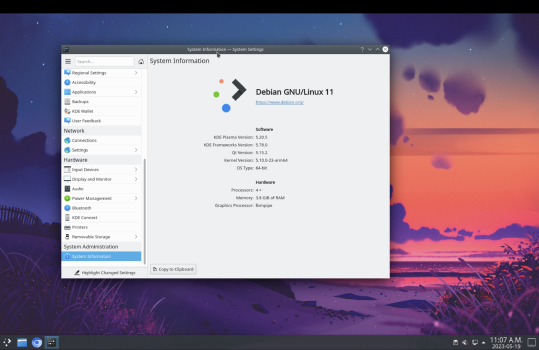

I run both Debian 11 and Windows 11 on my M2 Air using UTM. If you try to run an x86 version of Windows or Linux, performance suffers because of the real-time translation that has to occur. It is cool that it's even possible to emulate x86-64 hardware with UTM, however slow it is. That said, Debian 11 and the Windows 11 Insider Preview both have ARM64 versions, and both run at near-native speeds on this machine, especially Debian. If I need to do anything that isn't possible in macOS, I've got two other toolsets.

These aren't gaming-system-replacement VMs, though. The closest thing to Windows gaming support on these systems is Parallels, which I found worked maybe 70% of the time. When it worked, the performance was great, but it also crashed a lot and flat-out refused to run some games. You could drop $5k on a Mac Studio just to get the gobs of power and memory needed to give Parallels gaming-system levels of performance, but most people would rather just build a PC at that point. I have an Xbox, because I'm pretty casual in my gaming needs and it was the system my partner was already invested in when we moved in together.

The way I see it, Macs and PCs are better for different purposes. PCs are really good for coding, network configurations, and of course gaming, whereas Macs are great for everyday use (web browsing, email, documents and spreadsheets, streaming music and videos, instant messaging, etc.) and also for creativity (digital image manipulation and artwork, audio-visual creation, desktop publishing, web design, etc.). And I am perfectly happy being cross-platform for such purposes. I point this out in a "Get a Mac" commercial parody I made in Wrapper/GoAnimate (I love making deconstruction videos on this platform)...

Actually, Macs are great for coding. They're better than PCs for many development tasks (unless, of course, you are writing Windows apps).

As did Windows: it succeeded, without its own hardware, where CP/M, Commodore OS, Unix, and a whole plethora of other flashes in the pan did not.

I wouldn't call Unix a flash in the pan. Apple built an almost $3 trillion company using Unix.

You actually don't know that. ARM is not the be-all and end-all of processors, and the same goes for the current Intel CPU architecture. Something better will come along. I'm thinking it'll be more compatible with both, or fast enough to emulate whatever CPU. It won't be soon, though.

The first x86 CPU, the 8086, was released in 1978, and the first ARM CPUs in the mid-1980s. In the last 40 years many other CPU architectures have been designed. None seems to have had the same success.

One issue is probably, as you have mentioned, software support. The developers of C & C++ compilers have a lot of experience optimizing for these architectures. x86 may own the desktop (at least for now), but ARM dominates mobile and is making serious inroads into the server market. This is largely because of power consumption, but the high prices Intel and AMD charge for powerful server CPUs help ARM too. Amazon designs its own ARM SoCs for its AWS data centers and sells that compute at a discount to x86.

Agreed. Buy the tool for the job.

I have been using a console for gaming for a while now. You can't beat sitting on the couch in front of a large TV with a multi-speaker surround sound system. I use an Xbox Series X because of its backwards compatibility, but in many ways the PS5 is a better platform. Both have more powerful GPUs than my 2020 iMac with the 5700 XT and a 10-core i9, and both seem to run recent games better than almost any PC or Mac.

Given how recent games are handling the 13900K and 4090, I’m thinking of ditching PC for consoles. I’m tired of bad PC ports and bad treatment.

It depends on your situation. If you want one device that can game, develop, run pretty much anything, and take it with you anywhere, then a gaming laptop is pretty much your only solution. So that is what I got.

Of course, it hurts that I am from the prior generation that never really got into dual-stick controllers, LOL. I went from the Nintendo 64 directly to PC mouse-and-keyboard gaming. So yes, I miss the days when a MacBook with a decent graphics adapter could actually game decently when booted into Windows.

I started gaming on a Commodore 64. I did game on a PC later, but unless it's a strategy game (e.g. Civilization), I think a console offers a better experience. I have never owned a gaming laptop and have no plans to do so. I do own a laptop, but it is almost 10 years old. I would rather do real work on a desktop.

And I loved my C64C! Archon rocked. So did Mission: Impossible. Questron…so many floppy disks…

None of the others were "made" (in the gangster-movie sense) by being chosen by IBM for the PC and becoming part of a huge, abusive monopoly. The 8086 would be a footnote in history if it had had to compete on technical merit. Even Intel originally designed the 8086 as a stopgap because its "proper" 32-bit chip (the 8800/iAPX 432) was delayed (...even the 8-bit 8080 was losing out to the Zilog Z80).

Even ARM initially outperformed Intel, but it was doomed as a desktop chip because it didn't run DOS/Windows. It became a sleeper hit because of its low power consumption and because ARM carved out a niche in embedded/mobile (with help from Apple and the Newton), so it was ready to go when mobile devices boomed.

Any successful future ISA will likely be designed and optimised for the developers of compilers and language runtimes, probably with the chip designers writing their own backends or contributing to projects like LLVM. That's a trend that started way back with the RISC movement, including ARM. It's really only x86 that has roots going back to the "let's design a nice assembly language for application programmers" days (easy porting of 8080/Z80 CP/M software was one reason IBM went x86).

Software support is probably the only reason x86 is still around. ARM was a shoo-in for the mobile market partly because established Windows applications weren't much use on a phone screen, and likewise in the server market running Linux (binary/assembly-language compatibility is an x86 Windows disease; Linux/Unix have a focus on source-level compatibility, and ARM has been well supported there since the early 1990s).

As I said in an earlier post, the issue of binary compatibility for applications should die out as Win16/Win32-era applications gradually get replaced or rewritten with modern compilers and high-level frameworks for graphics, vector processing, neural computing, etc. Source-level compatibility has always been the aim for Linux/Unix, and macOS (apart from being Unix) is in fairly good shape as a result of being pretty ruthless about killing legacy support; if Apple wants to move to a new ISA in the future, it should be a click-and-drool recompile for most.

(Really, x86 has been a curse on computing and a major reason why we can't have nice things...)

When I connect my gaming laptop to a 27” 1440p monitor and an external keyboard and mouse, what do I have?

A slower and harder-to-upgrade desktop than you would have if you built a PC in a large tower case. For example, my work machine, which I primarily use for software development, has a 32-core Threadripper. Hard to get that kind of power in a laptop.

That's if you need that type of power. I literally do all my dev work on servers I remote into, so a slower machine is very relative here. The rest is writing novels and simple graphics and video editing. So as long as Scrivener, Standard Notes, Office, and the Affinity apps run fast, I am good.

So that's perfectly fine for you if you need to compile bad mf apps locally. I don't.

I do, however, like to play Apex, Diablo 4, etc., and I like being able to do it when I travel. So for my needs, a gaming laptop is perfect.

For example, my work machine, which I primarily use for software development, has a 32-core Threadripper. Hard to get that kind of power in a laptop.

Depends on what compiler is being used and what is being compiled, but for something like Apache, bigger isn't always better.

https://openbenchmarking.org/test/pts/build-apache

Both CS:GO and Team Fortress 2 are 32-bit x86 games that run under Rosetta 2 using CrossOver's graphics API translation, but they're either slow or really slow, so I suspect Rosetta 2 isn't ideal for 32-bit x86 code.

Maybe my standards are just super-low, not being a huge gamer, but I just picked up an M2 Mac mini and CS:GO runs smooth as butter as far as I can tell. Way better than the 2011 iMac it replaced, anyway. 😄

Yep, your standards are low.

Boot Camp could still come back. The primary reason Windows for ARM won't run on bare metal on Macs is Microsoft's deal with Qualcomm, which expires later this year. Microsoft has no reason not to support Mx Macs if Apple will provide the drivers and there's no legal reason stopping them. That said, even if they do suddenly get it running, it will be limited to DirectX 11 games, just like Parallels. None of the DX12 games would work.

Please see the Parallels news release from February of this year. Yes, I'm aware you said bare metal, and this is in Parallels, but hopefully all are now aware of this.

Run Windows 11 on Mac | Parallels

Run Windows 11 on your Mac with the only Microsoft-authorized solution for Apple silicon. Fast, secure, and optimized for M1, M2, and M3 chips.

Parallels is fully authorized and blessed by Microsoft for Windows on ARM (Apple) hardware.

Apple makes booting anything other than macOS difficult (ask the Asahi Linux people); if Apple were willing to give code, examples, and development effort to the Asahi folks, I suspect we would have far better bare-metal support in Linux.

There's no way Microsoft would ever code directly for Apple's ARM hardware on such an unfriendly platform; Apple doesn't STOP others from coding for Macs, but it sure doesn't help, either. Add to that the fact that Apple could stop all of Windows from working with a firmware update or two (and they've DONE THIS: Power Computing!), and it's a complete non-starter for Microsoft.

Wrong. Microsoft wouldn’t have to do much of anything to make Windows work on Macs. It’d be up to Apple to do the same things they did with Boot Camp on Intel: write the drivers and bootloader that allow it to work in the first place.

And as for Apple not stopping people from coding for Macs, nVidia would like a word with you.

Wow. So why can't the Asahi Linux guys do it, if it's that easy?

With its Intel machines, Apple made a standard PC. We don't need Boot Camp or anything else to get an Intel Mac fully working in Windows; Microsoft's effort on that was zero: just take a normal Windows ISO and boot from it. That's not the case with an ARM Windows install, where Apple publishes no specs or details, AND the platform is nonstandard.

The fact is Apple has zero interest (so far), and MS isn't going to throw money at something that's one Apple firmware update away from breaking (without a guarantee from Apple).

I'm not sure if it's a language issue or what, but I was pretty clear that Apple doesn't help people, at all, with coding: your nVidia example proves MY point. Apple didn't stop them, but it sure didn't help them. How you could realize that and then say I was wrong in my point/post is...confusing.

Your post is wrong because you make a lot of bold assumptions and take them as fact when they are incorrect.

You say you didn’t need Boot Camp to get Windows working, yet I was there in early 2006 with a MacBook Pro trying to do that and failing. Boot Camp didn’t come out until months after the computers did, and getting Windows on that machine was a nightmare until BC was released. Apple did the work so that we could have Windows dual boot, and they continue to update and support it.

Asahi Linux is in the same place now that Windows was before Boot Camp, but Apple isn’t going to write drivers for Linux. They have written them for Windows in the past… and there’s nothing to stop them from doing it again once that exclusivity agreement expires later this year. I’m not saying they will, but it’d be a minimal amount of effort for them to do it.

Your post is wrong because you make a lot of bold assumptions and take them as fact when they are incorrect.

Then thanks for correcting me so kindly.

You say you didn’t need Boot Camp to get Windows working, yet I was there in early 2006 with a MacBook Pro trying to do that and failing. Boot Camp didn’t come out until months after the computers did, and getting Windows on that machine was a nightmare until BC was released. Apple did the work so that we could have Windows dual boot, and they continue to update and support it.

My recollection was that a Windows ISO was all that was required, and Boot Camp was a convenience. Was there a time when Apple's x86 implementation wasn't standard, so Boot Camp was actually required rather than just a convenience? More power to Apple, then, for standardizing so that the extra work wasn't required.

I'm not sure how that helps. Is your point that Apple could do that again, and Microsoft has a standard ARM Windows ISO that would work? Unless there's some ARM standard out there that Apple writes to (and Microsoft codes to), I don't see how that helps... Is there such a standard? Given that Apple has custom-made its ARM chips with all kinds of extensions, this isn't like x86 was, where the Intel x86 chips really were The Standard.

Asahi Linux is in the same place now that Windows was before Boot Camp, but Apple isn’t going to write drivers for Linux. They have written them for Windows in the past… and there’s nothing to stop them from doing it again once that exclusivity agreement expires later this year. I’m not saying they will, but it’d be a minimal amount of effort for them to do it.

Obviously, here's hoping they do that. But let's talk about what Apple wrote for Windows in the past: a few INFs and basic code for the mouse, keyboard, and a few other fairly light bits. AMD/nVidia did the graphics, Intel the chipset and associated logic, and someone else the sound, NIC, wireless, and so forth. Apple's job was really, really light compared to what it would be now, where it's almost all Apple.