
There is a problem with the new 2021 16-inch MacBook Pro...

- Thread starter JohnHerzog


The problem is more that you can't display correct color values at higher brightness for SDR content because clipping will occur.

You will just end up clipping highlights and shadows, leaving you with overexposed content, because the color profile doesn't hold the required data. There is nothing whiter than white; you would just push anything near white into being fully white, removing highlight detail in the process. The crushed blacks will simply turn greyish, expose noise and reduce contrast. The same goes for tones of red, green and blue.

This is simply not true. HDR content simply allows for a wider range of color values to be displayed all at once, but there is no stopping your white value from getting brighter.

Wanna see a whiter white?

CSS trick/bug to display a brighter white by exploiting browsers' HDR capability and Apple's EDR system: kidi.ng

^ if you are on a new MacBook, going to that page will show text that is white... and far brighter than the white that's currently on the display (even if the display is at max brightness). That's how you get white that is "whiter".

Go read what nits and candelas are, how they are calculated, and then you won't make the mistake of comparing the measurements on a 16 inch screen versus 6 inch screen.

Nits measurement is still comparable between a 16-inch screen vs a 6-inch screen. Nit as a unit means candelas per meter squared. It is independent of display size.
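To make the size-independence point concrete, here is a minimal sketch. The helper names, the 16:10 and 19.5:9 aspect ratios, and the round 16-inch and 6-inch diagonals are assumptions chosen for illustration, not measured specifications. It treats each panel as a uniform full-white rectangle viewed head-on, so luminance is simply luminous intensity divided by emitting area.

```python
import math

INCH_TO_M = 0.0254

def screen_area_m2(diagonal_in: float, aspect_w: float, aspect_h: float) -> float:
    """Area of a rectangular screen from its diagonal and aspect ratio."""
    diagonal_m = diagonal_in * INCH_TO_M
    unit = diagonal_m / math.hypot(aspect_w, aspect_h)
    return (aspect_w * unit) * (aspect_h * unit)

def intensity_cd(nits: float, area_m2: float) -> float:
    """Luminous intensity (cd) of a uniform panel at the given luminance."""
    return nits * area_m2

def luminance_nits(candelas: float, area_m2: float) -> float:
    """Luminance in cd/m^2 (nits): intensity divided by emitting area."""
    return candelas / area_m2

# Assumed panel shapes, for illustration only.
laptop_area = screen_area_m2(16.0, 16, 10)
phone_area = screen_area_m2(6.0, 19.5, 9)

# Both panels are set to the same luminance.
laptop_cd = intensity_cd(1000.0, laptop_area)
phone_cd = intensity_cd(1000.0, phone_area)

print(f"laptop: {laptop_area:.4f} m^2, {laptop_cd:.1f} cd")
print(f"phone:  {phone_area:.4f} m^2, {phone_cd:.1f} cd")
print(luminance_nits(laptop_cd, laptop_area), luminance_nits(phone_cd, phone_area))
```

The larger panel emits more candelas in total, but dividing by its larger area gives the same cd/m² figure, which is why a nit reading can be compared across screen sizes.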

Most content is encoded in sRGB or Rec. 709 with limited tonality: 8-bit, 256 shades.

I'm not arguing that white cannot be brighter. I'm saying you will lose detail in most content because the color profile doesn't retain information if pushed.

Perhaps time to visit the optometrist to ensure you don't have cataracts developing? 1000 nits is extremely bright when used indoors, unless you are sitting directly in front of a window looking at the sun.

There *is* a use case for having very bright displays when used outdoors, e.g. for camera screens. However, this is very much an edge case for most laptop computer usage. If you really do need to use your laptop in very bright surroundings there are "sun-shades" to help improve visibility.

I didn't realize monitor/display hoods still existed, as I haven't seen any since CRTs were common. An interesting evolution.

I personally prefer the maximum brightness output possible even in a dimly lit environment. I would use 1000 nits to surf the web

Do you wear sunglasses?


And I'm saying you are mistaken. Color profile has nothing to do with display brightness. The fact is that you don't lose details with your sRGB content going from 10% display brightness to 50% and then to 100%.

Making the display go from 500 nits to 1000 nits is just like making it go from 100% to, say, 200%. Your sRGB and Rec. 709 content will still map properly. This is because color profiles are entirely dependent on gamma, and not at all dependent on display brightness.

The new MacBook screens have a much wider gamut than both sRGB and Rec. 709, so there's no chance you'll lose any color information at all within those color spaces. The reason you see a loss of information when you try to push this content is that your current hardware (your display, for instance) is unable to display the pushed content. Say, if we try to "push" content to display at 1000 nits on a display that's only capable of 500 nits, then yeah, you'll lose details. That much I can concede.

But again, that is not a problem with the current MacBook screens. These screens are capable of being pushed to 1000 nits sustained full white. This is evident with HDR content. Right now, max brightness for SDR on these displays is basically about 50% of what they are truly capable of.
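The two positions in this exchange can be separated with a small sketch. It uses the standard sRGB decoding curve, and everything else is an assumption for illustration: the function names are hypothetical, and the "push" is modelled as a gain applied directly to 8-bit code values, which is a simplification of what a real grading or exposure tool does. The sketch counts how many distinct luminance levels survive when the display's white level is raised, versus when the content itself is brightened inside its 8-bit container.

```python
def srgb_to_linear(code: int) -> float:
    """Decode an 8-bit sRGB code value to relative linear light in [0, 1]."""
    v = code / 255
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4

def displayed_nits(code: int, white_nits: float) -> float:
    """Luminance when code 255 is mapped to `white_nits` (the brightness setting)."""
    return srgb_to_linear(code) * white_nits

def push_code(code: int, gain: float) -> int:
    """Brighten inside the 8-bit container: scale the code value and clip at 255."""
    return min(255, round(code * gain))

codes = range(256)

# Raising the display's white level: every code keeps its own luminance.
levels_500 = {displayed_nits(c, 500.0) for c in codes}
levels_1000 = {displayed_nits(c, 1000.0) for c in codes}

# Pushing the content instead, on a display that stays at 500 nits.
levels_pushed = {displayed_nits(push_code(c, 2.0), 500.0) for c in codes}

print(len(levels_500), len(levels_1000), len(levels_pushed))
```

Under these assumptions, both brightness settings keep all 256 levels distinct, while the pushed version collapses to 129 because every code from 128 upward lands on 255. Clipping comes from rescaling the content within a fixed range, not from raising the panel's white level.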

Wow, some folks use their Macs in extreme ways. I just use it and get the job done. If it's not affecting 7 billion other people, I think you will live.

Nits measurement is still comparable between a 16-inch screen vs a 6-inch screen. Nit as a unit means candelas per meter squared. It is independent of display size.

Why are you telling me what a nit means? Are you my video editing and colourist teacher from 1996 and every year since? In your lifetime you have watched movies, commercials and seen posters and images graded by me.


sRGB targets 80 nits.

Most colourists grade at 120 nits.

HDR videos don't brighten a full image to 1000+ nits. Ideally only the areas of the image where it is needed otherwise their grading would differ too much on the various displays and cinema screens.

Candela per square metre - Wikipedia

You sound like you just want to make things up as you go along.

Forget HDR. The objective is to enable the full 1000-nit sustained brightness output of the built-in Liquid Retina XDR display for SDR content, i.e. to increase the maximum brightness of the MacBook.

Something akin to the iPhone 13 Pro technical specifications:

- 1000 nits max brightness (typical); 1200 nits max brightness (HDR)

- SDR: 1000 nits (typical); HDR: 1250-1300 nits (sustained); Peak: 1600 nits (max)

Also, in the unit cd/m², the square metre is a constant. The only variable is the candela.


I personally prefer the maximum brightness output possible even in a dimly lit environment. I would use 1000 nits to surf the web

Any ophthalmologist or optometrist will tell you that the eye is one of the most complex and most useful organs in the human body, the complexity of which is on par with the human brain. To be very carefully used, and certainly not abused.

Methinks you will be joining the Ray Charles, Andrea Bocelli, Stevie Wonder, George Shearing appreciation society in the not too distant future. If you don't already have one, you may wish to buy and start practising on a braille keyboard. Please let us know how you get on in a few years from now...

Dude 😄

When you look out the window of your house from a dimly lit room how many 'nits' do you think the window is emitting? Is looking out the window of your house going to cause irreversible damage to your eyes? The laptop is much dimmer than even just looking out a window - In fact that is what I want; A laptop that can reproduce the illusion of looking through a window nit-for-nit color-for-color.


When the retina's light-sensing cells become over-stimulated from looking at a bright light, they release massive amounts of signaling chemicals, injuring the back of the eye as a result.

Also the high contrast between a bright screen and dark surroundings may cause eyestrain or fatigue that could lead to a headache.

Presumably, this is similar to "snow blindness"?

Wow, some folks use their Macs in extreme ways. I just use it and get the job done. If it's not affecting 7 billion other people, I think you will live.

but...but....someone is wrong on the Internet! It's ....


I don't think you have any idea what you are talking about.

When Nits Are Not Enough: Quantifying Brightness in Wide Gamut Displays

As the industry transitions to wide color gamuts, such as Rec.2020, continuing to use nit counts to compare display brightness levels is inadequate at best, misleading at worst.

And you are the one making things up:

The Dolby Vision format is capable of representing videos with a peak brightness up to 10,000 cd/m2 and a color gamut up to Rec. 2020.

Source:

Dolby Vision - Wikipedia

Do we need to continue duking this out so you can tell me about which movies you helped grade? Or can we accept that your understanding of nits/brightness/luminance relative to color space is flawed?

Viewing Environment & Calibration

Accepted standards for viewing environment & display calibration

Wow, some folks use their Macs in extreme ways. I just use it and get the job done. If it's not affecting 7 billion other people, I think you will live.

Such arrogant, patronizing comments really add nothing useful to the conversation.

Everyone is entitled to use their own device any way they see fit, regardless of whether you consider it extreme or not. And nobody asked whether you think he can live or not. What you think about his ability to live without this feature is totally irrelevant.

sRGB targets 80 nits.

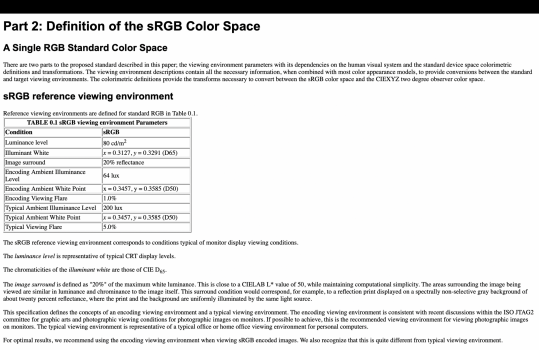

By the way, this is fundamentally misleading. Here's the sRGB reference viewing condition:

While one would theoretically use the viewing conditions which represent the actual or typical viewing environment, if this is done with 24 bit images a significant loss in the quality of shadow detail results. This is due to encoding the typical viewing flare of approximately 5.0 percent into a 24 bit image as opposed to the encoding viewing flare of 1 percent. Therefore we recommend using the encoding viewing environment for most situations including when one's viewing environment is consistent with the typical viewing environment and not the encoding viewing environment.

The encoding ambient illuminance level is intended to be representative of a dim viewing environment. Note that the illuminance is at least an order of magnitude lower than average outdoor levels and approximately one-third of the typical ambient illuminance level.

Source: https://www.w3.org/Graphics/Color/sRGB.html

Again, not everyone sits in a dark room to view sRGB images on their computers at 80 nits.


Why are you telling me what a nit means? Are you my video editing and colourist teacher from 1996 and every year since? In your lifetime you have watched movies, commercials and seen posters and images graded by me.

Was Bruce Willis in any of them? If so, I have questions.


Don't contest with nonsense because you are actually contesting thousands of professionals like me just so you can support some idiotic thread created by someone who thinks our displays should be set to 1000 nits minimum. What utter madness.

This is the third time someone has made a thread with the same suggestion and I am one of dozens of members who have said the same thing.

We grade for SDR and print between 80-120 nits. We cannot have a default or minimum 1000 nits.

We grade HDR a little higher but we use a preview monitor to visualise how it would look on different displays. We cannot have a default or minimum 1000 nits.

There's no contesting this. You're not a pro if you try to. Give up.

Was Bruce Willis in any of them? If so, I have questions.

Would have been proud to work on the original Die Hard but nothing else he did.


What? No. Have you read this thread?

The OP and many others are asking to see if the 1000 nits of sustained brightness will eventually be unlocked for regular use and not just inside of HDR. That means 1000 nits of max brightness.

Ignoring your "professional use case", you do realize there are people who want to use their MacBooks outdoors, in extremely bright sunlight, where even 1000 nits just barely makes the screen visible, right?

Or are you saying only color grading can be considered a "professional use case" now? That's nonsense.

And again, please don't try to change the subject. Do you realize your grading environment has to be at a specific ambient lighting lux for these nits numbers you are throwing out to make sense? No? Then please stop claiming to be a pro. You are not as knowledgeable on this topic as you think you are.

According to a study by Michigan State University neuroscientists, spending too much time in dimly lit rooms and offices may actually change the brain's structure and hurt one's ability to remember and learn.

The Michigan State University researchers studied the brains of Nile grass rats after exposing them to dim and bright light for four weeks.

The rodents exposed to dim light lost about 30 percent of capacity in the hippocampus, a critical brain region for learning and memory, and performed poorly on a spatial task they had trained on previously.

The rats exposed to bright light, on the other hand, showed significant improvement on the spatial task. Further, when the rodents that had been exposed to dim light were then exposed to bright light for four weeks (after a month-long break), their brain capacity - and performance on the task - recovered fully.

msutoday.msu.edu


Does dim light make us dumber?

Spending too much time in dimly lit rooms and offices may actually change the brain's structure and hurt one's ability to remember and learn, indicates groundbreaking research by MSU neuroscientists.
