Think of the

- G4 Cube

- Newton

- Pippin

- Puck Mouse

- Xserve/Xserve Raid

You missed the Apple III and the Lisa. As I said, they take risks - I didn't say they never failed.

You can't count the Xserve there - it was successful for years, until the switch to Intel, better support for Windows networks on Macs, and the increasing respectability of Linux made it irrelevant.

Who knows how the G4 Cube would have done if it hadn't had cracking and overheating problems? The Newton was pretty influential and was possibly axed before its time because of problems elsewhere in Apple. The "hockey puck" mouse... no, you're right, that was just unmitigated form-over-function stupidity.

Apple prices its Pro applications extremely competitively. FCPX, Logic X, Aperture: they are all very cheap and thus target the prosumer market as well as the pro user market. Clearly they want to put their software into as many hands as possible.

Apple make their money selling hardware, not software. Offering "pro" software at low prices encourages people to buy Macs.

This is a relatively recent development: FCP, Aperture and OS X Server used to be far more expensive. The "pro" market is shrinking - Apple is betting big on the "prosumer" market being more profitable.

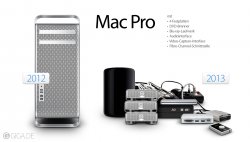

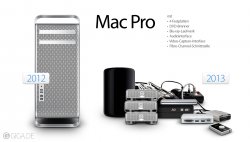

But then comes the new Mac Pro, and the individual prices of all its components point to a higher system price than before.

The published prices for Intel Xeon processors and AMD FirePro graphics tell you absolutely zip, nothing, nada about the possible price of the new Mac Pro. Apple are a huge buyer of components. This is Intel's flagship for Thunderbolt 2 and AMD's standard-bearer for OpenCL vs. CUDA, and both Intel and AMD would quite like Apple to keep using their lesser chips in its mainstream computers, too. Apple will have got a very, very good price for this stuff. The price of the Mac Pro is more about how much markup Apple wants to make.

Target the system at the $1,500-2,000 price range and it would perhaps be another top seller.

...but how many of those sales would represent the lost sale of an iMac, Mac mini, MacBook Pro or "proper" Mac Pro?

Certainly, the chances of an "xMac" appearing at, or around the same time as, the new Mac Pro are zero. If you're in the market for a headless Mac with a decent GPU, Apple want you to buy a Mac Pro.

fanboi, I use what works best for me, and this certainly isn't it.