Or in a Z with four GPUs... Asynchronous Shaders. Imagine that working in a Mac Pro with dual GPUs with extreme parallel/asynchronous capabilities.

I have never been more excited about the execution of any technology than about Async.


Will there be a new Mac Pro in 2015?

- Thread starter Anto38x


- Status

- Not open for further replies.

I made this picture just for you.

Why hasn't a PC hardware company at least attempted to copy the nMP form factor yet?

A deep, slavish copy of the design?

Maybe in part because Apple has spent tens of millions suing Samsung over phones and would probably do the same with the Mac Pro if a clone were too exact. There are "all-in-one" iMac-like systems out there, but the Mac Pro design is a bigger, more distinctive step away, and Apple has probably piled up the design IP high and deep.

Isn't the Tube the future and the Box a dinosaur? Wasn't that sort of in the Kool-Aid that Apple was serving up a couple of years ago?

I don't remember them saying the boxes were dinosaurs, but rather that they didn't have to conform to those constraints. With modern components they could create a system compact enough to sit on the desk rather than beside it.

There are lots of computer form factors that Apple doesn't sell. Xserve is dead as an Apple product, but that doesn't mean nobody is going to use a rack-mounted computer anymore. Apple itself certainly does; it just doesn't make them for public consumption.

Classic desktops are shrinking relative to the overall personal computer market, though. But there is no extinction event the dinosaurs couldn't possibly adapt to. Their role and influence are shrinking, the same as the slow fade of big-iron mainframes and late "minicomputers" (still cabinet-sized).

Well?

If Apple's factory hadn't caught up with orders for 10-12 months, you probably would have seen some more near-clones. But most system vendors are experimenting with "smaller"/small form factor (SFF) options.

Before the new Mac Pro design rolled out, Boxx had this system.

https://www.boxxtech.com/products/rendering-and-simulation/renderpro?PROD155

It has a slightly different focus (two Xeon E5s and one GPU, not particularly concerned about noise) as opposed to (one Xeon E5 and two GPUs, with noise limitation a focus), but neither one is a box inspired by the basic late-1980s design with standard slots.

Right now most vendors probably feel they can explore SFF without having to be cylinder-shaped, black, and slavishly copying Apple's design. Most are still taking the shotgun, try-everything approach. That costs enough in R&D. It isn't like they need more design dilution.

Right now the top three workstation vendors (Dell, HP, Lenovo) are engaging more in being the anti-Apple than in trying to copy it exactly. There is more than one subset of users that Apple isn't explicitly targeting (and a smaller group it largely never targeted: people looking primarily for a subcomponent vendor).


The asynchronous shader part is the best, about 11:59-17:00.

I don't think this is as unique to AMD as he implied. Maxwell 2 has some foundational work (and I'm sure there will be some proprietary CUDA extension to match that). Vulkan and DirectX 12 will be more hardware-neutral ways of implementing it, though.

http://www.anandtech.com/show/9124/amd-dives-deep-on-asynchronous-shading

and it really sounds like he's talking about the idea of the Mac Pro.

No, not really. Asynchronous shading is more about putting two 'jobs' on a single GPU. The Mac Pro is more about having a 'compute' GPU and a 'graphics' GPU. Something that weaves more work onto a single GPU doesn't particularly support expanding to two.

Apple could possibly get more performance out of the Mac Pro hardware if it could also "walk and chew gum at the same time" with the workloads it distributes onto the GPUs. For a preemptive, multitasking OS, general-purpose computation pragmatically means doing more than one thing at the same time. When GPGPUs can do more of that, they will be better matched to pull more load off the CPUs. In that respect, yes, "over time, put more load on GPGPUs" is where the Mac Pro design focus is going. Software-wise, Apple is somewhat surprisingly behind the curve in getting that out the door as a production implementation.

Metal has separate render and compute command queues, so conceptually it could do some "walk and chew gum" work if the dependencies are clear. But Metal for the Mac is arriving about two years after the new Mac Pro showed up. It is nice... hopefully it is not too late.
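A toy model of the "walk and chew gum" idea on a single GPU may help. It assumes a frame's graphics work leaves stretches where the shader ALUs sit idle, and that compute work can be split at any point to fill them (idealised preemption). `Segment`, `serial_frame_ms` and `async_frame_ms` are hypothetical names and the numbers are made up; this is not how Metal, Vulkan or DirectX 12 actually schedule work.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One slice of a graphics pass: ALU-busy time followed by ALU-idle time (ms)."""
    busy_ms: float
    idle_ms: float


def serial_frame_ms(graphics: list[Segment], compute_ms: list[float]) -> float:
    """Graphics and compute run back to back, as on a single in-order queue."""
    return sum(s.busy_ms + s.idle_ms for s in graphics) + sum(compute_ms)


def async_frame_ms(graphics: list[Segment], compute_ms: list[float]) -> float:
    """Compute work fills the graphics pass's idle slots; any leftover runs after it.

    Assumes compute work can be split at arbitrary points (idealised preemption).
    """
    idle_total = sum(s.idle_ms for s in graphics)
    graphics_total = sum(s.busy_ms + s.idle_ms for s in graphics)
    leftover = max(0.0, sum(compute_ms) - idle_total)
    return graphics_total + leftover


if __name__ == "__main__":
    frame = [Segment(4.0, 2.0), Segment(3.0, 1.0)]  # hypothetical frame
    jobs = [2.0, 3.0]                               # hypothetical compute jobs
    print(serial_frame_ms(frame, jobs))
    print(async_frame_ms(frame, jobs))
```

The gain is bounded by how much idle time the graphics work leaves, which is why this helps a single busy GPU more than it helps a machine that already dedicates a second GPU to compute.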

A history of well designed failures.

http://www.blakespot.com/sgi/images/sgi_front.jpg

Those three failed even though they were more modular than the nMP.

I would not exactly call the SGI O2 a failure. It's still one of the favourites of many die-hard SGI users. It's silent, does not take up too much real estate, and keeps your electricity bill at a reasonable level.

But it's a very good example in comparison to the Mac Pro. Like the Mac Pro, it was targeted at certain user groups, mainly video and visualization tasks. You could add a decent video in/video out card to the system. The GPU shared memory with the system, so you could access up to 1 GB of texture memory, which was unheard of at the time, or at least very, very expensive on other workstations.

So if the O2 did fit your usage pattern, it was a pretty package with a lot of punch.

However, if you did not care about video, it had some serious shortcomings. The R5000 CPU was rather slow compared to the competition. The R12000 was fast, but you had to sacrifice one HDD slot for it. Memory was custom 288-pin memory built exclusively for this system. Instead of DVI, it used LVDS output for the digital flat panel display.

So, like the Mac Pro, it was (is) a highly opinionated system. Its many custom form factors made for a cool design, but limited expansion options and made expansion quite expensive. I just hope the nMP does not follow in the footsteps of the O2.

P.S.

I still have two O2s up and running. I even retrofitted them with SSDs.


What can you do with them in 2015?

Ah, yes, the famous AnandTech article again. It was already pointed out on the AnandTech forums that Ryan got it wrong about Maxwell 2.0 and its async capabilities: it has 1 queue engine capable of 32 compute tasks. AMD GCN from 1.1 onward has 1 graphics engine and 8 compute engines, each capable of 8 queues at the same time, which comes to 64 or more compute tasks. How does that translate? Well, in all fairness, for Maxwell it would not be as bad as for Kepler.
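The queue arithmetic in the post, as a tiny sketch. The per-architecture counts are the poster's figures, not checked here against vendor documentation, and `total_compute_queues` is a hypothetical helper. More queues means more independent work that can be in flight at once, not proportionally more throughput.

```python
# Compute-queue counts as stated in the post (not vendor-verified here).
ARCHITECTURES = {
    # name: (compute engines, queues per engine)
    "Maxwell 2 (as described)": (1, 32),
    "GCN 1.1+ (as described)": (8, 8),
}


def total_compute_queues(engines: int, queues_per_engine: int) -> int:
    """Number of queues that can hold independent compute work at once."""
    return engines * queues_per_engine


for name, (engines, per_engine) in ARCHITECTURES.items():
    print(f"{name}: {total_compute_queues(engines, per_engine)} compute queues")
```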


All of what you are writing is absolutely true. Let me put it to all of us this way: I don't know how it will be executed, but I know that AMD experimented on its own with the idea of one GPU for compute and the other for graphics, and has been working on drivers and asynchronous queues for it.

Also to note: it is possibly the first time we see such a good form of out-of-order execution, something Apple's own Cyclone cores are famous for.

Probably been said before, but the nMP is like sticking a round peg in a square hole. Apple made a great attempt, but computer components by their very design are not round or cylindrical. Why don't you guys wake up and see that the nMP design is not only flawed but probably doomed?

Why hasn't a PC hardware company at least attempted to copy the nMP form factor yet? Isn't the Tube the future and the Box a dinosaur? Wasn't that sort of in the Kool-Aid that Apple was serving up a couple of years ago?

Well?

What can you do with them in 2015?

Let's call it a hobby. Mostly some coding and toying around with various graphics/video tools. Most modern internet stuff and anything HD video is a no-go on these systems in 2015.

Turns out MVC may not have been right in saying that the Fury X is OC'ed to hell.

http://www.techpowerup.com/reviews/AMD/R9_Fury_X_Overvoltage/2.html

http://tpucdn.com/reviews/AMD/R9_Fury_X_Overvoltage/images/scaling.gif

-48 mV on the core and still an 80 MHz core overclock. However, the benefit of it is meaningless.

http://tpucdn.com/reviews/AMD/R9_Fury_X_Overvoltage/images/memory.gif

Much better stats with memory OC. As I said before, downclocking and undervolting the core would bring power consumption into the 125 W range, while increasing memory bandwidth would bring massive performance boosts. If only pro apps could use the memory performance...
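The undervolt argument rests on the first-order CMOS rule that dynamic power scales with voltage squared times frequency. A minimal sketch of that rule, assuming a hypothetical 1.20 V stock core voltage (not a measured Fury X value) and ignoring leakage and temperature; only the 1050 MHz reference clock and the -48 mV/+80 MHz figures come from the thread. `relative_dynamic_power` is a hypothetical helper.

```python
def relative_dynamic_power(v0: float, f0: float, v1: float, f1: float) -> float:
    """Ratio P1/P0 under the first-order CMOS model P_dyn ~ C * V^2 * f.

    Leakage (static) power and temperature effects are ignored.
    """
    return (v1 / v0) ** 2 * (f1 / f0)


BASE_V = 1.20    # hypothetical stock core voltage (V), not a measured value
BASE_F = 1050.0  # Fury X reference core clock (MHz)

# -48 mV together with an 80 MHz overclock, as described in the post
print(round(relative_dynamic_power(BASE_V, BASE_F, BASE_V - 0.048, BASE_F + 80), 3))

# A hypothetical downclock-plus-undervolt operating point
print(round(relative_dynamic_power(BASE_V, BASE_F, 1.00, 900.0), 3))
```

Under this model the -48 mV/+80 MHz point comes out roughly power-neutral, while cutting clock and voltage together shrinks dynamic power much faster than it shrinks clock.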


Probably been said before, but the nMP is like sticking a round peg in a square hole. Apple made a great attempt, but computer components by their very design are not round or cylindrical. Why don't you guys wake up and see that the nMP design is not only flawed but probably doomed?

What does that have to do with anything? The chassis is cylindrical, but the components are still affixed to a flat surface inside. The only thing the nMP design prevents is swapping out for off the shelf GPU upgrades.

Apple made a great attempt, but computer components by their very design are not round or cylindrical

That's not necessarily true. Components aren't inherently square; they're mostly round (wires, capacitors, etc.). That they're placed on flat, square boards is a means to ease manufacturing, not something needed for them to work, and almost certainly not the best use of space.

Let's call it a hobby. Mostly some coding and toying around with various graphics/video tools. Most modern internet stuff and anything HD video is a no-go on these systems in 2015.

I can imagine. A cousin has a 2007 MBP with OS X 10.5, and nearly all websites and software ask her to upgrade to 10.6.8 at minimum.

Heh, that would be something. I would love to see bent silicon wafers.

Still, they're more likely flat because that's how we've learned to manufacture them. That doesn't mean flat is optimal, though, and it's probable other shapes would perform better. Idk, if a manufacturer wants to take the attitude "just because things are the way they are today, that doesn't mean it's the best... let's experiment", then hey, I'm backing it. That's how technology progresses.

...Apple made a great attempt, but computer components by their very design are not round or cylindrical

How many computer fan components are not round along one of their axes? Not many, if any.

After taking away the outer case, the power and case-release buttons, and the fan, what is left on the new Mac Pro design that is round?

Yes, the outer case is designed in concert with the single round fan. It isn't so much that Apple is obsessed with round as that many classic PC designs completely ignore cooling until the end. The Mac Pro is not a cooling-design-by-committee system. Whether a committee or a single design team does the cooling implementation really has little to do with the natural size/shape of the computer components.

What the Mac Pro is not is a container for arbitrary components.

Seymour Cray had a 'misguided' round computer.....

I guess that design was doomed to be unsuccessful too, huh?

Why don't you guys wake up and see that the nMP design is not only flawed but probably doomed?

It is not going to fail or not based primarily on the case shape.

Turns out MVC may not have been right in saying that the Fury X is OC'ed to hell.

http://www.techpowerup.com/reviews/AMD/R9_Fury_X_Overvoltage/2.html

http://tpucdn.com/reviews/AMD/R9_Fury_X_Overvoltage/images/scaling.gif

-48 mV on the core and still an 80 MHz core overclock. However, the benefit of it is meaningless.

http://tpucdn.com/reviews/AMD/R9_Fury_X_Overvoltage/images/memory.gif

Much better stats with memory OC. As I said before, downclocking and undervolting the core would bring power consumption into the 125 W range, while increasing memory bandwidth would bring massive performance boosts. If only pro apps could use the memory performance...

I'm going to guess that you didn't actually read the article.

The conclusion was that 150W of EXTRA power was needed to gain 3fps.

Nowhere do I see anything that even begins to support your theory that there is a magic setting where the Fury will sip power and post high speeds at anything.

You seem to see things that aren't there. They found little room for RAM overclocking and even less benefit.

It's obvious that you wish it were true and that this card would move the nMP out of the "joke GPU" category. But to anyone with eyes and a brain, no.

Look again. -48 mV on the core allows an 80 MHz increase in core clock with 40 W LESS power consumption. Also, the test is only in BF3, which is not the best way to test this, as has been shown on the AnandTech forum, again.

As we both agree, overclocking the Fury's core does not help much. However, overclocking the memory does help a lot. And I did not cherry-pick information just to prove my point; everything I said is valid and consistent with my statement: undervolt and underclock the core, and get as much bandwidth as possible.

Only you want people to believe the nMP has joke GPUs, as you put it. I wonder why...


Apple cares about GPU efficiency. Most high-end desktop cards consume north of 250 W. Apple has chosen to include 2 efficient GPUs, with a power budget of 125-150 W each. Let's take a look at the D700 and see what this tradeoff got us.

| | Off-the-shelf AMD 7970 (full Tahiti) | D700 (full Tahiti) |
|---|---|---|
| GPU clock | 925 MHz | 850 MHz |
| Memory clock | 1375 MHz | 1370 MHz |
| Power consumption | 250 W | 125-150 W |
| Single precision | 3800 GFLOPS | 3500 GFLOPS |
| Double precision | 947 GFLOPS | 870 GFLOPS |

The lesson here is that Apple has chosen 2 efficient GPUs that combined have considerably more power than a single higher-clocked version of the same GPU. There are diminishing returns when you start ramping up voltage and clock speeds.
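The FLOPS figures in the comparison follow from peak = shaders × 2 FLOPs (one fused multiply-add) × clock. A small sketch that recomputes them and adds GFLOPS per watt, assuming 2048 stream processors in a full Tahiti die and Tahiti's 1/4-rate double precision; the power figures are the ones quoted in the post (the D700's 125-150 W is an estimate, not a measurement), and the helper names are hypothetical.

```python
TAHITI_SHADERS = 2048  # stream processors in a full Tahiti die (7970 / D700)


def peak_sp_gflops(shaders: int, clock_mhz: float) -> float:
    """Peak single precision: one FMA (2 FLOPs) per shader per clock."""
    return shaders * 2 * clock_mhz / 1000.0


def peak_dp_gflops(shaders: int, clock_mhz: float) -> float:
    """Tahiti runs double precision at 1/4 of its single-precision rate."""
    return peak_sp_gflops(shaders, clock_mhz) / 4


cards = {
    # name: (core clock in MHz, board power in W as quoted in the post)
    "7970": (925.0, 250.0),
    "D700 @125 W": (850.0, 125.0),
    "D700 @150 W": (850.0, 150.0),
}

for name, (clock, watts) in cards.items():
    sp = peak_sp_gflops(TAHITI_SHADERS, clock)
    dp = peak_dp_gflops(TAHITI_SHADERS, clock)
    print(f"{name}: {sp:.0f} SP GFLOPS, {dp:.0f} DP GFLOPS, {sp / watts:.1f} GFLOPS/W")

# Two D700s versus one 7970
print(f"2x D700: {2 * peak_sp_gflops(TAHITI_SHADERS, 850.0):.0f} SP GFLOPS")
```

Peak numbers only say what the silicon could do; whether two GPUs beat one in practice depends on software actually splitting the work.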

What this means for Fury is that if Apple chooses to use it, it will likely be an undervolted, underclocked and better-binned version of what is shipping in the Fury X, similar to what was done with the D700. Apple isn't going to magically get a higher-clocked, lower-power version than what AMD has shipped or will ship in the Fury Nano. Rumors have the Fury Nano at 175 W; chances are Apple would need to reduce that even further to fit within the Mac Pro's power envelope. Obviously, if you need the super-fast, high-clocked, power-hungry GPU on the Mac, you have no choice (besides an external card via Thunderbolt), but Apple made a compromise that results in a faster and quieter machine (once the software catches up to take advantage of dual GPUs).

When it comes to memory overclocking, very few applications are bandwidth-limited. This is why Fury is not crushing Nvidia's Maxwell (and is losing a lot of gaming benchmarks), despite its significantly higher bandwidth.
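One standard way to reason about when extra bandwidth helps is the roofline model (swapped in here as an illustration; neither poster used it): a kernel's attainable throughput is the lower of peak compute and arithmetic intensity × bandwidth. The sketch assumes Fury X's 4096 shaders at 1.05 GHz and 512 GB/s of HBM, and a memory overclock from 500 to 560 MHz (the TechPowerUp sample's result); the kernels' arithmetic intensities are hypothetical.

```python
FURY_X_PEAK_GFLOPS = 4096 * 2 * 1.050  # 4096 shaders x 2 FLOPs (FMA) x 1.05 GHz
FURY_X_BW_GBS = 512.0                  # HBM at 500 MHz


def attainable_gflops(intensity: float, peak: float, bandwidth: float) -> float:
    """Roofline model: a kernel is capped by compute or by memory traffic.

    intensity: arithmetic intensity in FLOPs per byte moved from memory.
    """
    return min(peak, intensity * bandwidth)


# FLOPs/byte at which the compute roof and the bandwidth roof meet
ridge = FURY_X_PEAK_GFLOPS / FURY_X_BW_GBS

oc_bw = FURY_X_BW_GBS * 560 / 500  # memory OC from 500 MHz to 560 MHz

print(f"ridge point: {ridge:.1f} FLOPs/byte")
for ai in (2.0, 8.0, 32.0):  # hypothetical kernels
    stock = attainable_gflops(ai, FURY_X_PEAK_GFLOPS, FURY_X_BW_GBS)
    oc = attainable_gflops(ai, FURY_X_PEAK_GFLOPS, oc_bw)
    print(f"AI {ai:>4}: {stock:7.1f} -> {oc:7.1f} GFLOPS ({oc / stock - 1:+.0%})")
```

Under this model a memory overclock only pays off for kernels left of the ridge point; compute-bound work sees nothing from it, which is the crux of the disagreement in the thread.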


Relevant to the discussion is this forum post, where someone limits the power consumption of a Fury X to potential Fury Nano limits and sees very little decrease in performance. The catch is that I don't see any good measurements of power consumption, so who knows whether limiting power through the graphics drivers is actually doing anything.

Turns out MVC may not have been right in saying that the Fury X is OC'ed to hell.

I don't know why you seem to have such a hard time accepting obvious facts.

I stated that the Fiji chips in the Fury X were already OC'd by AMD because they were behind Nvidia.

At their intro, AMD claimed they were an "Overclocker's Dream"

Nobody, and I mean NOBODY has found this to be true.

Here are a few quotes from the article you linked to prove me wrong:

"My sample's memory doesn't overclock much. I was able to go from the default 500 MHz to 560 MHz, beyond which artifacts start appearing."

"As you can see, power ramps up very quickly, much faster than maximum clock or performance. From stock to +144 mV, power draw increases by 27%, while overclocking potential only went up by 5% and real-life performance increases by only 3%."

"Looking at the numbers, I'm not sure if a 150W power draw increase for a mere 3 FPS increase is worth it for most gamers."

So, where do you see additional OC potential? How is this card not already "OC'ed to hell"? It takes an extra 150 watts to gain a 3% increase. I am sorry, but if you don't understand what this means, you just don't get it.
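The quoted TechPowerUp figures can be turned into a single performance-per-watt number. A minimal sketch using only the article's "+27% power, +3% real-life performance" figures; `perf_per_watt_change` is a hypothetical helper.

```python
def perf_per_watt_change(perf_gain: float, power_gain: float) -> float:
    """Relative change in performance per watt, given fractional gains (0.03 = +3%)."""
    return (1 + perf_gain) / (1 + power_gain) - 1


# +3% real-life performance for +27% power, as quoted from the article
print(f"{perf_per_watt_change(0.03, 0.27):+.1%}")
```

By that measure the overvolted card delivers roughly a fifth less performance per watt than stock.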

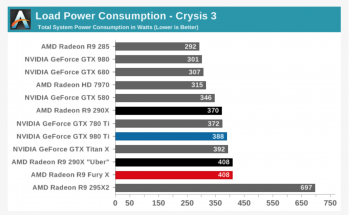

And there is no way in high holy heck that Apple is going to get anything like that in the 7,1. Since you seem to prefer AnandTech, I have attached a power draw graph from their Fury review. Note that the Fury X draws almost 100 watts MORE than the 7970. And to put a 7970 in the 6,1, they had to throttle it down. So how much throttling will Fury need? I think we can agree that after shedding what is needed, it will no longer be a "Fury" but at best a "Mild Tantrum".

I don't know why you are trying to prove things that aren't provable by linking to articles that prove you wrong. Maybe spend a moment reading the comments; they are 4 to 1 in agreement that the card is already maxed out. Read that article about OC'ing a 980 Ti: it can go up 20%, with no additional 150 watts needed and no paltry 3% increase in fps.

I am sure some version of this will eventually be in the 7,1. But it will be so watered down as to be laughable. Hawaii is a bad fit for the nMP, and Fiji won't be a good fit until they come out with a downclocked and neutered Nano. And at that point the whole "thermal core" business gets exposed. There was never a good reason to limit GPUs to 125 watts. The fact that AMD's GPUs have only needed MORE power for the last 2 generations puts Apple in a bad spot.

Don't be too surprised if the next nMP gets mobile GPUs, the only ones from AMD that fit within the nMP's artificial constraints.
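A rough way to put a number on "how much throttling will Fury need". This sketch assumes AMD's rated 275 W typical board power and 1050 MHz clock for the Fury X, and the common rule of thumb that power scales with clock cubed when voltage falls along with clock. That cube law is an assumption, not a measurement; it ignores leakage and the minimum voltage a chip needs. The 125-150 W budgets and the 175 W Nano rumour come from the thread; `clock_for_budget` is a hypothetical helper.

```python
FURY_X_BOARD_W = 275.0    # AMD's rated typical board power for the Fury X
FURY_X_CLOCK_MHZ = 1050.0


def clock_for_budget(budget_w: float, base_w: float, base_mhz: float) -> float:
    """Clock that fits a power budget if power scales with clock cubed.

    The cube assumes voltage falls linearly with clock (P ~ V^2 * f, V ~ f),
    a rough rule of thumb that ignores leakage and voltage floors.
    """
    return base_mhz * (budget_w / base_w) ** (1 / 3)


for budget in (125.0, 150.0, 175.0):
    mhz = clock_for_budget(budget, FURY_X_BOARD_W, FURY_X_CLOCK_MHZ)
    print(f"{budget:.0f} W -> ~{mhz:.0f} MHz")
```

If the cube law held, a 125-150 W Fiji would land somewhere around the D700's clock range; real chips hit a voltage floor, so actual clocks at those budgets could be lower.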


The reason for the small drop in performance when power is limited from CCC (or whatever app is used for it) is the scale of the GPU. Fury has 4096 cores. It's extremely wide. Every core needs its own portion of power. Every core has its own clock. Therefore increasing the clock rate significantly increases power consumption, but letting it run slower reduces power consumption a lot without affecting overall performance to the same degree.
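The "wide and slow beats narrow and fast" argument can be shown with a toy model. It assumes throughput scales with cores × clock and that dynamic power scales with cores × clock cubed (voltage proportional to clock); both are simplifications, and the core counts and relative clocks are hypothetical rather than real GPU specs.

```python
def relative_throughput(cores: int, clock: float) -> float:
    """Peak throughput scales with cores x clock (arbitrary units)."""
    return cores * clock


def relative_power(cores: int, clock: float) -> float:
    """Dynamic power with V proportional to f: cores * V^2 * f ~ cores * f^3."""
    return cores * clock ** 3


wide = (4096, 0.5)    # hypothetical: many cores at a low relative clock
narrow = (2048, 1.0)  # hypothetical: half the cores at twice the clock

for name, (cores, clock) in (("wide", wide), ("narrow", narrow)):
    print(name, relative_throughput(cores, clock), relative_power(cores, clock))
```

Both designs reach the same throughput, but in this model the wide one needs a quarter of the power; real chips fall short of that because of leakage and voltage floors.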

It is the exact opposite for Maxwell GPUs. Tom's Hardware's review of the GTX 980 and 970 showed that in GPGPU situations, non-reference cards drew only 20 W less than the R9 290X while still being less powerful than the R9 290X. To reach its rated 4.6 TFLOPS of compute power, the card needed to sustain its highest turbo clock constantly, which is what made it consume 277 W. With a 165 W clock cap, you will never reach that amount of compute power.

That's because Maxwell GPUs are very high-clocked but relatively "thin", with big internal caches. That's what makes them really efficient in games but really inefficient in GPGPU. GCN cores, as we can see, can be squeezed to 125 W and still deliver 3.5 TFLOPS of compute power, whereas a GTX 980 squeezed to 125 W will be much less powerful. With a BIOS clock cap, its power consumption will not exceed the 165 W limit (or will be only slightly higher), but it will not reach the Maxwell GPU's potential. That's why Green500 says the GCN architecture is the most power-efficient architecture that has EVER been on this planet. Power efficiency is not how little power your GPU uses, but how much performance you get from each watt consumed. A 125 W GPU from AMD gets 3.5 TFLOPS of compute power. A 120 W GPU from Nvidia gets 2.3 TFLOPS of compute power. Which one is more power efficient?
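The efficiency question at the end of the post is a division. A minimal sketch using only the figures as quoted in the post (they are the poster's numbers, not independent measurements); `gflops_per_watt` is a hypothetical helper.

```python
# Figures as quoted in the post; they are the poster's numbers, not measurements.
gpus = {
    "AMD GCN @125 W": (3500.0, 125.0),
    "Nvidia @120 W": (2300.0, 120.0),
    "GTX 980 @277 W (GPGPU load)": (4600.0, 277.0),
}


def gflops_per_watt(gflops: float, watts: float) -> float:
    """Efficiency = compute throughput delivered per watt consumed."""
    return gflops / watts


for name, (gflops, watts) in gpus.items():
    print(f"{name}: {gflops_per_watt(gflops, watts):.1f} GFLOPS/W")
```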

MVC, I'm starting to think you have problems reading and understanding what you read. How can someone take the statement that a GPU gets much better power efficiency from downclocking and undervolting the core and overclocking the memory to mean that the GPU overclocks great as a whole? Only you believe that. MVC, get real. People have shown that AMD GPUs get big efficiency gains from downclocking and undervolting. It was already shown in this thread. Hawaii could run at 145 W TDP with an 850 MHz core clock, and Grenada is even more power efficient than Hawaii. Check the power consumption of the HIS IceQ 390 (the model closest to a "reference" 390): it has a 1000 MHz core clock (5% higher than the R9 290), 384 GB/s of bandwidth (64 GB/s more than the R9 290) and double the memory, while using 5 W less power than the R9 290.

Every AMD GPU is suitable for the Mac Pro, no matter how you argue with that.
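The bandwidth figures in the Hawaii/Grenada comparison follow from bus width × per-pin data rate. A sketch assuming the reference 512-bit buses, 5 Gbps memory on the R9 290, 6 Gbps on the R9 390, and a 947 MHz R9 290 reference clock; the 1000 MHz IceQ clock is from the post, and `gddr5_bandwidth_gbs` is a hypothetical helper.

```python
def gddr5_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth = bus width in bytes x per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


r9_290 = gddr5_bandwidth_gbs(512, 5.0)  # R9 290 reference: 512-bit bus, 5 Gbps
r9_390 = gddr5_bandwidth_gbs(512, 6.0)  # R9 390 reference: 512-bit bus, 6 Gbps
print(r9_290, r9_390, r9_390 - r9_290)

clock_gain = 1000 / 947 - 1  # 1000 MHz (390 IceQ) vs 947 MHz (R9 290 reference)
print(f"{clock_gain:.1%}")
```

On these assumptions the clock advantage works out to a little over 5%, in line with the post's rounded figure.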


I know you are trying to sell your own video cards, but can you at least address my argument above, where I offer an explanation for Apple's decision to go with 2 low-powered GPUs over 1 high-powered one?

I am sure some version of this will eventually be in the 7,1. But it will be so watered down as to be laughable. Hawaii is a bad fit for the nMP, and Fiji won't be a good fit until they come out with a downclocked and neutered Nano. And at that point the whole "thermal core" business gets exposed. There was never a good reason to limit GPUs to 125 watts. The fact that AMD's GPUs have only needed MORE power for the last 2 generations puts Apple in a bad spot.

Don't be too surprised if the next nMP gets mobile GPUs, the only ones from AMD that fit within the nMP's artificial constraints.
