Which of these possible Mac Pro scenarios do we think are most likely?

Scenario #1 - Haswell

Tagline: "Fury Roadmap"

Availability: announced August-September 2015 along with new iMacs, shipping October-December 2015

Processors: Xeon Haswell-EP (E5 v3), configurable up to 18 cores (e.g., Xeon E5-2699 v3, 2.3GHz, 18 cores)

Memory: 2133MHz DDR4 memory: configurable to 16GB, 32GB, 64GB, 128GB

Storage: PCIe-based 2GB/sec flash storage: configurable to 512GB, 1TB, 2TB

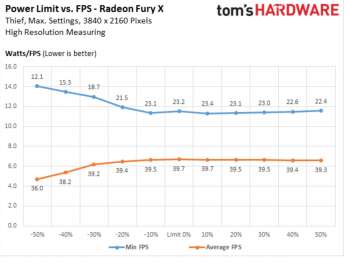

Graphics: Dual AMD Fury/Nano-based GPUs in good/better/best configurations

Interfaces: 6x Thunderbolt 2 (DisplayPort), 4x USB 3.1 (reversible Type-C plug), 2x legacy USB 3.0 (flat Type-A plug), HDMI

Other: no case change, "Mac Pro 6,2" designation, base price $3,000, rising rapidly with CTO (configure-to-order) options

Apple Retina 5K Display announced at the same time, with specs similar to the Dell UP2715K Ultra HD 5K Monitor, and compatible with existing and updated 27" iMacs, a new Retina 21.5" iMac, the existing Mac Pro (2013), the new Mac Pro (2015), and high-spec'd MacBook Pros.

Scenario #2 - Broadwell

Tagline: "Can't update our specs, my ass!"

Availability: announced January-February 2016, shipping March-April 2016 (end of Q1, when Intel is expected to ship Broadwell-EP)

Processors: Xeon Broadwell-EP (E5 v4), configurable up to the highest core counts Intel offers at reasonably high clock speeds

Memory: 2400MHz DDR4 memory: configurable to 16GB, 32GB, 64GB, 128GB

Storage: PCIe-based 2GB/sec flash storage: configurable to 512GB, 1TB, 2TB

Graphics: Dual AMD Fury/Nano-based GPUs in good/better/best configurations

Interfaces: 6x Thunderbolt 3 / USB 3.1 (reversible), 2x legacy USB 3.0 (flat), HDMI, DisplayPort-to-Thunderbolt 3 adapter in the box

Other: possible slight case size increase to accommodate a larger internal heatsink, "Mac Pro 7,1" designation

Scenario #3 - Skylake

Tagline: "Good things come to those who wait ... a long, long time"

Availability: announced mid-2017 at WWDC, shipping over the following few months if Intel has ramped up chip production

Processors: Xeon Skylake-EP v5 processors, configurable up to 28 cores

Memory: 2400MHz DDR4 memory: configurable to 16GB, 64GB, 128GB, 256GB

Storage: PCIe-based 2+ GB/sec flash storage: configurable to 1TB, 2TB, 4TB

Graphics: Dual future AMD GPUs in good/better/best configurations

Interfaces: Thunderbolt 3, natch, plus whatever else the industry still considers useful at that point

My money is on #2, since they've already skipped over Haswell-EP and would only go with #1 if AMD Fury tech was what they were waiting for. #3 is just too long a wait, even for Apple's traditionally tortuous Mac Pro upgrade cycle.