Except I don't quite get who the ultimate target market of the XR stuff is - VR gaming/simulations, tethered (or even WiFi'd) to a stack of local "compute modules" doesn't sound like Apple playing to their own strengths.

That isn't how it works. I suspect that Apple has some really secondary VR-like things attached to the headset because there is a larger set of folks that have some 'Sci Fi' exposure to VR than to AR. But just as the iPhone didn't 'bet the farm' on gaming as its primary purpose, I really don't think it is likely that Apple's primary target here is VR at all.

Reportedly Apple nixed/killed the concept of running this as a tethered headset years ago. Ive and some others didn't want any wires (and wireless isn't all that tractable at higher resolutions), so the primary mode is to run the computations inside the headset.

Reports also indicate that there are two SoCs in the headset. When Apple had two GPUs inside the MP 2013, one was named the "Compute GPU" and the other the "Display GPU". If there are two SoCs inside the headset, then there is a more than decent chance they'll be given 'assignment' names in addition to some M-xx or X-xxx or whatever longitudinal product name Apple wants to slap on them to increment the numeric suffix on. They are probably not monomaniacally symmetric (so in that sense different from the MP's pair). One is probably specialized for sensor data fusion, screen handling, object inference, etc. (with a custom collection of fixed function units aimed at doing all of that at a much better Perf/Watt than general GPU/CPU/NPU cores can). The other is probably running more of the basic iOS/iPadOS workload that generates the video output that will be layered on top of reality. That video output is perhaps run through the first SoC on its way out to the screens.

One of those gets tagged "Compute" module because it is doing the compute part that the other one doesn't do.

There were several threads a while ago where folks had taken some patent about "mGPU workload distribution" and turned that into an utter fantasy about discrete GPUs. When you actually looked at the specific definition inside the patent, you could see (if not delusional) that those are internal GPU core clusters. There are lots of folks who "want to see" a discrete card that they can plug in, so they desperately mutate anything close into that. I suspect something very similar is going on here and that these very high end HPC compute cards are largely the result of the 'telephone game'.

These "compute modules" in some reports are running iOS (or a close derivative of iOS). Some computational box that plugs into a wall socket to do "Nvidia killer" GPU compute workloads... errr, why would Apple throw iOS at that task?

Most of the talk seems to be about AR and for that the money will be in something that can be worn stand-alone or as an iPhone peripheral. WWDC may be aimed at developers, but they're probably going to want developers to target mobile apps.

I suspect that "iPhone peripheral" will be a secondary, optional mode. Reportedly, there is a socket on the side so you could sit and tether it to a Mac as an "external display" for a period of time. They could do the same thing with the iPhone and also run 'notifications pushed to another device' like the Watch. I think those are somewhat placeholders until the xrOS software ecosystem gets fully populated with native stuff as opposed to 'quick hack' iPadOS ports (which probably do more to blow up battery life than they do to motivate a $3K price point).

I suspect it is a mistake to think this thing is like what the Apple Watch was when it came out: a device that was woefully dependent upon something else to do anything 'useful'. Lots of things point to Apple putting a 'game changing' amount of 'horsepower' inside the headset. Way, way, way more than anyone else has done so far.

And that will bring change. Change in the preconceptions of what can and cannot be done on the device. It has happened in PCs (in the '80s you still heard mainframe and minicomputer folks talking about how you need a 'real computer' to do blah blah blah). It happened with SSDs wiping out huge chunks of the HDD market. It happened with the smartphone.

Almost every other headset vendor out there is buying off-the-shelf chips from Qualcomm and others to power the headset. Who has done a large, completely custom SoC for one (at somewhat reasonable prices)? [ Microsoft has a coprocessor for HoloLens, but it doesn't handle the majority of the computation required. ]

If the rest of the Mac line up was not there, and Apple only had a $6K Mac Pro as the whole line up and tried to pitch that machine to folks who primarily just need to read email, surf the internet, and play a casual game, they probably wouldn't 'get it'. Whereas if you go into an extremely non-mainstream shop that does large scale video editing, the Mac Pro isn't that hard to sell at all. That's the problem at this point. There is a huge faction of folks who think Apple is 'talking' to them, and it really isn't. Apple will take your money if you want to buy one just because you can... but that isn't the point.

This headset should be aimed more at someone who has to fix/troubleshoot a $20M jet engine than at someone virtually trying on $20 dresses in a virtual world. At a $20M/year pro athlete's workouts more than at a housewife looking for a yoga session on Apple Fitness+. At a surgeon doing AR enhanced surgery more than at a parent looking for tele-medicine help for their baby with a fever.

It is a productivity enhancement device more than an "escape from reality" device.

For a Mac with multiple Mx Ultras it would make more sense to fit a bunch of them in a 19" Rack case, which is something likely to appeal to customers needing that sort of power anyway. You can already get a rack bracket for 2x studios - and if you just used the mainboards alongside a shared power supply you could greatly reduce the height, even more by making the units deeper and lower with the heatsink behind & connected via heatpipe.

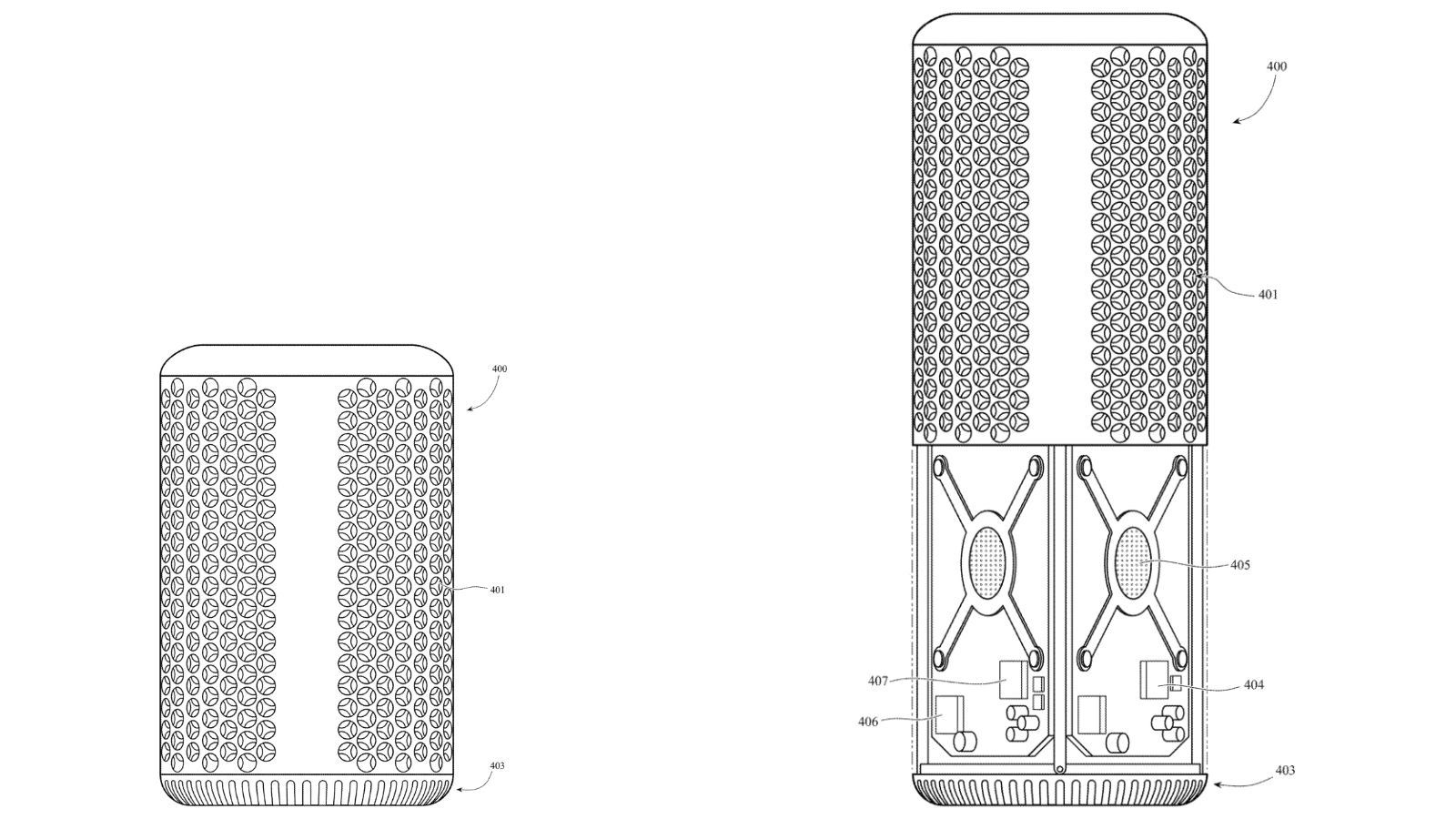

It would be simpler to just do a "Mac on a card". If each board is running an independent macOS instance, then just follow the normal PCI-e standard card practice of putting the power supply off card. Get rid of most of the external ports to cut down on the non-SoC components on the board (and other power drains). [ e.g., one Ethernet port, one TB port (video / DFU / etc.) and one USB-C port (run a KVM if you want to). And software Ethernet via PCI-e back to the host backplane/bus. ]

Apple wouldn't have to do the "rack chassis". The cards could just fit into other boxes (like the Mac Pro) or any other 3U-5U box that takes a full length, full height card (all the 400-700W GPU cards popping up mean those will be around even in non-OAM card form).

Effectively it would still be a Mac. Just one that wasn't completely enclosed inside of eco-friendly, recycled aluminum. (Not a "compute module" but a complete Mac system: SoC, SSD, ports, fan(s), etc.)

Could do a fanless one by just recycling the Afterburner card and substituting a Mn Pro (?) (plus an SSD and a few ports) for the FPGA, if all that fit inside of a 75W budget.