Why I use an assortment of GPU-related performance tools

I'm currently consolidating my GPUs. My current goal is to put most of my 57 GPU processors that have at least 3G of VRAM into seven systems: two 4-GPU-processor systems, three 8-GPU-processor systems and two 12-GPU-processor systems [Yes, I'm not ready to give up that dream of >8 GPUs in a system, not just yet anyway]. As for 20 of my GTX 590 1.5Gs (each with two GPU processors) and 4 of my GTX 480s, they'll hopefully enjoy being housed in six 4-GPU-card systems (five of which will really be 8-GPU-processor systems, because the 590s are 2-GPU-processor cards). This move is intended to help me better control my application licensing fees (they're charged per system, not per card) and to cut down on the number of systems that I have to monitor. In sum, my goal is to cut my total number of systems roughly in half.
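A quick arithmetic check of the planned layout, as a minimal Python sketch. The system and card counts come from the paragraph above; the variable names are my own, and I'm assuming the sixth 4-card system is the one holding the four GTX 480s.

```python
# GPU processors per system for the >=3G VRAM group:
# two 4-GPU, three 8-GPU and two 12-GPU systems.
big_vram_systems = [4] * 2 + [8] * 3 + [12] * 2
big_vram_slots = sum(big_vram_systems)  # 8 + 24 + 24 = 56

# Older cards: six 4-card systems. Five hold GTX 590s (2 GPU processors
# per card); the sixth (assumed) holds the four GTX 480s (1 per card).
gtx590_cards, gtx480_cards = 20, 4
gtx590_systems = gtx590_cards // 4  # 5 systems
gtx480_systems = gtx480_cards // 4  # 1 system
old_systems = gtx590_systems + gtx480_systems
old_processors = gtx590_cards * 2 + gtx480_cards * 1  # 40 + 4 = 44

total_systems = len(big_vram_systems) + old_systems  # 7 + 6 = 13

print(big_vram_slots, old_systems, old_processors, total_systems)
```

So the seven big-VRAM systems offer 56 slots for the 57 processors ("most" of them), and the whole fleet lands on 13 systems.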

I'll be running mainly Octane Render [http://render.otoy.com/features.php], FurryBall */ and TheaRender [https://www.thearender.com/site/index.php/features.html] on the systems with at least 3G of VRAM. I intend to run RedShift 3d and TheaRender on the Fermi systems, i.e., those with 2G of VRAM or less (that includes my GTX 690). RedShift 3d already has out-of-core rendering **/ implemented. TheaRender is a hybrid renderer that can run on CPUs only, on GPUs only, or on CPUs and GPUs simultaneously.

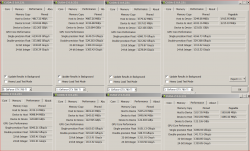

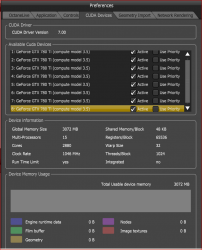

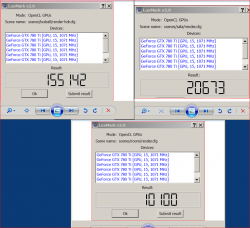

I had never installed iStat until Sedor mentioned it in his earlier post here, and I thank him for the reference. While iStat looks like a useful utility, I also need utilities that are tied more closely to the persistent, proper functioning of my GPUs and to the application at hand. A general system utility such as iStat can recognize a GPU that is not operating properly in the application at issue (for example, one that intermittently downclocks), and it might not reveal that problem. That's why I'll also use CUDA-Z, GPU-Z, EVGA's Precision X and MSI's Afterburner, along with the OS X and CUDA developer tools that I mentioned in my post above, as well as the rendering applications' built-in utilities (like those in Octane), to help ensure that all is constantly well.
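To make the downclocking point concrete, here is a minimal Python sketch that is not tied to any of the tools named above. It assumes you already have periodic core-clock readings (logged by whatever utility you use) and flags the stretches where the clock sat noticeably below what you expect under a render load. `ClockSample`, `find_downclock_episodes`, the 10% tolerance and the readings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClockSample:
    t_seconds: float  # time the reading was taken
    core_mhz: int     # reported core (graphics) clock


def find_downclock_episodes(samples, expected_mhz, tolerance=0.10):
    """Return (start, end) time spans where the core clock stayed below
    expected_mhz * (1 - tolerance). A span ends at the first reading that
    recovers; a span still open at the last reading ends there."""
    threshold = expected_mhz * (1.0 - tolerance)
    episodes = []
    start = None
    for s in samples:
        low = s.core_mhz < threshold
        if low and start is None:
            start = s.t_seconds  # a low-clock stretch begins
        elif not low and start is not None:
            episodes.append((start, s.t_seconds))  # clock recovered
            start = None
    if start is not None:
        episodes.append((start, samples[-1].t_seconds))
    return episodes


# Made-up readings from one GPU during a render, one per second.
readings = [ClockSample(0, 607), ClockSample(1, 607), ClockSample(2, 405),
            ClockSample(3, 405), ClockSample(4, 607), ClockSample(5, 300)]
print(find_downclock_episodes(readings, expected_mhz=607))
```

A GPU that shows up fine in a system utility but produces episodes like these during a render is exactly the case a general-purpose monitor can miss.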

*/ FurryBall's "Two For The Price Of One" sale ends February 16, 2015. [http://furryball.aaa-studio.eu]

**/ "Out-of-Core Architecture

Redshift uses an out-of-core architecture for geometry and textures, allowing you to render massive scenes that would otherwise never fit in video memory.

A common problem with GPU renderers is that they are limited by the available VRAM on the video card, that is, they can only render scenes where the geometry and/or textures fit entirely in video memory. This poses a problem for rendering large scenes with many millions of polygons and gigabytes of textures.

With Redshift, you can render scenes with tens of millions of polygons and a virtually unlimited number of textures with off-the-shelf hardware." [https://www.redshift3d.com/products/redshift]
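To illustrate the general idea behind an out-of-core design (not Redshift's actual implementation, which I don't know), here is a minimal Python sketch: the full scene data lives in host memory, and a fixed VRAM budget acts as a least-recently-used cache, with chunks uploaded on demand and the oldest evicted when the budget is full. `OutOfCoreCache`, the chunk names and the sizes are all hypothetical.

```python
from collections import OrderedDict


class OutOfCoreCache:
    """Hypothetical LRU cache standing in for a GPU's memory budget."""

    def __init__(self, vram_budget_mb):
        self.budget = vram_budget_mb
        self.used = 0
        self.resident = OrderedDict()  # chunk name -> size in MB, oldest first
        self.uploads = 0               # host -> GPU transfers (the slow part)

    def access(self, name, size_mb):
        if size_mb > self.budget:
            raise ValueError(f"{name} alone exceeds the VRAM budget")
        if name in self.resident:
            self.resident.move_to_end(name)  # mark as most recently used
            return
        # Evict least recently used chunks until the new one fits.
        while self.used + size_mb > self.budget:
            _, evicted_size = self.resident.popitem(last=False)
            self.used -= evicted_size
        self.resident[name] = size_mb
        self.used += size_mb
        self.uploads += 1


# A 3G card working through 5G of texture data in 1G chunks.
cache = OutOfCoreCache(vram_budget_mb=3072)
for name in ["tex_a", "tex_b", "tex_c", "tex_d", "tex_e", "tex_a"]:
    cache.access(name, 1024)
print(list(cache.resident), cache.uploads)
```

The second use of `tex_a` forces a re-upload because it had already been evicted; those extra host-to-GPU transfers are the price of fitting a scene larger than VRAM.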

Octane is beginning to implement this feature with the recent release of Version 2.21.1, calling it "Out-of-core Textures." "Out-of-core textures allow you to use more textures than would fit in the graphic memory, by keeping them in the host memory. Of course, the data needs to be sent to the GPU while rendering therefore slowing the rendering down. If you don't use out-of-core textures rendering, speed is not affected.

Out-of-core textures come also with another restriction: They must be stored in non-swappable memory which is of course limited. So when you use up all your host memory for out-of-core textures, bad things will happen, since the system can't make room for other processes. Since out-of-core memory is shared between GPUs, you can't turn on/off devices while using out-of-core textures.

You can enable and configure the out-of-core memory system via the application preferences. For net render slaves you can specify the out-of-core memory options during the installation of the daemon." [http://render.otoy.com/forum/viewtopic.php?f=33&t=44704&hilit=out+of+core]
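The trade-off described in those release notes can be sketched as a simple placement plan. This is a minimal Python sketch under my own assumptions, not how Octane actually decides placement: textures stay in VRAM while they fit, overflow goes to one page-locked (non-swappable) host pool shared by all GPUs, and anything beyond that pool is an error rather than something the OS can swap out. `plan_texture_placement`, the texture names and the sizes are hypothetical.

```python
def plan_texture_placement(texture_sizes_mb, vram_for_textures_mb, pinned_pool_mb):
    """Hypothetical planner: keep textures on the GPU while they fit and spill
    the rest into a single pinned host pool shared by all GPUs.
    Returns (in_core, out_of_core) lists of texture names."""
    in_core, out_of_core = [], []
    vram_used = pinned_used = 0
    # Largest textures first is an arbitrary choice for this sketch.
    for name, size in sorted(texture_sizes_mb.items(), key=lambda kv: -kv[1]):
        if vram_used + size <= vram_for_textures_mb:
            in_core.append(name)
            vram_used += size
        elif pinned_used + size <= pinned_pool_mb:
            out_of_core.append(name)  # must be streamed to the GPU: slower
            pinned_used += size
        else:
            # Pinned memory can't be swapped, so there is nowhere left to go.
            raise MemoryError(f"{name} fits neither in VRAM nor in the pinned pool")
    return in_core, out_of_core


textures = {"skin": 900, "terrain": 1500, "sky": 400, "props": 700}
print(plan_texture_placement(textures, vram_for_textures_mb=2000, pinned_pool_mb=2048))
```

Shrinking `pinned_pool_mb` to 1000 in this sketch makes the plan fail, which mirrors the release notes' warning that the non-swappable pool is a hard limit.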

Furthermore, Octane's FAQ page has been revised to state the following:

"OctaneRender 2.0 or older texture count limitations (Versions 2.01 and onward do not have these limitations) [Emphasis Added.] ..." [http://render.otoy.com/faqs.php - click "Hardware and Software."] Looks like someone forgot to reconcile the version numbers, however.