
AMD Confirms RX 480 At $199 USD, Other APU & Polaris Announcements

- Thread starter lowendlinux


The amount of VRAM has a really marginal effect on GPU performance. Yes, it lets you work with 4K data, for example. But in terms of overall performance it has a minor effect. Compute performance in TFLOPS is what matters most.

This is simply not true for the BruceX 5K video benchmark. It's rendering 5K video, per the web page:

https://blog.alex4d.com/2013/10/30/brucex-a-new-fcpx-benchmark/

BruceX is a small Final Cut Pro X XML file that you import into Final Cut Pro. It creates a very short timeline at the highest possible standard resolution that Final Cut can handle: 5120 by 2700 (at 23.975 fps). It uses standard Final Cut generators, titles and transitions. As it uses many layers of complex content, it requires lots of GPU RAM.

This is why folks with 2GB cards get really terrible performance in this benchmark, for example. Show me another single-GPU setup that can come close to the score I got with my 12GB TITAN X. I'm sure the next-generation cards with 8GB will be comparable, but video memory is hugely important for that specific test.
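To put rough numbers on why 5K hurts small cards, here is a back-of-envelope sketch of uncompressed frame-buffer sizes. It assumes 8 bytes per pixel (RGBA at 16-bit half float per channel). That is a hypothetical choice, since the thread doesn't say what pixel format FCPX uses internally. It also ignores every other allocation on the card.

```python
# Rough per-frame memory footprint at different resolutions.
# ASSUMPTION (hypothetical): 8 bytes per pixel, i.e. RGBA with 16-bit
# half-float channels. FCPX's real internal format is not stated here.

BYTES_PER_PIXEL = 8

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "UHD 4K": (3840, 2160),
    "BruceX 5K": (5120, 2700),  # timeline size from the BruceX page
}


def frame_bytes(width: int, height: int, bpp: int = BYTES_PER_PIXEL) -> int:
    """Size of one uncompressed frame buffer in bytes."""
    return width * height * bpp


def layers_that_fit(vram_gib: float, width: int, height: int) -> int:
    """Full-resolution frame buffers that fit in VRAM, ignoring all other allocations."""
    return int(vram_gib * 1024**3 // frame_bytes(width, height))


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        mib = frame_bytes(w, h) / 1024**2
        print(f"{name:>10}: {mib:6.1f} MiB/frame, "
              f"{layers_that_fit(2, w, h):3d} buffers in 2 GiB, "
              f"{layers_that_fit(12, w, h):4d} in 12 GiB")
```

Under these assumptions, a 5K frame is about 6.7 times the size of a 1080p frame. A multi-layer 5K timeline therefore exhausts a 2 GB card far sooner than it does at 1080p.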

Well, you've expressed exactly my thoughts. If you edit video up to 1440p, VRAM will have a very minor impact on performance. If you edit 4K video or higher, it is a completely different story.

Currently, 80% of the video market is still no higher than 1080p. That is what I meant by overall performance.


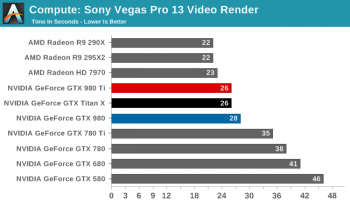

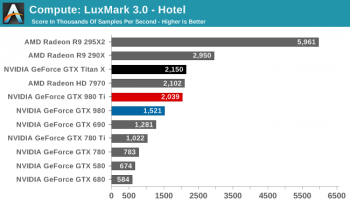

And just to emphasise that, here's the video rendering and OpenCL performance of the 980 Ti/Titan X versus the older Radeon cards.

Luxmark

http://images.anandtech.com/graphs/graph9306/74793.png

Sony Vegas

http://images.anandtech.com/graphs/graph9306/74797.png

Nvidia's expensive high-end cards look slow here. Great for games, but not for professional use.

On OS X, the GeForce cards perform much slower than on Windows. The Radeons don't suffer from such a large gap between the two operating systems.


Three new mobile GPUs. There's no way they are all targeted at the rMBP16 only, which means at least two new products with dGPU options. Maybe Apple is planning two dGPU options on the rMBP16 and an updated 5K iMac with another two or three dGPU options (the same high end as the rMBP16 plus a more powerful one), as in the current Retina iMac/rMBP lineup.

The bad news is that there's no new MP on the horizon until AMD releases Vega 10, or unless Apple adopts Nvidia again for the MP line.


Little sidenote:

you have 3 GB of VRAM.

Each card has its own VRAM. It doesn't combine. You have two cards with 3 GB each.

TBH, I really don't know, but I don't think FCPX mirrors the VRAM. Is FCPX (OpenCL?) using a technique similar to CrossFire?

If he does need more VRAM to go with that 7000 series, there are 7970s with 6GB for about 110-120 bucks. Dual-GPU 7990s you can pick up for 200 bucks. You can even pick up a monster 295X 8GB for about 300, and the price is dropping fast. These require more power, though, but have very high OpenCL performance... without any of the bugs Nvidia has been having problems with.

Has anyone actually gotten the 7970 6G or the 7990 to work properly in a Mac Pro under OS X?


I did a search. Someone got the 6G to work but said it was better unflashed: the EFI was causing OpenCL crashes. Most threads about the 7970 back then turned into Internet fights about power consumption.

I can't find any confirmation about the 7990, except that if it were detected, it would show up as two cards, not one.

Your dual 7950s or a pair of 280-series cards are the safest bet. But if RX 480 support comes along, that will be tremendous: two cards and no power mods to do, as long as Sierra can be installed.


I'd be very interested to see whether 480s in CrossFire would work and how they'd perform. It would be cheaper to get two of these than a 1070, with (potentially) better performance than a 1080! But then, it might be worth seeing what we get from Vega.


Yes, it is always mirrored in OpenCL apps currently (FCPX for sure).

Newer frameworks like Mantle and DX12 allow for stacking, as far as I know, but the app or game has to be specifically optimized and programmed to do that.
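Here is a toy model of what mirroring versus stacking means for usable memory. It follows the thread's description: mirrored OpenCL apps keep a full copy of the working set on every card, while split-aware apps partition data across cards. The mode names and the function are hypothetical, and real split workloads usually duplicate some resources, so "split" is a best-case upper bound.

```python
# Hypothetical model of usable VRAM across multiple GPUs.
# "mirrored": every GPU holds a full copy of the working set, so usable
#             capacity is capped by the smallest card.
# "split":    the app explicitly partitions data between GPUs, so the
#             capacities add up (best case, ignoring duplicated resources).

from typing import Sequence


def usable_vram_gb(per_card_gb: Sequence[float], mode: str) -> float:
    if not per_card_gb:
        raise ValueError("need at least one GPU")
    if mode == "mirrored":
        return min(per_card_gb)
    if mode == "split":
        return sum(per_card_gb)
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    dual_7950 = [3, 3]
    print(usable_vram_gb(dual_7950, "mirrored"))  # 3
    print(usable_vram_gb(dual_7950, "split"))     # 6
```

This is why a dual 7950 setup behaves like a 3 GB card in FCPX rather than a 6 GB one.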

VRAM also has a HUGE benefit for Apps like FCPX.

That is why I'm really hoping for 1080/1070 support on the cMP, or one of the newer AMD cards, because the RAM makes so much difference. Look at the Barefeats BruceX benchmark for the 980 vs. the 980 Ti: the difference is so big because of the additional 2 GB of RAM.

The 1080's OpenCL performance is very weak, though. At 1700 MHz it loses to, or ties with, cards clocked 600 MHz lower in LuxMark. That is on Windows; GeForce cards are even slower at OpenCL on the Mac.


Thanks for the info. But I figure it will be a big improvement over the 7950 with 3GB?

And from what I see, it would be a big improvement over the GTX 980 for me because of the amount of VRAM, even if compute performance is not much better. At least, that is my reasoning.


Over the 7950, yes. But the cost is very high compared to the Radeon Fury, which has better OpenCL and video rendering performance. Polaris and Vega should continue to soundly beat GeForce.

I'm in a conundrum too. I sold my 680 and 980. Now my cMP has the GT120 and my Skylake workstation only has the iGPU. I need to get two graphics cards soon, and I really want to make an optimal choice that strikes a good balance between price, gaming performance and hassle-free workstation performance.


Great. Looking forward to a solution for running 480s in the cMP, in tandem.

I see, I never thought about that. But anyway, my dual 7950s can finish BruceX in 15 seconds. It's still hard to believe that VRAM is so important in this test; otherwise my effective 3GB VRAM setup should perform very poorly.

Also, the 980 Ti itself is much, much faster than the 980, regardless of its extra VRAM. If the Titan X performed much better in BruceX than the 980 Ti, it would be easier to conclude that the difference is due to VRAM.

May I know the core clocks on your GPUs? Thanks in advance.

I just use the stock 800MHz (VRAM 1250MHz) settings. I overclocked them some time ago but didn't feel a significant difference (maybe because I also limited the power draw to avoid pulling too much from the 6-pins), so I'm back to stock settings now.

In rendering tasks like this, frames move into video memory and back out once they have been rendered, so it's not that easy to fill all the VRAM. Rendering isn't as memory-intensive as real-time video playback from the timeline.

So you have 5.7 TFLOPS of compute power overall across both GPUs.
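The 5.7 TFLOPS figure follows from the usual peak-throughput formula: shaders × 2 operations per clock (one fused multiply-add) × clock. This sketch assumes the HD 7950's 1792 stream processors and the 800 MHz stock clock mentioned in the thread. It is a theoretical peak, and real workloads rarely reach it.

```python
# Peak single-precision throughput:
#   FLOPS = shaders x ops_per_clock x clock
# where ops_per_clock = 2 because one fused multiply-add counts as 2 ops.
# ASSUMPTION: HD 7950 = 1792 stream processors at the 800 MHz stock clock.

def peak_tflops(shaders: int, clock_mhz: float, ops_per_clock: int = 2) -> float:
    return shaders * ops_per_clock * clock_mhz * 1e6 / 1e12


if __name__ == "__main__":
    one_card = peak_tflops(1792, 800)
    print(f"one HD 7950:  {one_card:.2f} TFLOPS")      # 2.87
    print(f"two HD 7950s: {2 * one_card:.2f} TFLOPS")  # 5.73
```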


I see, this could explain why my render times are pretty good (e.g. BruceX), but playback isn't necessarily smooth when I edit 4K video with some filters. (I can't remember whether playback is always smooth once background rendering has completed, but before it completes, playback can be quite choppy.)

The 480 is just a mid-range card, so comparing it to the 1070/1080 might not be fair; maybe look at the 1060 when it's out instead.

It's interesting to me because it's cheap, uses only one 6-pin connector, and looks like it might get native OS X support. On the Nvidia side, the GTX 670/680/770 (760?) are the last cards with native OS X drivers (no boot screen, but no one needs that), and we'll just have to wait and see whether any of the 10xx cards will even work in OS X, which is a pain.

It depends. When I did a clean install of 10.12, I still needed the boot screen to use the recovery partition; otherwise I couldn't enable TRIM.

https://semiaccurate.com/forums/showpost.php?p=266518&postcount=2022

At stock clocks the GPU performs slightly faster than the R9 390X. With the stock 6-pin connector, the maximum core clock is 1400 MHz.

There will be 6+8-pin versions of the RX 480 running at over 1600 MHz.

Power consumption for the RX 480 under load is around 100W.

We have received a tip from a reader claiming he was able to run an Ethereum hashrate test on the upcoming AMD Radeon RX 480 GPUs, providing us with a photo as proof that we are publishing here. Do note that for the moment we cannot confirm this result for sure, but it does seem legit enough for us to consider it highly possible. Unfortunately, the hashrate apparently achieved at stock frequencies is a bit disappointing at just around 24 MH/s for Ethereum Dagger-Hashimoto mining with about 100W; we are also told that with a memory overclock, 26-28 MH/s is possible at about 120W of power usage. If these results turn out to be true, the Radeon RX 480 would not be as great for mining Ethereum as we suspected recently. On the other hand, the RX 470 could end up being a much more interesting choice for low-power Ethereum mining if it manages to achieve the same hashrate at a better price when it hits the market sometime next month. Guess we'll have to wait a bit longer for confirmation of the actual RX 480 Ethereum hashrate, but with the 256-bit memory bus these results unfortunately track the pessimistic expectations we already had rather than the optimistic ones…
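The remark about the 256-bit bus makes sense because Ethash is memory-bound. Each hash performs 64 DAG accesses of 128 bytes (ACCESSES and MIX_BYTES in the Ethash spec), so bandwidth sets a hard ceiling on hashrate. This is a back-of-envelope sketch that assumes 8 Gbps GDDR5 on a 256-bit bus; the 4 GB model may ship with slower memory. The 24 and 28 MH/s inputs are the unconfirmed figures from the quoted tip.

```python
# Upper bound on Ethash hashrate from memory bandwidth.
# Per the Ethash spec: 64 accesses x 128-byte mix = 8192 bytes read per hash.
# ASSUMPTION: RX 480 with a 256-bit bus at 8 Gbps effective per pin.

ACCESSES = 64
MIX_BYTES = 128
BYTES_PER_HASH = ACCESSES * MIX_BYTES  # 8192


def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s (decimal gigabytes)."""
    return bus_width_bits * data_rate_gbps / 8


def max_ethash_mhs(bandwidth: float) -> float:
    """Theoretical hashrate ceiling in MH/s for a bandwidth given in GB/s."""
    return bandwidth * 1e9 / BYTES_PER_HASH / 1e6


def mhs_per_watt(mhs: float, watts: float) -> float:
    return mhs / watts


if __name__ == "__main__":
    bw = bandwidth_gb_s(256, 8.0)
    print(f"bandwidth: {bw:.0f} GB/s, ceiling: {max_ethash_mhs(bw):.2f} MH/s")
    # Figures from the quoted (unconfirmed) tip:
    print(f"stock:     {mhs_per_watt(24, 100):.3f} MH/s per W")
    print(f"memory OC: {mhs_per_watt(28, 120):.3f} MH/s per W")
```

On these assumptions, the ceiling is about 31 MH/s, so 24-28 MH/s is already most of what the bus can deliver.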

Power consumption at a 1400 MHz core clock is around 120W.

And this is the maximum possible core clock for the RX 480 with the stock 6-pin connector. The GPU will come very close to its bigger counterparts in DX12 applications.
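The 6-pin ceiling comes down to the board power budget. The PCIe specifications allow 75 W from the x16 slot, 75 W from a 6-pin connector and 150 W from an 8-pin connector. This sketch compares those nominal budgets with the 120W figure quoted above. The helper names are hypothetical, and real cards and BIOS power limits may not use the full budget.

```python
# Nominal board-power budget from the PCIe power limits:
#   x16 slot: 75 W, 6-pin connector: 75 W, 8-pin connector: 150 W.
# The load figure used below is the one quoted in the thread, not a measurement.

from typing import Sequence

SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def board_budget_w(connectors: Sequence[str]) -> int:
    """Slot power plus the rated power of each auxiliary connector."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


def headroom_w(connectors: Sequence[str], load_w: float) -> float:
    return board_budget_w(connectors) - load_w


if __name__ == "__main__":
    for label, conns in [("reference (6-pin)", ["6-pin"]),
                         ("custom (6+8-pin)", ["6-pin", "8-pin"])]:
        print(f"{label}: budget {board_budget_w(conns)} W, "
              f"headroom at 120 W: {headroom_w(conns, 120):.0f} W")
```

A 6-pin card has a 150 W budget, leaving only about 30 W above the quoted 1400 MHz draw. That is consistent with 6+8-pin versions being needed for 1600 MHz and beyond.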

They say it can hit 1500 MHz on air, but the OC utility is Windows-only.
