Hi,

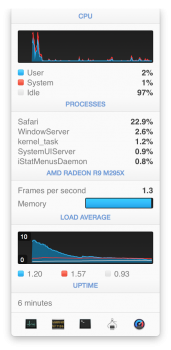

I monitored the temps of my system, and observed the following:

* CPU maxes out around 89 deg. C.

* GPU hits 101 deg. C after about 10 minutes, then the fan ramps up and it drops to around 97 deg. C, where it stays from then on.

I didn't notice any loss of performance during the drop in GPU temp.
