That website is misleading. Metal 3 and the Metal backend for Pytorch are two different things. It looks like the marketing people didn't know where to put that Pytorch can use Apple's GPU, and put it with the Metal enhancements for games.

Metal Overview - Apple Developer

Metal powers hardware-accelerated graphics on Apple platforms by providing a low-overhead API, rich shading language, tight integration between graphics and compute, and an unparalleled suite of GPU profiling and debugging tools.

developer.apple.com


Apple Silicon deep learning performance

- Thread starter gl3lan


> how about the updates to the shaders in metal 3?

That is a potential way of improving performance, but I don't think anything changed for compute with MPS. The focus was more on graphics. They do mention higher efficiency, but who knows what that means, as they refer to it in the context of raytracing.

I think it's also too early to report on beta versions, as there are too many bugs or unexplained issues going on right now which is unacceptable for productive work and only acceptable for trying a few things. See the latest issue here: https://github.com/pytorch/pytorch/issues/84936

It's frustrating.

> Should Apple adopt this format?

While I'm here, let me quickly comment on it. Eventually Apple will have to adopt it, once others do. This is all highly experimental for now, but the benefits can definitely be there for FP8, particularly when it comes to the whole green AI thing. However, Bill Vass and his team at Amazon AWS already played around with FP8 and also FP4, and so far there isn't a general result that says "everyone should do this now". I think it's going to be some time before we see any real progress on "new" data formats. There's also some Canadian company working on new hardware tailored specifically for DL, with scaling in mind. Haven't heard anything new for a while now. I forget the name, it's a former AMD engineer who started it IIRC.

FP8 Formats For Deep Learning

FP8 is a natural progression for accelerating deep learning training inference beyond the 16-bit formats common in modern processors. In this paper we propose an 8-bit floating point (FP8) binary interchange format con…

www.arxiv-vanity.com
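For anyone wondering what an 8-bit float actually looks like: the paper linked above describes two encodings, E4M3 (4 exponent bits, 3 mantissa bits) and E5M2 (5 exponent bits, 2 mantissa bits). The snippet below is only a rough sketch of how a single byte could be decoded under those layouts, based on my reading of the paper rather than anything posted in this thread. The function names are made up for illustration, and this is not how any framework implements it.

```python
import math


def decode_fp8(byte: int, exp_bits: int, man_bits: int, ieee_specials: bool) -> float:
    """Decode one FP8 bit pattern (0..255) into a Python float.

    ieee_specials=True  : top exponent code is reserved for inf/NaN (E5M2 style).
    ieee_specials=False : only the all-ones pattern is NaN, no infinities (E4M3 style).
    """
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in 0..255")
    if exp_bits + man_bits != 7:
        raise ValueError("sign + exponent + mantissa must total 8 bits")

    sign = -1.0 if byte >> 7 else 1.0
    exp = (byte >> man_bits) & ((1 << exp_bits) - 1)
    man = byte & ((1 << man_bits) - 1)
    bias = (1 << (exp_bits - 1)) - 1
    exp_max = (1 << exp_bits) - 1
    man_max = (1 << man_bits) - 1

    if ieee_specials and exp == exp_max:
        return sign * math.inf if man == 0 else math.nan
    if not ieee_specials and exp == exp_max and man == man_max:
        return math.nan
    if exp == 0:
        # Subnormal: no implicit leading 1, fixed exponent of 1 - bias.
        return sign * (man / (1 << man_bits)) * 2.0 ** (1 - bias)
    # Normal: implicit leading 1.
    return sign * (1 + man / (1 << man_bits)) * 2.0 ** (exp - bias)


def decode_e5m2(byte: int) -> float:
    return decode_fp8(byte, exp_bits=5, man_bits=2, ieee_specials=True)


def decode_e4m3(byte: int) -> float:
    return decode_fp8(byte, exp_bits=4, man_bits=3, ieee_specials=False)


if __name__ == "__main__":
    print(decode_e4m3(0x7E))  # largest finite E4M3 value
    print(decode_e5m2(0x7B))  # largest finite E5M2 value
```

The trade-off is visible in the largest finite values: E4M3 spends its bits on precision and tops out at 448, while E5M2 trades a mantissa bit for range and reaches 57344.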

How about the updates to the shaders in Metal 3, which PyTorch uses?

Mesh shaders

This new geometry pipeline replaces vertex shaders with two new shader stages — object and mesh — that enable more flexible culling and LOD selection, and more efficient geometry shading and generation.

Why would PyTorch be using mesh shaders? It’s not a gaming engine.

> I think it's also too early to report on beta versions, as there are too many bugs or unexplained issues going on right now which is unacceptable for productive work and only acceptable for trying a few things. See the latest issue here: https://github.com/pytorch/pytorch/issues/84936
>
> It's frustrating.

Tensorflow Metal backend runs a little better, but not that much.

tensorflow-metal | Apple Developer Forums

Connect with fellow developers and Apple experts as you give and receive help on tensorflow-metal

Apple is very committed to it, and it gets much better with each iteration. However, you never know what operations have been ported to the GPU or what bugs have been fixed because Apple doesn't write change logs.

> Tensorflow Metal backend runs a little better, but not that much.

I tried Tensorflow on Metal before, but it also produced inconsistent results at the time. That's why I stopped fiddling with TF on Mac and switched to PyTorch. For more serious things I have to use both, but on Nvidia and it's more PyTorch these days than TF. Maybe I should try TF on Mac again, it's been a while.


It would be nice to try a few smaller things on AS machines, especially MBPs. Maybe even inference only, for quickly hooking up some robots in the lab and testing some stuff without having to go via git and other machines. I don't really think it's TF or PyTorch related; it's Apple's Metal that is causing this. As you say, they don't have detailed changelogs, so it's always a ton of work to figure out what's going on. George Hotz did a bunch of reverse engineering in the early M1 days to figure out how things work exactly, but that's not really feasible.

It would be really nice to see a list of supported features that actually work as intended for both TF and PyTorch with Metal. That way, people would know what they get. And while we have seen progress in the past years, both frameworks make general non-Metal related progress as well. What we'd really need is a schedule and timeframe when we catch up to things and when we can expect everything to work.

Apple is slowly improving the Tensorflow documentation.

developer.apple.com


However, the people writing information about new releases seem to have learned marketing rather than how to write proper changelogs.

Tensorflow Plugin - Metal - Apple Developer

Accelerate the training of machine learning models with TensorFlow right on your Mac.


> It would be really nice to see a list of supported features that actually work as intended for both TF and PyTorch with Metal.

There is no complete list yet, but at least the Pytorch developers list the new operations added in each release.

- Added aten::index_add.out operator for MPS backend (#79935)

- Added aten::prelu operator for MPS backend (#82401)

- Added aten::bitwise-not operator native support for MPS backend (#83678)

- Added aten::tensor::index_put operator for MPS backend (#85672)

- Added aten::upsample_nearest1d operator for MPS backend (#81303)

- Added aten::bitwise_{and|or|xor} operators for MPS backend (#82307)

- Added aten::index.Tensor_out operator for MPS backend (#82507)

- Added aten::masked_select operator for MPS backend (#85818)

- Added aten::multinomial operator for MPS backend (#80760)

Release PyTorch 1.13: beta versions of functorch and improved support for Apple’s new M1 chips are now available · pytorch/pytorch

Pytorch 1.13 Release Notes Highlights Backwards Incompatible Changes New Features Improvements Performance Documentation Developers Highlights We are excited to announce the release of PyTorch 1....

> There is no complete list yet, but at least the Pytorch developers list the new operations added in each release.

That is at least something. But the number of problems these releases have is beyond crazy. There's almost always some sort of fixing required beyond setting the device to mps, especially when using public code from papers, while on Linux it just works. Plus it is still very, very slow in some (most) cases. I'm currently trying RAFT for optical flow (from this paper: https://arxiv.org/pdf/2003.12039.pdf) on an M1 Max and it's painfully slow with mps.
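For reference, "setting device to mps" usually boils down to something like the sketch below. The helper names (`choose_backend`, `detect_device`) are made up for illustration; the only PyTorch calls used are `torch.cuda.is_available()` and `torch.backends.mps.is_available()`. It falls back to the CPU when no GPU backend is usable, which is an assumption about what you would want, not a recommendation from the PyTorch developers.

```python
def choose_backend(cuda_ok: bool, mps_ok: bool) -> str:
    """Return the preferred device name given which backends are usable."""
    if cuda_ok:
        return "cuda"
    if mps_ok:
        return "mps"
    return "cpu"


def detect_device() -> str:
    """Probe the local PyTorch install; report "cpu" if torch is missing."""
    try:
        import torch
    except ImportError:
        return "cpu"
    # torch.backends.mps only exists in PyTorch 1.12 and later.
    mps = getattr(torch.backends, "mps", None)
    mps_ok = mps is not None and mps.is_available()
    return choose_backend(torch.cuda.is_available(), mps_ok)


if __name__ == "__main__":
    print(detect_device())
```

In a training script you would then write `model.to(detect_device())` and move each batch the same way. For ops that have not been ported yet, launching with the environment variable `PYTORCH_ENABLE_MPS_FALLBACK=1` makes PyTorch run them on the CPU instead of raising an error, at the cost of extra copies.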

Does it benefit Apple in any way?

www.semianalysis.com


How Nvidia’s CUDA Monopoly In Machine Learning Is Breaking - OpenAI Triton And PyTorch 2.0

Over the last decade, the landscape of machine learning software development has undergone significant changes.

> Does it benefit Apple in any way?

Large scale? No. Software aside, Apple has a hardware problem... the M1 Ultra still doesn't beat a 1080Ti, so in that sense it's years behind. They need to up their game and introduce workstation or server/enterprise hardware if they want to play this game. The competition is RTX6000/8000 and at least A100. And once they manage to get close, the software kicks in. They can rely on 3rd party software like PyTorch, and that might get better over time, but Nvidia knows this and already reacted to it years ago.

On a side note, scalability might be another issue. I recently spoke to someone running a 4000 node A100 cluster. It's easy to use, for everyone. How do we scale macOS "servers" to that level? Slurm, OpenMP, ...?

As for Nvidia, they know there are alternatives to CUDA/cuBLAS/cuDNN, but is that really their focus these days? Over the past few years, Nvidia worked on other software. Omniverse with Metaverse applications took over, and everyone who isn't focused on theoretical research will benefit from it. Those with robotic applications will use Isaac Sim. Autonomous driving? Nvidia Drive. Genomics? Nvidia Clara. Medical diagnosis? Maybe Nvidia Kaolin. General physically accurate synthetic data generation? Nvidia Replicator. Digital Twins? A mix of all the Omniverse tools. There's literally something for everyone. Outside of that Nvidia ecosystem, there's literally nothing equivalent.

Sure, I could use CoppeliaSim instead of Isaac Sim for robotics, and I do for teaching, simply because it requires way less hardware and its learning curve isn't as steep as Isaac Sim's. Students can install it on their laptops for introductory courses. Research and advanced stuff? Not so much. The same argument can be made for Carla vs Drive.

So in addition to much more powerful hardware and well supported software, they need these tools, and I doubt they could rely on 3rd party (maybe open source) software for this.

For small home applications and playing around with very basic stuff like cats and dogs classifiers, sure, that's an option.

Is it getting easier to use Tensorflow on macOS?

blog.tensorflow.org


It appears that Tensorflow 2.13 supports FP16 and BF16 on Apple GPU.

TensorFlow 2.13 is the first version to provide Apple Silicon wheels, which means when you install TensorFlow on an Apple Silicon Mac, you will be able to use the latest version of TensorFlow. The nightly builds for Apple Silicon wheels were released in March 2023 and this new support will enable more fine-grained testing, thanks to technical collaboration between Apple, MacStadium, and Google.

What's new in TensorFlow 2.13 and Keras 2.13?

TensorFlow 2.13 has been released! We're highlighting the new Keras V3 format as default for .keras extension files and much more!

It appears that Tensorflow 2.13 supports FP16 and BF16 on Apple GPU.

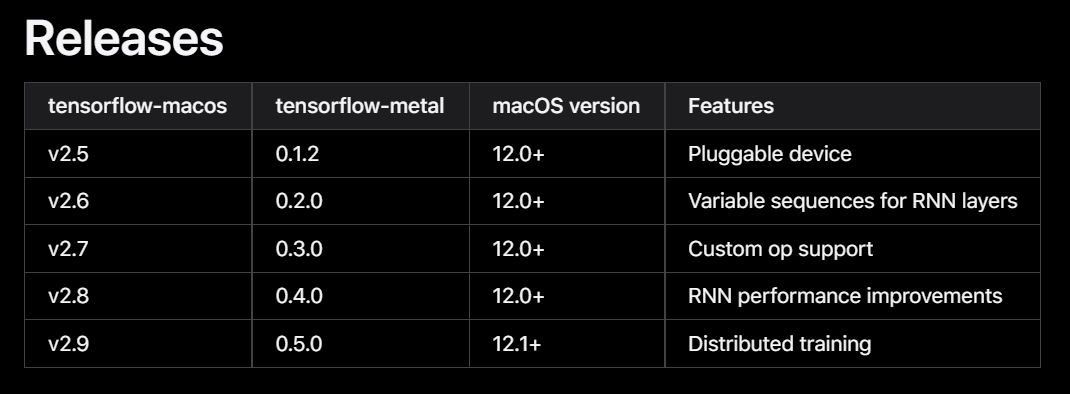

| tensorflow | tensorflow-metal | macOS | features |
|---|---|---|---|
| v2.5 | v0.1.2 | 12.0+ | Pluggable device |
| v2.6 | v0.2.0 | 12.0+ | Variable seq. length RNN |
| v2.7 | v0.3.0 | 12.0+ | Custom op support |
| v2.8 | v0.4.0 | 12.0+ | RNN perf. improvements |
| v2.9 | v0.5.0 | 12.1+ | Distributed training |
| v2.10 | v0.6.0 | 12.1+ | |
| v2.11 | v0.7.0 | 12.1+ | |
| v2.12 | v0.8.0 | 12.1+ | |
| v2.13 | v1.0.0 | 12.1+ | FP16 and BF16 support |


How is AI training on Apple Silicon nowadays? I'm doing a final project at uni with my M1, and from what I've seen, training still bugs out for some models and isn't reliable for others. Does anyone have experience with training on the M1 Macs? Thanks!

This is a blog post about Apple’s new Transformer-powered predictive text model.

jackcook.com


A look at Apple’s new Transformer-powered predictive text model

I found some details about Apple’s new predictive text model, coming soon in iOS 17 and macOS Sonoma.

> What about PyTorch?

Pytorch 2.1 was released this week. Unfortunately, the release notes don't indicate whether the MPS backend has stabilized or is still in beta.

- Add lerp implementation (#105470), logit (#95162), hardsigmoid (#95164), hypot (#95196), xlogy (#95213), log_sigmoid (#95280), fmax and fmin (#95191), roll (#95168), copysign (#95552), pow.Scalar (#95201), masked_scatter (#95743), index_fill (#98694), linalg.vector_norm (#99811), histogram (#96652), aminmax (#101691), aten::erfinv (#101507), cumprod (#104688), renorm (#106059), polar (#107324)

- Add optional minor argument to is_macos13_or_newer (#95065)

- Allow float16 input to float32 LayerNorm (#96430)

- Add higher order derivatives warning to max_pool2d (#98582)

- Expose mps package in torch (#98837)

- Prerequisite for MPS C++ extension (#102483)

Release PyTorch 2.1: automatic dynamic shape compilation, distributed checkpointing · pytorch/pytorch

PyTorch 2.1 Release Notes Highlights Backwards Incompatible Change Deprecations New Features Improvements Bug fixes Performance Documentation Developers Security Highlights We are excited to anno...

Microsoft was preparing Macs for OpenAI employees. 🤣🤣

The company is readying work spaces, training clusters and Macs to ensure OpenAI's workers can hit the ground running, the source said.

> Microsoft was preparing Macs for OpenAI employees. 🤣🤣

I don't find this surprising. An Ultra is one of the most cost-efficient computers for working with large model development. With models of that size RAM becomes the bottleneck and Apple Silicon has the biggest GPU RAM pools among workstations, while being considerably cheaper. Nvidia has much faster ML hardware, true, but the fastest hardware won't do much if you can't feed it with data.
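To put rough numbers on the RAM argument, here is a back-of-the-envelope sketch. It only counts the memory needed to hold the weights themselves (activations, optimizer state and caches come on top), and the 70-billion-parameter model and the 24 GB / 192 GB memory sizes are illustrative assumptions, not figures from this thread.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weight_memory_gb(n_params: int, dtype: str) -> float:
    """Memory for the weights alone, in GB (10**9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def weights_fit(n_params: int, dtype: str, memory_gb: float) -> bool:
    """True if the weights alone fit into the given amount of memory."""
    return weight_memory_gb(n_params, dtype) <= memory_gb


if __name__ == "__main__":
    n_params = 70_000_000_000  # hypothetical 70B-parameter model
    print(weight_memory_gb(n_params, "fp16"))   # GB for the weights in fp16
    print(weights_fit(n_params, "fp16", 24))    # hypothetical 24 GB discrete GPU
    print(weights_fit(n_params, "fp16", 192))   # hypothetical 192 GB unified memory
```

Under these assumptions the fp16 weights alone need 140 GB, which does not fit on a single 24 GB card but does fit into a 192 GB unified memory pool. Whether the GPU can then process them fast enough is a separate question.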

> Microsoft was preparing Macs for OpenAI employees. 🤣🤣

I'm highly doubtful that they run models on the Macs. Most likely they have clusters with some flavor of Linux and Nvidia GPUs. They probably just write code on the Macs and like Safari.

> I don't find this surprising. An Ultra is one of the most cost-efficient computers for working with large model development. With models of that size RAM becomes the bottleneck and Apple Silicon has the biggest GPU RAM pools among workstations, while being considerably cheaper. Nvidia has much faster ML hardware, true, but the fastest hardware won't do much if you can't feed it with data.

All San Francisco / SV software devs use Macs. It’s extremely rare to find one who doesn’t use a Mac.

It doesn’t mean they run any models locally. Most likely the models are run in the cloud or on some sort of on-premise Nvidia setup.

> I don't find this surprising. An Ultra is one of the most cost-efficient computers for working with large model development. With models of that size RAM becomes the bottleneck and Apple Silicon has the biggest GPU RAM pools among workstations, while being considerably cheaper. Nvidia has much faster ML hardware, true, but the fastest hardware won't do much if you can't feed it with data.

We are still waiting for the MacBook ULTRA with 18- to 20-inch and M3 ULTRA chip.

> We are still waiting for the MacBook ULTRA with 18- to 20-inch and M3 ULTRA chip.

That’s simply not going to happen.

> I'm highly doubtful that they run models on the Macs. Most likely they have clusters with some flavor of Linux and Nvidia GPUs. They probably just write code on the Macs and like Safari.

OpenAI models require a lot of computing power and Microsoft was going to provide it.

Our data shows one of OpenAI’s next training supercomputers in Arizona was going to have more than 75,000+ GPUs in a singular site by the middle of next year.

Our data also shows us that Microsoft is directly buying more than 400,000 GPUs next year for both training and copilot/API inference. Furthermore, Microsoft also has tens of thousands of GPUs coming in via cloud deals with CoreWeave, Lambda, and Oracle.

Microsoft Swallows OpenAI’s Core Team – GPU Capacity, Incentive Structure, Intellectual Property, OpenAI Rump State

OpenAI is nothing without its people.
