Pretty much a non sequitur, but sure! OpenAI models require a lot of computing power, and Microsoft was going to provide it.

Microsoft Swallows OpenAI’s Core Team – GPU Capacity, Incentive Structure, Intellectual Property, OpenAI Rump State

OpenAI is nothing without its people.

www.semianalysis.com

Apple Silicon deep learning performance

- Thread starter gl3lan

Yes. Most people I know do a lot of their work in the cloud, running in data centers and using big datasets, GPU/TPU processing power, and lots of drive space to build reasonably sized models. They certainly won't run anything but toy models on their Macs. These are desktop systems to work on and connect to larger clusters. A MacBook Air would be totally fine for that type of work, and I doubt they have availability issues with Nvidia hardware.

Even further, a lot of tech companies here in San Francisco / SV are switching to cloud IDEs and cloud dev environments, so the computer is just a shell. Macs are still used, though.

At my tech company, we're piloting this now with a few devs.

This looks cool.

MLX is an array framework for machine learning on Apple silicon, brought to you by Apple machine learning research.

GitHub - ml-explore/mlx: MLX: An array framework for Apple silicon

GitHub - ml-explore/mlx-examples: Examples in the MLX framework

That's certainly very interesting, but I have to wonder how much support it will see in the future. First Apple got involved in TF, then they pretty much abandoned it to get involved with PyTorch a while later, which looks like they forgot about as well. And now this is the next thing for them... the big question is for how long until it's abandoned?

Wish they would stick to something, fix it when issues are discovered and generally support it well.

I'll keep an eye on this. I just had to get rid of some money from this year's budget, so I ordered some more Mac Studios. So maybe I'll set up a small Mac MLX playground and see how well this works.

I think that's to misinterpret things.

Apple worked on TF and PyTorch because in both cases there was obvious value in writing the "drivers" (i.e. the glue code) that would route TF and PyTorch primitives to the appropriate Apple hardware, whether GPU or ANE. Once that was done, there was no need for Apple to stick around; their job is not to tell TF or PyTorch how to write new APIs.

Meanwhile MLX is maybe positioned somewhat in the same place as Metal, namely a set of preferred APIs and tools that are optimized (and will remain optimized) for Apple HW. The host for all ML code that Apple writes internally, and the suggested host for all external code where people are willing to do some extra work to get optimal Apple performance?
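To make the "drivers" idea a bit more concrete, here is a toy sketch of glue code that routes one framework-level primitive to a per-device kernel. Everything in it (the `KERNELS` registry, `register`, `dispatch`, and the device names) is hypothetical and is not how PyTorch's MPS backend or the TensorFlow Metal plugin is actually structured; it only illustrates the routing idea described above.

```python
from typing import Callable, Dict, List, Tuple

Kernel = Callable[[List[float], List[float]], List[float]]

# Hypothetical registry: (op name, device) -> kernel implementation.
KERNELS: Dict[Tuple[str, str], Kernel] = {}


def register(op: str, device: str) -> Callable[[Kernel], Kernel]:
    """Decorator that records a kernel for one op/device pair."""
    def wrap(fn: Kernel) -> Kernel:
        KERNELS[(op, device)] = fn
        return fn
    return wrap


@register("add", "cpu")
def add_cpu(a: List[float], b: List[float]) -> List[float]:
    return [x + y for x, y in zip(a, b)]


@register("add", "gpu")
def add_gpu(a: List[float], b: List[float]) -> List[float]:
    # Placeholder for a call into a real GPU kernel (e.g. a Metal shader);
    # here it just computes the same result on the CPU.
    return [x + y for x, y in zip(a, b)]


def dispatch(op: str, device: str, *args: List[float]) -> List[float]:
    """Route a framework-level op to a device kernel, falling back to the CPU one."""
    kernel = KERNELS.get((op, device)) or KERNELS.get((op, "cpu"))
    if kernel is None:
        raise NotImplementedError(f"no kernel registered for {op!r}")
    return kernel(*args)


print(dispatch("add", "gpu", [1.0, 2.0], [3.0, 4.0]))  # [4.0, 6.0]
print(dispatch("add", "ane", [1.0], [2.0]))            # [3.0], via CPU fallback
```

The CPU fallback is loosely analogous to PyTorch's `PYTORCH_ENABLE_MPS_FALLBACK=1` environment variable, which sends ops the MPS backend doesn't implement to the CPU. The "incomplete backend" complaints later in the thread are essentially about how many entries such a table is missing, or gets wrong.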

The Metal backend for TensorFlow and PyTorch is still in beta, so Apple still has work to do. On top of that, I doubt the TensorFlow and PyTorch developers will support the Metal backend, so Apple should fix any issues with those frameworks on Apple hardware.

I hope Apple will create an MLX-based backend, at least for PyTorch.

I agree. So far, Apple's attempts to bring ML development to Apple Silicon have been full of half-baked efforts. They have released a series of incomplete, buggy backends, only to seemingly abandon them soon thereafter. The same goes for their fairly new ML Compute framework, which is now marked as deprecated.

MLX also seems incomplete, and the documentation is very minimal. I do appreciate that it is MIT-licensed and has a C++ implementation. Maybe the idea is to use MLX as a new backend for future TF/PyTorch/JAX compatibility? I’m a bit concerned that they are rolling their own Python API; surely they aren't considering challenging the big frameworks? I don’t see a world where that would be wise.

The problem is that these drivers are incomplete, buggy, badly documented, and closed source (at least for TF; not sure about the others). So the job is hardly done. MLX is just the latest preview-quality implementation in a series of related efforts, and Apple's track record here is not the most reliable.

True, and there is certainly value in having a high-quality abstraction layer that can be used to quickly write framework drivers. But as I wrote, I am a bit concerned that they are implementing their own JAX clone; it’s very easy to get lost in an effort like that, and I don’t see much chance for MLX to be widely adopted.

In general I would agree, but what Apple has delivered so far doesn't work as expected. It's buggy and produces unreliable results. We can't really use it for anything other than playing around and trying a few things. When the results can differ from running on a CPU (on any platform, including Apple's) or on other GPU platforms, it's really of no use. I'm not sure if that's fixed now, as everyone I know abandoned it too after waiting for bug fixes. That might be the reason why Apple never fixed it, though: everyone had already left.
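Some CPU/GPU disagreement is expected even from a correct backend, because floating-point addition is not associative and GPU kernels usually reduce in a different order than a CPU loop. A minimal plain-Python sketch (no GPU involved) of why results drift, and of comparing outputs with a tolerance rather than exact equality; the tolerance values are illustrative assumptions, and differences far beyond them would point to a genuine bug of the kind described above.

```python
import math
from typing import List


def sequential_sum(xs: List[float]) -> float:
    """Left-to-right sum, like a simple single-threaded CPU loop."""
    total = 0.0
    for x in xs:
        total += x
    return total


def pairwise_sum(xs: List[float]) -> float:
    """Tree-shaped reduction, closer to how a parallel GPU kernel combines partial sums."""
    if not xs:
        return 0.0
    if len(xs) == 1:
        return xs[0]
    mid = len(xs) // 2
    return pairwise_sum(xs[:mid]) + pairwise_sum(xs[mid:])


def results_match(cpu: List[float], gpu: List[float],
                  rel_tol: float = 1e-6, abs_tol: float = 1e-9) -> bool:
    """Element-wise comparison with (assumed) tolerances instead of exact equality."""
    return len(cpu) == len(gpu) and all(
        math.isclose(c, g, rel_tol=rel_tol, abs_tol=abs_tol) for c, g in zip(cpu, gpu)
    )


values = [0.1, 0.2, 0.3]
cpu_like = sequential_sum(values)  # (0.1 + 0.2) + 0.3
gpu_like = pairwise_sum(values)    # 0.1 + (0.2 + 0.3)
print(cpu_like == gpu_like)                   # False
print(results_match([cpu_like], [gpu_like]))  # True
```

Frameworks ship the same idea as helpers such as `numpy.allclose` or `torch.allclose`.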

No doubt it's incomplete. I'm fine with that for now, though. I just hope they haven't released it only to call it done. A monthly release with patch notes (what's been fixed, what new features were added) would be nice to have from now on. And no, I can't imagine they want to replace TF/PyTorch for everyone. That's not going to happen.

They do seem more passionate about this particular project, with devs actively answering questions on Twitter and Hacker News. Of course, only convenient questions ^^ We will see how it goes.

For me it's also very interesting, as I am currently developing a library for basic GPU algorithms (e.g. sort), and it would be a nice test to benchmark it against what Apple engineers wrote.
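For readers unfamiliar with GPU sorting: one classic GPU-friendly approach is the bitonic sorting network, whose compare-and-swap steps are data-independent, so every step can run fully in parallel. The sketch below runs those steps sequentially in Python and assumes a power-of-two input length. It is not the poster's library or anything in MLX, just an illustration of the technique.

```python
from typing import List


def bitonic_sort(values: List[int]) -> List[int]:
    """Sort a list whose length is a power of two with a bitonic sorting network.

    Each (k, j) stage is a set of independent compare-and-swap operations;
    on a GPU, every index i in a stage would typically get its own thread.
    """
    a = list(values)
    n = len(a)
    if n & (n - 1):
        raise ValueError("length must be a power of two")
    k = 2
    while k <= n:          # size of the bitonic sequences being merged
        j = k // 2
        while j > 0:       # distance between compared elements
            for i in range(n):
                partner = i ^ j
                if partner > i:
                    ascending = (i & k) == 0
                    if (a[i] > a[partner]) == ascending:
                        a[i], a[partner] = a[partner], a[i]
            j //= 2
        k *= 2
    return a


print(bitonic_sort([5, 1, 4, 2, 8, 7, 3, 6]))  # [1, 2, 3, 4, 5, 6, 7, 8]
```

It does more comparisons than a good CPU sort (O(n log² n)), but the fixed, branch-light access pattern is what makes it attractive on GPUs.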

Is the real conclusion that there's so much flux in this space that Apple, being Apple, simply aren't going to waste time continuing to support a model (TF and PyTorch) that they think is not very good? Same way they spent a few years with OpenGL and OpenCL, learned all the things that made these suck, and walked away from them?

I honestly don't know enough about this space to have opinions. I do know that there are always people wedded to old code who refuse to move to something new (hell, there are still people writing GL) and they tend to have very loud voices; but that doesn't change the fact that Apple has always been a company that will drop a technology (HW or SW) as soon as it thinks it has discovered something better.

Another possibility is that they believe they have laid out the path for how to improve TF and PyTorch on Apple Silicon, and now it's up to that open source community, that's *always* boasting about how fantastic it is, to implement the last few steps, now that Apple has made it clear what needs to be done?

One final question. Where does Mojo fit in all this? If Mojo is going to be the optimal way to execute Python/PyTorch going forward (everywhere, but especially on Apple Silicon) once again why would Apple bother continuing to support a sub-optimal solution?

This might sound confrontational, but it isn't meant to be! I genuinely do not know the answers to these questions; they're just issues that arise to me as aspects of what might be driving Apple's choices.

I can't imagine that Apple would be naive enough to think that major backers of TensorFlow and PyTorch, such as Google, Intel or Nvidia, will help optimize either of those frameworks on Apple hardware.

Yep. To be blunt, the reality here is that AI at Apple is a joke. Also, no serious AI researchers are doing experiments on Mac hardware.

At the most basic level, the Metal kernels from MLX are useful to PyTorch etc. Going a bit deeper, the library architecture will inform and give insight with respect to hardware and applications. As I see it, it’s a win-win.

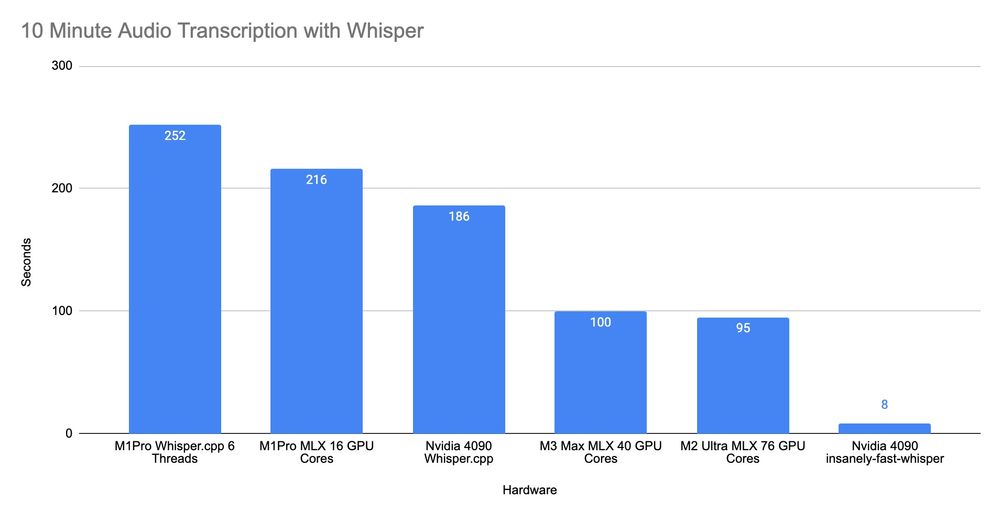

Some people have already started playing with MLX.

owehrens.com

Whisper: Nvidia RTX 4090 vs M1Pro with MLX (updated with M2/M3)

How fast is my Whisper Benchmark with the MLX Framework from Apple? Nvidia 4090 / M1 Pro / M2 Ultra / M3

Edit: The author of the post has added an RTX 4090 result using a more optimized implementation of Whisper for Nvidia GPUs.

I do prototyping in MATLAB before moving things over to PyTorch, and I know others (mostly engineering folk) who do the same. My current project involves piping high-resolution signals through two LSTMs and a 1D CNN. It would likely take months from start to finish on a CPU. Unfortunately, only Nvidia GPUs are usable in MATLAB, and PyTorch support and performance for other vendors is grim.

I can manage VRAM issues with smaller batches and other tricks (fitting my current project into the 16GB VRAM I have available is manageable), but I can't manage at all without GPU support. Until Apple improves PyTorch support and performance, they could offer 1TB VRAM for all I care. My work is impossible using CPU cores (except for inference steps within the pipeline which are actually a tad faster). A real shame.
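One of the usual "smaller batches" tricks is gradient accumulation: split a batch that doesn't fit in VRAM into micro-batches, add up their gradients, and take a single optimizer step. A framework-free sketch, assuming a hypothetical one-parameter linear model with mean-squared-error loss (not the poster's LSTM/CNN pipeline), showing that size-weighted micro-batch gradients reproduce the full-batch gradient.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def mse_grad(w: float, batch: List[Sample]) -> float:
    """d/dw of mean((w*x - y)^2) over the batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w: float, data: List[Sample], micro_batch: int) -> float:
    """Full-batch gradient computed one micro-batch at a time.

    Each micro-batch gradient is weighted by its share of the full batch, so the
    sum equals the full-batch mean gradient even if the last chunk is smaller.
    Only one micro-batch needs to be "in memory" at a time.
    """
    total = 0.0
    for start in range(0, len(data), micro_batch):
        chunk = data[start:start + micro_batch]
        total += mse_grad(w, chunk) * len(chunk) / len(data)
    return total


data = [(float(x), 3.0 * x + 1.0) for x in range(10)]
full = mse_grad(0.5, data)
accum = accumulated_grad(0.5, data, micro_batch=4)
print(full)                     # -151.5
print(abs(full - accum) < 1e-9) # True
```

In PyTorch the same idea usually means scaling each micro-batch loss by 1/N, calling `loss.backward()` per micro-batch (gradients add up in `.grad`), and calling `optimizer.step()` and `optimizer.zero_grad()` once every N micro-batches. It trades time for memory, so it doesn't help when the problem is missing GPU support altogether.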

I find it interesting that Apple is promoting Swift and MLX for ML researchers.

www.swift.org

On-device ML research with MLX and Swift

The Swift programming language has a lot of potential to be used for machine learning research because it combines the ease of use and high-level syntax of a language like Python with the speed of a compiled language like C++.

Apple has published an interesting paper about multimodal LLMs.

arxiv.org

MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training

In this work, we discuss building performant Multimodal Large Language Models (MLLMs). In particular, we study the importance of various architecture components and data choices. Through careful and comprehensive ablations of the image encoder, the vision language connector, and various...

Apple seems to be taking MLX more and more seriously.

developer.apple.com

Explore machine learning on Apple platforms - WWDC24 - Videos - Apple Developer

Get started with an overview of machine learning frameworks on Apple platforms. Whether you're implementing your first ML model, or an ML...

The M4 Max reportedly transcribed an audio file using Whisper V3 Turbo in only 2:29 minutes with MLX while consuming 25 watts of power. The RTX A5000 graphics card reportedly performed the same test in 4:33 with a power consumption of 190 watts.

www.tomshardware.com

Apple M4 Max CPU transcribes audio twice as fast as the RTX A5000 GPU in user test — M4 Max pulls just 25W compared to the RTX A5000's 190W

The M4 Max is nearly 14 times more power efficient than Nvidia's previous-gen professional GPUs at audio transcoding.
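The headline figures can be sanity-checked with a few lines of arithmetic (energy = power × time), assuming the reported wattages are a constant average draw for the whole run. That is an assumption; the article reports a single user test.

```python
def to_seconds(mm_ss: str) -> int:
    """Convert an "m:ss" duration string to seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)


# Figures as reported in the article (Whisper V3 Turbo, same audio file).
m4_max_s = to_seconds("2:29")  # 149 s at ~25 W
a5000_s = to_seconds("4:33")   # 273 s at ~190 W

speedup = a5000_s / m4_max_s                     # wall-clock ratio
m4_energy_j = m4_max_s * 25                      # joules used by the M4 Max run
a5000_energy_j = a5000_s * 190                   # joules used by the A5000 run
efficiency_ratio = a5000_energy_j / m4_energy_j  # energy per task, A5000 vs M4 Max

print(round(speedup, 2), round(efficiency_ratio, 1))  # 1.83 13.9
```

So "twice as fast" rounds up a roughly 1.8x speedup, while "nearly 14 times more power efficient" matches the energy-per-task ratio, if the wattages hold.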

Do you have any sense of how much of this is due to relative CPU rather than relative GPU performance?

Tasks that are both CPU- and GPU-dependent are sometimes faster on the M4 Max than on even an RTX 4090-equipped desktop PC, not because the M4 Max's GPU is faster than a 4090 (it's not), but because CPU performance is the bottleneck and the M4 Max has a much faster CPU.
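The bottleneck argument is essentially Amdahl's law: if a job has a CPU phase and a GPU phase that don't overlap, total time is their sum, so a machine with a much faster GPU can still lose overall. A sketch with made-up workload sizes and speed factors (hypothetical numbers, not measurements of either machine):

```python
def total_time(cpu_work: float, gpu_work: float,
               cpu_speed: float, gpu_speed: float) -> float:
    """Wall time when the CPU and GPU phases run back to back (no overlap assumed)."""
    return cpu_work / cpu_speed + gpu_work / gpu_speed


# Hypothetical job: 80 units of CPU-bound work, 20 units of GPU-bound work.
CPU_WORK, GPU_WORK = 80.0, 20.0

# Machine A: 3x faster GPU, baseline CPU. Machine B: baseline GPU, 1.5x faster CPU.
machine_a = total_time(CPU_WORK, GPU_WORK, cpu_speed=1.0, gpu_speed=3.0)
machine_b = total_time(CPU_WORK, GPU_WORK, cpu_speed=1.5, gpu_speed=1.0)

print(round(machine_a, 1), round(machine_b, 1))  # 86.7 73.3
```

Machine B wins despite the slower GPU because most of the time is spent in the CPU phase. If the GPU phase dominates instead, the advantage flips, so the argument only applies when the CPU share is large.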

Media engines could also have a major impact on performance.

There is a video showing the percentage of CPU and GPU usage while performing the task.

In addition to the media engines doing the transcoding mentioned by the article, the major bottleneck for non-parallelized inference is typically memory bandwidth. However, the A5000 has 768 GB/s while the M4 Max has 546 GB/s, so the advantage should still be with the A5000. Finally, while still impressive, the A5000 is ~28 TFLOPS, slightly less than the desktop 4070 (regular) that Apple has been shown to match in Blender rendering performance.
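The bandwidth point can be made concrete with a roofline-style lower bound: in batch-size-1 decoding, each step has to read the weights it uses from memory, so the step takes at least (bytes read) / (bandwidth). A sketch using the 768 GB/s and 546 GB/s figures quoted above and a hypothetical 1.6 GB of weights read per step (an assumed size, roughly 800M fp16 parameters, not a measured Whisper figure):

```python
def min_step_time_ms(bytes_read: float, bandwidth_gb_s: float) -> float:
    """Lower bound for one memory-bound step: bytes read / bandwidth, in milliseconds."""
    return bytes_read / (bandwidth_gb_s * 1e9) * 1e3


# Hypothetical: 1.6e9 bytes of weights read per decoding step.
WEIGHT_BYTES = 1.6e9

a5000_ms = min_step_time_ms(WEIGHT_BYTES, 768)   # A5000: 768 GB/s
m4_max_ms = min_step_time_ms(WEIGHT_BYTES, 546)  # M4 Max: 546 GB/s
ratio = m4_max_ms / a5000_ms                     # equals 768 / 546

print(round(a5000_ms, 2), round(m4_max_ms, 2), round(ratio, 2))  # 2.08 2.93 1.41
```

Under this bound the A5000 should be about 1.4x faster per step regardless of model size, which is the poster's point: bandwidth alone doesn't explain the reported M4 Max result.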

It's nearly all GPU. That article's author also weirdly confuses transcribing and transcoding. Whisper is a speech-to-text (i.e. transcription) model, and video encoders/decoders have nothing to do with it. Maybe the author is confused by the ML encoder/decoder terminology, which has nothing to do with media codecs. Regardless, that article is rubbish.

I have to admit I was a little confused by that... but not knowing the internals of Whisper, I wasn't sure exactly what was being done. Silly me for assuming they knew what they were talking about. (A smaller issue is the typo saying the Max GPU has 10 cores instead of 40.)
