Yes, it would be great if Cupertino would pay attention, but it's also not nearly that simple...

If you look at Windows, it only really supports live GPU switching between GPUs of the same brand. Nvidia Optimus doesn't work with AMD GPUs. That's because each GPU has its own unique extensions. What happens if you swap from an Nvidia GPU to an AMD GPU while you're running an app that relies on Nvidia extensions? Blam! 1s and 0s everywhere, and lost data.

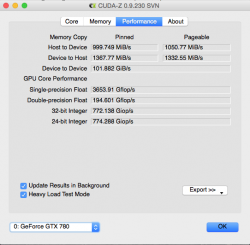

I know Windows can recover from driver faults, but I'm not entirely sure what they guarantee over there. I don't think they necessarily promise an application using the GPU won't crash. I know on OS X that would be very hard to do. Apple might be able to pad around it (using RAM as a buffer), but most applications assume they're continuing to work with the same chunk of VRAM. What happens when you're using a 6 gigabyte card full of data and you switch to a 1 gigabyte card? Apps don't know how to deal with that. What happens if you're doing a bunch of CUDA stuff and suddenly the card swaps to AMD? What happens when you have a lot of very important data that is only in VRAM on a card, and suddenly the user unplugs the card? How does that data make it over to the new card?
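To make the 6 GB to 1 GB case concrete, here's a toy sketch. It's purely illustrative: `FakeGPU` and `migrate` are made-up names, not any real driver or OpenGL API, and the buffer sizes are numbers I picked for the example. All it does is try to move each buffer to the smaller card and report what doesn't fit.

```python
from dataclasses import dataclass, field

GIB = 1024 ** 3


@dataclass
class FakeGPU:
    """Toy stand-in for a graphics card (hypothetical, not a real driver API)."""
    name: str
    vram_bytes: int
    buffers: dict = field(default_factory=dict)  # buffer label -> size in bytes

    def used(self) -> int:
        return sum(self.buffers.values())

    def allocate(self, label: str, size: int) -> None:
        # Refuse the allocation if it would exceed this card's VRAM.
        if self.used() + size > self.vram_bytes:
            raise MemoryError(f"{self.name}: out of VRAM allocating {label}")
        self.buffers[label] = size


def migrate(src: FakeGPU, dst: FakeGPU) -> list:
    """Try to copy every buffer from src to dst; return labels that did not fit."""
    stranded = []
    for label, size in src.buffers.items():
        try:
            dst.allocate(label, size)
        except MemoryError:
            stranded.append(label)
    return stranded


# Assumed workload: sizes are invented for illustration only.
big = FakeGPU("6 GB card", 6 * GIB)
small = FakeGPU("1 GB card", 1 * GIB)
big.allocate("textures", 3 * GIB)
big.allocate("geometry", 2 * GIB)
big.allocate("framebuffers", GIB // 2)

stranded = migrate(big, small)
print(stranded)
```

With these made-up sizes only the framebuffers fit on the small card; the textures and geometry have nowhere to go, and the app that owns them has no idea that happened.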

Look, I don't mean to trash anything MacVidCards is doing. I think it's totally valuable for power users who know what they're doing. But the idea that Apple is somehow locking this all down in advance of launching their own solution? That just sounds absolutely nuts to me given all the problems here. Even if Apple wanted to solve them, I'm not entirely sure they are solvable without a lot of changes to OpenGL and a lot of app developers recoding their applications. And there are a lot of applications.

Windows (and OS X, for that matter) can do a lot of these things because it only promises that very specific situations will work, and almost always that requires sticking to one brand of gear that remains internal to the machine. When you open it up to GPUs that can be unexpectedly removed at any time, along with any combination of different GPUs, things start to get very, very messy.

So the answer is to sell an entire line of computers that ages twice as fast as anything that can change a GPU? And never try to use a TB eGPU so you never have to worry about what happens if you foolishly try to unplug it while using it?

Have a look at the Yosemite/Mavericks/ML forums. Dozens of frustrated iMac and MacBook users stuck on older OSes primarily because the GPU doesn't have 64-bit drivers.

Apple is successfully FORCING new purchases through this mechanism.

What's the solution to the myriad problems you just listed?

DON'T UNPLUG THE GPU WHILE USING IT!!!

To me, this is up there with "Don't unplug a drive while writing data to it" and "Don't blow-dry your hair while standing in a bathtub full of water" and "Don't drain the oil from your car engine while it is running."

What is the HUGE DRIVING FORCE to be able to unplug a GPU? Why do you keep bringing it up? Where are the posts from people demanding a GPU they can unplug and remove from the computer while it is running?

That's right, there aren't any.

All of those problems go away if you just use your brain instead of insisting on unplugging an eGPU while using it.

This is why I frequently think you are a voice from Cupertino. Who cares about unplugging an eGPU while using it? How about "Do people want fire that can be fitted nasally?" What other silly requirements can we list that have no rational connection to how people actually use a computer?

Don't do a dumb, silly thing and an eGPU means you can use OctaneRender on a Mini. Wow, a revelation.

But if you use Apple/Intel's requirement that all TB devices MUST be hot-pluggable, then an eGPU doesn't make the grade. (What happens if you unplug your RAID from TB while it is writing? How does that work but not an eGPU?)

So, insist that ALL TB devices MUST BE HOT PLUGGABLE and deny yourself an eGPU on moral grounds.

Or use your brain, don't jerk the plug out while it is running, and allow yourself to update the 3+ year old GPUs in your nMP.

Hmmm....