Whose cMP has that PCIe SSD? It's really hard to keep track of all of these different system configs you're talking about. SATA 2 can still support 300MB/sec, which is more than enough for a mechanical HD. If you have 2 SSDs on 2 different controllers, you could still get 600MB/sec in a RAID0 config. Obviously it'd be better to have 2 SATA 3 drives in RAID0, and then you'd be approaching the nMP throughput of 1GB/sec (I was able to get this with 2 840 PRO drives, since each can do 500MB/sec).
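As a rough sanity check on those numbers, here is a minimal Python sketch of the ideal RAID0 arithmetic. It assumes perfect scaling across drives and that each drive sits on its own link; the function name and the 600MB/sec SATA 3 figure are my own assumptions for illustration, not something measured in this thread.

```python
def raid0_throughput(per_drive_mb_s, drives, link_limit_mb_s):
    """Ideal RAID0 sequential throughput in MB/sec.

    Each drive is capped by its own SATA link, and the stripes are
    assumed to scale perfectly (real arrays land somewhat lower).
    """
    return drives * min(per_drive_mb_s, link_limit_mb_s)


SATA2_LIMIT = 300  # MB/sec per link, as quoted above
SATA3_LIMIT = 600  # MB/sec per link (assumed nominal figure)

# Two 500MB/sec SSDs, each on its own SATA 2 controller
sata2_pair = raid0_throughput(500, 2, SATA2_LIMIT)

# The same two SSDs on SATA 3 links
sata3_pair = raid0_throughput(500, 2, SATA3_LIMIT)

print(sata2_pair, sata3_pair)
```

Under these assumptions the SATA 2 pair tops out at the link limit, while the SATA 3 pair is limited by the drives themselves.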

That guy is very likely limited by the fact he only has 2GB of VRAM. Each system will have a different bottleneck, and 2GB of memory to render a 5K video project is likely not enough and will end up causing a ton of thrashing (which is why his scores are more than 4x slower than mine).

Disabling the background rendering should mean that FCP is not caching the movie data in RAM. That's kind of the whole point of this and other FCP benchmarks (and why you need to restart FCP each time and clear out all its caches between runs).

My understanding is that "disabling background rendering" means FCPX will not render the video, not that it won't store the original imported video data in the cache.

Anyway, you are the person who suggested that the SSD makes the difference, and the comparison between my single SATA 2 SSD and that dual SATA setup (possibly SATA 3 after a firmware update) in RAID 0 suggests that you are wrong. And now you say that a single SSD via SATA 2 is more than enough.

You suggested that a faster CPU makes the difference. We pointed out that FCPX is not that limited by single-thread CPU performance, and all of a sudden you said the CPU is not even working hard, so it's not a factor.

You are the person who initiated those theories. That's fine, but when we point out how unlikely a theory is to be correct, you suddenly stand on the other side and say we are considering an irrelevant factor?

Maybe that's because my English is bad and I misunderstood your meaning. If that's the case, I am sorry for my rudeness. I am voicing this only because it feels strange that every time I tell you why something is not right, you teach almost exactly the same thing back to me.

And now, one more theory you suggest is QuickSync.

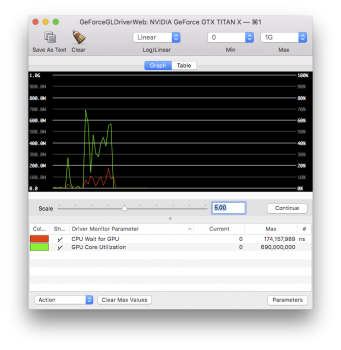

I just ran the benchmark again. The GPU is working hard throughout the whole process. AFAIK, BruceX does 2 things.

1) Rendering

2) Encoding

And the GPU should not do anything in the encoding part, so if the GPU is busy for the whole run, the encoding part must be only a small share of it. So, I am quite sure QuickSync won't help that much. My CPU is exactly the old, slow CPU you describe. If my CPU is not limiting the performance of my dual GPUs, then QuickSync won't help, because, as you said, the bottleneck is not there.

Since the video is only 2 seconds long, and the output file is a .mov file with an Apple QuickTime codec, I highly doubt QuickSync can provide any benefit in this benchmark. If the video were longer, the test were clearly divided into 2 parts (rendering and encoding), and the output file were an H.264 MP4 file, maybe the iMac could save a lot of time in the encoding part. However, not in this case.

Last but not least, I know QuickSync is not going to help, so please, no need to teach the same subject back to me.