The Ultra has 16 P-cores and 4 efficiency cores.


Geekbench 6 results and discussions

- Thread starter senttoschool


Anyone with a fully specced Mac Studio? 20-core CPU/64-core GPU/128GB RAM

Hard to say. At a cursory glance, it seems that each P-cluster (4 P-cores) adds about 4K points, or 50% of the M1's MT score. So there is definitely some inefficiency, but without a detailed comparison I don't immediately see how it relates to other devices.

It's important to note, however, that a P-cluster in Apple's design shares its L2 cache, so communication between cores in a cluster will be very efficient. Exchanging data between clusters is probably much slower (which is likely what we see here). I wonder how this compares to AMD desktop designs, which have a similar topology.

It's really hard to make sense of, since the Intel chip has 16 lower-performance cores and 8 P-cores, whereas the Ultra has 20 P-cores.

I am going to run GB6 on my AMD system later today (a Ryzen 9 5900X, which has two clusters of 6 cores on separate chiplets linked via Infinity Fabric), so I'll have a frame of reference for how it compares to the M2 Max under multi-core loads. In GB5, the M2 Max outperformed the Ryzen 9 by roughly 25% in both the single- and multi-core tests, despite the Ryzen's significantly higher clock speed. But that performance delta may shift wildly given the new testing methodology in GB6.

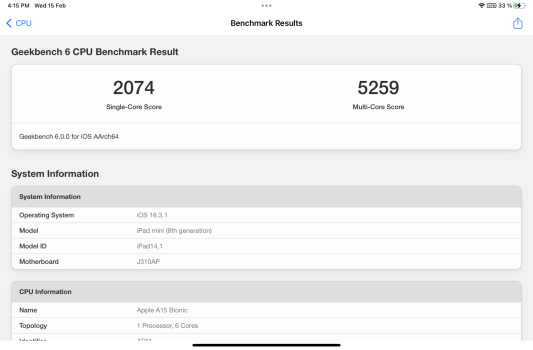

Just for the sake of it, I ran GB6 on all the compatible devices we have around the house. I'll share the results from fastest to slowest. Interestingly, the multi-core results from the iPhones are inconsistent, perhaps due to thermal throttling or background processes interfering with the test. My guess is throttling, since the tests take a few minutes, but I have no proof.
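To put a number on the run-to-run inconsistency described above, one option is to run the same test several times back to back and compare the relative spread with the first-to-last change. Here is a minimal Python sketch under that assumption; `run_to_run_spread` is a hypothetical helper, and the scores you pass in would be your own multi-core results in the order you ran them.

```python
import statistics


def run_to_run_spread(scores: list[float]) -> dict[str, float]:
    """Summarize repeated multi-core runs of the same benchmark on one device.

    `scores` must be listed in the order the runs were made.
    """
    if len(scores) < 2:
        raise ValueError("need at least two runs")
    mean = statistics.mean(scores)
    return {
        "mean": mean,
        # Coefficient of variation: sample standard deviation relative to the mean.
        "cv_percent": statistics.stdev(scores) / mean * 100,
        # Change from the first run to the last run, in percent.
        "first_to_last_percent": (scores[-1] / scores[0] - 1) * 100,
    }
```

A score that keeps dropping across consecutive runs would be consistent with heat build-up (throttling), whereas random scatter with no trend fits background interference somewhat better. Neither pattern is proof on its own.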

I also tested my 14" MacBook Pro (the base M1 Pro model) in low power mode. The CPU then performed similarly to a standard M1, while the GPU retained its full performance.

All devices are running the latest OS (macOS 13.2.1 or iOS/iPadOS 16.3.1).

2021 14" MacBook Pro M1 Pro (8 CPU cores, 14 GPU cores):

2021 14" MacBook Pro M1 Pro (8 CPU cores/14 GPU cores) in low power mode:

2020 Mac mini M1 (8/8 cores):

6th gen iPad mini A15 (6/5 cores, under-clocked compared to other A15 models):

iPhone 12 mini A14 (6/4 cores):

iPhone 11 A13 (6/4 cores):

5th gen iPad mini A12 (6/4 cores):

10.5" iPad Pro A10X (3/12 cores, P and E cores not available at the same time):


16" M2 Max (64GB RAM, 1TB, 38-core GPU)

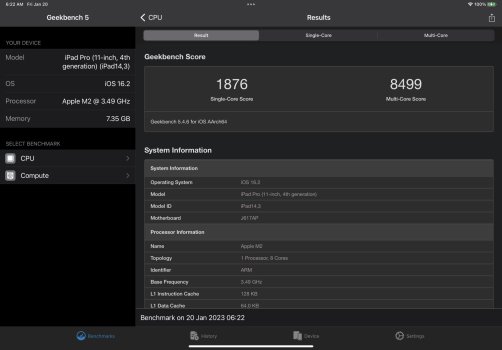

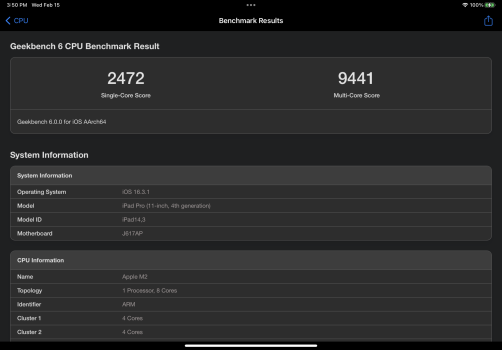

High Power and Low Power Readings

You guys might be interested in this YouTuber's take on the new Geekbench scoring


Damn, those templated video thumbnails. I would never click that crap.

Ziskind actually does some good testing of these machines and their capabilities. A lot of his testing deals with coding and development-related tasks, which is what I use my Macs for. To me, it's significantly more relevant than MaxTech's approach.

Sorry! Wish we could have another simple-to-run coding benchmark created for us, as we're not coders and have no idea how to code lol. Ziskind is great, though. Glad he's there for all of the Apple coders out there.

As far as I'm concerned, you have done more in your testing than all the other YouTubers when new products come out. Because of your testing, I switched from the 14-inch to a 16-inch M2.

Just to throw some Mac Studio results in:

M1 Ultra (48-core GPU): 2365 Single-Core, 17117 Multi-Core, 133942 Compute (Metal).

The scaling on the multi-core isn't great, but it's about what I would expect from Geekbench. The compute score shows atrocious scaling compared to other M1 chips.

As a comparison, a stock i7-13700KF (8 P-cores, 8 E-cores) comes in at 2780/18688, and 210255 compute (OpenCL) with an RTX 3090. The scaling of the 3090's compute score vs. the M1 Ultra's also seems low, which makes me wonder whether the benchmark measures properly past a certain performance point.
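The scaling comparison can be made explicit with the scores quoted above. This short Python sketch divides each multi-core score by its single-core score. The "percent of linear" figure naively treats every core as equal, which understates P-core scaling on both chips because their E-cores are slower (and it ignores the 13700KF's Hyper-Threading). The GPU ratio also compares two different APIs (OpenCL vs. Metal), so treat it as rough.

```python
# Geekbench 6 scores quoted in this thread: (single-core, multi-core, CPU cores)
CPU_RESULTS = {
    "M1 Ultra (16P + 4E)": (2365, 17117, 20),
    "i7-13700KF (8P + 8E)": (2780, 18688, 16),
}


def mt_speedup(single_core: float, multi_core: float) -> float:
    """Multi-core score expressed as a multiple of the single-core score."""
    return multi_core / single_core


for name, (sc, mc, cores) in CPU_RESULTS.items():
    speedup = mt_speedup(sc, mc)
    # Naive: assumes all cores are equal, which neither chip's P/E mix satisfies.
    print(f"{name}: {speedup:.2f}x single-core, {speedup / cores:.0%} of linear")

# GPU compute: RTX 3090 (OpenCL) vs M1 Ultra 48-core GPU (Metal)
print(f"RTX 3090 / M1 Ultra compute: {210255 / 133942:.2f}x")
```

With these inputs it prints about 7.24x for the M1 Ultra and 6.72x for the 13700KF, and about 1.57x for the GPU compute ratio.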


Ziskind gets lots of things extremely wrong and is often very lazy with his methodology. What annoys me is that he has much less of an excuse for being bad at these things than the MaxTech guys, who aren't engineers at all. (To the extent Ziskind does have an excuse, it's that IIRC he doesn't have experience doing anything particularly close to the metal, which is the background that teaches you the most about benchmarking, interpreting results, and so on.)

With the caveat that this is preliminary:

On GB5, the SC score of the i9-13900KF is ≈ 9% higher than that of the M2 Max in the 16" MBP (2230/2050)

On GB6, the i9's SC score is ≈ 15% higher (3270/2850).

The percentages could be off, but the qualitative result is that GB6 appears to credit a bigger SC performance advantage to the Intel chip than GB5.
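The percentages follow directly from the score ratios. A minimal Python check (the helper name is hypothetical); the 3350 figure is the earlier number questioned later in the thread, shown for comparison:

```python
def sc_lead_percent(intel_score: float, apple_score: float) -> float:
    """How much higher the first single-core score is than the second, in percent."""
    return (intel_score / apple_score - 1) * 100


print(f"GB5: {sc_lead_percent(2230, 2050):.1f}%")  # i9-13900KF vs 16" M2 Max
print(f"GB6: {sc_lead_percent(3270, 2850):.1f}%")  # averaged 13900KF results
print(f"GB6, 3350 figure: {sc_lead_percent(3350, 2850):.1f}%")
```

This prints about 8.8%, 14.7%, and 17.5%, which round to the ≈ 9%, 15%, and 18% quoted in the thread.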


Where are you getting 3350 ST for the 13900K? It shouldn't be that high. It could just be a heavily overclocked computer with tuned RAM.


We need a reviewer to run GB6 at stock settings.

Like most YouTubers, they start out producing content that is close to the truth. Then analytics show them that clickbait titles get more views, and they slowly drift toward making 100% clickbait videos that are often misleading.

I looked again and only found these, but thought I had seen more previously:


Here are three results (ignore nos. 2 and 4, since those are the KS) that might be more reasonable; they average about 3270. I edited my post to use this number, which gives a 15% difference, still qualitatively higher than GB5. But you're right, we do need results from reviewers running stock machines. Maybe I got too excited and jumped the gun.



It varies between 2800 and 3300. We won't know until a reputable reviewer runs the test at stock settings.

I wouldn't say it's more real-world. For instance, it's not representative of what I do. When I'm using a lot of cores, I'm more likely to be running several single-threaded C++ programs in the background while using several different single-threaded office applications, i.e., more like what GB5 tests. So I'd instead say GB6 captures another important use case, which is valuable.

- MT testing has changed from each core having discrete tasks to cores working together on a single task. This better reflects real-world use and captures interactions between cores, such as core-to-core latency.

I watched the interview with Poole about GB6 (linked below), and at about 12:00 he talks about this, but I can't tell whether he's saying some of his MC tasks are embarrassingly parallel and some are "distributed" (the kind of tasks you describe), or that all are distributed but some scale better than others. As you can probably infer, I think GB6's MC score should be based on a mixture of distributed and embarrassingly parallel tasks, to cover both use cases.

Also, I have a concern about the truly distributed tasks: getting those to scale to many cores (say, >10) is, as you know, highly non-trivial. I'm specifically wondering whether achieving good scaling requires a lot of platform-dependent optimization (such that you can find apps that scale well on, say, AMD but not AS, and vice versa). If so, cross-platform comparisons using distributed MC tests could be problematic, especially at high core counts, since then you get into the question of whether the scaling has been equally well optimized for each platform.
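Amdahl's law is a standard way to see why scaling past ~10 cores is hard: any serial fraction of the work caps the speedup no matter how many cores you add. A minimal Python sketch follows; it assumes identical cores and zero communication cost, so real shared-task workloads (with cross-cluster traffic and memory contention) would likely scale worse than this ceiling.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup from Amdahl's law.

    Assumes identical cores and no communication or memory-bandwidth costs.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


for p in (0.90, 0.95, 0.99):
    row = [round(amdahl_speedup(p, n), 1) for n in (4, 8, 16, 24)]
    print(f"{p:.0%} parallel -> speedup on 4/8/16/24 cores: {row}")
```

Even with 95% of the work parallel, going from 8 to 16 cores raises the bound only from about 5.9x to about 9.1x.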


I think what you describe is the third use case, which is desktop operation in a multiprocess contended scenario. Besides performance, another key indicator here would be the overall system responsiveness.


Yeah, I agree, there is a certain lack of transparency here. It's also not clear how exactly these algorithms work, maybe some of them are just not optimal. Like, how exactly do they do distributed file compression? Is it one file or multiple files? How big is the file? etc.
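To make the two multi-core styles discussed above concrete, here is a minimal Python sketch with compression as the workload: `discrete_tasks` gives each worker its own input, while `shared_task` splits one input across workers and gathers the pieces. This is not how Geekbench 6 implements File Compression (per the thread, Primate Labs hadn't yet published GB6 workload descriptions); the function names are hypothetical, zlib is only a stand-in codec, and threads are used for brevity.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def discrete_tasks(inputs: list[bytes], workers: int) -> list[bytes]:
    """Separate jobs: each worker compresses its own independent input.
    No data is shared between workers."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(zlib.compress, inputs))


def shared_task(data: bytes, workers: int) -> list[bytes]:
    """One job: a single input is split into chunks, the workers compress the
    chunks concurrently, and the compressed pieces are collected in order."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    if not data:
        return []
    chunk_size = -(-len(data) // workers)  # ceiling division, so no bytes are dropped
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(zlib.compress, chunks))  # map() preserves chunk order


def reassemble(pieces: list[bytes]) -> bytes:
    """Decompress the pieces from shared_task and join them back into one buffer."""
    return b"".join(zlib.decompress(piece) for piece in pieces)


payload = bytes(range(256)) * 4096  # 1 MiB of sample data
pieces = shared_task(payload, workers=4)
assert reassemble(pieces) == payload
print(len(pieces), "chunks,", sum(len(p) for p in pieces), "compressed bytes")
```

The shared version has to coordinate: the input must be cut, handed out, and stitched back in order, and because each chunk is compressed without the other chunks' context, the total output is usually a little larger. That coordination and data movement is the part that can depend on core-to-core latency and memory bandwidth.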

Here are the results of my 14" MBP compared to my Ryzen 9 gaming PC:

Ryzen 9, MBP with power saving off, MBP with power saving on:

Ryzen 9, MBP with power saving off:

I omitted the Vulkan test on the Ryzen 9 and Metal score on the MBP since those are not cross-platform, but the Metal score is listed in a prior post in this thread.


Results from my NUC running Win 11 Home

Core i7-12700H, Arc A770M

Intel(R) Client Systems NUC12SNKi72

- Single-Core Score: 1911
- Multi-Core Score: 8661
- OpenCL Score: 86469
- Vulkan Score: 69462


They should eventually publish PDF docs with more detailed descriptions of each benchmark. The GB5 versions are linked here:

Interpreting Geekbench 5 Scores / Geekbench / Knowledge Base - Primate Labs Support (support.primatelabs.com)

They haven't published the GB6 version of this knowledge base article yet, but past history suggests they will.


How long after the GB5 release were those docs published? That might serve as an indicator of when we might see the GB6 versions.

More from John Poole on GB6 MC scores:


The new "shared task" approach requires cores to co-operate by sharing information. Given the larger datasets used in Geekbench 6 several workloads are now memory-constrained, rather than CPU-constrained, on most systems.

Here are two Geekbench 6 results for a 5950X system, one result run with single-channel RAM and one result run with dual-channel RAM:

https://browser.geekbench.com/v6/cpu/compare/95075?baseline=95686

Several workloads, including File Compression, Object Detection, and Photo Filter almost double in performance when moving from single-channel to dual-channel RAM.

If these specific processes really are memory-bandwidth limited on x86, then AS's architecture might make a difference, because of its high memory bandwidth. I don't know what the memory bandwidth of specific x86 systems is, but in https://www.anandtech.com/show/17024/apple-m1-max-performance-review/2, Andrei Frumusanu wrote: "While 243GB/s is massive, and overshadows any other design in the industry, it’s still quite far from the 409GB/s the chip is capable of." This indicates that AS's bandwidth should be much higher. [Of course, there's also latency to consider.] In any case, it would be interesting to look at these specific subscores.
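For a rough sense of the numbers involved, theoretical peak DRAM bandwidth is transfer rate times bus width. The Python sketch below assumes DDR4-3200 for the 5950X example (the thread doesn't say what memory speed Poole's system used) and LPDDR5-6400 on a 512-bit bus for the M1 Max, which is where the 409GB/s figure in the quote comes from. These are theoretical peaks; achieved bandwidth, such as the 243GB/s in the quote, is lower.

```python
def peak_bandwidth_gbs(megatransfers_per_s: float, bus_width_bits: int) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return megatransfers_per_s * 1e6 * bytes_per_transfer / 1e9


# Assumption: DDR4-3200; each DDR4 channel is 64 bits wide.
single_channel = peak_bandwidth_gbs(3200, 64)
dual_channel = peak_bandwidth_gbs(3200, 2 * 64)
# M1 Max: LPDDR5-6400 on a 512-bit interface.
m1_max = peak_bandwidth_gbs(6400, 512)

print(f"DDR4-3200 single-channel: {single_channel:.1f} GB/s")
print(f"DDR4-3200 dual-channel:   {dual_channel:.1f} GB/s")
print(f"M1 Max:                   {m1_max:.1f} GB/s")
```

Going from one channel to two doubles the theoretical peak (25.6 to 51.2 GB/s under these assumptions), which is consistent with the near-doubling Poole saw in the workloads he called memory-constrained.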


By "memory-constrained" I assume he means bandwidth only, rather than that plus size. He doesn't say whether the attendant difference in RAM size has an effect (the single-channel RAM is 8 GB, while the dual-channel RAM is 16 GB), but since the difference is so obvious, and he doesn't mention it, I infer it's not relevant.


I think there is some confusion between dual channel and dual rank there. With the DDR4 and DDR5 specs, you can have dual-channel, single-rank RAM (8GB sticks are single rank, 16GB or higher are dual rank). However, if you are running four 8GB sticks in a DDR4/5 system, the motherboard can see it as dual-rank RAM anyway, which provides a slight boost in those configurations. I know AMD's Zen 3 and Zen 4 designs support this, but I'm not entirely sure about Intel.

I assume Poole knows the difference between ranks and channels and, since he was referring to the latter, figured the 8 GB system was 1 x 8 GB, while the 16 GB system was 2 x 8 GB (and properly configured as dual-channel to take advantage of two sticks). Hence single-channel and dual-channel, respectively.
