I wouldn't say it's more real-world. For instance, it's not representative of what I do. When I'm using a lot of cores, I'm more likely to be running several single-threaded C++ programs in the background while using several different single-threaded office applications, i.e., more like what GB5 tests. Thus I'd instead say GB6 captures another important use case, which is valuable.

This would be covered. The reason why is that you're not max-loading each core. You load an app, use it, burst the core, go to another application, and repeat. You might have one or two applications running some medium process continuously, but you're not compiling a huge coding project and then running CPU rendering at the same time. Each core has to do multiple things for multiple applications. This is better covered in GB6.


Geekbench 6 results and discussions

- Thread starter senttoschool


You're making assumptions about my work that aren't correct. In many cases, where I've had a lot of polymers to model, I would run a number of C++ programs equal to the number of cores (each program would take a few to several days to finish). That way the work would finish as soon as possible, since I only used the computer for office work maybe 5 hours/day. MacOS is pretty good about giving priority to the interactive applications, so the responsiveness remained OK. [This was for the development phase; when this was completed I would switch to the cluster.]

Actually, it's better covered in GB5, since all my programs were single-threaded. It's GB6 that adds distributed computing. [I think it was good they added this, even if it doesn't correspond to my work, since some apps are multi-threaded.]


So isn't this what was already mentioned in the original post?

Your workload is not typical and does not reflect the "real world". In this case, I think we can all agree that "real world" simply means typical workloads (80%). Previously, GB5 was testing for the 20% case; now GB6 is testing for the 80% case.

Not sure what you're saying here. Most of what you wrote was presuming to tell me what my workload was without having any actual idea, and most of what I wrote was explaining why that was wrong. Not sure what that has to do with the original post. And I notice there's nothing in your response where you acknowledge you got it wrong, which would seem to be the right thing to do in this case.

This is typical for many scientists who do computer modeling and write our own code*. [Macs are particularly popular among that group, because they offer both a refined UI and a native *nix interface.] You're making a common mistake: assuming workflows outside your personal experience aren't "real world". It's real-world for us.

My university's computer clusters are filled by people who are each doing exactly what I describe--running as many single-threaded jobs as the clusters' schedulers will allow. And when we're doing development work on our local machines, we're doing exactly the same thing. We might run the same program on several different targets, or several different variant programs on a single target.

[*Most scientists who write code aren't professional programmers; we just know enough to write the code we need. That means most of the code we write is going to be single-threaded, since writing distributed programs is hard.]

And I wouldn't be so quick to say GB is now testing for the 80% case, since the overwhelming majority of programs remain single-threaded. It comes down to the relative number of distributed vs. non-distributed tests within their MT benchmark.


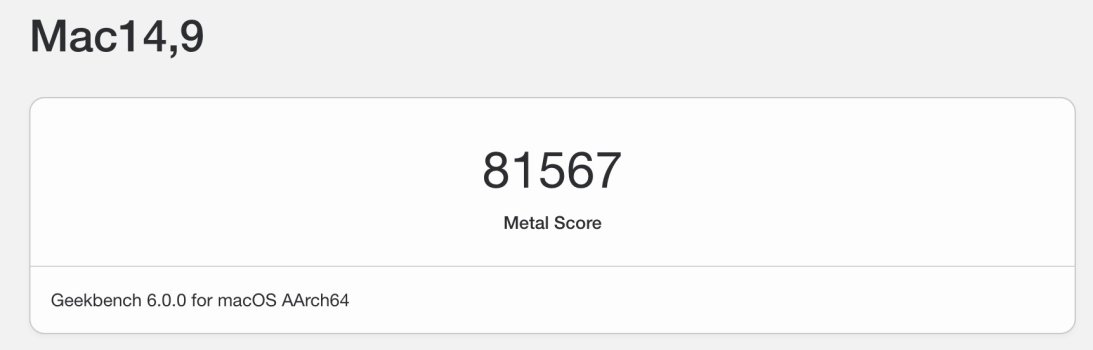

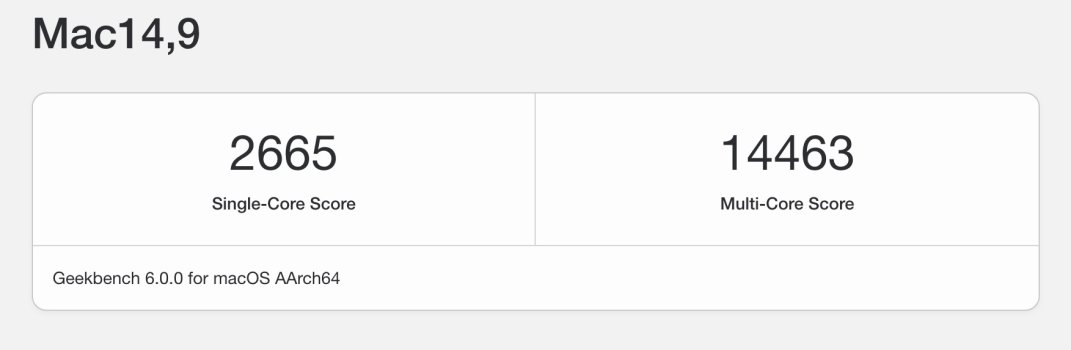

Here is the effect of going from GB 5.5.1 to GB 6.0.0 on the scores for a 2019 27" i9-9900K iMac/128GB RAM/Radeon Pro 580X (8GB) running macOS 12.6.3. You can see that SC increased by 21%, MC decreased by 4%, and both OpenCL and Metal increased by 9%. The MC scaling efficiency decreased by 20% (not unexpected, given the change to the MC workload). I ran each test three times and selected the highest result.

Suggestion to those posting scores: Post both your GB_6.0.0 and GB_5.5.1 scores, and calculate the %differences (GB6/GB5 – 1), so we can see how these vary by platform.

[With efficiency cores, calculating the scaling efficiency is tricky: how many performance cores does each efficiency core count as? For the M1 I believe it's 1/4, but I don't know what it is for the M2 or the various Intel and AMD CPUs. However, the % diff in scaling efficiency is independent of core count since, when you calculate it, the core counts cancel out.]
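The arithmetic behind this suggestion can be sketched in a few lines of Python. This is a minimal sketch that assumes scaling efficiency is defined as MC / (SC × effective core count); the function names are hypothetical. Plugging in the i9-9900K percentages from the post above (SC +21%, MC -4%, with GB5 scores normalized to 1.0) shows that the change in scaling efficiency comes out the same whatever effective core count is used, because the core count cancels.

```python
def pct_diff(gb6: float, gb5: float) -> float:
    """Percent difference as suggested in the post: GB6/GB5 - 1."""
    return gb6 / gb5 - 1.0


def scaling_efficiency(mc: float, sc: float, cores: float) -> float:
    """Assumed definition: MC score divided by (SC score x effective core count)."""
    return mc / (sc * cores)


def efficiency_pct_diff(sc5: float, mc5: float, sc6: float, mc6: float, cores: float) -> float:
    """Percent change in scaling efficiency from GB5 to GB6 for one machine."""
    e5 = scaling_efficiency(mc5, sc5, cores)
    e6 = scaling_efficiency(mc6, sc6, cores)
    return e6 / e5 - 1.0


# i9-9900K figures from the post: SC +21%, MC -4% (GB5 normalized to 1.0).
sc5, mc5 = 1.0, 1.0
sc6, mc6 = 1.21, 0.96

# The effective core count (including fractional efficiency cores) drops out.
for cores in (8, 10, 13.5):
    print(cores, round(efficiency_pct_diff(sc5, mc5, sc6, mc6, cores), 3))  # -0.207 each time
```

The result, about -0.207, is consistent with the roughly 20% drop reported above; the small gap comes from the 21% and 4% inputs themselves being rounded.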


Much like SPEC CPU, which John Poole took a lot of inspiration from, each major version of GB CPU generates scores normalized against a different baseline processor.

"Geekbench 5 scores are calibrated against a baseline score of 1,000 (which is the score of a Dell Precision 3430 with a Core i3-8100 processor). Higher scores are better, with double the score indicating double the performance."

"Geekbench 6 scores are calibrated against a baseline score of 2,500 (which is the score of a Dell Precision 3460 with a Core i7-12700 processor). Higher scores are better, with double the score indicating double the performance."

Because of this, it makes no sense to spend any time on direct comparisons of GB5 and GB6 CPU scores. They're different unit systems. You could perhaps try to cobble together some form of conversion equation by running both GB5 and GB6 on both the reference systems Primate Labs used, but even that would be sketchy imo.


It's not just the different baselines that make direct comparisons of GB5 and GB6 irrelevant. GB6 uses a different methodology for testing multicore performance, which makes any direct comparisons between GB5 and 6 multicore scores pointless. The closest thing I can think of in terms of a direct comparison between GB5 and GB6 that wouldn't be irrelevant would be comparing where a given Mx series SoC falls in the overall results (e.g., if the M1 Max is 22nd in the GB5 overall rankings, but 13th in the GB6 rankings). But even then, you're just showing where the SoC ranks under that specific testing criteria.

Nope. Since the scores are proportional to speed of task completion ("double the score is double the performance") in both GB5 and GB6, the respective devices used as baselines in GB5 and GB6 have no effect on the performance ratios.

Making this more explicit: Assuming Primate's phrasing properly describes how they are generating their scores, the following would hold: Suppose, on a specific task, completion takes device A 20 seconds and device B 10 seconds. Then device B's score for that task will be twice that of device A's. This is independent of which benchmark you're in, or what device was used to calibrate it.

Consequently (using simple nos. purely for illustration): Suppose devices A and B respectively score 1000 and 2000 in GB5, and 2000 and 8000 in GB6. That means device B is twice as fast as A on the GB5 tasks, but four times as fast on the GB6 tasks. Hence we know the performance disparity between devices A and B is twice as large with the GB6 tasks than the GB5 tasks. This kind of information is intriguing.
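A minimal Python sketch of this argument, assuming Primate's "double the score is double the performance" phrasing means score = baseline score × baseline time / device time. The two calibrations below (baseline score, baseline-device time) are made-up numbers chosen only to show that they drop out; the device times and the A/B scores are the illustrative ones from the two paragraphs above.

```python
def gb_score(baseline_score: float, baseline_time: float, device_time: float) -> float:
    # Score proportional to speed: half the completion time -> double the score.
    return baseline_score * baseline_time / device_time


# Same task: device A takes 20 s, device B takes 10 s.
# Two made-up calibrations (baseline score, baseline-device time in seconds).
for baseline_score, baseline_time in [(1000, 30.0), (2500, 12.5)]:
    a = gb_score(baseline_score, baseline_time, 20.0)
    b = gb_score(baseline_score, baseline_time, 10.0)
    print(b / a)  # 2.0 under either calibration

# Illustrative scores from the post: A=1000, B=2000 (GB5); A=2000, B=8000 (GB6).
gb5 = {"A": 1000, "B": 2000}
gb6 = {"A": 2000, "B": 8000}

# How much larger the B/A disparity is on the GB6 tasks than on the GB5 tasks.
disparity_change = (gb6["B"] / gb6["A"]) / (gb5["B"] / gb5["A"])
print(disparity_change)  # 2.0
```

Because each score is a calibration constant divided by the device's own time, any ratio of scores within one benchmark version cancels that constant, which is why the baseline device never enters the comparison.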

On the contrary, it's specifically because the tasks are different that such a comparison has a point. Suppose the values I used above were MC scores. That would mean the performance disparity between devices A and B is significantly greater with the GB6 MC tasks than the GB5 MC tasks. Given that GB6 has distributed MC tasks, and GB5 does not, that suggests distributed tasks are particularly challenging for device A, and warrants further investigation.

For instance, if GB6's MC suite contains a mixture of distributed and non-distributed tasks, you might then want to look at device A's individual MC task scores, and see if it particularly struggles with the distributed ones.

Geekbench 5 is not reliable for CPU benchmarking. The processor here works only for a short time at maximum power.

Better is Cinebench R23 which loads the processor at 100% all the time.


Cinebench is useful for comparing sustained, highly parallel performance without regard for variables like memory bandwidth. Geekbench tries to simulate a more comprehensive and varied workload. I wouldn't necessarily call Cinebench better, but it is a lot more straightforward and easier to extrapolate as an estimate for specific workloads that might be similar, and then mostly worthless for dissimilar workloads. I have no idea what real workloads Geekbench could serve as an estimate for, though. Bursty, bandwidth-reliant, mediocre-scaling workloads aren't unrealistic, but they're not as obvious to me.

Cinebench is not a good CPU benchmark because it’s a highly specific workload. This is like measuring the overall performance of a car by how well it can get out of mud. It’s an interesting metric in its own right, but it won’t tell you anything useful about how it handles on the highway or in stop-and-go commuter traffic.

CB is, however, useful as a “light” stress test to study the thermal behavior of the system. Intel uses Cinebench as a standard benchmark these days because their CPUs perform particularly well on this kind of test (CB favors high clocks, wide vector units, and fast caches, and scales very well with a high number of cores and SMT).

Why buy a computer with a powerful processor if we will not use it to the maximum? Geekbench can add up to 30% more points for faster memory, but in real calculations (like Cinebench) the gain from faster memory is negligible. Another matter is throttling, when the CPU lowers its performance as temperatures increase. The Geekbench run is too short to reveal it: it lasts only 2 to 3 minutes, whereas the Cinebench stress test lasts about a dozen minutes with the CPU at maximum load. So, in conclusion: M1/M2 chips slow down in long/stress benchmarks, and Geekbench awards more points to Apple Silicon CPUs thanks to their faster memory. This is the reason for such different results. Of course, Cinebench R23 is designed and optimized for M1/M2 chips too, not only for Intel.


Not quite sure what you are arguing for. First, Apple Silicon does not suffer much from throttling under sustained operation because its dynamic clock range is much narrower. This is primarily a problem with x86 CPUs, where “base” and “boost” clocks can differ by up to 40-50%. So Geekbench actually overestimates x86 performance in sustained scenarios. Second, we know very well why Apple Silicon performs poorly in Cinebench. In part, this is a deliberate choice on Apple's side to make their systems more energy efficient. Apple CPUs simply do not have the raw clocks, per-instruction SIMD throughput, or cache bandwidth of x86 CPUs, and these things contribute to why Apple can be as fast with 5 watts of power as Intel is with 20 or more watts in general-purpose processing. You can't win them all; there are choices to be made. At the same time, Apple excels at many real-world tasks such as code compilation or scientific computations.

BTW, the problem with “faster memory” you mention was in Geekbench 3. That has long been addressed.

This is directly from the Ars Technica article about GB6:

"That means Geekbench isn't always great for measuring sustained performance—how your device will run over a long period of time—or how it will perform when your CPU and GPU are both active at the same time, when each component is consuming its own power and generating its own heat."

So for a lot of people, mostly creatives, GB6 isn't always the most useful benchmark for us. Creatives are almost always hammering the CPU and the GPU all the time. IMO the only reason Apple Silicon is so performant for editing is the dedicated encode/decode hardware, which was a really smart decision by Apple, although once you sprinkle on lots of effects and filters, the performance starts to fall fast. The power (wattage used) vs. performance is pretty remarkable, though.

It will be interesting to see if Apple's new Mac Pro will reverse direction a bit and run the silicon at much higher clocks since there will be ample space for cooling.

So for a lot of people, mostly creatives, GB6 isn't always the most useful benchmark for us. Creatives are almost always hammering the CPU and the GPU all the time.

Is this really the case, though? I was always under the impression that creative workflows are bursty: applying user-initiated processing steps that should ideally complete as quickly as possible. That's not the usual definition of sustained performance (aside, of course, from things like rendering or encoding). Of course, I fully agree that Geekbench is not really useful for creatives, simply because creative workflows are usually tied to specific software suites. There are other ways to estimate performance for these tasks, though, e.g., PugetBench.

IMO the only reason Apple Silicon is so performant for editing are the dedicated encode/decode hardware; that was a really smart decision by Apple; although once you sprinkle lots of effects and filters, the performance starts to fall fast. The power (wattage used) vs. performance is pretty remarkable though.

Unified memory and large caches help too, especially with video work. Scrubbing through timelines etc. requires large amounts of data to be transferred to and from GPU quickly; the delay can be noticeable with the usual PCIe bottleneck. Even the fastest GPU won't help you if transferring the data takes a fraction of a second.

It will be interesting to see if Apple's new Mac Pro will reverse direction a bit and run the silicon at much higher clocks since there will be ample space for cooling.

Sure hope so, but probably not with the current generation of silicon. You'd need new hardware capable of running at higher speed.

It all depends on what you’re doing. But the difference between doing anything on my 2018 MBP and on my Mac Pro is very apparent.

I notice you didn't mention Embree. Is that no longer an issue for using Cinebench to compare Intel and ARM processors?

I was always under the impression that creative workflows are about bursty workflows: applying user-initiated processing steps which should ideally get completed as quickly as possible.

I think that's true for many workflows, including routine office work. The most common and irritating office work delays I see with my 2019 i9 iMac are repeated small delays of ≈ 0.5 s – 3 s*, which could collectively be termed "machine responsiveness", but machine responsiveness seems like it would be hard to benchmark directly since these "mini-delays" are typically not replicable. I don't know how well results on the standard general benchmarks, like Geekbench and SPEC, correlate with machine responsiveness.

*Well, that plus the fact that it takes over a minute from when I log in until my user account fully loads.

I notice you didn't mention Embree. Is that no longer an issue for using Cinebench to compare Intel and ARM processors?

Didn’t want to write too much

Yeah, those responsiveness mini-delays are the most impactful, and I wouldn't even know how to start measuring them.

@mr_roboto & @dmccloud :

I created a couple of Excel tables that should make the math more concrete. They demonstrate that one can calculate a figure of merit for the relative effect of changing benchmarks on device performance, and that this figure is independent of any change in calibration devices, or the baseline scores assigned to those devices. Hence your contention that my suggestion "makes no sense" because of "different unit systems" or "different baselines" doesn't hold:

Consider two devices, X and Y. Suppose that, on a specific MC task in GB5, device X is faster than device Y. Further suppose that, in GB6, that task is replaced with a more challenging distributed task and that, compared to their performances on the GB5 task, this GB6 task takes device X four times as long to complete, but takes device Y only twice as long. Intuitively, the change from the GB5 task to the GB6 task favors device Y over device X by a factor of two. Note this is independent of how long the GB5 tasks take X and Y. All that matters is how much their relative performance changes when we switch to GB6.

In the top tables I've assigned completion times for the GB5 task to devices X and Y. These are arbitrary, except that I've made device X faster, as described above. Then, also as described above, I made the GB6 X and Y completion times 4x and 2x as long, respectively. I then calculated the ratio by which the change from GB5 to GB6 favors Y over X, and got a figure of merit of "2", corresponding to the common-sense intuitive understanding mentioned above.

Note: In each case I calculate the resulting GB score from:

(baseline device score) x (baseline device time)/(test device time)

This implements Primate's prescription that the score is directly proportional to the performance.

I then repeated this calculation in the bottom tables, except this time I calculated the GB5 and GB6 scores for X and Y based on entirely different calibration devices, with different task completion times, and different assigned baseline scores. These cells are highlighted in light blue. You can see these changes have absolutely no effect on the figure of merit, which retains its value of 2. [The figure of merit, which is the relative scoring benefit seen by Y vs X in changing benchmarks, is shown in the orange cells. I show two different ways to calculate it.]

[The times highlighted in yellow, which are the task completion times in GB5 and GB6 for X and Y, of course remain the same, since they are independent of which calibration devices are used, depending only on the device and the task.]
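Since the spreadsheets themselves aren't reproduced here, the same calculation can be sketched in Python. This is a minimal sketch, not the original tables: the completion times and both sets of calibration values are hypothetical stand-ins chosen to match the setup described (X faster in GB5; GB6 takes X 4x and Y 2x as long), the function names are illustrative, and the two ways of computing the figure of merit are my own choice and may not be the two used in the orange cells.

```python
def gb_score(baseline_score: float, baseline_time: float, device_time: float) -> float:
    # (baseline device score) x (baseline device time) / (test device time)
    return baseline_score * baseline_time / device_time


def figure_of_merit(times, cal5, cal6):
    """How much the GB5 -> GB6 switch favors Y over X.

    times: {"X": (t5, t6), "Y": (t5, t6)}, task completion times in seconds
    cal5, cal6: (baseline_score, baseline_time) for GB5 and GB6
    """
    s5 = {d: gb_score(*cal5, t[0]) for d, t in times.items()}
    s6 = {d: gb_score(*cal6, t[1]) for d, t in times.items()}
    # Way 1: Y's GB6/GB5 score change divided by X's GB6/GB5 score change.
    m1 = (s6["Y"] / s5["Y"]) / (s6["X"] / s5["X"])
    # Way 2: Y/X score ratio in GB6 divided by Y/X score ratio in GB5.
    m2 = (s6["Y"] / s6["X"]) / (s5["Y"] / s5["X"])
    return m1, m2


# Hypothetical times: X is faster on the GB5 task; GB6 takes X 4x as long, Y 2x as long.
times = {"X": (10.0, 40.0), "Y": (15.0, 30.0)}

# Two entirely different (made-up) calibrations give the same figure of merit, ~2.
print(figure_of_merit(times, cal5=(1000, 20.0), cal6=(2500, 25.0)))
print(figure_of_merit(times, cal5=(700, 8.0), cal6=(4000, 60.0)))
```

Both calls return values equal to 2 up to floating-point rounding: each calibration contributes the same constant factor to X and Y within a benchmark version, so it cancels in either ratio.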

Of course, this is just an illustration of the math. In practice, you wouldn't want to calculate ratios for one device vs. another. Instead, you'd want to calculate ratios for each device vs. the average for all devices. Devices with a ratio greater than one would be relatively favored by the change in benchmark, while the opposite would be the case for devices with a ratio less than one.
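A minimal sketch of that "each device vs. the average" step in Python. The device names and scores are hypothetical, purely for illustration, and the arithmetic mean of the per-device GB6/GB5 ratios is used as "the average for all devices"; a geometric mean is a common alternative when averaging ratios, and either choice keeps the calibration-cancellation property.

```python
from statistics import mean


def relative_benefit(gb5: dict, gb6: dict) -> dict:
    """Each device's GB6/GB5 score ratio divided by the mean ratio over all devices.

    > 1: relatively favored by the benchmark change; < 1: relatively disfavored.
    """
    ratios = {d: gb6[d] / gb5[d] for d in gb5}
    avg = mean(ratios.values())
    return {d: r / avg for d, r in ratios.items()}


# Hypothetical MC scores, purely for illustration.
gb5 = {"dev1": 1000, "dev2": 2000, "dev3": 4000}
gb6 = {"dev1": 2000, "dev2": 6000, "dev3": 8000}

for device, rel in relative_benefit(gb5, gb6).items():
    print(device, round(rel, 3))  # dev1 0.857, dev2 1.286, dev3 0.857
```

Any calibration constant multiplies every device's ratio by the same factor, and dividing by the mean of those ratios removes it, so the output does not depend on which baseline devices Primate Labs chose.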


Didn’t want to write too much. And to be honest, I've come to believe that it’s more important to discuss the weak points of Apple Silicon rather than just blame its poor performance on suboptimal code alone. I’m fairly convinced that a raytracer written and optimized specifically for Apple Silicon could be another 10-20% faster, but it still won’t beat x86 because of the reasons I mentioned.

Not sure what you are trying to say, but Embree is the sole reason for the poor performance on AS. There is no weakness in the architecture that would make AS inherently perform poorly on raytracing tasks.


It's not about raytracing; it's about SIMD-heavy code. Both Apple and modern x86 CPUs can do around 512 bits' worth of SIMD operations per clock (give or take). But x86 CPUs run at higher clocks and have more L1D cache bandwidth to support these operations. It's all about tradeoffs, really. Apple is more flexible (their 4x smaller SIMD units are better suited to more complex algorithms and scalar computation) and more efficient (not paying for higher bandwidth and higher clocks), but this also means it cannot win a raw SIMD slugfest. We see it across all kinds of SIMD-oriented workflows, btw, not just CB.
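The peak-throughput part of this argument is simple arithmetic: SIMD bits per clock times clock speed. A minimal Python sketch, assuming four 128-bit units on the Apple side and, as one possible x86 configuration, two 256-bit units; the clock speeds are hypothetical placeholders, not measured specifications. The model deliberately ignores L1D bandwidth, dependencies, and instruction mix, which the post notes also matter.

```python
def simd_bits_per_clock(units: int, width_bits: int) -> int:
    # Total SIMD width issued per clock across all vector units.
    return units * width_bits


def peak_simd_bits_per_ns(units: int, width_bits: int, clock_ghz: float) -> float:
    # Peak only: 1 GHz = 1 clock per ns; ignores cache bandwidth and stalls.
    return simd_bits_per_clock(units, width_bits) * clock_ghz


# Hypothetical configurations for illustration; clocks are placeholders.
apple = peak_simd_bits_per_ns(units=4, width_bits=128, clock_ghz=3.5)
x86 = peak_simd_bits_per_ns(units=2, width_bits=256, clock_ghz=5.0)

print(simd_bits_per_clock(4, 128), simd_bits_per_clock(2, 256))  # 512 512
print(round(x86 / apple, 2))  # 1.43
```

With equal bits per clock, the peak ratio reduces to the clock ratio (5.0 / 3.5 here), which is why, in this simplified model, higher clocks alone decide a "raw SIMD slugfest".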

Is it possible to write a CPU raytracer that would perform better on Apple Silicon than Embree? I am sure it is. Embree isn't really written with a CPU in mind that has four (albeit smaller) independent SIMD units, and I wouldn't be surprised if certain operations (like ray-box intersection) can be implemented more efficiently on ARM SIMD. But no matter how efficient your code is, this doesn't change the fact that Apple Silicon is at a disadvantage in SIMD throughput compared to x86 CPUs at the hardware level.
