
If I get the V2 or V3.1 HP7101A, should I skip modding entirely or only change the fan?

You shouldn't mod your card at all; there's no way to cool the PCIe switch.

You can change the fan speed with the HPT management software first. If the pitch of the fan noise still drives you crazy, switch to one of the two other compatible low-RPM fans.


One thing I'm a bit confused about: both the HighPoint software and Disk Utility say the RAIDed drives have 3 TB available. Shouldn't it say 1.5 TB, since it's RAID 0?

Mirroring/duplication is RAID 1. RAID 0 stripes data across all member drives, so its capacity is the sum of the drives.

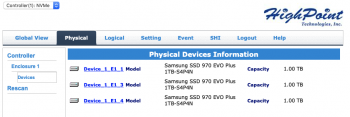

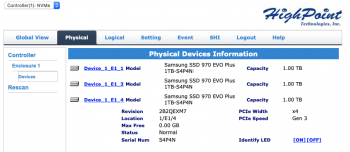

3 x 1TB RAID 0
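To put the RAID 0 vs RAID 1 capacity question in numbers, here is a minimal sketch. It assumes equal-size members and ignores filesystem and metadata overhead, so it is an approximation, not HighPoint's or Disk Utility's exact accounting.

```python
# Approximate usable capacity for RAID 0 and RAID 1.
# Assumption: overhead from the filesystem and RAID metadata is ignored.

def usable_capacity_tb(drive_sizes_tb, level):
    """Return approximate usable capacity in TB for RAID level 0 or 1."""
    if not drive_sizes_tb:
        raise ValueError("need at least one drive")
    n = len(drive_sizes_tb)
    smallest = min(drive_sizes_tb)
    if level == 0:
        # Striping: data is spread over every member, so capacities add up
        # (each member contributes only as much as the smallest one).
        return smallest * n
    if level == 1:
        # Mirroring: every member holds a full copy, so you get one drive's worth.
        return smallest
    raise ValueError(f"unsupported RAID level: {level}")

if __name__ == "__main__":
    drives = [1.0, 1.0, 1.0]  # 3 x 1 TB
    print("RAID 0:", usable_capacity_tb(drives, 0), "TB")  # RAID 0: 3.0 TB
    print("RAID 1:", usable_capacity_tb(drives, 1), "TB")  # RAID 1: 1.0 TB
```

So a 3 TB figure for three 1 TB drives in RAID 0 is what you'd expect; halving only happens with mirroring of two drives.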


I think I'm getting the expected speeds for these configurations.

Have you compared with "AJA System Test Lite.app" and "Blackmagic Disk Speed Test.app"? My Mac mini 2018's internal 128 GB drive has these sequential read/write results:

These are better than my numbers. Maybe someone more knowledgeable can answer: does it matter that @ncc1701d's tests used a 512 MiB test size and mine used 1 GiB?

It's not meaningful to compare MP7,1 results with an MP5,1: PCIe 3.0 vs PCIe 2.0.
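The PCIe 3.0 vs PCIe 2.0 point can be put in rough numbers. This sketch computes the theoretical one-direction link bandwidth after line encoding only (8b/10b for PCIe 2.0, 128b/130b for 3.0 and 4.0). Protocol overhead, the card's PCIe switch, and the drives themselves all lower real results, so treat these as ceilings, not benchmark predictions.

```python
# Theoretical one-direction PCIe bandwidth after line encoding only.
# Real-world throughput is lower (packet overhead, switch, SSD limits).

# generation -> (transfer rate in GT/s, payload bits, encoded bits)
PCIE_GENS = {
    2: (5.0, 8, 10),      # 8b/10b encoding
    3: (8.0, 128, 130),   # 128b/130b encoding
    4: (16.0, 128, 130),  # 128b/130b encoding
}

def link_bandwidth_mb_s(gen, lanes):
    """Approximate MB/s (10^6 bytes/s) for one direction of a PCIe link."""
    gt_s, payload, total = PCIE_GENS[gen]
    # transfers/s * useful fraction -> bits/s; /8 -> bytes/s; /1e6 -> MB/s
    per_lane = gt_s * 1e9 * payload / total / 8 / 1e6
    return per_lane * lanes

if __name__ == "__main__":
    for gen in (2, 3):
        print(f"PCIe {gen}.0 x16: ~{link_bandwidth_mb_s(gen, 16):,.0f} MB/s")
```

That works out to roughly 8,000 MB/s for a PCIe 2.0 x16 link versus roughly 15,754 MB/s for PCIe 3.0 x16, which is why the same card and drives can post very different numbers in the two machines.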

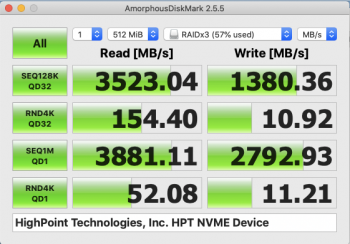

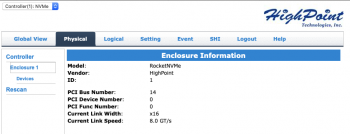

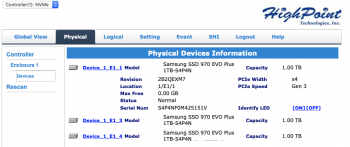

My 3 x 1 TB Samsung EVO Plus drives in slots 2-4 of the HighPoint 7101A-1, in RAID 0 (HPT drivers + WebGUI RAID), formatted HFS+ (Mac OS Extended, Journaled), with the card installed in PCIe slot #2:


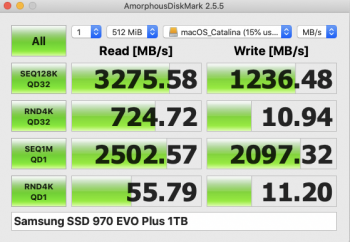

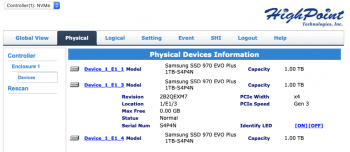

My 1 TB Samsung EVO Plus boot drive in slot 1 of the HPT card, formatted APFS:



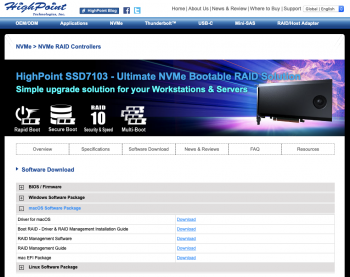


Thoughts on this just-released card versus the 7101A?

U.2 Non-Bootable - Gen3 | HighPoint-tech.com

HighPoint high port count (HPC) SSD7180 & SSD7184 & SSD7120 NVMe RAID controllers are ideal for professional applications that require a small-footprint, mass-storage NVMe solution that can take full advantage of the PCIe 3.0 x16 transfer bandwidth. This performance-focused NVMe RAID...

www.highpoint-tech.com

Very nice. It can connect eight NVMe devices. It's like the LQD4500 but not as compact (it uses U.2 instead of M.2), and it uses PCIe 3.0 instead of PCIe 4.0.

Hi everyone, I'm trying to dig through this thread to estimate what my read/write speeds should be in a 2019 Mac Pro (7,1). I just picked up this controller and 4 x 1 TB EVO SSDs. Any guesses?

Also, would it be better to run Windows on one 1 TB drive by itself and use the other 3 x 1 TB as extra Mac storage? Or would it be better to RAID 0 all four and set up Windows on a small partition? I think I only need 256-500 GB max. Which setup is ideal?


Run Windows by itself and the other 3 in RAID 0 (if you have another means of backup).

Right. It's not easy to boot Windows or macOS from RAID unless it's a hardware RAID rather than a software RAID.

Can you boot off the single drive in the PCIe card?

That should not be a problem. I think you need to enable external booting in Startup Security Utility.

If you buy the PCIe 4.0 model, will it drop to a lower speed in the new Mac Pro or just not run at all?

It should not drop speed on the drive side. The downstream M.2 devices should still run at PCIe 4.0 x4; only the upstream link is limited to the link rate and link width of the PCIe slot.
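The reply's reasoning can be sketched as a simplified model of PCIe link training: each link trains to the highest generation and widest width that both of its ends support, and the card's switch has separate upstream and downstream links. The port values below are hypothetical examples, not measured specs of any particular card, slot, or SSD.

```python
# Simplified model: a PCIe link trains to the lower generation and narrower
# width of its two ends. Upstream and downstream links of a switch card are
# negotiated independently. All port values below are hypothetical.

from dataclasses import dataclass

@dataclass(frozen=True)
class PortCaps:
    gen: int    # highest PCIe generation the port supports (e.g. 3 or 4)
    lanes: int  # maximum link width (e.g. 4 or 16)

def negotiate(a: PortCaps, b: PortCaps) -> PortCaps:
    """Return the link both ends can agree on (simplified: min of each)."""
    return PortCaps(gen=min(a.gen, b.gen), lanes=min(a.lanes, b.lanes))

if __name__ == "__main__":
    slot = PortCaps(gen=3, lanes=16)         # hypothetical PCIe 3.0 x16 slot
    card_upstream = PortCaps(gen=4, lanes=16)  # hypothetical PCIe 4.0 card
    card_m2_port = PortCaps(gen=4, lanes=4)    # one downstream M.2 port
    drive = PortCaps(gen=4, lanes=4)           # hypothetical PCIe 4.0 x4 SSD

    up = negotiate(slot, card_upstream)
    down = negotiate(card_m2_port, drive)
    print(f"upstream:   PCIe {up.gen}.0 x{up.lanes}")      # capped by the slot
    print(f"downstream: PCIe {down.gen}.0 x{down.lanes}")  # unaffected by the slot
```

Real link training can also end up narrower or slower for other reasons (signal quality, lane faults), so this only captures the "limited by the slot" part of the answer.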



I thought you couldn't use Boot Camp on an external drive?

Partition the external drive manually if you can't use Boot Camp Assistant to do it. For SATA and NVMe, it should be a normal Windows install on Macs like the MacPro7,1 that can boot Windows using UEFI. There are special instructions for installing Windows to USB. Once Windows is installed, you can install the Boot Camp drivers to support Apple devices and displays.

I'm using one of the 1 TB blades for Windows. Can I get someone with a PC to format it for Windows, install a copy of Windows 10 on it, then put it back into the HP7101A and use the Boot Camp drivers? (It was looking too hard, which is why I thought I'd just go with the virtualization apps.)

Just got my HighPoint 7101A-1 NVMe installed. I was really confused about BLOCK SIZE and SECTOR SIZE during setup. I set both to 512K. I have no idea what these settings are supposed to be.

Speeds are good! 6-7K reads and writes on plain EVOs.

Also, I'm going to keep watching, but it seems the fans have been on high since I created this RAID, which is concerning.

Edit: I've read a lot here about fans. I have a 3-pin card with v3.1 on it. For some reason I'm not seeing any firmware version in the software, and I don't see anywhere to control the fans. Can anyone give me advice on how to keep this beast quiet?

Edit 2: Ahh. I DO have the fan control. I turned it to low and it's quiet. I'm hoping it will crank up if it gets hot. But I'm super happy. This is a good piece of hardware.

Thanks for sharing your most recent experience with your 7101A-1. I, too, have the same question about the BLOCK SIZE and SECTOR SIZE settings in the HighPoint GUI. Mine are currently at 64K BLOCK SIZE and 4K SECTOR SIZE. See the photos of this setting and my speed results. I am very curious whether my deviations from what you all posted earlier are due to these settings (and to the unofficial HighPoint driver I'm currently using, which works).
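For the BLOCK SIZE question, it may help to see what a block size does in a RAID 0 array in general. This is a minimal sketch of standard RAID 0 striping, assuming (not confirmed by HighPoint here) that the GUI's BLOCK SIZE is the stripe unit written to one drive before moving to the next. The function name `locate` is hypothetical.

```python
# Generic RAID 0 striping model. ASSUMPTION: "block size" = stripe unit, i.e.
# how many bytes go to one member drive before the next drive is used.
# This is an illustration, not HighPoint's documented on-disk layout.

def locate(offset, block_size, drives):
    """Map a logical byte offset to (member drive index, byte offset on that drive)."""
    chunk = offset // block_size  # which stripe unit the byte falls in
    member = chunk % drives       # stripe units rotate across the drives
    row = chunk // drives         # complete stripes that come before it
    return member, row * block_size + offset % block_size

if __name__ == "__main__":
    KiB = 1024
    block = 64 * KiB  # e.g. a 64K block size
    for off in (0, 64 * KiB, 128 * KiB, 192 * KiB, 200 * KiB):
        print(f"{off // KiB:>4} KiB -> drive, offset = {locate(off, block, 3)}")
```

Under this model, a larger block size means a small request is more likely to be served by a single drive, while large sequential transfers are spread across all drives either way; which setting benchmarks best on this card is something only testing will show.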

I can't figure this out at all. The process for installing to an external USB drive doesn't work, because the 7101A isn't a USB device and isn't detected as one by VMware during the install. I've asked HighPoint and they said they will refer it to their engineers. I'm going to try installing a dummy macOS on the 1 x 1 TB blade and then use its Boot Camp to install Windows. Failing that, VMware is coming out with version 12, which is supposed to be free in October. I'll just use that.


Hi y'all. Returning to my venerable cheese grater after a year or so of focusing on other projects--cool to see all the progress being made with OpenCore and Titan Ridge. Looking forward to diving into some updates!

I managed to snag a 7101A from Amazon Warehouse for super cheap--and seems it was never actually used. It's the updated 2.0 PCB with de-lidded PLX chip and 3-wire fan. Haven't installed the HP drivers to check the firmware revision yet, but did load in two Samsung 970 Evo blades and tested that the card works properly. Not really planning on any RAID (though glad to have the option). Just nice to have up to four blades for JBOD and only needing one PCI slot.

I skimmed through the thread but am wondering if anyone was ultimately successful in putting this in slot 2 with the monster Sapphire RX580 in slot 1. I know I can/should reverse them but would really rather not lose the use of slot 3. And I'm aware that putting the 7101A in slot 2 may present some airflow concerns with the GPU. But I would at least like to try for a while and will just keep an eye on the temps--that is if I can manage to get the fans to clear the underside of the 7101A.

In my first attempt I was not able to get enough separation between the cards. I wonder if this will be harder with the Rev 2 card because it has all the additional standoffs for 42/60mm m.2 blades--guess I could try to remove them.

But before reinventing the wheel I just thought I would ask if anyone is successfully running with this configuration and has any tips--removing screws/brackets, adding spacers, etc.

Thanks!