I personally think AMD is done in the Windows laptop market. They'll be the budget option again. Intel is hyper aggressive right now; undercutting AMD on prices, spending billions to buy TSMC wafers at the same time as AMD or even before AMD, and releasing new architectures at an extremely rapid pace.

too early to write AMD off in laptop space. It isn't their priority, but they are not sitting still either.

iGPU wise they are still more than competitive with Intel iGPU. Apple's iGPU has "bent" the evaluation metrics for laptop iGPUs. AMD isn't on an even with Apple , but they are doing better than Intel.

The space were a system vendor can drop the laptop dGPU and just go with an iGPU is not the "budget laptop option" location.

AMD managed to get the TSMC N6 laptop discrete GPU out the door before Intel did. Intel is buying wafer but for the moment they are not shipping them. [ In part because working to get more solid drivers. ]

Intel is releasing new code names at a rapid pace. Not really new architectures. Rocket Lake got a new name more so to being backported from 10nm-class design to 14nm-class one. Alder Lake -> Raptor Lake probably is not a new architecture. "Raptor Cover" is pretty likely a cleaned up "Golden Cove" with the appropriate laptop and desktop revisions to from the server baseline design of Golden Cove. [ e.g. take out the stuff they are not using that bloats out the die space consumption. ] The 'little' , 'E' cores are reported to be exactly the same ( Gracemont) There is certainly no arch move there. Just lots more of them. ).

Here is a table from videocardz

"....

| VideoCardz.com | Alder Lake | Raptor Lake | Meteor Lake | Arrow Lake | Lunar Lake | Nova Lake |

|---|

| Launch Date | Q4 2021 | Q4 2022 | Q2 2023 | Q4 2023 | Q4 2024 | 2025 |

|---|

| Fabrication Node | Intel 7 | Intel 7 | Intel 4 | TBC | TBC | TBC |

|---|

| Big Core µArch | Golden Cove | Raptor Cove | Redwood Cove | Lion Cove | Lion Cove | Panther Cove |

|---|

| Small Core µArch | Gracemont | Gracemont | Crestmont | Skymont | Skymont | Darkmont |

|---|

..."

Intel 14th Gen Core Meteor Lake Stephen Shankland from CNET takes a tour inside Intel’s Arizona chipmaking fab and shares the first photos of the next-gen mobile chip. While Intel is just about to announce its 12th Gen Core Alder Lake-P/M series next year, the first photographs of test silicon...

videocardz.com

Golden Cove ~= Raptor Cove then have big/small covering two years. Lion Cove/Skymonth clearly cover two years. Intel has some more gap filling than AMD is doing , but the schedule above is basically a two year cycle on architectures.

Intel's bloated P cores and a relatively fixed laptop die space budget means Intel is taking a hit on space they can allocate to iGPU and other functions. if they shrink the P cores with "Raptor Cove" and then throw all of that,

and more , at E cores, then they are not going to catch up in iGPU space.

AMD also put substantive effort into their latest generation into improving battery life in systems that use their chips.

The USB 4 driver update is exclusive to Ryzen 6000 until Zen 4 arrives

www.anandtech.com

AMD is on TSMC N6 and Intel is on N7. This is a step forward from the N7 they have been using. It isn't the N5 that will ship later this year , but it is shipping now. The laptop dGPUs from Intel and AMD probably lean in AMD's favor.

In short, Intel isn't offering the better CPU+GPU package system balance. Most of this "AMD is doomed" is coming myopic CPU drag racing benchmarks. Does AMD have a "Intel Killer" product offering and focus in the laptop space? No. Do they have enough to defend the position with will have carved out by the middle of 2022? Probably yes. Can Intel buy back a few percentage points? Probably. However, that won't be enough to squeeze AMD completely out as long as Intel trails in iGPU performance and AMD CPU cores are "good enough".

AMD probably knows better than Intel that going back to rely on the "budget" systems is probably a dead end.

Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.

www.anandtech.com

Samsung’s chip announcement is light on details and lacks performance claims.

arstechnica.com

Decent chance that Samsung implemented the RNDA2 architecture at least in part by themselves. There isn't a humongous leap between having just one X1 core and going to like 4 X1 and 4 710 cores and having a Windows 11 on Arm system to compete with Snapdraong 8cx gen 3 ( 4 X2 + 4 A78 )

Intel is willing to sacrifice its margins to put AMD back in the rearview mirror. I think they will succeed.

Intel is about to get into a battle with Nvidia on dGPUs. With TSMC in buying EUV ASML machines . With TSMC and Samsung on competing for contract fab design wins. With a AMD+Xlinix team up. Ampere/Amazon/etc on cloud services CPU packages. etc.

If all of that margin squeeze was

only pointed at AMD that would be a bigger deal. However, like the old "Risk" game maxim goes ... don't get into a land war in Asia. Intel is fighting a broad front 'war' against multiple competitors at at he same time. A substantive chunk of Intel's margin scrafice is going to be stopping the "bleed" of percentage ; not buying it all back.

If AMD releases Zen4 in Q4 of 2022, it will mark 2 years since Zen3. In that same time, Intel will have gone from Tiger Lake to Alder Lake to Raptor Lake. Intel is targeting Meteor Lake in 2023.

As stated above. Raptor isn't a arch move. It is far more a "optimize" iteration than a "tick" (process ) or "tock" (architecture) one. Intel hand waving about how they are fully back on the tick-tock model probably wants to posture Raptor Lake as a "Architecture" move. It isn't.

Not sure why you think it's exotic. It's clear that the laptop/desktop world is heading towards big.Little. AMD is actually years behind Intel on this because Zen4 won't be big.Little. As a software developer, big.Little makes total sense to me even in high-power devices.

Has very little to do with the big.little classification and far more to do with the hefty multiple ratio between big.little cores. 8:8 -> 8:16 . The 16 is far more demonstrative that the big's are really suffering from being "too big". Going to something tagged as a 'little" or 'E' because they are being on fab node evolution to save die space at least as much to save energy.

Again, big.Little is here to stay. It's not exotic. big.Little has been mainstream on mobile for over a decade. It makes perfect sense for the vast majority of consumer workloads.

There is little justification why those ratios should be the same for desktop / laptop as they were for handheld mobiles that basically unused most of the time.

On a day to day basis, most consumers will notice a faster single-thread CPU more than a multi-CPU thread. big.Little allows Intel/Apple/ARM to design and implement a few really big high-performance cores and then let little cores work on background tasks.

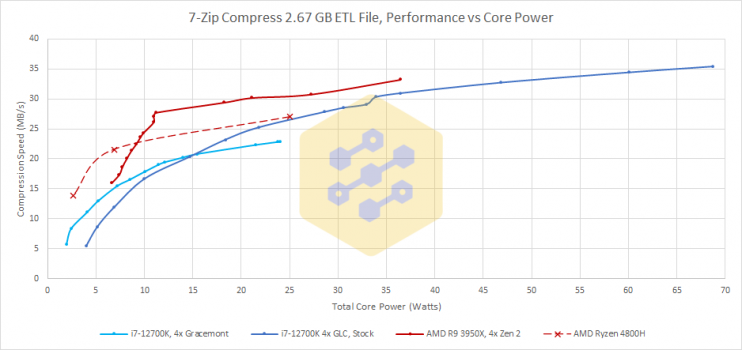

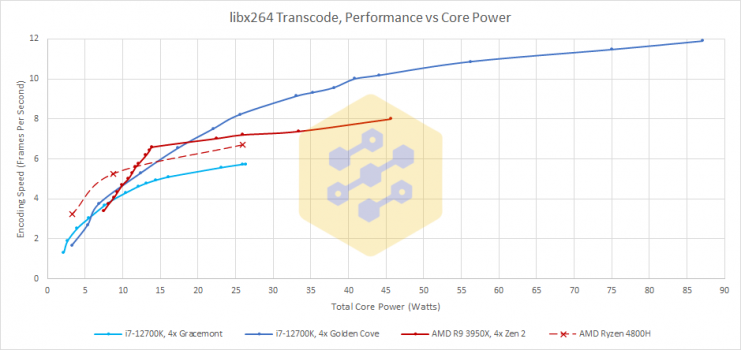

What folks tend to notice more on their laptops is the battery life. Intel is throwing power consumption at hitting those single thread drag racing marks.

We'll see as the battery reviews come out for Gen 12 and Ryzen 6000 systems that are not high end gamer focused as to who is doing the better job at delivering better battery life.

In addition, Intel's E cores have the performance of Skylake at 1/3 the wattage. That's damn impressive as long as you don't compare to Apple Silicon.

Skylake was on 14nm 2014-6 and 2019-22 Gracemont cores are on Intel 7. If have a 50% node shrink , then about 50% of the power savings is pretty close to a "no brainer" competency demonstration and not "amazing". Intel put in some significant updated design work here. But they also are comparing back to a very old, relatively unoptimized node.