Intel Alder Lake vs. Apple M1

- Thread starter leman

It's funny: A78 cores at 2.2-2.6 GHz land around 1-1.5 W in SpecInt, depending on the node and all.

Zen 3 is a year old and Zen 4, barring delays, will be here at the end of the year. That's a reasonable cadence. Intel's next chip, Raptor Lake, will be a reorganized Alder Lake, similar to the A15 relative to the A14. It's important to remember how much ground AMD had to make up on Intel; that Zen 3 surpassed them by as much as it did surprised everyone, including AMD. Further, AMD does have Zen 3+ coming to the desktop soon. In laptops they're still competitive in perf/W. Finally, it's important to remember that Intel's total solution is not quite as cheap as it first appears: DDR5 is expensive and necessary if Alder Lake's cores are to really stretch their legs, and motherboard prices have gone up.

The issue is that Alder Lake's Golden Cove cores are still too big and power hungry to match AMD in core count; AMD's performance cores are still too far ahead of Intel's here. Thus Intel introduced midrange Gracemont cores to increase the number of cores that fit on a single die without blowing up power or die size. These midrange cores are actually quite nifty, but they should not be confused with traditional little or E-cores. This is what @Andropov and @leman were trying to explain to @senttoschool earlier in the thread. Yes, Alder Lake is heterogeneous in core size (and, unfortunately, in ISA; that's one indicator that Alder Lake's design was a bit rushed), but the raison d'être of Intel's heterogeneity is different from, say, Apple's. In some ways it is more similar to ARM's tri-level designs … just without the little cores. The focus of such midrange cores is multithreaded throughput perf/W, while the focus of little cores is to keep housekeeping threads off the main cores as efficiently as possible. Midrange Gracemont and A7x cores *can* do that housekeeping, just as A5x and Icestorm cores can be used for multithreaded throughput, but in neither case is it their primary function. (Icestorm is a weird case because it actually sits somewhere between A5x and A7x cores. But that's a whole 'nother topic.) The bottom line, though, is that while AMD may adopt heterogeneous CPUs, they don't face quite the same problems as Intel. They might decide that midrange cores make sense for them too, but they might not.

Overall, I wouldn't characterize the relationship between Intel 12th Gen and AMD Zen 3 as a lack of progress from AMD, but as Intel finally unf***ing themselves and moving to counter AMD's surprise resurrection.

MediaTek's Dimensity 1200 on N6 has four A78s, one of which clocks up to 3 GHz, and hits about 1000 in GB5 ST, albeit at 2.2 W of core power, per Anandtech. Still ridiculously impressive relative to Gracemont, IMO.

I think Intel will easily beat MediaTek and Qualcomm CPUs in terms of power efficiency, if that comparison even makes sense (remember, power efficiency is not the same as low absolute power consumption). Where it lands relative to the M1 remains to be seen when we have better data. I think the most efficient Alder Lakes will be the P and U versions, which according to Intel engineers are optimized for power, while the H parts seem to have the same performance-oriented profile as the desktop parts.

Well, one thing to bear in mind is that Nuvia-designed Phoenix cores won't arrive until 2023, and second of all:

Any time a design has more cores, running highly parallelized benchmarks (parallel to a degree not necessarily present in the real world) with each core at lower voltage and frequency, as opposed to fewer cores of virtually any kind running near their peaks, offers a much better shot at superior aggregate "performance per watt" at a given point on a power/performance graph. Performance scales nearly linearly with clock rate, but power consumption across the frequency range does not, so even with an efficient architecture you can only get so far against someone using this strategy, as long as their energy efficiency at modest frequencies isn't completely worthless.

Various firms can take advantage of this by clocking 10-20 dopey (inefficient, if performant at their peaks) cores in reasonable frequency ranges and showing "superior energy efficiency" at a single absurd point. But IMO this still elides a great deal.
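The argument above rests on power rising much faster than clock speed. A minimal sketch under a deliberately crude assumption (per-core dynamic power proportional to f³, i.e. C·V²·f with voltage rising roughly linearly with frequency, and no static or leakage power) shows why "more cores, lower clocks" wins perf/W on a perfectly parallel benchmark. The constant `k` and the core/clock pairs are hypothetical, not measurements of any real chip.

```python
"""Toy model: wide-and-slow vs. narrow-and-fast on a perfectly parallel job."""


def throughput(cores: int, freq_ghz: float) -> float:
    """Idealized throughput: scales perfectly with cores and linearly with clock."""
    return cores * freq_ghz


def power(cores: int, freq_ghz: float, k: float = 0.5) -> float:
    """Assumed dynamic power per core ~ k * f**3 (arbitrary units, no static power)."""
    return cores * k * freq_ghz ** 3


def perf_per_watt(cores: int, freq_ghz: float) -> float:
    return throughput(cores, freq_ghz) / power(cores, freq_ghz)


if __name__ == "__main__":
    # Same idealized throughput (24 units), very different power.
    for cores, freq in [(8, 3.0), (16, 1.5)]:
        print(f"{cores:2d} cores @ {freq} GHz: "
              f"throughput={throughput(cores, freq):.1f}, "
              f"power={power(cores, freq):.1f}, "
              f"perf/W={perf_per_watt(cores, freq):.3f}")
```

Under this model, doubling the cores and halving the clock keeps throughput the same while cutting power by 4x. Real chips add static power and imperfect scaling, which erode that advantage.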

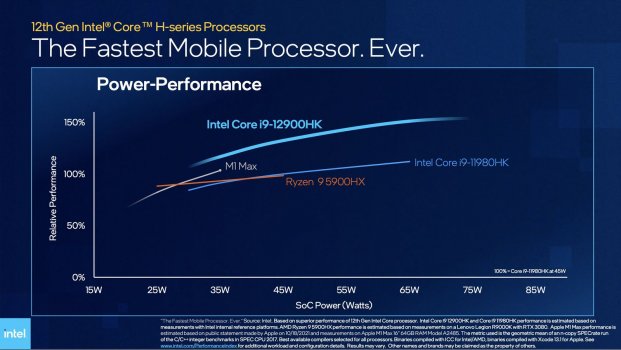

This is essentially what Intel have done with the 14-core 12900HK, with that single comparison of it "beating" the M1 Max/Pro at 35-40 watts or so (and only marginally at that). The Intel marketing graph I'm referencing is at the bottom of the post.

This is also why I prefer comparing on a core-to-core basis, because it teases out the least malleable variables - who actually has an efficient microarchitecture that is not reliant on boosting to 4.7-5GHz to realize performant ST indices? What do the power consumption figures actually look like per core?

Jesus, even MediaTek and Qualcomm with crappy ARM IP (only crappy relative to Apple, really) can get an X2 at 3 GHz into the 1200-1275 range at 3.5 and 4.2 W respectively.
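The per-core comparison argued for here boils down to score per watt of core power. A minimal sketch using only figures quoted in this thread (GB5 single-thread scores and core power). Pairing the 1200-1275 range with the 3.5/4.2 W figures as a best/worst case is an assumption, since the post doesn't say which score goes with which chip.

```python
def points_per_watt(score: float, core_watts: float) -> float:
    """Single-thread benchmark points per watt of core power."""
    if core_watts <= 0:
        raise ValueError("core power must be positive")
    return score / core_watts


# (label, GB5 ST score, core power in W) as quoted in the thread
samples = [
    ("Dimensity 1200 A78 @ 3 GHz", 1000, 2.2),
    ("Cortex-X2 @ 3 GHz, best case", 1275, 3.5),
    ("Cortex-X2 @ 3 GHz, worst case", 1200, 4.2),
]

for label, score, watts in samples:
    print(f"{label:32s} {points_per_watt(score, watts):6.1f} pts/W")
```

By this metric the lower-clocked A78 edges out the faster X2 even in the X2's best case, which shows how a peak score alone hides the power spent to reach it.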

If Apple wanted to scale up a few extra performance cores and cut back on GPU area or encoding crap, they could, and it would end facile graphs like the one from "Team Blue" below. AMD will actually do exactly this with a 16-core Zen 4 mobile solution, and frankly Zen 3+ as it stands would certainly beat the 12900HK in the 80-120 W range if you doubled its cores to 16. Whatever Qualcomm comes up with will be in a similar position in terms of core scaling and efficiency, and I'm told the Phoenix cores will be more efficient than the A720 and X3 anyway (though the SoC that uses them in 2023 will not be a heterogeneous CPU), so this too will be competitive and a bad time for Intel.

Plausibly accurate but also somewhat misleading graph below:



Joking aside. Geekbench is garbage. Real world workloads are best.

Lol, Geekbench is in no meaningful sense garbage.

Zen 3+ à la Rembrandt very much was a refresh even on desktop; the desktop SKUs just aren't coming until Q3/Q4. The 3D-cache 5800 isn't even Rembrandt; it's just the 5800 with TSV-stacked cache and lower clock rates. Rembrandt is about replacing Vega graphics with RDNA2 and doing so on the slightly better N6 node. That's still coming to replace the 5600G/5700G APUs, just later than on mobile.

Zen 4 will be 2 years after Zen 3. I don't think that's a reasonable cadence. That's slow compared to Intel's current roadmap.

I wouldn't use the word "reorganized" to describe A14 to A15. That would imply that they use the same cores but just organized differently. We see from Anandtech's breakdown of the A15 that the cores did in fact change, especially the efficiency cores which received a massive upgrade.

Raptor Lake is expected to have upgrades to ADL cores.

Zen 3+ desktop isn't a refresh. It's a single SKU (5800X) that has 3D cache glued on. It's targeted at gaming only as its clock speeds needed to decrease in order to accommodate extra heat. Perhaps you're confusing it with AMD's 6nm mobile Zen3 refresh?

You don't need DDR5 for Alder Lake. It can work with DDR4. In some applications, DDR4 was faster than DDR5 and vice versa.

This isn't an issue; it's a design choice. Golden Cove beats Zen 3 in ST by nearly 20%. That's a 1-2 generation difference, which is why it's big.

Again, this is a design decision. ADL is primarily aimed at laptops but works well on desktop too. On desktop, the little cores do indeed massively boost MT in a smart way. I don't see anything wrong with the design vs. AMD when the results speak for themselves.

I don't think they were trying to explain it to me. I'm well aware of ADL's power ratings since I invest in semiconductor companies and follow every product closely. I'm also typing on an M1 Pro laptop right now with an A15 iPhone 13 next to me.

@Andropov and @leman were a bit confused. They were trying to say that ADL's little cores aren't designed to be as low power as little cores inside Apple Silicon. I was merely trying to point out that ADL was designed to compete against AMD, and to improve efficiency on laptops. I think ADL accomplishes both. It does not matter if ADL's little cores aren't "traditional" little cores.

The computer world has moved to big.LITTLE in virtually every category except servers. big.LITTLE makes too much sense for phones, laptops, and desktops. It's not an advantage for AMD that they don't have a big.LITTLE design right now.

I don't think people are saying AMD is lacking progress. I think people are saying that ADL is hugely impressive in the x86 world and comfortably beats AMD's products on desktop and laptops at the moment.

I expect Zen4 to beat Raptor Lake in perf/watt in Q4 of this year but I expect Meteor Lake to surpass Zen4 two quarters later. If Zen5 takes two years to come out like Zen3 to Zen4, I think AMD will be in huge trouble.

Ampere Altra Is First ARM-based 64-bit Server Processor, Packs 80 Cores, Challenging Intel Xeon And AMD EPYC

Ampere, a lesser-known company, has come up with an 80 Core 64-Bit Processors designed primarily for high-end servers and relevant remote cloud

appuals.com

I think Intel will easily beat MediaTek and Qualcomm CPUs in terms of power efficiency, if that comparison even makes sense (remember, power efficiency is not the same as low absolute power consumption). Where it lands relative to the M1 remains to be seen when we have better data. I think the most efficient Alder Lakes will be the P and U versions, which according to Intel engineers are optimized for power, while the H parts seem to have the same performance-oriented profile as the desktop parts.

Energy efficiency is the term you are looking for. And I've seen those quotes. I'm sure the scheduling could differ, along with default clock rates and maybe the uncore power, but unless drastically different binning techniques are being used for the voltages per SKU, I don't know how much it will differ in the end. You can still configure the desktop processors to target more efficient use of power on Linux or Windows, and we have a pretty good glimpse of what the actual core power consumption looks like for each ADL microarchitecture.

I do tend to agree the 2+8 SKU will prove modestly competitive and a massive upgrade over TGL and ICL which were horrible IME/IMO.


I joked to a buddy this summer, with the unveiling of ADL, that Intel Thread Director is like an iron lung for polio.

As a (former) algorithms researcher, I think machine learning sounds like massive overkill for such a simple scheduling problem. On the other hand, it could plausibly improve efficiency just enough to justify itself.

Tasks that need realtime priority are already annotated. For everything else, scheduling is just a matter of deciding whether to finish something non-urgent sooner or later. And, unlike with heterogeneous memory, scheduling mistakes with heterogeneous compute are easy to fix. Once the scheduler realizes it has made a mistake, it can correct the situation in a matter of milliseconds.
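The "easy to fix" argument can be illustrated with a toy, hypothetical scheduler (not how Thread Director or any real OS scheduler works): each tick it looks at every thread's load from the previous tick and migrates it between P- and E-cores, so a misplaced thread is corrected one tick later. The thresholds `hi` and `lo` are made-up values.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Thread:
    name: str
    realtime: bool = False
    core: str = "E"  # hypothetical policy: non-realtime threads start on an E-core


def reschedule(threads: List[Thread], load: Dict[str, float],
               hi: float = 0.8, lo: float = 0.3) -> None:
    """One scheduler tick: place threads based on last tick's observed load (0..1)."""
    for t in threads:
        if t.realtime:
            t.core = "P"   # realtime work is already annotated: always a P-core
        elif load[t.name] > hi:
            t.core = "P"   # saturated its core last tick: promote
        elif load[t.name] < lo:
            t.core = "E"   # mostly idle: demote to save power
        # between lo and hi: stay put (hysteresis avoids ping-ponging)


threads = [Thread("compile"), Thread("indexer"), Thread("audio", realtime=True)]
reschedule(threads, {"compile": 0.95, "indexer": 0.10, "audio": 0.40})
print({t.name: t.core for t in threads})
# {'compile': 'P', 'indexer': 'E', 'audio': 'P'}
```

A real scheduler would also weigh migration cost, cache warmth and thermal headroom; the sketch only shows that a wrong placement is visible and reversible within one tick.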

Joking aside. Geekbench is garbage. Real world workloads are best.

I’ll have to defend the honour of John Poole here.

Geekbench is a pretty damn good, and transparent, benchmark of its kind. It doesn’t try to measure everything, it focusses on core performance with a fairly wide range of subtests, and is also cross platform and thus allows comparisons between architectures.

Its drawback is mainly that it produces an aggregate ”figure of merit” and that world+dog LOVES to draw exaggerated conclusions from minuscule differences in aggregate score. But this is not a problem with the benchmark per se; it’s a problem with how people use benchmarks.

(Note that it provides all the subtest results.)
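To see why tiny differences in an aggregate score say little, here is a sketch that combines subtest scores with a plain geometric mean. Geekbench's actual section weighting is not reproduced here (an assumption), and the subtest scores are hypothetical.

```python
from statistics import geometric_mean

# Hypothetical subtest scores for two machines (not real Geekbench data).
machine_a = [1000, 1000, 1000, 1000]
machine_b = [1300, 800, 1100, 850]

agg_a = geometric_mean(machine_a)
agg_b = geometric_mean(machine_b)

print(f"aggregate A: {agg_a:.1f}, aggregate B: {agg_b:.1f}")
print(f"aggregate gap: {100 * (agg_b / agg_a - 1):+.1f}%")
for i, (a, b) in enumerate(zip(machine_a, machine_b), start=1):
    print(f"subtest {i}: {100 * (b / a - 1):+.0f}%")
```

Machine B is 20% slower on one subtest and 30% faster on another, yet the aggregates differ by under 1%; the subtest results are where the information is.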

Application tests are great, but their results typically lack transferability to other applications, or even to just doing another operation in the same application! Also, you typically have no idea what compilers, much less compiler settings, were used for the application on different platforms. Nor do they necessarily even run the same underlying code. You get a number, but have no idea what it means in relation to another number.

Personally, I’d like to see some memory subsystem tests in Geekbench. Like it or not, the benchmark is used for platform comparisons, and the memory subsystem is very important to the performance of a modern SoC. Maybe they will return in revamped/improved form in Geekbench 6.

I think Geekbench would be a much better benchmark if it also supported testing sustained performance. It favors systems optimized for burst…

If you want memory subsystem tests I tend to think SpecInt2017 fits that criterion quite well.
I remember seeing somewhere that Geekbench 6 would tackle sustained performance, but I can't find it anymore by searching (or on John Poole's Twitter, where I thought I saw it). Oh well.

I somewhat agree, but burst performance is still relevant, and one can always loop a shorter benchmark and average out the scores.
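Looping a short benchmark, as suggested above, can be sketched like this. The workload and the summary fields are hypothetical stand-ins; a falling last/first ratio over a long run would hint at thermal or power throttling that a single burst run hides.

```python
import statistics
import time
from typing import Callable, Dict, List


def short_workload() -> float:
    """Hypothetical burst benchmark: returns a score (higher is better)."""
    start = time.perf_counter()
    sum(i * i for i in range(200_000))
    elapsed = time.perf_counter() - start
    return 1.0 / max(elapsed, 1e-9)


def run_sustained(bench: Callable[[], float], duration_s: float) -> List[float]:
    """Repeat `bench` until `duration_s` has elapsed (always at least once)."""
    scores = [bench()]
    deadline = time.monotonic() + duration_s
    while time.monotonic() < deadline:
        scores.append(bench())
    return scores


def summarize(scores: List[float]) -> Dict[str, float]:
    return {
        "runs": len(scores),
        "first": scores[0],
        "mean": statistics.fmean(scores),
        "last": scores[-1],
        "last_over_first": scores[-1] / scores[0],
    }


if __name__ == "__main__":
    print(summarize(run_sustained(short_workload, duration_s=2.0)))
```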


Re: multiples, oh, they're going to. From here on out, the scaling will mostly be in E-cores for the next 3-5 years, according to the rumors (certainly for the top SKU). I'm talking about 8/32 P/E ratios for Arrow Lake, which is 15th gen, and 8/24 for Raptor Lake and Meteor Lake, which are the 13th and 14th generations respectively.

"... This theory is way out there, but it's plausible because AMD doesn't have a formidable low-power CPU core architecture to rival "Gracemont ..."

AMD wasn't 100% clear on how Bergamo gets to a 128-core count. That could be done very straightforwardly with 16-core CCDs (8 * 16 = 128). So the Zen 4c core would be one that allows them to double the number of cores on a single CCD of roughly the same size, or only incrementally bigger.

That seems more likely than Bergamo being some huge, bloated high-CCD-count solution (e.g., 16 * 8 => 128).

There is a pretty good chance that Zen 4c isn't as comprehensive as the mainstream Zen 4 core. They are probably getting rid of something to crank the core count higher for a specifically selected set of workloads (e.g., throwing out high-performance computing, matrix, and media while going after HTTP serving, double-float JavaScript, and Java). It also wouldn't be surprising if there are some clock caps on these. Average data center workloads don't need max single-thread drag-racing speed. (If you have dozens of different active users being juggled, there are no "bursty" workloads where the work runs, the whole SoC goes idle, and then another race-to-sleep begins.)

There is a group of folks speculating that if AMD can make a mainstream Ryzen with a Zen 2/3/4 CCD, then they could make consumer products with Zen 4c/5c/etc. cores. [The Moore's Law Is Dead guy threw that spitball out there in a session without much direct evidence driving it at all.] I wouldn't bet the farm on those theories, at least not without seeing how AMD 'cut down' the 4c.

Intel was supposed to do a "base station" server version of Gracemont with up to 24 cores to follow up the Atom P59xxB server products ("Snow Ridge"). In 2020 that was supposed to be on the 'old' Intel 7. Intel hasn't done high-core-count Atoms for consumers, so that isn't really setting a trend for AMD to follow either.

If you are stuck on "N7" and your competitors are on "N5", it doesn't have to be sustainable, just a good-enough stopgap. If with "Intel 4" they are still adding more multiples to the E-core count, then yeah, that is a problem.

Multiple chip arrives at the desktop/laptop level. Intel is already shipping evaluation/certification multi-chip to the big internet services providers.

It doesn't seem like the top model will be gaining any more P-cores for some time. They are basically doing the inverse of Apple. Not that the core nomenclature necessarily means anything on an absolute scale (an Apple Firestorm core will probably be more energy efficient than whatever Intel has for several years to come), but you get the idea.

The only consistent commentary I’ve seen on geekbench is that it’s a highly accurate and sensible benchmark when it supports one’s argument, and complete ******** when it doesn’t.

The lack of a sustained workload does suck, there is a Windows penalty, and the previous "crypto" workload boosts for Intel processors with AVX-512 were ridiculous. But on net, for boost workloads, if you look at the ST integer results it gives you a decent idea of peak performance potential in a single number that is easy to obtain, since the program has a free tier.

Anytime a design has more cores, running highly-parallelized (to degrees not necessarily present in the real world) benchmarks with each respective core at lower voltages, frequencies (as opposed to fewer cores of virtually any kind running towards their peaks) will offer a much better shot at yielding superior "performance per watt" in aggregate for a given point on a power-to-performance graph.

I don't think it's that simple. Power efficiency usually has a "sweet spot", so running (to use an exaggerated example) a hypothetical 100 cores at 30 MHz instead of a single core at 3 GHz will not be optimal, since at some point the base idle consumption of all those cores dominates, even if you have a perfectly parallelizable workload.
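The "sweet spot" objection can be added to a toy power model by giving every active core a fixed static power (an assumption; all constants are arbitrary). Spreading a fixed amount of work across more, slower cores cuts dynamic power but adds static power, so there is an optimal core count rather than "more is always better".

```python
"""Toy model: fixed work spread over n cores, each with dynamic + static power."""


def total_power(cores: int, target: float,
                k: float = 0.5, static_w: float = 2.0) -> float:
    """Power for `cores` cores to jointly deliver `target` throughput.

    Assumes perfectly parallel work (throughput = cores * freq), so each core
    runs at freq = target / cores; dynamic power ~ k * f**3 per core.
    """
    freq = target / cores
    dynamic = cores * k * freq ** 3   # shrinks as the work is spread out
    static = cores * static_w         # grows with every extra core
    return dynamic + static


def best_core_count(target: float, max_cores: int = 200) -> int:
    """Brute-force search for the core count with the lowest total power."""
    return min(range(1, max_cores + 1), key=lambda n: total_power(n, target))


if __name__ == "__main__":
    target = 24.0  # same idealized throughput as 8 cores @ 3 GHz
    for n in (8, best_core_count(target), 100):
        print(f"{n:3d} cores @ {target / n:.2f} GHz -> power {total_power(n, target):.1f}")
```

With these made-up constants the minimum lands at 19 cores around 1.26 GHz; both 8 fast cores and 100 slow ones need far more power for the same work.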

Now when you start adding heterogeneous cores it becomes even more complicated, since other factors such as area efficiency and the OS's scheduling and core migration capabilities come into play, and different cores will have different efficiency for different workloads.

I think many people still underestimate the impact that the introduction of heterogeneous architectures to x86 will have in the future. The "thread director" is essentially a way to achieve tighter integration between hardware and OS. Once the software support in Windows and Linux matures, Intel will have a lot of new knobs to fine-tune CPUs for different purposes just by changing thread director parameters (which is apparently what they have done for the P and U Alder Lakes). This goes well beyond just pushing background tasks to efficiency cores.

If Apple wanted to scale a few extra performance cores and dip on out GPU area or encoding crap, they could, and it would end the facile graphs like the one from "Team Blue" below.

Their only real advantage for doing that would be higher transistor counts on die thanks to TSMC's 5nm process. But that advantage may be short-lived if Intel can execute on their roadmap.


Plausibly accurate but also somewhat misleading graph below:

The graph is accurate in the sense that Intel used the ICC compiler to give themselves a free 45% speed boost relative to their AMD and Apple competition. So a little more than somewhat misleading even if the numbers are “real”.

If you want memory subsystem tests I tend to think SpecInt2017 fits that criterion quite well.

Yes, there are a few subtests that reach all the way out to main RAM.

When I did/do these things myself, I just measured LinPack performance on progressively larger matrices to gauge where the cliff edges were in the cache to RAM hierarchy along with a STREAM run. The last few years, Andrei Frumusanu did great work in characterising memory subsystem performance, in far more detail than I've ever tried on my own. Alas, he no longer does these tests (or at least doesn't publish them).

Given how instrumental the memory subsystem is to the overall performance of modern day SoCs, test suites are in remarkably short supply.
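A rough version of the size sweep described above can be sketched with the Python standard library. Note the substitution: this times a bulk memory copy (similar in spirit to STREAM's Copy kernel) rather than running LinPack or the real STREAM benchmark, and Python call overhead dominates at the smallest sizes, so only the relative drops between sizes mean anything. Where the cliffs appear depends entirely on the machine's cache sizes.

```python
import time
from typing import List, Tuple


def copy_bandwidth_gbs(n_bytes: int, repeats: int = 5) -> float:
    """Best-of-N bandwidth (GB/s) for copying an n_bytes buffer into another."""
    src = bytearray(n_bytes)
    dst = bytearray(n_bytes)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src  # same-length slice assignment: a single bulk copy in C
        best = min(best, time.perf_counter() - start)
    best = max(best, 1e-9)  # guard against a zero timer reading
    # STREAM convention for Copy: count bytes read plus bytes written.
    return 2 * n_bytes / best / 1e9


def sweep(min_kib: int = 4, max_kib: int = 64 * 1024) -> List[Tuple[int, float]]:
    """Double the buffer size each step; bandwidth drops hint at cache edges."""
    results = []
    size_kib = min_kib
    while size_kib <= max_kib:
        results.append((size_kib, copy_bandwidth_gbs(size_kib * 1024)))
        size_kib *= 2
    return results


if __name__ == "__main__":
    for size_kib, gbs in sweep():
        print(f"{size_kib:>8} KiB: {gbs:7.1f} GB/s")
```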

PC World has published an article comparing the performance of Apple's M1 Max and Intel's 12th-gen Core i9 flagship.

Laptop brawl: Apple's M1 Max vs. Intel's 12th-gen Core i9 flagship

Bengals vs. Chiefs, Bud Light vs. Bud, Apple M1 Max vs. Intel 12th-gen Core i9—we do our part to carry on the long tradition of pointless partisan squabbling and rabid one-upsmanship.

www.pcworld.com

So the M1 Max was crushed.

I haven't read it, but cmd-f on "battery" and "efficien" found nothing.

The article was half tongue in cheek, but no they didn’t really talk about power except a slight nod in the beginning to acknowledge that maybe the two laptops are in slightly different weight classes, quite literally.

The Macworld version of the article was better and basically mirrors the conclusions of everyone else: yes the i9 wins most of the benchmarks, but at the expense of massive power draw which is a big asterisk. And, in this case, the Mac is also actually cheaper too.

Fact-checking the benchmarks: Intel's Alder Lake Core i9 vs Apple's M1 Max

Intel's latest chip is faster than Apple's but there are caveats.

If you want memory subsystem tests I tend to think SpecInt2017 fits that criterion quite well.

SPECFloat, I think, actually has the more demanding memory tests, which is why Apple’s M1 Pro/Max gets such crazy good scores on some of the subtests.

When I did/do these things myself, I just measured LinPack performance on progressively larger matrices to gauge where the cliff edges were in the cache to RAM hierarchy along with a STREAM run. The last few years, Andrei Frumusanu did great work in characterising memory subsystem performance, in far more detail than I've ever tried on my own. Alas, he no longer does these tests (or at least doesn't publish them).

Sadly (for us) he’s also no longer with Anandtech. Like many a reviewer of a similar caliber he’s gotten swooped up by a tech company (don’t know who).


The graph is accurate in the sense that Intel used the ICC compiler to give themselves a free 45% speed boost relative to their AMD and Apple competition. So a little more than somewhat misleading even if the numbers are “real”.

45%? How so? For the M1 they used the Xcode compiler, which is the most optimized available for the M1.

Xcode basically just uses vanilla clang. ICC uses clang too, but with an exclusive front end and a lot of other optimizations that Intel doesn’t upstream (totally legal to do). Intel themselves claim a 48% uplift in SpecInt 2017 over standard clang (on Intel chips, of course), which almost perfectly matches the 45% increase in SpecInt 2017 scores that we see on the graph. We know the 11th-gen Tiger Lake vs. Apple M1 Max scores from Anandtech, where SPEC was compiled using standard clang -Ofast for both: the M1 beat the 11th gen by 37%. In the figure in @BigPotatoLobbyist’s post, @Andropov had previously measured the Tiger Lake chip beating the M1 Max by 8%, so a 45% difference, which is almost perfectly concordant with Intel’s own claims:

Intel® C/C++ Compilers Complete Adoption of LLVM

Next-generation Intel C/C++ compilers are even better because they use the LLVM open source infrastructure. I discuss what it means for users of the compilers, why we did it, and the bright future.

The relevant graph is down near the bottom. There are some caveats: the Xcode version of clang is older and less optimized than the one mentioned in the link above, and it’s ARM vs. x86 clang. But overall it’s scarily similar to Intel’s compiler claims. @Andropov thinks there might also be flag issues.
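The arithmetic above chains two ratios through the M1 Max. A small sketch, assuming the M1 Max's score is the same in both comparisons (not established in the thread): adding the percentages gives the 45% quoted, while multiplying the ratios gives about 48%. The function name is hypothetical.

```python
def implied_compiler_uplift(m1_over_tgl_clang: float, tgl_over_m1_icc: float) -> float:
    """Implied TGL(ICC) / TGL(clang) ratio, chaining both results through the M1 Max.

    (TGL_icc / M1) * (M1 / TGL_clang) = TGL_icc / TGL_clang,
    valid only if the M1 Max score is the same in both comparisons.
    """
    return tgl_over_m1_icc * m1_over_tgl_clang


ratio = implied_compiler_uplift(1.37, 1.08)  # M1 +37% (clang), TGL +8% (Intel's graph)
print(f"multiplicative: {ratio:.3f}x, i.e. about {100 * (ratio - 1):.0f}% uplift")
print(f"additive shortcut: {37 + 8}%")
```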


Xcode just uses vanilla clang basically.

Source? I'm sure they optimized the backend in particular as much as possible.

The ICC uses clang too but has an exclusive front end and a lot of other optimizations that they don’t upstream (totally legal to do). Intel themselves claim a 48% uplift in SpecInt 2017 over standard clang (on Intel chips ofc) which almost perfectly matched the 45% increase in SpecInt 2017 scores that we see on the graph.

Even if that is true, it doesn't mean it's possible to get an additional 45% out of Apple's optimized Xcode compiler on the M1 too. I think it's only fair to use the best compiler available for each platform.

I think Geekbench would be a much better benchmark if it also supported testing sustained performance. It favors systems optimized for burst…

For that you can purchase the pro version.

The lack of a sustained workload does suck, and there is a Windows penalty

I haven't seen such a penalty when comparing results between macOS and Boot Camp.

Even if that is true, it doesn't mean it's possible to get an additional 45% out of Apple's optimized Xcode compiler on the M1 too. I think it's only fair to use the best compiler available for each platform.

Depends on what you want to compare, really. It looks as if the Intel team fine-tuned the compiler parameters to get the best scores on the individual tests while leaving default build settings for the M1. That approach is fine for an HPC workload, where you have one-of-a-kind software that runs on a specific target machine, so of course you'll fine-tune the settings. But it doesn't really work for the common case where software is precompiled and distributed in binary form. Besides, one can argue that leveraging an advanced auto-vectorising compiler is nice and all, but at that point you are not measuring the performance of the hardware, but the performance of the compiler.
