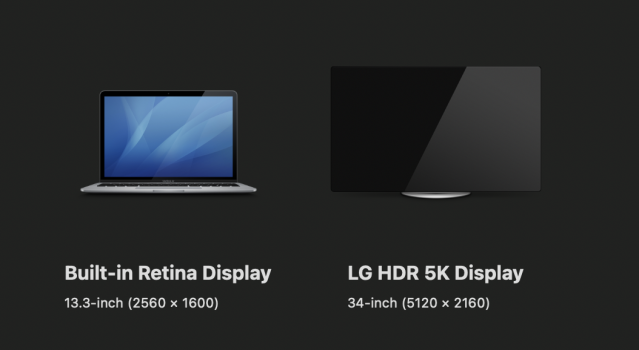

M1 MBP & LG 34 inch ultra wide 5K2K

- Thread starter: flapflapflap

- Tags: 5k ultrawide mbp

No fix in the latest 11.5 beta 2 - it's frustrating.

I just vented my frustration in a tweet. Sometimes Support gets back on these, but I expect no information even if they do.

How many people are on this thread? And how many others have viewed this thread who have the same problem, or want to buy one of these monitors? There must be a way to get Apple's attention on this once and for all. How can we all coordinate in a way that will be heard and get Apple to take action? Or at least get some kind of valid response? Any ideas? The 5k2k is literally the only ultrawide monitor that has a hope of rendering text half decently on macOS, and I cannot work with the available resolutions.

federighi@apple.com

Thanks, maybe I'll give Tim a quick call and get him to take care of it.

> The number of users who have a 5k2k display and M1 mac is ridiculously small for apple to care. They will just advise us to upgrade to the M2 mac that will have beefier GPU to support the higher scaled resolution. Sorry but we got 1st gen scr*&ed.

Fair enough, I get that, but it's more than just this display. Apple has been systematically preventing external monitors from displaying OS X optimally for many, many years (with the exception of the LG 5K 27" and 4K 24", which I suspect Apple is benefiting from financially). This issue is just another step in that process. From everything I've read, there is no technical reason why the M1 mini can't do practical and usable scaled resolutions for almost all displays, or allow font smoothing/sub-pixel anti-aliasing for non-retina displays, which now seems to be impossible. Retina displays are great; I would buy one in a second if it existed at 34 or 32 inches, worked correctly with new Macs, and had a somewhat reasonable price.

Anyways, obviously in vain, but I was just hoping more people would want to try to find a way to get some increased visibility and put some pressure on Apple about this stuff.

You can be surprised, sarcastic guy. I wrote to them (both) and have actually received some calls/answers/help.

You are funny. You call for a riot and then don't have the balls to write one email.

Relax, dude, the intention was not to offend. I seriously thought you were joking about directly contacting the Senior VP of Apple engineering. Perhaps you could have provided some context in your reply rather than just the supposed email address of an Apple SVP. Rather surprising.

lol can't believe Apple went from "we're working on it!" to never getting around to it and just deleting the support article altogether. Yikes.

Anyways, guess I'll be putting this monitor on craigslist, smh.

Following. I have this monitor and have been thinking about getting an M1 Mini for myself. But the next upgrade for my work laptop is the 13" M1 MacBook Pro. I only ever use this monitor at 3840x1620 scaled; from a skim of the last 2 pages here I /think/ that works?

This monitor is "weird." When it was released, there were a lot of issues getting it supported. I can't use mine over TB, or it crashes every few days and I have to unplug and replug the power to get it working again. I've been using the Moshi USB-C to DisplayPort cable from Apple, and it's been flawless for the past few weeks on my 16" Intel MacBook Pro.

Just chiming in here. I bought an M1 mini a month or so ago, along with a Dell U4021QW display. Then I found this forum and tried everything on here to fix the problem... However, with no success, I decided to pick a resolution that seemed as good as possible and run with it. Here we are a month later: I've been using SwitchResX to work at 4096x1728 @60Hz for a good few weeks, and I have to say I love it. Yes, OK, if I pixel-peep, the text is a teeny bit fuzzy, but it's really not bad; just as good as the 1440p display on my PC. I have no problem reading emails and working in high-end graphics apps all day long (Adobe, Cinema 4D, Capture One, etc.).

I had to set the monitor resolution to 'pixel-for-pixel' (which is a Dell option) and I used 'Font Smoothing Adjuster' set to 'light'.

In some ways the display seems to have improved over time, as if it has settled into its resolution better than it did at first... Either way, I'm very pleased with it, and I'd be happy to stick with it long-term if it weren't for the fact that I'll be getting an M2 mini the minute they hit the shelves, because I bloody love this one.

Cheers

Hi guys, I stumbled on this thread from reddit. I have done a ton of testing in this area, and want to share what I have learned. Also, apologies in advance as I haven't read this thread in its entirety yet.

TL;DR: I am confident there is NOT going to be any sort of software/driver fix from Apple for those on here waiting for potential access to the highest "looks like" fractional retina scaling level.

First, you need to understand how macOS HiDPI works, and how it differs from Windows 10 and Linux. On Windows 10 and Linux, to go from 1x to 1.5x scaling, the output resolution is kept at the native 5120x2160 and the display elements themselves are just scaled up. That is easy on the GPU, but prone to graphical glitches (like the zoom setting in a web browser). On macOS it is totally different. There are 3 modes:

1. Non-retina 1x: This is what you get by default on a <4k display, and also what you get if you do option-click in the Displays Pref Pane and select one of the "low resolution" modes (choosing the native 5120x2160 mode works this way as well, but is not labeled "low resolution" like the rest of the 1x modes). Just like win10, it renders at native 5120x2160, uses the 1x artwork, and is done. Easy.

2. Retina 2x ("looks like 2560x1080" on a 5K2K such as the LG in this thread or my Dell U4021QW): This is the classic retina. It renders at the native 5120x2160 using the *2x* artwork, and is done. Easy.

3. Fractional retina, e.g. 1.33x ("looks like 3840"): This is where things get tricky, and taxing on the GPU. First the GPU internally renders the entire display environment at the corresponding 2x resolution, in this case a whopping 7680x3240 (MORE than 6K!!!), including the 2x artwork, then downsamples that whole frame to the output/native resolution of 5120x2160. This should make it clear why it is so much more taxing on the GPU, and why even many recent Macs (M1 and 2020 Intel iGPU included) cannot select the highest fractional retina scaling mode. Unless Apple changes its way of doing HiDPI, I don't see this changing.
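The arithmetic behind the three modes can be sketched in a few lines of Python. This is a minimal illustration, assuming (as described above) that every HiDPI "looks like" mode renders into a backing store exactly twice the looks-like size, which is then resampled to the panel's native 5120x2160; the function names are made up for the example and are not a macOS API.

```python
from fractions import Fraction

NATIVE = (5120, 2160)  # 5K2K panel (LG 34" or Dell U4021QW)

def backing_store(looks_like, hidpi=True):
    """Frame size rendered before scaling: 2x the looks-like size for HiDPI modes."""
    w, h = looks_like
    return (2 * w, 2 * h) if hidpi else (w, h)

def resample_ratio(looks_like, hidpi=True, native=NATIVE):
    """Native width / backing width. Exactly 1 means no resampling (crisp)."""
    return Fraction(native[0], backing_store(looks_like, hidpi)[0])

modes = [
    ("1x native", (5120, 2160), False),
    ("2x retina", (2560, 1080), True),
    ("looks like 3008", (3008, 1269), True),
    ("looks like 3840", (3840, 1620), True),
]

for name, looks_like, hidpi in modes:
    bw, bh = backing_store(looks_like, hidpi)
    ratio = resample_ratio(looks_like, hidpi)
    print(f"{name:16} renders {bw}x{bh} ({bw * bh:,} px), "
          f"resample ratio {float(ratio):.3f}")
```

A ratio of exactly 1 (the 1x and 2x modes) means each rendered pixel lands on one panel pixel; the fractional modes need interpolation, and "looks like 3840" has to render 7680x3240 and shrink it by 2/3.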

The other point that I think is very important is the fact that M1 CAN output the full 5120x2160@10bit@60Hz if you choose native resolution (5120x2160 @ 1x), or 2x retina scaling ("looks like 2560x1080"), or even fractional scaling levels up to (reportedly) "looks like 3008". This rules out many other potential architecture/driver/software issues. As we have seen, the only difference between "looks like 3008" and "looks like 3840" is needing to do the pre-rendering and downsampling steps at an even higher resolution. This leads me to conclude that the M1 quite simply doesn't seem to have the GPU power to render 5K2K at "looks like 3840".

Side note 1: Many pre-2020, and especially pre-2018, Macs are also limited by their older TB3 controllers and/or iGPUs, one or both of which can't do the required DP 1.4. Sometimes I see people confusing these two issues, but they are separate. The TB3 controller limit was solved with the Titan Ridge Macs in 2018; the iGPU limit was solved with the Ice Lake Intel Macs in 2020.

Side note 2: Many people are also confused by the fact that Apple reports compatibility with "one external display at 5k" or "one external display at 6k" on its website. This is misleading, because those specs should be referring SPECIFICALLY to the LG 5K and Apple XDR 6K. The LG uses a custom 2x DP 1.2 stream setup to allow compatibility all the way back to ~2016 Macs. Such Macs definitely cannot run other 5k or 5k2k displays. The XDR, from what I can tell, can utilize either a custom DP 1.4 scheme (4+1 HBR3 lanes) or DSC, depending on what type of hardware is connecting. Likewise, those features are not available on other displays such as the Dell or LG 5K2K.
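Both side notes come down to DisplayPort link bandwidth. The rough sketch below counts only visible RGB pixels (no blanking, no DSC), so a mode that fails the check definitely cannot fit on the link, while one that passes might still not fit once blanking is added. It assumes four lanes with 8b/10b coding (5.4 Gbit/s per lane for HBR2, 8.1 Gbit/s for HBR3) and, for the LG UltraFine 5K, an even left/right split into two 2560x2880 tiles.

```python
# Payload of a 4-lane DisplayPort link after 8b/10b coding, in Gbit/s.
HBR2 = 4 * 5.4 * 0.8   # DP 1.2: 17.28
HBR3 = 4 * 8.1 * 0.8   # DP 1.4: 25.92

def active_gbps(width, height, refresh_hz, bpc):
    """Bandwidth of the visible RGB pixels only (no blanking, no DSC)."""
    return width * height * refresh_hz * 3 * bpc / 1e9

def not_ruled_out(width, height, refresh_hz, bpc, link_gbps):
    """False means the mode cannot fit on the link even before blanking is counted."""
    return active_gbps(width, height, refresh_hz, bpc) <= link_gbps

# Side note 1: a 5K2K panel at 10 bpc.
for hz in (60, 30):
    g = active_gbps(5120, 2160, hz, 10)
    print(f"5K2K@10bit@{hz}Hz needs >= {g:.2f} Gbps; "
          f"HBR2 ok: {not_ruled_out(5120, 2160, hz, 10, HBR2)}, "
          f"HBR3 ok: {not_ruled_out(5120, 2160, hz, 10, HBR3)}")

# Side note 2: 5120x2880 as one stream vs two 2560x2880 tiles (one per DP 1.2 stream).
whole = active_gbps(5120, 2880, 60, 10)
tile = active_gbps(2560, 2880, 60, 10)
print(f"5K@10bit@60Hz: one stream {whole:.2f} Gbps, per tile {tile:.2f} Gbps, "
      f"HBR2 payload {HBR2:.2f} Gbps")
```

5K2K at 10-bit/60Hz already exceeds an HBR2 link on visible pixels alone, which fits the claim that DP 1.2 hardware tops out at 30Hz for this panel; splitting 5K into two tiles brings each stream back under the HBR2 payload.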

Side note 2.1: Last point here is that both the LG 5K and XDR 6K are sized right physically to give you a comfortable UI size (similar physical size to that of a standard 110 PPI non-retina display) at classic 2x retina scaling, which, like was discussed, is easy on the GPU. There is no claim on the Apple spec pages about compatibility with fractional retina scaling levels.
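The "comfortable at 2x" point can be checked with a quick pixel-density calculation. This sketch uses nominal diagonals (27", 32", and 34" for the LG 5K2K), so the numbers are approximate; the ~110 PPI reference comes from the side note above.

```python
import math

def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch along the diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in

# Nominal diagonals; real viewable sizes differ slightly.
displays = {
    'LG UltraFine 5K (27")': (5120, 2880, 27.0),
    'Pro Display XDR (32")': (6016, 3384, 32.0),
    'LG 5K2K ultrawide (34")': (5120, 2160, 34.0),
}

for name, (w, h, diag) in displays.items():
    native = ppi(w, h, diag)
    # At 2x retina one UI point covers 2x2 panel pixels, so UI density is native / 2.
    print(f"{name:24} {native:6.1f} PPI, {native / 2:5.1f} points/inch at 2x")

# "Looks like 3840" on the 5120-wide ultrawide spreads 3840 points across 5120 pixels.
ultrawide = ppi(5120, 2160, 34.0)
print(f"5K2K at looks like 3840: {ultrawide * 3840 / 5120:.1f} points/inch")
```

The LG 5K and XDR land close to 110 points per inch at 2x, while the 34" 5K2K ends up near 82 at 2x (a very large UI), which is why owners want the "looks like 3840" mode at roughly 123.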

Here are two demonstrations that I think will be helpful:

1. Screenshot of 5K2K at "looks like" 3840x1620: Note that the screenshot size is NOT 5120x2160, or even 3840x1620, but actually 7680x3240 as discussed above. Note that this is what is reported in the System Information window in the screenshot too.

Screen-Shot-2021-04-13-at-6-52-41-PM (hosted on ImgBB)

2. Comparison of retina modes: This is also helpful to get a feel for the tradeoff between pixel size, UI size, and potential interpolation (required at any fractional retina mode as discussed above, and the reason why these are never quite as "crisp" as 2x, aka standard retina)

macOS retina scaling comparison (GitHub Gist)

Anyway, please let me know if you have any lingering questions. I understand this will be a discouraging perspective to many on here, so I am happy to clarify the best I can...

> Unless Apple changes their way of doing hidpi, I don't see this changing.

Mac OS X 10.4 and 10.5 did support fractional scaling up to 3x. It might be possible to get that back if you patch macOS to use a scale other than 2x when it generates scaled modes.

> This leads me to conclude that the M1 quite simply doesn't seem to have the GPU power to render 5K2K at "looks like 3840".

All we know is that macOS does not try to create scaled modes larger than 6K. Again, a patch to macOS might change that (the 6K limit is rather arbitrary; it was probably only chosen because the XDR exists). How much GPU power is required to do 6K? My W5700 can do scaled modes up to 16K x 16K (scaled down to 4K output), but it doesn't perform all that well.
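One way to picture the suspected 6K ceiling. This is purely hypothetical: nobody in the thread knows the exact rule macOS applies, and the sketch assumes the 2x backing store must fit inside the XDR's 6016x3384 in both dimensions. Under that assumption it matches what M1 owners report ("looks like 3008" offered, "looks like 3840" not); a cap on total pixel count would explain the same observation, since 7680x3240 is about 24.9 million pixels against about 20.4 million for 6K.

```python
XDR_6K = (6016, 3384)  # Pro Display XDR native resolution

def offered_under_6k_cap(looks_like, cap=XDR_6K):
    """HYPOTHETICAL rule: a HiDPI mode is offered only if its 2x backing store
    fits inside the 6K frame in both dimensions. The real macOS check is not public."""
    backing_w, backing_h = 2 * looks_like[0], 2 * looks_like[1]
    return backing_w <= cap[0] and backing_h <= cap[1]

for looks_like in [(2560, 1080), (3008, 1269), (3840, 1620)]:
    status = "offered" if offered_under_6k_cap(looks_like) else "not offered"
    print(f"looks like {looks_like[0]}x{looks_like[1]}: {status}")
```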

> TB3 controller was solved with Titan Ridge macs in 2018. iGPU was solved with Ice Lake intel macs in 2020.

Yup, before Titan Ridge we had Alpine Ridge, which is limited to DisplayPort 1.2. My 2018 Mac mini has Titan Ridge, but its iGPU is limited to DisplayPort 1.2.

> Such macs definitely cannot run other 5k or 5k2k displays.

Unless the display is a two-cable display like the Dell UP2715K, which uses two DisplayPort 1.2 signals, just like the LG, to support 5120x2880 60Hz 10bpc RGB.

Or you use a lower refresh rate. 5K at 39Hz over a single DisplayPort 1.2 connection can work on the LG UltraFine 5K, but some GPU/macOS combinations don't support widths > 4096 without using dual tiles.
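A quick upper bound on what one DisplayPort 1.2 link can carry at 5120x2880, again counting visible RGB pixels only and assuming 4 lanes with 8b/10b coding. Real timings add blanking, so the achievable rates are somewhat lower than printed. The bit depth of the 39Hz mode mentioned above isn't stated, so the sketch shows both 8 and 10 bpc.

```python
HBR2_PAYLOAD_BPS = 4 * 5.4e9 * 0.8  # 4-lane DP 1.2 link after 8b/10b coding

def max_refresh_hz(width, height, bpc):
    """Upper bound from visible RGB pixels only; blanking makes the real limit lower."""
    return HBR2_PAYLOAD_BPS / (width * height * 3 * bpc)

for bpc in (8, 10):
    hz = max_refresh_hz(5120, 2880, bpc)
    print(f"5120x2880 at {bpc} bpc: at most {hz:.1f} Hz on one HBR2 link")
```

Either way a single HBR2 link stays well short of 60Hz at 5K, which is why 60Hz needs the two-stream arrangement.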

> The XDR, from what I can tell, can either utilize a custom DP 1.4 scheme (4+1 HBR3 lanes) or DSC, depending one what type of hardware is connecting.

Apple uses some trick to get two four-lane HBR3 connections over Thunderbolt 3. Apple does not allow the trick when there's a Thunderbolt 3 device between the XDR and the host. Two four-lane HBR3 connections are 51.84 Gbps, which is more than Thunderbolt 3 can carry, but the XDR only requires 38 Gbps, which can work over Thunderbolt 3 (40 Gbps) because Thunderbolt does not transmit the DisplayPort stuffing symbols used to fill the DisplayPort bandwidth.
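The Thunderbolt 3 numbers above can be reproduced roughly. This sketch assumes 4-lane HBR3 with 8b/10b coding per DisplayPort link and counts only the XDR's visible 6016x3384 pixels at 60Hz and 10 bpc; the 38 Gbps figure in the post presumably also includes blanking, which is why the sketch comes out a little lower.

```python
HBR3_X4_GBPS = 4 * 8.1 * 0.8        # one 4-lane HBR3 link after 8b/10b coding: 25.92
TWO_HBR3_LINKS = 2 * HBR3_X4_GBPS   # 51.84, the figure quoted above
TB3_GBPS = 40.0                     # Thunderbolt 3 link rate

def xdr_active_gbps(refresh_hz=60, bpc=10):
    """Visible 6016x3384 RGB pixels only (no blanking): a lower bound on the real stream."""
    return 6016 * 3384 * refresh_hz * 3 * bpc / 1e9

need = xdr_active_gbps()
print(f"two HBR3 x4 links: {TWO_HBR3_LINKS:.2f} Gbps, exceeds TB3: {TWO_HBR3_LINKS > TB3_GBPS}")
print(f"XDR visible pixels: {need:.1f} Gbps, fits under TB3: {need < TB3_GBPS}")
```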

> Likewise, those features are not available on other displays such as the Dell or LG 5K2K.

It would be nice if there were a DisplayPort-to-DisplayPort adapter that could convert DisplayPort 1.4 (with or without DSC) to dual DisplayPort 1.2. An MST hub can do that, but not with macOS, and the two streams would count against the total number of streams allowed.

> There is no claim on the Apple spec pages about compatibility with fractional retina scaling levels.

Of course not. Fractional retina scaling is handled by the GPU. The GPU just needs to output a signal that the display can accept.

> 1. Screenshot of 5K2K at "looks like" 3840x1620: Note that the screenshot size is NOT 5120x2160, or even 3840x1620, but actually 7680x3240 as discussed above.

My 16K x 16K (or "looks like" 8K x 8K) screenshots are about 265 MB.

> 2. Comparison of retina modes: This is also helpful to get a feel for the tradeoff between pixel size, UI size, potential interpolation (required at any fractional retina mode as discussed above, and the reason why these are never quite as "crisp" as 2x aka standard retina)

Missing: a test of 5K scaled down to 4K and output to a 5K display, which then scales the 4K input up to 5K. The GPU does the first scaling and the display does the second.

> lol can't believe Apple went from "we're working on it!" to never getting around to it and just deleting the support article altogether. Yikes.

Apple did come up with a solution. Their solution was to decide it's not a problem (i.e., not supported) and to forget about it.

Is this resolution problem a general problem with the software of Big Sur or does it only concern the M1 apple devices? I am asking because I am interested in buying a Dell 40" widescreen display for my MacBook Pro 2018.

These issues are not related to Big Sur. They are also not specific to the M1, per se. macOS HiDPI is demanding in general, and the problem is compounded by the anemic GPUs and connectivity in some recent Macs.

Regarding your 2018 MacBook Pro…look around very carefully on here, Reddit, Dell reviews, etc., for specific reports of people with your model. I do suspect you will have issues. What is the specific model? You need to make sure it has a Titan Ridge TB3 controller (I think most/all 2018 do). Also, what is the GPU? If I recall correctly, the Vega upgrade may be the only one that can push 5K2K@10bit@60Hz and all the fractional retina levels. iGPU will only be able to do 5K2K@10bit@30Hz due to its DP 1.2 HBR2 limitation. Radeon Pro can do 5K2K@10bit@60Hz since both it and the Titan Ridge controller can do DP 1.4/HBR3, but will likely NOT be able to do the highest fractional scaling levels.

But please look around for some first-hand confirmations before making this big purchase. Unfortunately, many people are ending up disappointed…

WOW, that is all very complicated.

I miss the old VGA-times ;-)

Thanks a lot for your insights.

Firstly, I agree with you on most everything above.

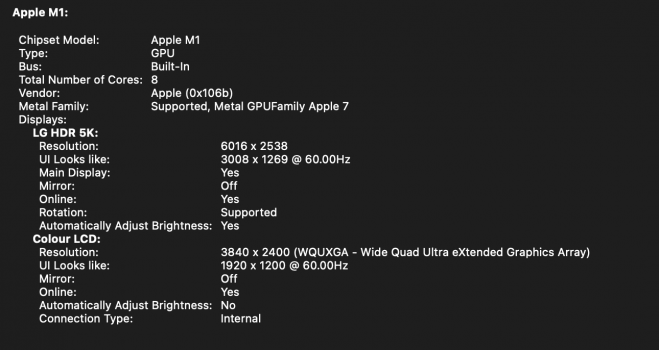

However, if the constraint to running the optimal resolution of 7680 x 3240 (UI Looks like: 3840x1620) was the GPU, how is it that the unit can run my current configuration:

LG HDR 5K:

- Resolution: 6016 x 2538
- UI looks like: 3008 x 1269 @ 60.00Hz

Colour LCD:

- Resolution: 3840 x 2400 (WQUXGA - Wide Quad Ultra eXtended Graphics Array)
- UI looks like: 1920 x 1200 @ 60.00Hz

In total this drives 24,484,608 pixels, and it does so comfortably. That is approximately the same number of pixels as the 24,883,200 it would have to drive for our desired resolution on a single external display with the internal display off.
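The pixel arithmetic above, checked in Python using the backing-store resolutions listed for each display:

```python
def pixels(width, height):
    return width * height

# Backing-store resolutions as listed above.
current = pixels(6016, 2538) + pixels(3840, 2400)  # external "looks like 3008" + internal "looks like 1920"
desired = pixels(7680, 3240)                        # external alone at "looks like 3840"

print(f"current {current:,} px, desired {desired:,} px, "
      f"difference {desired - current:,} px ({desired / current - 1:.1%})")
```

The desired single-display mode needs only about 1.6% more pixels than the two-display setup that already works, which is the crux of the argument.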

I maintain that the decision can be explained as a shortcut taken by whoever on the M1 architecture team was working on the graphics engine: they essentially took the highest-resolution monitor that is explicitly supported by Apple and made its native resolution the highest supported resolution.

I expect this will be resolved with the upcoming Pro units with an M1X or M2 chip, as I imagine Pro users will want 8K support. When that happens, I hope our monitors stop being crippled and can be used as they could on Intel Macs before.

Fingers crossed.

I have this monitor for work and experienced similar problems with my ThinkPad. It only worked (in Linux and Windows) using DP 1.2.

I then installed the LG software and recently installed a firmware update for the monitor, and now it just works via TB3 connected to the ThinkPad, at full resolution using DisplayPort 1.4. Are the problems with M1 Macs the same with the newest firmware?

I plan to get an M1X/M2 MacBook Pro when they're released and hope the screen will work without problems then :/ Anything else would be disappointing.

> However, if the constraint to running the optimal resolution of 7680 x 3240 (UI Looks like: 3840x1620) was the GPU, how is it that the unit can run my current configuration

One possibility is that the GPU can do 2 of 10 at a rate of 3 each but not 1 of 20 at a rate of 6.

Where 1 and 2 are stream counts, 10 and 20 are pixel counts, and 3 and 6 are data rates (substitute those numbers with numbers that make sense). The GPU in this case is limited by the max data rate for a single stream. It's the same problem as why we have CPUs with 20 cores at 3 GHz instead of CPUs with 10 cores at 6 GHz.
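The analogy can be made concrete with a toy model. Everything here is hypothetical: the limits are the abstract numbers from the post, not real M1 figures, and the rule (each stream needs its own pipe, each pipe has a rate cap, and all pipes share a total budget) is only one plausible way such a limit could arise.

```python
from dataclasses import dataclass

@dataclass
class GpuLimits:
    max_pipes: int            # display pipes/streams available (hypothetical)
    max_rate_per_pipe: float  # what a single pipe can drive (hypothetical)
    max_total_rate: float     # aggregate budget across all pipes (hypothetical)

def can_drive(limits, stream_rates):
    """Each stream needs its own pipe; every stream must fit one pipe AND the sum must fit the budget."""
    return (len(stream_rates) <= limits.max_pipes
            and all(rate <= limits.max_rate_per_pipe for rate in stream_rates)
            and sum(stream_rates) <= limits.max_total_rate)

# Abstract numbers from the post: two streams at rate 3 each vs one stream at rate 6.
gpu = GpuLimits(max_pipes=2, max_rate_per_pipe=3, max_total_rate=6)
print(can_drive(gpu, [3, 3]))
print(can_drive(gpu, [6]))
```

With these made-up limits the two smaller streams fit while the single large one does not, even though the totals are equal; that is the shape of the explanation, not a claim about the actual M1 display engine.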

In an Intel GPU, they have to use multiple CRTCs (pipes?) to handle a large resolution.

The tiled display examples I gave (Intel with the Dell 8K, or old 4K displays) have the limitation in the display, but you can imagine a similar limitation occurring in the GPU. It would be useful to find a document or video example where the limitation is in the GPU alone. Actually, the video talks about single-tile displays that require multiple pipes, and they mention the DSC case (which uses multiple stripes?) and clock limits, etc.

I don't believe the limit is 6K max frame buffer; I'm just saying that there could be reasons for a limit.
