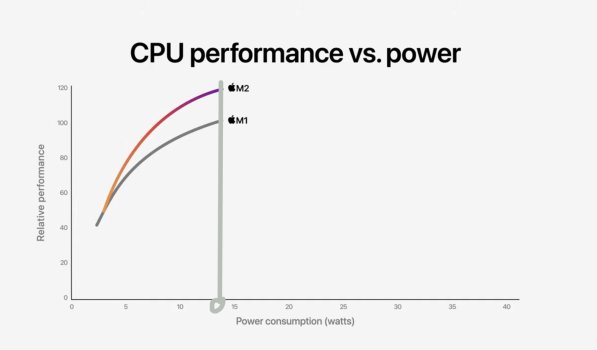

No, it's the opposite. It means that the i9 consumes more than 2.3x the power used by the M2 Max.

So that would mean that the i9's single core consumes less than 2.3x the M2 Max's single core. I find that hard to believe.


M3 Chip Generation - Discussion Megathread

- Thread starter scottrichardson



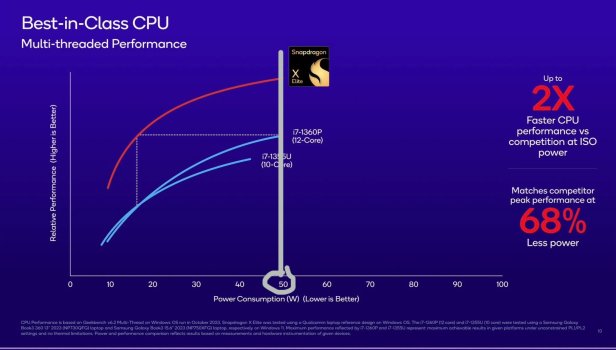

Qualcomm showed a slide where they claim that their new chip is 50% faster than the M2 in multi-threaded tasks. That would make it about as fast as an M2 Pro/Max (according to Geekbench 6, which appears to be the tool they used).

I understand why they used the M2 as a reference if they can't beat the "pro" Apple SoC.

Still, that's not bad if their chip actually uses 30% less power than an M2 Max for that.


So the Qualcomm CPU has 50% faster multithreaded performance than the base M2, but their graph says it consumes up to 50W.

So that means it’s 50% faster than the M2 in multi-core while using 3.6x more energy.

How is that good?
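Power draw and energy per task are not quite the same thing. A minimal sketch, taking the numbers in this post at face value (1.5x the M2's multi-core throughput at roughly 3.6x its power) and using only ratios, since no absolute M2 power figure is given here:

```python
def relative_metrics(speedup: float, power_ratio: float) -> dict:
    """Compare chip B to chip A, given B's speedup and B's power draw relative to A.

    Energy for a fixed task = power x time, and time scales as 1 / speedup.
    """
    perf_per_watt = speedup / power_ratio
    energy_per_task = power_ratio / speedup
    return {"perf_per_watt": perf_per_watt, "energy_per_task": energy_per_task}


m = relative_metrics(speedup=1.5, power_ratio=3.6)
print(f"perf/W vs M2: {m['perf_per_watt']:.2f}x")
print(f"energy per task vs M2: {m['energy_per_task']:.2f}x")
```

On those assumptions the chip delivers about 0.42x the M2's performance per watt, and a fixed multi-core job would take about 2.4x the energy rather than 3.6x, because it finishes sooner.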


It’s confusing at the moment. The i7-1360P gets around 10,000-11,000 GB6 points, and the M2 gets around 10,000. So is it 50% faster (15,000) or 2x faster (20,000-22,000)?


Edit: damn, fooled again. I missed the 2x at iso-power. Disregard. Yeah, if it’s 15,000 at 50 watts, that is weird.

What surprises me is the number of CPU cores claimed by Qualcomm, i.e. 12 high-performance cores versus the rumored 8P+4E. Yet the CPU cores are divided into three quad-core clusters, and only two clusters can turbo boost up to 4.3GHz. Does that mean the last quad-core cluster might be getting less power? As mentioned by AT:

"but it’s a safe assumption that each cluster is on its own power rail, so that unneeded clusters can be powered down when only a handful of cores are called for."

It is possible that Qualcomm might completely disable one main quad-core CPU cluster, thus lowering power to compete with the M3, which will most likely keep the 4P+4E configuration. Pretty clever CPU design: instead of two different dies, they just design one die for two product categories. I am curious how big the die will be.


Even at these numbers, considering that Apple, Google, and Microsoft each serve hundreds of millions of users, the rate is a rounding error to them (I may have been working off old data, my apologies).

The fees seem much higher and very confusing, as there seems to be more than one patent pool.

| Standard | AVC | HEVC | HEVC | HEVC | HEVC |
|---|---|---|---|---|---|
| Licensing group | MPEG LA | MPEG LA | HEVC Advance | Velos (estimate) | Total (estimate) |
| Number of WW patents | 3,704 | 4,417 | 3,321 | 3,200 | 10,938 |
| Handset royalty ($), highest rate | $0.20 | $0.20 | $0.65 | $0.75 | $1.60 |
| $ per 1,000 patents for handset | $0.05 | $0.05 | $0.20 | $0.23 | $0.27 |
| Handset cap | $10 million | $25 million | $30 million | Unknown | $55 million plus |
| Sample total royalty for 10 million units | $1.5 million | $2.0 million | $6.5 million | $7.5 million | $16.0 million |

What will TV cost you? Putting a price on HEVC licences

Changes in how you watch movies, stream TV and use video chat are on the way. These will fundamentally affect the economics of how content is delivered to you, as well as the way that the patents underpinning the enabling technology are licensed. (www.iam-media.com)
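The "sample total royalty" row can be approximated as the per-unit rate times the number of units, limited by the pool's cap. A minimal sketch of that model, assuming a flat highest-tier rate (real pools use volume tiers, so this is only an approximation); the function name is hypothetical, and only the HEVC columns are modelled:

```python
from typing import Optional


def pool_royalty(units: int, rate_per_unit: float, cap: Optional[float]) -> float:
    """Flat per-unit royalty, limited by an annual cap (None = cap unknown, not applied)."""
    total = units * rate_per_unit
    return total if cap is None else min(total, cap)


# Highest handset rates and caps taken from the HEVC columns of the table.
hevc_pools = {
    "MPEG LA": (0.20, 25_000_000),
    "HEVC Advance": (0.65, 30_000_000),
    "Velos (estimate)": (0.75, None),  # cap listed as unknown
}

units = 10_000_000
totals = {name: pool_royalty(units, rate, cap) for name, (rate, cap) in hevc_pools.items()}
for name, amount in totals.items():
    print(f"{name}: ${amount / 1e6:.1f} million")
print(f"Total: ${sum(totals.values()) / 1e6:.1f} million")
```

This reproduces the $2.0M, $6.5M, $7.5M and $16.0M HEVC figures for 10 million handsets. The AVC column ($1.5M at a $0.20 top rate) is lower than a flat rate would give, presumably because that pool's lower volume tiers are not shown in the table.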

Please, let's wait for the real devices, for this to be placed in consumer devices and tested. I really would like this to be even better than the last 2-3 attempts; I like that QC still pushes Windows on Arm... too bad that, from my understanding, devices with this will only come to market in mid-2024?!

When they first tried, all the charts were cut by around 20% in real native application usage.


It would be fun if they only released the Pro chips and we got the 27/30” iMac together with the MBPs. That would take everyone by surprise.

It is possible that the event will focus on prosumer chips. At this point I wouldn’t be surprised if the M3 ships in spring.

I most definitely wouldn't want that. I have no actual info, just noting the rumors:

- surplus of M2 chips

- M3 waiting for N3E

If the M3 is launching/shipping next week (or in two or three), then it's probably not N3E. It's probably N3B stuff that has been 'gathered' in inventory over the last couple of months from the 'fringe' of wafers that wasn't needed for the A17 Pro.

I hope that's wrong and Apple M3s all the things.

What is probably wrong is some mass product deployment of the M3 into several systems. If Apple were working to stuff the M3 into 3-4 systems, then the likelihood of a leak would be much higher than if they were trying to do just one (e.g., the 24" iMac).

The fact that the date here caught a number of folks 'flat-footed' (and some 180 degrees wrong) suggests that this isn't a broad release.

If there is no broad release, then there are lots of places for the M2 to go for the next couple of months, even more so when the iPad Air updates in the spring. If Apple wants to move product this holiday season, it can just shave some 'super fat profits' down to just 'fat profits' (that worked last holiday season with almost nothing new).

Those cores can turbo from 3.80GHz to 4.3GHz.

Not particularly surprising, since these were originally targeted at being 'server' cores in the first place.

It wouldn't be surprising if these have a slightly bigger footprint than Apple's P cores, and they are much bigger than Apple's E cores.

Still, they are likely not the huge 'porkers' that Intel's P cores are, so there's no 'harm' in not having any smaller core (E or cloud) options.

I doubt this generation of Oryon has to fit in any phone, so it is likely tuned for a different (bigger) die-area budget and a (relatively bigger) thermal budget.

If a 12P Oryon really only does 50% better than a 4P+4E M2, that's... pathetic. And if you take them at their word that single-core is substantially better than M2... that's even worse.

I think they've put out a lot of really confusing numbers, and we won't really know what's what until we see some actual running systems (by which point the M3 will likely have been out for 6-9 months).

But if the single- and multi-core scores are even close to accurate, it suggests that they have a really serious scaling problem, worse than Apple did with the M1 Ultra.

I hope it does turn out to be a good chip - the more quality competition, the better. So far, I'm not feeling it.


You misread. It's not two clusters. It's two *cores* that can go to 4.3GHz. And it's not designed with two special cores, they'll just pick the two most efficient cores. (This is what Intel and AMD do.)

If a 12P Oryon really only does 50% better than a 4P+4E M2, that's... pathetic. And if you take them at their word that single-core is substantially better than M2... that's even worse.

I think they've put out a lot of really confusing numbers, and we won't really know what's what until we see some actual running systems (by which point the M3 will likely have been out for 6-9 months).

But if the single- and multi-core scores are even close to accurate, it suggests that they have a really serious scaling problem, worse than Apple did with the M1 Ultra.

I think this simply suggests that their "turbo boost" comes at a cost and that the clock speed of the CPU cores running a multi-core workload is significantly lower, probably around 3.2-3.4GHz. That would again be consistent with the "it's a server core" explanation. We should also keep in mind that GB6 uses cooperative multi-core benchmarks, which are more difficult to scale for everyone (Apple included).

Qualcomm showed a slide where they claim that their new chip is 50% faster than the M2 in multi-threaded tasks. That would make it about as fast as an M2 Pro/Max (according to Geekbench 6, which appears to be the tool they used).

I understand why they used the M2 as a reference if they can't beat the "pro" Apple SoC.

Still, that's not bad if their chip actually uses 30% less power than an M2 Max for that.

It actually appears to use more power. The M2 Pro/Max use 35-40 watts; Qualcomm's slide put their chips at 50-55 watts. Of course, it depends on what you actually count: core power draw or mainboard power draw.

What surprises me is the number of CPU cores claimed by Qualcomm, i.e. 12 high-performance cores versus the rumored 8P+4E. Yet the CPU cores are divided into three quad-core clusters, and only two clusters can turbo boost up to 4.3GHz. Does that mean the last quad-core cluster might be getting less power? As mentioned by AT:

If there are two cores running, one in each of the first two clusters, then there are no cores running in the third cluster at all. If no cores are running in that cluster, why should it get power? Yes, the power should be lower because nothing is happening there.

You can probably turn each one of these clusters either off or at least into a very comatose state with minimal power draw (just enough to watch messages go by to see if it should 'wake up').

One- or two-core turbo means the other cores are off or pretty close to it. The 'all-core' turbo rate is 3.8GHz: if there is activity on all of the cores, the cap is lower. It wouldn't be surprising if there are shared resources in the 4-core cluster (L2 cache) that need to 'not share' to boost above 3.8.
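A toy model of the behaviour described above, assuming (as stated in the thread) three 4-core clusters, a 4.3GHz cap when only one or two cores are busy, and 3.8GHz otherwise. Per-cluster power gating is the assumption AnandTech called "safe", not a confirmed detail, and the function and class names are hypothetical:

```python
from dataclasses import dataclass

CLUSTERS = 3
CORES_PER_CLUSTER = 4
DUAL_CORE_BOOST_GHZ = 4.3  # cap with one or two active cores
ALL_CORE_GHZ = 3.8         # cap with more than two active cores


@dataclass
class ChipState:
    clock_ghz: float
    powered_clusters: list  # indices of clusters that must stay powered


def chip_state(active_cores: set) -> ChipState:
    """Clock cap and powered clusters for a set of active core ids (0..11)."""
    if not all(0 <= c < CLUSTERS * CORES_PER_CLUSTER for c in active_cores):
        raise ValueError("core id out of range")
    clock = DUAL_CORE_BOOST_GHZ if len(active_cores) <= 2 else ALL_CORE_GHZ
    # A cluster only needs power if at least one of its cores is active.
    powered = sorted({c // CORES_PER_CLUSTER for c in active_cores})
    return ChipState(clock, powered)


# Two boosted cores, one in each of the first two clusters: the third cluster can be gated off.
print(chip_state({0, 4}))
# All twelve cores busy: every cluster powered, clock capped at the all-core rate.
print(chip_state(set(range(12))))
```

The point of the model is only that the 4.3GHz figure and the idle third cluster go together; as soon as work spreads out, the cap drops to the all-core rate.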

"but it’s a safe assumption that each cluster is on its own power rail, so that unneeded clusters can be powered down when only a handful of cores are called for."

It is possible that Qualcomm might completely disable one main quad-core CPU cluster, thus lowering power to compete with the M3, which will most likely keep the 4P+4E configuration.

There are only two cores running, so why would that be competing with 8-core competitors? This two-core-only turbo is mainly for tackling single-core (possibly 'race to sleep') contexts. Once the other cores are allocated work, this max turbo speed disappears.

This likely isn't about the M3. It is very likely about being more competitive with AMD/Intel cores that put more 'juice' into maximizing ST scores for certain Windows workloads (ones that are stuck ST). There is a 'dual-core' mode because work that might otherwise be thrown at a couple of E cores can be dispatched to the other active core. So the 'distraction' workloads get thrown at the second turbo core, while the foreground interactive app with the ST-constrained code stays solely focused on the first turbo core. And everything else stays unallocated.

The OS scheduler shouldn't be allocating secondary work to 2-8 P cores the same way it would to 4 E-cores.
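A hypothetical sketch of the placement policy described in the two paragraphs above: the foreground, single-thread-bound task gets one boosted core, "distraction" background work is piled onto the second boosted core, and everything else stays unallocated. This only illustrates the idea; it is not how the Windows scheduler actually works, and the function and task names are invented:

```python
from typing import Dict, List


def place_tasks(foreground: str, background: List[str]) -> Dict[int, List[str]]:
    """Map core id -> tasks under a 'two boosted cores, rest idle' policy (hypothetical)."""
    placement: Dict[int, List[str]] = {0: [foreground]}
    if background:
        # All secondary work shares the second boosted core instead of spreading
        # across idle P cores, which would end the two-core boost.
        placement[1] = list(background)
    return placement


print(place_tasks("editor_ui", ["indexer", "sync"]))
```

A real scheduler would have to spill work onto more cores once background load outgrows one core, and at that point the boost is gone.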

Where they happen to beat an M2/M3, Qualcomm will take the 'win'. However, the primary objective should be trying to displace AMD/Intel SoCs from laptops. Qualcomm is never going to displace an M-series chip from a Mac. They aren't even in the running for that at all.

Pretty clever CPU design, instead of two different dies, they just design one die for 2 product categories. I am curious how big the die size would be?

I think this is more about not having to do two different kinds of CPU core clusters than different dies.

Also, not doing more than one die is a cost-saving measure (they are late...). They can just bin down a cluster if they don't need it full time, at a different price point.

It could be as much about managing thermals as it is a "compete for power" issue. Thermals, bandwidth latency/QoS, and then 'limited power distribution' could be the constraints. (There can also be a P-core cluster that can't handle 4.3 where they still want to pass the chip as qualifying. If this two-core mode is only for die-specific 'blessed' cores, then there may not be a third to pick.)

If a 12P Oryon really only does 50% better than a 4P+4E M2, that's... pathetic. And if you take them at their word that single-core is substantially better than M2... that's even worse.

It is not 'even worse'. Oryon has a broader range between its max 1-2 core turbo and its all-core/base clock speeds. It appears to be goosed to over-achieve in ST and then go back to its cloud-server-core origins when all the cores are loaded up. You seem to be trying to push the notion that multi-core should be turbo x 12, and it really isn't going to do that.

It's also pretty doubtful that the Windows kernel's OS scheduler is really fully optimized for this (there were hiccups when the 'unusual' Zen 1 architecture showed up with cache segregation that MS wasn't 'used to'). History repeating?

And the more mature Arm scheduler is expecting E cores... which are completely missing here. So where does background stuff get tossed to?

There's a decent chance there is a substantive 'immaturity' factor here, more so than a 'pathetic' one. After a generation of updates on both the software and hardware side, some of this may disappear. And GB6 is a bit wonky too (it has its quirks).

Yes, of course. But the announced all-cores turbo is 3.8GHz, and I am perhaps naively assuming that their benchmarks are from a system where they've engineered top-quality cooling. That's not *that* much slower, so the numbers are still lower than I would expect for 12 P cores.
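A back-of-the-envelope check of that expectation, assuming multi-core throughput scales at best linearly with core count and clock (real workloads, and Geekbench 6's cooperative multi-core tests in particular, scale worse). It compares 12 cores at the announced 3.8GHz all-core rate, and at the 3.2-3.4GHz range guessed earlier in the thread, against one core at 4.3GHz; the function name is hypothetical:

```python
def ideal_mc_over_st(cores: int, all_core_ghz: float, boost_ghz: float) -> float:
    """Upper bound on multi-core / single-core throughput, assuming perfect scaling with cores and clock."""
    return cores * all_core_ghz / boost_ghz


for ghz in (3.8, 3.4, 3.2):
    print(f"12 cores @ {ghz} GHz: at most {ideal_mc_over_st(12, ghz, 4.3):.1f}x one boosted core")
```

That gives ceilings of roughly 10.6x, 9.5x and 8.9x one boosted core, so the clock drop alone does not come close to explaining a multi-core score only about 1.5x an M2.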

Apropos of @deconstruct60's later comment, I wonder why they didn't throw a cluster of ARM's E cores in there, if they didn't have time to design their own? Presumably their NoC, SLC, etc. are different enough from ARM's that it wouldn't be easy to integrate. They may regret not doing the work (or designing their own) though. I guess we'll see once we've got real systems to test.


Yeah, I fully share the confusion. I don't really understand how 12 cores end up using more power without a noteworthy performance increase if one core can deliver the same perf at 30% less power... I'd at least expect the same MC performance at 30% less power in that case.

Apropos of @deconstruct60's later comment, I wonder why they didn't throw a cluster of ARM's E cores in there, if they didn't have time to design their own? Presumably their NoC, SLC, etc. are different enough from ARM's that it wouldn't be easy to integrate. They may regret not doing the work (or designing their own) though. I guess we'll see once we've got real systems to test.

Another factor might be that they don't want to deal with the Windows scheduler.

Hah, they don't get to make that choice. Look what happened to AMD with the first-gen Zens. They accommodate the Windows scheduler and like it, or possibly work with MS to make some improvements. They do NOT get to ignore it if they want results that aren't embarrassing.

Apropos of @deconstruct60's later comment, I wonder why they didn't throw a cluster of ARM's E cores in there, if they didn't have time to design their own?

Same reason AMD doesn't: costs, and not trying to hit the minimal floor on energy consumption, with beating/being competitive with Intel H/HX on multi-core being a higher priority. Qualcomm is $1B in the 'hole' on the costs for just these P cores. They probably need to make some money before running off and adding yet another development path to the overhead.

AMD's 'c' cores are not really being done primarily for laptops. They are a 'trickle down' from a server SoC that will pay for itself. They are just 'extra gravy'; AMD is going to incrementally add those to the

Intel's E cores are more a 'smaller' core than a radically, deeply energy-conserving core. Qualcomm and AMD already have a P core that is smaller than Intel's.

(Intel had to turn off AVX-512 to make the P cores instruction-set compatible with their E cores. Broadly, the Oryon cores have the same instruction set as Arm's cores, but at the level of quirks in saving/restoring a running process (or some corner-case narrow instruction(s)), there's a decent chance of a mismatch.)

Presumably their NoC, SLC, etc. are different enough from ARM's that it wouldn't be easy to integrate. They may regret not doing the work (or designing their own) though. I guess we'll see once we've got real systems to test.

I'd be surprised if Nuvia wasn't using off-the-shelf Arm core interconnect all along. It would be easier to sell the company to a standard Arm interconnect implementor, and it would lower the start-up costs on the first die they had to get out the door (if they didn't get sold). Either way, it doesn't make much sense to go very far off the 'reservation' with a smaller team just getting started on a new design and new market-target optimizations. Chunks of the die's subcomponents they'd be trying to just buy and use.

Throw on top the legal 'dust-up' Qualcomm was going to pull, pissing off Arm about licensing. If there were a bigger chunk of Arm IP in there, Arm would have a much bigger hook to pull the plug.

For a phone product, no E cores would be a problem, but given the thermal range most Windows laptops are built to, and starting with a cloud-server-tweaked P core in the first place... doing a limbo down into the 'phone zone' on the first iteration is a bad idea. Intel gives them a big power umbrella to operate under in this specific market.

Yes, of course. But the announced all-cores turbo is 3.8GHz, and I am perhaps naively assuming that their benchmarks are from a system where they've engineered top-quality cooling. That's not *that* much slower, so the numbers are still lower than I would expect for 12 P cores.

The 'test mule' laptops with the Snapdragon label on the bezel that Qualcomm was using in keynote demos look like average-Joe, mid-range, very affordable laptops, not high-end gaming/workstation laptops with mega blowers on them.

Qualcomm may not succeed in selling these SoCs to many lower-margin vendors, but it doesn't help to throw reference systems with expensive (for low-margin folks) cooling at them and say 'see, it is going to work for your stuff'. They are just going to look at it, say 'that's expensive', and mostly walk away.

Most are likely still going to walk away when they figure out the full bill-of-materials bundle they have to buy from Qualcomm, but you don't have to chase them away as soon as you pull the reference system out of the box/bag.

P.S. And how long can they hold that "all-core" turbo? If it disappears quickly, then it isn't necessarily going to have traction on a longer benchmark.

[The intent is more to get in/out of some user interaction/interface element quickly, so the user gets a 'snappy' perception, and then go back to little or no user input/response needed for a while.]
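How much a short all-core turbo window helps depends on the benchmark's length. A sketch, assuming the clock sits at the all-core turbo for a fixed window and then drops to a lower sustained clock; the 30-second window and 3.0GHz sustained clock are made-up illustrative values, not Qualcomm figures, and the function name is hypothetical:

```python
def average_clock(duration_s: float, turbo_ghz: float, turbo_window_s: float, sustained_ghz: float) -> float:
    """Time-weighted average clock over a run of duration_s seconds."""
    turbo_time = min(duration_s, turbo_window_s)
    sustained_time = duration_s - turbo_time
    return (turbo_ghz * turbo_time + sustained_ghz * sustained_time) / duration_s


# Hypothetical: 3.8 GHz all-core turbo for 30 s, then 3.0 GHz sustained.
for run in (20, 60, 600):
    print(f"{run:>4} s run: {average_clock(run, 3.8, 30, 3.0):.2f} GHz average")
```

Under these assumptions a 20-second run sees the full 3.8GHz, a 60-second run averages 3.4GHz, and a 10-minute run is close to the sustained clock.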


Except there just isn't that pot of money in Cable TV any more...

They seem well aware that their subscribers are mostly over 60 and dying off, and that the most sensible way to run the business is to extract every last dollar they can from it, but not bother to improve anything.

That doesn't particularly match reality. The overwhelming majority of Cable TV operators are also Internet service operators. Your notion is that it is currently "CableCARD boxes forever" at cable operators, and that isn't the case. Most are switching to TV over IP. All the major players have Video on Demand, which makes them do basically the same thing Netflix does, only there are usually more commercials (which Netflix is also moving toward).

The old-style signalling for 'two-way' pay-per-view is being thrown out for more IP bandwidth.

Comcast and Spectrum are handing out Xumo boxes.

" ... One key difference in the way Mediacom customers will experience the Xumo Stream Box is that they will not purchase cable plans directly through the box. Instead, the Xumo Stream Box will be made available to Mediacom Xtream Internet customers, so it will be used purely as a streaming player by Mediacom users. Both Comcast and Charter customers can purchase pay-TV plans directly through the Xumo Stream Box, and use the device to watch their cable subscription. ..."

Another Broadband Provider to Offer Xumo Streaming Box as Mediacom Signs up for Comcast, Charter Joint Venture

The cable provider won’t offer any of its TV plans through the Xumo Stream Box initially, but it wouldn’t be surprising to see that capability added in the future. (thestreamable.com)

And Spectrum has been selling "Choice" with TV service with "no cable box" for years in various markets.

Dish runs both satellite offerings and Sling. DirecTV does the same thing (if they survive being mismanaged by AT&T for a long time).

The cable signal bucket is largely just a balloon squeeze into many of the same folks getting a 'streamed' distribution as before. The industry is still hooked on pushing high carriage fees and collecting them via "cable vendors" to generate most of the revenue. So just follow the money.

As far as I can tell (from my limited interaction with Spectrum about a year ago), they were still using MPEG2 for their codec (so no need to buy new encoder hardware or provide new decoder boxes), and they seemed thoroughly uninterested in such modern features as 4K or HDR.

Largely because when you go from sending one single stream to 100K endpoints to sending 100K different individually ordered streams to separate endpoints, the bandwidth explosion and inefficiencies are relatively large. The compression gap between AV1 and H.266 is a 'pissing in the wind' kind of difference. They need more bandwidth, period.

Similarly with ATSC 3.0: more compression is mainly leading to broadcasting more channels with better feedback and DRM control, not a big net saving in aggregate bandwidth consumed.
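The scale argument in rough numbers. A sketch assuming hypothetical values (a 6 Mbit/s stream, 100,000 viewers, and a newer codec that saves 30%); none of these figures come from the thread, and the function name is invented:

```python
def aggregate_mbps(stream_mbps: float, viewers: int, unicast: bool, codec_saving: float = 0.0) -> float:
    """Total bandwidth for one program: broadcast sends one copy, unicast sends one per viewer."""
    per_stream = stream_mbps * (1.0 - codec_saving)
    return per_stream * (viewers if unicast else 1)


broadcast = aggregate_mbps(6.0, 100_000, unicast=False)
unicast = aggregate_mbps(6.0, 100_000, unicast=True)
unicast_better_codec = aggregate_mbps(6.0, 100_000, unicast=True, codec_saving=0.30)
for label, mbps in (("broadcast", broadcast), ("unicast", unicast), ("unicast, better codec", unicast_better_codec)):
    print(f"{label}: {mbps:,.0f} Mbit/s")
```

On these assumed numbers the better codec trims the unicast total by 30%, but it stays five orders of magnitude above the single broadcast copy.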


I did not claim there was no money in " 'cable TV' companies ", I said there was no money in *Cable TV*.

When Charter ships Netflix bits to an Apple TV, there is no reason Charter needs an h.266 license.

Look at what I said. The business that matters going forward is INTERNET STREAMING. Which is dominated by a completely different set of important companies with very different ways of thinking.

Legal strategies that worked on Cable TV (as a 90s business based on a specific set of issues like, for example, no customer choice, a few fixed channels, and costs/negotiations dominated by separate content providers) are very different when you're trying to persuade Apple or Samsung to include h.266 in their Apple TV or SmartTV; or likewise Netflix or YouTube.

Largely because when you go from sending one single stream to 100K endpoints to sending 100K different individually ordered streams to separate endpoints, the bandwidth explosion and inefficiencies are relatively large. The compression gap between AV1 and H.266 is a 'pissing in the wind' kind of difference. They need more bandwidth, period.

Similarly with ATSC 3.0: more compression is mainly leading to broadcasting more channels with better feedback and DRM control, not a big net saving in aggregate bandwidth consumed.

Nonsense. You seem unaware of what Charter these days is actually selling.

Yes, there is a Charter business that sends content at a fixed time down a wire using early 1990s protocols and viewed by everyone at the same time.

But there is ANOTHER Charter business (that you also get by buying a Charter Cable TV subscription) that is more like Netflix or Prime; using the same Charter Cable TV box (or the Charter app on your Apple TV or phone or whatever) you get to watch, at any time, a large collection of content that more or less mirrors what's available on the Cable TV channels – more or less the same pay movies (e.g. whatever's on FX or TBS or whatever this month), the same scripted content, the same reality TV, etc.

HOWEVER (and this is my point) that second service, apart from all the other ways that it is garbage compared to Netflix or Prime, has had ZERO tech upgrades since it was rolled out. It provides EXACTLY the same bits as go down the "broadcast" pipe. On the user side this means no 4K and no HDR, but just as important, it means that Charter doesn't seem to care about the fact that by using h.265 they could use a quarter of their current bandwidth.

BTW, it's also simply not true that ATSC 3.0 is only being used for more channels. I can clearly see the difference between an ATSC 3.0 broadcast and its equivalent on the ATSC 1.0 channel.
