M3 vs M4

- Thread starter Dulcimer

- Tags

- m3 chip m4 chip neural engine

> I feel like I got ripped off buying an M3Pro MBP last November.

Why? It is still extremely good value; an M4 Pro will not make that big of a difference.

I think the M3 Pro has the best power and efficiency for the price.

> I feel like I got ripped off buying an M3Pro MBP last November.

It’s a never-ending cycle. My 64 GB M1 Max still runs great, and barring Apple getting the M4 Max or M5 Max to 256 GB RAM, my plan is to upgrade to the M5 or M6 if it is worth it.

> Weight storage format does not have to be the same as the internal ALU precision. You can still use bandwidth-saving quantized models and unpack the weights in the NPU. It's as you say, the NPU will be bandwidth-limited on larger models. Increasing the ALU rate is probably not very helpful in this context.

Yes, you can still unpack the weights on the NPU if you have access to it. It isn't free, but it would reduce latency for sure. Also, smaller models can be even more bandwidth-limited, depending on architecture.

Still, without software this has limited use for anyone who is not Apple.
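To make the storage-versus-compute distinction in this exchange concrete, here is a minimal Python sketch of symmetric int8 quantization: each weight is stored as one byte plus a shared scale, then unpacked to a float before use. The helper names and the per-tensor scale scheme are illustrative assumptions, not a description of how any NPU actually does it.

```python
# Illustrative only: symmetric per-tensor int8 quantization.
# quantize() and unpack() are hypothetical helpers, not an NPU API.

def quantize(weights):
    """Map float weights to int8 codes plus one shared scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def unpack(codes, scale):
    """Recover approximate float weights from the int8 codes."""
    return [c * scale for c in codes]

weights = [0.5, -1.0, 0.25, 0.0]
codes, scale = quantize(weights)
restored = unpack(codes, scale)

# Memory traffic: 1 byte per int8 code versus 4 bytes per fp32 weight.
bytes_fp32 = 4 * len(weights)
bytes_int8 = 1 * len(weights)
```

The unpacked values are only approximations of the originals (off by at most half a quantization step), which is the trade made for moving a quarter of the bytes.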

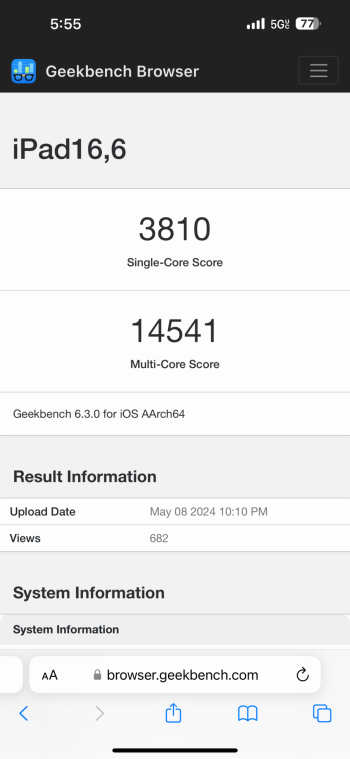

Managed to get a preliminary benchmark on this. The new "AI coprocessor" really fools the current version of Geekbench 6 and makes the single-core score so unreasonably high that I doubt whether Geekbench 6 means anything for M4 and beyond, as the composite score is biased far too much towards "AI workloads" like object detection. This might also explain why Apple's performance claims for the M4 iPad are not based on Geekbench scores: the gain would look misleadingly higher than it actually is for most workloads.

Also, the first benchmark reporting 3.9GHz was probably using an early sample or suffered some kind of detection error. The M4 in the new iPad Pro can go up to 4.4GHz.

Oh, just found there is an entry in the public database:

Quick note on this: the GB6 CPU test does not use the ML coprocessor. That’s just the CPU. If that result is the real deal, Apple just built the fastest CPU core in a consumer device.

If you look at the sub-items, you will notice that the object detection perf is more than doubled compared with M3, while the other items stay within the expected and more reasonable 10%-20% range. At least for the object detection workload, some magic coprocessor is being activated. I think it is inside the new AMX.

The "Next-generation ML accelerators" are listed with the cores, so to me they look like they belong to the core cluster, just like AMX, or the phrase refers to AMX itself. What do you think?

> I feel like I got ripped off buying an M3Pro MBP last November.

If it makes you feel better, the M2 Pro/Max buyers feel more ripped off since the M3s came out in the same year.

> If you look at the sub-items, you will notice that the object detection perf is more than doubled compared with M3, while the other items stay within the expected and more reasonable 10%-20% range. At least for the object detection workload, some magic coprocessor is being activated. I think it is inside the new AMX.

All assuming the result is legit:

A reasonable guess, though you can't be certain.

It really doesn't matter though. That one test does not bias the overall score all that much. Take it out and you still have a 15-20% performance boost for the M4 over the M3. That's not hugely surprising - it looks like they did a variation on Intel's tick-tock from M2->M3->M4 - but it's still a huge deal. Remember this is in the friggin' iPad! It's virtually certain they can push the cores a few hundred MHz more in their laptops and desktops. They are going to *crush* everyone in single-core when those ship, not just beat them as they're doing right now.

Amusingly, all the predictions I made here (in other threads) about the M3, which I got totally wrong, may come true in the M4. A year late, sad to say, but still good stuff.

This makes it likely that Apple's M4 will be superior in single core not only to all existing X86 processors, but to the next generation CPUs coming out soon (Zen 5, and Intel 15xxx). Not to mention QC, already easily beaten by the M3.

I'm very interested to see what the M4 Pro and M4 Max have in store for us. I want the Pro to be 8P8E, but the appearance of the 6 core E cluster in the M4 suggests that that's off the table. Oh well. 6P6E is still going to be pretty strong at 15-20% faster than the M3Pro. In fact, I reluctantly predict no core count increases in the Pro or Max in the M4 generation. They have other things to spend their silicon on, and they're getting big wins from IPC and clock bumps.

[Edit: actually it's plausible they'd go from 4E to 6E in the Max. It's not much, but it's something.]

> If you look at the sub-items, you will notice that the object detection perf is more than doubled compared with M3, while the other items stay within the expected and more reasonable 10%-20% range. At least for the object detection workload, some magic coprocessor is being activated. I think it is inside the new AMX.

That isn't how this works. Yes, GB6 Object Detection and Background Blur are based on ML technology, but Primate Labs doesn't write any part of GB's CPU benchmarks to call into system frameworks which may then use a coprocessor to do the actual work. GB CPU is explicitly aimed at testing only the CPU, so Primate compiles open source implementations of the algorithms they want to test for the CPU.

I guarantee you Apple is not analyzing the Arm code in the GB6 benchmark binaries, detecting that one is performing a particular ML task, and substituting an AMX (or other coprocessor) implementation. That is insanely difficult to do in a general way. (The other possibility is a narrowly focused benchmark cheat, which has historical precedent, but I consider extremely unlikely for Apple to do.)

Finally, GB6 uses geometric mean across all the sub-benchmarks to create the final composite score. The reason they do this is that geometric mean has a nice property: when you do one across many data points, a small number of outlier scores (like Object Detection here) don't tend to influence the geomean much. Most of the score difference here is the result of all the subtests showing that "iPad16,6" has a CPU significantly faster than M3.
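As a rough illustration of that property, the sketch below compares a geometric mean with an arithmetic mean over made-up subtest ratios. The numbers are hypothetical (nine subtests up 15%, one doubled) and are not taken from the Geekbench result discussed here.

```python
import math

def geomean(xs):
    """Geometric mean, computed via logs."""
    return math.exp(sum(math.log(x) for x in xs) / len(xs))

def mean(xs):
    """Plain arithmetic mean, for comparison."""
    return sum(xs) / len(xs)

# Hypothetical new-chip / old-chip ratios per subtest:
# nine subtests improve by 15%, one outlier doubles.
ratios = [1.15] * 9 + [2.0]

geo_with_outlier = geomean(ratios)
geo_without_outlier = geomean(ratios[:-1])
arith_with_outlier = mean(ratios)

print(f"geomean without outlier: {geo_without_outlier:.3f}")
print(f"geomean with outlier:    {geo_with_outlier:.3f}")
print(f"arithmetic with outlier: {arith_with_outlier:.3f}")
```

The outlier still lifts the geometric mean, but by less than it lifts the arithmetic mean, and its pull shrinks further as the number of subtests grows.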

There's a chance this benchmark is fake, but if it's real, M4 CPUs are not mere copy&paste from M3.

> GB CPU is explicitly aimed at testing only the CPU, so Primate compiles open source implementations of the algorithms they want to test for the CPU.

But GB does use the `I8MM` extension on Arm CPUs, and that feature is used for quantized machine learning workloads. This extension includes Int8 Matrix Multiply instructions, which can be implemented in the AMX and exposed as Arm instructions under this extension.

> GB6 uses geometric mean across all the sub-benchmarks to create the final composite score.

No, it is not a geometric mean; it is a weighted arithmetic mean in which the integer tests carry more weight. One interesting thing is that object detection seems to be classified as an integer test, which has more weight, because it is doing "int8" arithmetic. If my guess about the integer/float test classification is right, then without this outlier test the final composite score would be closer to 10% over M3 instead of the current almost 20%.

So how much of this is GB being deficient or outdated for ML characterization, versus the manufacturers (including but not limited to Apple) “gaming” their implementations to optimize their benchmark scores without caring about the resulting impact on actual performance in the field?

I’ll admit that I’m prejudiced and I believe the latter is the real issue.

> Yes, you can still unpack the weights on the NPU if you have access to it. It isn't free, but it would reduce latency for sure. Also, smaller models can be even more bandwidth-limited, depending on architecture.
>
> Still, without software this has limited use for anyone who is not Apple.

Uh, unpacking the weights IS free!

There are at least two ways in which weights are "packed" (omit zero weights, and quantization). They are described here:

US11120327B2 - Compression of kernel data for neural network operations - Google Patents

Embodiments relate to a neural processor circuit that includes a kernel access circuit and multiple neural engine circuits. The kernel access circuit reads compressed kernel data from memory external to the neural processor circuit. Each neural engine circuit receives compressed kernel data from...

patents.google.com

Later versions of the ANE include a few more ways to compress weights (mostly relevant to vision, but maybe also applicable to audio) based on symmetries in the weights (e.g. mirror symmetry or rotational symmetry).
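For readers unfamiliar with the "omit zero weights" idea, here is a generic Python sketch of one common way to do it: a presence mask plus the list of non-zero values. This is an assumed textbook-style encoding for illustration only; it is not the format described in the patent, and the function names are made up.

```python
# Generic illustration of zero-omitting weight packing.
# Not the ANE's or the patent's actual format.

def pack_nonzero(weights):
    """Return a 0/1 presence mask and only the non-zero weights."""
    mask = [1 if w != 0 else 0 for w in weights]
    values = [w for w in weights if w != 0]
    return mask, values

def unpack_nonzero(mask, values):
    """Rebuild the dense weight list from the mask and stored values."""
    remaining = iter(values)
    return [next(remaining) if bit else 0 for bit in mask]

kernel = [0, 3, 0, 0, -2, 0, 0, 7]
mask, values = pack_nonzero(kernel)
rebuilt = unpack_nonzero(mask, values)
```

With mostly-zero kernels, the mask costs one bit per weight while only the few surviving values need full storage, so less data has to be read from memory.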

Could people please try to stick to some sort of confirmed reality when making claims in these threads.

> So how much of this is GB being deficient or outdated for ML characterization, versus the manufacturers (including but not limited to Apple) “gaming” their implementations to optimize their benchmark scores without caring about the resulting impact on actual performance in the field?
>
> I’ll admit that I’m prejudiced and I believe the latter is the real issue.

For the M4 case, it is the former. Adding optimizations for Int8 matrix multiply is aimed specifically at AI workloads and makes a lot of sense, because such workloads are expected to become more common.

The problem, in my opinion, is that the AI workloads have too much weight (they should not be classified as integer workloads in the first place). But it is understandable, as AI workloads were not a dedicated category when Geekbench 6 came out, and the developers probably never expected that AI workloads could be optimized in such a way that a more than 100% performance increase could happen within a single generation, outperforming every other CPU on the list.

> So how much of this is GB being deficient or outdated for ML characterization, versus the manufacturers (including but not limited to Apple) “gaming” their implementations to optimize their benchmark scores without caring about the resulting impact on actual performance in the field?
>
> I’ll admit that I’m prejudiced and I believe the latter is the real issue.

So your analysis is that Apple makes deliberate changes to their HW designs to achieve better scores on GB6-ML and other benchmarks and then NEVER REFERENCES THOSE NUMBERS, IN KEYNOTES OR MARKETING?

That's one hell of an evil plan!

(They have, on rare occasions, referred to CPU or GPU benchmarks. I have not once ever seen them refer to any sort of ML benchmark...)

Geekbench results for M4:

browser.geekbench.com

Better than I thought especially with single core.

> But GB does use the `I8MM` extension on Arm CPUs, and that feature is used for quantized machine learning workloads. This extension includes Int8 Matrix Multiply instructions, which can be implemented in the AMX and exposed as Arm instructions under this extension.

Another theory is that, with this generation, they really did expose the AMX instructions as SME, and Geekbench 6 is picking them up.

> Geekbench results for M4: browser.geekbench.com
>
> Better than I thought especially with single core.

And this is in the iPad. I wonder if there will be any differences in the MacBook Pro.

Anyhow, as expected, that is a 10-core M4. It's way faster for single-core, but is roughly the speed of M3 Pro for multi-core.

I'm guessing then for 9-core M4, it might be around 12000 multi-core, or roughly the speed of M3. We shall see next week.

> [...] If my guess about the integer/float test classification is right, then without this outlier test the final composite score would be closer to 10% over M3 instead of the current almost 20%.

No, it doesn't look like it. Just eyeball the rest of the individual scores. You're still looking at >15%, assuming my eyeballs can do math well enough.

> So how much of this is GB being deficient or outdated for ML characterization, versus the manufacturers (including but not limited to Apple) “gaming” their implementations to optimize their benchmark scores without caring about the resulting impact on actual performance in the field?
>
> I’ll admit that I’m prejudiced and I believe the latter is the real issue.

Apple has never been known to game benchmarks. But they are consistently working smart on top of working hard, when it comes to their silicon. The marketing dept. is not above cherrypicking data on occasion (less often than you might think though), but the engineers are laser-focused on maximizing real performance. Or rather, usually, perf/power.

This Twitter user seems to be saying the same thing that @Gnattu speculated: that it’s really SME that’s helping here. Granted, this person is comparing an M3 Max with fans to a fanless M4, and adjusting for frequency to arrive at about a 3% IPC increase.
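"Adjusting for frequency" here presumably means dividing the score ratio by the clock ratio. A minimal Python sketch of that arithmetic follows; the function name and every input number are hypothetical placeholders, not figures from the tweet or from any real benchmark run.

```python
def ipc_gain(score_new, score_old, ghz_new, ghz_old):
    """Estimate relative IPC: performance ratio divided by clock ratio."""
    return (score_new / score_old) / (ghz_new / ghz_old)

# Hypothetical inputs: a 13.3% higher score at a 10% higher clock.
estimate = ipc_gain(score_new=1133, score_old=1000, ghz_new=4.4, ghz_old=4.0)

# Hypothetical inputs: the score rises exactly as much as the clock.
clock_only = ipc_gain(score_new=1100, score_old=1000, ghz_new=4.4, ghz_old=4.0)

print(f"estimated IPC change: {(estimate - 1) * 100:.1f}%")
```

The estimate is only as good as the clock figures fed into it; if the chips did not actually sustain the assumed frequencies during the run (for example, fanless versus actively cooled), the result shifts accordingly.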

We'll see. But in the meantime, why is everyone fixated on IPC?

This is probably a hangover from the Intel Experience, where they drove clocks at all costs, to everyone's detriment. The fact is, though, that the lowest-hanging fruit for Apple at the moment may well be clock improvements. And they surely seem to have delivered here, beyond the modest process gains from N3E.

> But GB does use the `I8MM` extension on Arm CPUs, and that feature is used for quantized machine learning workloads. This extension includes Int8 Matrix Multiply instructions, which can be implemented in the AMX and exposed as Arm instructions under this extension.

I think you are leaping to extremely shaky conclusions.

FEAT_I8MM is part of Arm's Advanced SIMD (ASIMD), formerly known as NEON. FEAT_I8MM instructions read and write the processor's core 32-entry 128-bit SIMD register file, V0-V31.

AMX is an Apple custom coprocessor extension. It is truly a coprocessor, by which I mean it isn't very tightly coupled to the main core. AMX instructions essentially send a signal to a remote out-of-core block which goes off and does some computation for a while, then signals the main CPU when it's done. AMX has its own register set which is not part of the standard Arm register set, and it's very different in organization. There are just three AMX registers, X, Y and Z, with operations generally reading from X and Y and writing to Z. They are absolutely enormous: X and Y are 512 bytes, Z is 4096 bytes. AMX instructions operate on 64-byte (512-bit) slices of X and Y, writing results to a slice of Z. To cap it all off, there is no way to move data directly between the AMX X/Y/Z and ASIMD V0-V31; data has to be copied through memory stores and loads.

So it makes absolutely no sense for Apple's CPU architects to ship some subset of Arm ASIMD instructions to the AMX unit. ASIMD is a classic tightly coupled small-vector low-latency SIMD extension, AMX is a loosely coupled large-vector high-latency coprocessor. These two flavors do not taste great together! If Apple greatly improved FEAT_I8MM in M4, I can just about guarantee it wasn't by moving the work to a coprocessor - they would want to do all ASIMD work in-core.
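The sizes quoted in this post can be put side by side with some quick arithmetic. The Python below only restates the figures given above (32 ASIMD registers of 128 bits; AMX X and Y of 512 bytes, Z of 4096 bytes, 64-byte slices); the variable names are made up for the illustration.

```python
# Figures as stated in the post above; names are illustrative.
ASIMD_REGISTERS = 32
ASIMD_REGISTER_BYTES = 128 // 8          # 128-bit registers

AMX_X_BYTES = 512
AMX_Y_BYTES = 512
AMX_Z_BYTES = 4096
AMX_SLICE_BYTES = 64                     # 512-bit slices

asimd_file_bytes = ASIMD_REGISTERS * ASIMD_REGISTER_BYTES   # 512
amx_state_bytes = AMX_X_BYTES + AMX_Y_BYTES + AMX_Z_BYTES   # 5120

x_slices = AMX_X_BYTES // AMX_SLICE_BYTES                   # 8
z_slices = AMX_Z_BYTES // AMX_SLICE_BYTES                   # 64
slice_vs_asimd_register = AMX_SLICE_BYTES // ASIMD_REGISTER_BYTES  # 4
```

In other words, the entire ASIMD register file is the same size as AMX's X register alone, and a single AMX slice is as wide as four ASIMD registers, which is the scale mismatch the argument rests on.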

> No, it is not a geometric mean; it is a weighted arithmetic mean in which the integer tests carry more weight. One interesting thing is that object detection seems to be classified as an integer test, which has more weight, because it is doing "int8" arithmetic. If my guess about the integer/float test classification is right, then without this outlier test the final composite score would be closer to 10% over M3 instead of the current almost 20%.

See page 9. GB6 CPU integer is the geomean of integer scores, GB6 FP is the geomean of FP scores, and the weighted arithmetic mean is done to combine the int and fp scores into a single "CPU" number.
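A minimal Python sketch of the two-stage aggregation described here: a geometric mean within the integer and FP sections, then a weighted arithmetic mean across the two. The 0.65 integer weight is an assumption on my part and should be checked against the Primate Labs document; the subtest scores are invented.

```python
import math

def geomean(xs):
    """Geometric mean, computed via logs."""
    return math.exp(sum(math.log(x) for x in xs) / len(xs))

def composite(int_scores, fp_scores, int_weight=0.65):
    """Geomean per section, then a weighted arithmetic mean of the sections.

    int_weight is an assumed value, not confirmed by this thread.
    """
    int_section = geomean(int_scores)
    fp_section = geomean(fp_scores)
    return int_weight * int_section + (1.0 - int_weight) * fp_section

# Hypothetical subtest scores; the last integer entry plays the outlier.
int_scores = [3000, 3200, 3100, 6000]
fp_scores = [3300, 3400, 3500]

score = composite(int_scores, fp_scores)
score_without_outlier = composite(int_scores[:-1], fp_scores)
```

Both descriptions in the thread are partly right under this scheme: the outlier is dampened by the geomean inside its section, and the section it lands in then determines how much weight it carries in the final number.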

> Another theory is that, with this generation, they really did expose the AMX instructions as SME, and Geekbench 6 is picking them up.

For this to be true, GB6 would need to already be shipping alternate code paths which use SME instructions in their software. Why would they be doing this prior to availability of any CPUs which can execute SME on the platforms they support? How would they have debugged it, and made sure it worked? Look at this giant Arm ISA feature matrix:

There are some very modern chips listed there. Literally none of them support SME, not even the latest cores from Arm itself which do support SVE2.

I think you (and that guy on twitter someone else mentioned) don't seem to understand that CPU features have to be explicitly coded for. If you ship a binary containing zero SME instructions, and you never call a system API which uses SME on your behalf, you get nothing out of SME. And on the other side of things, if you ship a binary which uses SME instructions and users attempt to run it on a processor which lacks them, they'll experience your program crashing because it tried to execute an illegal instruction.

There is a workaround for that latter problem - you use system APIs to detect which optional ISA features the processor supports, and select between alternate implementations of key parts of your program based on what it returns. However, once again, what reason is there to believe that GB6 did this prior to the general availability of any CPU which supports SME? They have to test and debug before they ship, how would they have done it before having any hardware to test and debug with?
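The detect-and-select pattern described above can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: Python cannot emit I8MM or SME instructions, so the "optimized" path just reuses the baseline math, and the feature set is passed in by hand rather than queried from the operating system.

```python
def matmul_baseline(a, b):
    """Plain triple-loop matrix multiply: runs on every CPU."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(inner))
             for j in range(cols)]
            for i in range(rows)]

def matmul_i8mm(a, b):
    """Stand-in for a path written around I8MM instructions."""
    return matmul_baseline(a, b)

# Only code paths that were written, tested and shipped appear here.
# There is no "sme" entry, so SME-capable hardware changes nothing.
SHIPPED_PATHS = [("i8mm", matmul_i8mm)]

def select_matmul(cpu_features):
    """Pick the first shipped path whose feature the CPU reports."""
    for feature, implementation in SHIPPED_PATHS:
        if feature in cpu_features:
            return implementation
    return matmul_baseline

# A hypothetical CPU reporting both features still gets the I8MM path.
chosen = select_matmul({"i8mm", "sme"})
```

A CPU that reports only `"sme"` falls through to the baseline in this sketch, which is the point being made: a new instruction set extension helps only if a matching code path was built into the binary.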

> it will draw a fair bit less power (good for MacBooks) and be cheaper to produce so prices should hold the line and we might see more base RAM or storage since Apple would have more margin to work with.

Great, so this will work just dandy in an ever luvin 27" iMac

> Geekbench results for M4: browser.geekbench.com
>
> Better than I thought especially with single core.

If true, that smokes my M1 Max on single- and multi-core CPU. For my workloads, the M2 Ultra is still the king, and the M3 Max is about 40% better than the M1 Max. Can’t wait to see what they will do with the high-end M4 chips.
