"I'm guessing AnandTech forums."

Or maybe ArsTechnica forums…


M4+ Chip Generation - Speculation Megathread [MERGED]

- Thread starter GoetzPhil



Oh yeah, I never think about that abbreviated as AT since they already have Ars.

Yeah, I've only ever seen it as Ars over 20+ years…

AMD's approach to chiplet-based CPUs has high (idle) power draw, and that's not what Apple wants. How is Intel's?

"The M2 Ultra is honestly embarrassing for a $4K desktop computer. You can get vastly more performance in a PC for that price. Apple Silicon was made for laptops and phones. There'd be no shame for Apple if they waved the white flag and admitted that on desktop, where performance per watt is irrelevant, Apple silicon can't compete."

My last PC certainly won the vertical drop award.

A decent time of 1.8 seconds from release out of my office window to contact on the concrete below.

A magnificent performance, unmatched by any Apple Mac since.

As far as I can tell AMD's high idle power draw is a thing of the past in Zen 4/5. My impression is that the main culprit was the bad GloFo node the IO die was forced to use on Zen 3 and previous chips. Now they use TSMC N6 dies for IO. I've got this nagging feeling that there was another potential culprit as well, but regardless, high power draw at idle seems to have been solved.

As @leman mentioned, Intel's Arrow Lake suffers from memory bandwidth/latency issues, though it isn't clear that's 100% a result of the disaggregated design, as I don't think Lunar Lake suffers nearly as badly (it had a slightly different but overall similar approach using Foveros) and Intel keeps promising "fixes". So we'll see. Also, AMD uses chiplets on its server and AI chips and seems to do okay with respect to bandwidth.

Both Intel and AMD use separate IO dies, it is unclear if Apple will follow suit. They might, but they might not. It could be more similar to the Ultra where the IO is on both dies or it could be that all the IO will be on the CPU/GPU die (probably CPU die in that case) and the other die gets its memory through the interconnect.

Here is a rundown of all the various packaging techniques (though unfortunately part of it is behind a paywall):

Advanced Packaging Part 2 - Review Of Options/Use From Intel, TSMC, Samsung, AMD, ASE, Sony, Micron, SKHynix, YMTC, Tesla, and Nvidia

Advanced packaging exists on a continuum of cost and throughput vs performance and density.

(part 1 here)

There is a rumor that Nvidia will use CoWoS-L packaging tech for their next Rubin GPU (and I believe on the soon-to-be-released Blackwell) which is somewhat similar to Apple's current InFO-LSI packaging for the Ultra, but fundamentally different from the rumored SoIC fabrication (which I believe AMD uses for its 3D cache). I think Apple uses InFO-R for packaging memory. Intel did too on Lunar Lake but said it was too expensive and they won't be doing that going forwards (one reason, the better one, why Apple charges what it does for memory).

I'm coming from the Other side, and places like AT have more serious, extensive discussion on this and other uArch and litho technologies than I'm seeing in Apple forums.

If I am understanding the queries here, folks are wondering if Apple moving to tiles is going to somehow improve performance?

Generally, no.

Tiles are primarily an economic benefit: wafer yield increases even though defect density per unit area stays the same between tiled and monolithic full-die designs.

A defect in a tile only affects that tile's die area, so only a small amount of silicon gets trashed.

A similar defect in a monolithic, full-fat SoC design affects multiples of that area: a large amount of silicon gets trashed, or binned down if sufficient redundancy has been included.

With tiles being so small, high yields may also make it possible to reduce the levels of redundancy designers have learned to add from monolithic-die experience, saving even more space and making the result even less expensive.
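To put rough numbers on the yield argument, here is a minimal sketch using the simple Poisson yield model (yield = exp(-area × defect density)). The defect density, die area, and tile count are made-up illustrative values, not figures for any real Apple or TSMC part, and the model ignores redundancy, binning, and packaging entirely.

```python
import math

def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model."""
    return math.exp(-area_mm2 * defects_per_mm2)

# Hypothetical numbers, chosen only for illustration.
D0 = 0.001          # defects per mm^2 (0.1 per cm^2)
MONO_AREA = 600.0   # one large monolithic die, in mm^2
TILES = 4           # the same silicon split into four equal tiles

mono_yield = poisson_yield(MONO_AREA, D0)
tile_yield = poisson_yield(MONO_AREA / TILES, D0)

# A defect scraps a whole monolithic die but only a single tile,
# so the defect-free share of fabricated silicon is higher with tiles.
print(f"monolithic: {mono_yield:.1%} of silicon is defect-free")
print(f"tiled:      {tile_yield:.1%} of silicon is defect-free")
```

The defect density is identical in both cases; only the area lost per defect changes.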

So far, I've only run across a handful of folks (X/Twitter posters referenced on AT) who actually get into the nitty-gritty details, doing comparisons across Apple and other micro-architectures and foundry lithographies.

If Apple is moving to tiles, then it is likely primarily because their monolithic dies are now becoming large enough that the cost of adding additional redundancy to overcome defect densities is becoming too high to maintain their cost basis.

Going with tiles is not a get-out-of-jail-free card. The downside is that moving away from monolithic means you need inter-tile fabrics, which are a can of worms on their own and so far have generally led to lower performance than monolithic dies in latency and throughput.

This can be alleviated with high-speed fabrics, and Apple can probably avoid many of Intel's and AMD's mistakes with careful R&D. However, I might be wrong, but I'm not aware of anyone generally beating monolithic's inherent latency/throughput advantage with tile use.

Aye, by itself, moving to a disaggregated design will do little to improve performance (in fact it would be a slight negative, as bandwidth and latency become worse/more power hungry). However, because it becomes more economical to produce dies, a secondary effect is that Apple could, in theory, make larger GPUs with more cores for a lower cost than what they would've been able to make with a monolithic design. Of course they would still have to balance the power costs of those additional cores, but that could be done with clock speed.


"Tiles are primarily an economic benefit…"

Not true.

Any decent modern SoC is designed for resiliency, so that almost all manufacturing flaws can be worked around. Sometimes this is obvious (e.g. have a way to program out a CPU or GPU core that is flawed, and sell the result as 8 core rather than 10 core, or whatever); sometimes it's more subtle, like designing all SRAMs with one or two redundant columns, so that you can "program out" a defective column and use a redundant one instead.

Meanwhile, even apart from the additional power consumed by chiplets (it's always going to cost more to cross a chiplet boundary than to stay within a chiplet), packaging at the level required is not cheap AND you can lose yield during the packaging, perhaps more than you save from your smaller chiplets hitting fewer manufacturing defects.

Two data points:

- Intel makes vastly less profit on its chiplet based SoCs than on its monolithic SoCs. Some of this is paying TSMC, but some of it is just the fact that chiplets are NOT a way to save money. They have various uses, but saving money is not one of them.

search for Intel Lunar Lake. (Though the entire article is interesting)

- Why is HBM so expensive? The general consensus is that "fairly priced" HBM should be about 3x the price of DRAM. Why so high? Because you lose some wafer space to the TSVs and other chiplet paraphernalia, you lose a fair number of chips to *packaging yield*, and the cost of the packaging.
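The trade-off between die yield and packaging yield can be sketched in a few lines. This is a toy cost model under loud assumptions: Poisson die yield, known-good-die testing before assembly, a flat packaging cost, and a packaging yield that scraps the whole assembly on failure. Every number below (cost per mm², defect density, packaging cost and yield) is hypothetical and chosen only to show that the answer can go either way depending on die size.

```python
import math

def die_yield(area_mm2: float, d0: float) -> float:
    """Poisson yield: probability that a die of this area has no defects."""
    return math.exp(-area_mm2 * d0)

def cost_per_good_monolithic(area_mm2, d0, cost_per_mm2):
    """Silicon cost of one working monolithic die."""
    return area_mm2 * cost_per_mm2 / die_yield(area_mm2, d0)

def cost_per_good_chiplet(area_mm2, n, d0, cost_per_mm2,
                          packaging_cost, packaging_yield):
    """Cost of one working package built from n equal, pre-tested tiles."""
    tile_area = area_mm2 / n
    good_tile_cost = tile_area * cost_per_mm2 / die_yield(tile_area, d0)
    # A failed assembly scraps all n good tiles plus the packaging work.
    return (n * good_tile_cost + packaging_cost) / packaging_yield

# Hypothetical inputs, for illustration only.
D0, COST_MM2 = 0.001, 0.20        # defects/mm^2, dollars/mm^2
PKG_COST, PKG_YIELD = 10.0, 0.95  # dollars per assembly, assembly yield

small_mono = cost_per_good_monolithic(150.0, D0, COST_MM2)
small_tiled = cost_per_good_chiplet(150.0, 3, D0, COST_MM2, PKG_COST, PKG_YIELD)
big_mono = cost_per_good_monolithic(800.0, D0, COST_MM2)
big_tiled = cost_per_good_chiplet(800.0, 4, D0, COST_MM2, PKG_COST, PKG_YIELD)

print(f"150 mm^2: monolithic ${small_mono:.2f} vs tiled ${small_tiled:.2f}")
print(f"800 mm^2: monolithic ${big_mono:.2f} vs tiled ${big_tiled:.2f}")
```

With these made-up inputs the small chip is cheaper as a monolithic die and the large one is cheaper as tiles. The model leaves out die-to-die interface area, binning of partially defective dies, and mask costs, all of which shift the crossover.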

"If Apple is moving to tiles, then it is likely primarily because their monolithic dies are now becoming large enough…"

Apple ALREADY use chiplets, in the sense that Ultras are a chiplet design. If Apple switch to a more aggressive version of such a design (disaggregated GPU vs CPU) it will be for OPTIONALITY (i.e. the ability to create more SKU variants) rather than because it saves money.

It's a finely balanced issue whether it makes sense. Depends on the cost of masks, the cost per sq-mm of N2, etc.

It's POSSIBLE that given Apple's "traditional" volumes for Max and Ultra, a monolithic design (even with extra silicon "wasted" on duplicate ISPs, Secure Enclaves, more E-cores than makes sense, etc) was optimal.

BUT if Apple recently (say two or three years ago) felt it made sense (maybe even just for internal use) to build up a large number of data-warehouse-specific machines, and to specialize these, the most pressing being the equivalents of GB300s for training, with some P-compute, maybe no E-compute, and as much GPU compute as possible (maybe along with some ANE?), then maybe the equation changes?

Given the costs of GB300 (IF you can even buy them) and the volumes Apple may want to install, running the numbers may show that, at least for one or two design iterations a chiplet design is actually the cheapest fastest way to get to where Apple wants to be, in that it allows them to do some degree of internal experimentation with the balance of warehouse designs, even if none of the results are ever seen by the public?

"…packaging at the level required is not cheap AND you can lose yield during the packaging…"

That's true, packaging has its own costs. You can save additional money, though, by doing things like separating out the IO die and manufacturing it on a cheaper node (say, TSMC N6), and by repurposing a die without having to completely redesign a larger monolithic design (getting a bit into your later point about packaging smaller dies together being useful for flexibility). AMD has said they definitely save money using this approach. It also probably depends on the type of packaging tech, the size of the dies, what the hypothetical monolithic die might be, and the yields of all of the aforementioned.

I would definitely wager that a huge percentage of Intel's lack of profit on Lunar/Arrow Lake comes from having to pay TSMC for N3. After all, in the article you linked they made the cost comparison to Meteor Lake, where Lunar Lake is more expensive even though Meteor Lake actually had more separate dies (two of which were already TSMC, albeit N5 and N6). A counterpoint to my position is that Intel said packaging the memory (not even HBM, just what Apple does on all its chips) was too expensive and that it would not continue doing so for Panther Lake, probably due to a combination of paying TSMC and simply the cost of including memory on package (which supports your point that packaging, even relatively simple, smaller packaging compared to say HBM, is expensive). I wonder how much Apple's LPDDR on-die packaging costs for the R1. Probably less than, but more similar to, HBM than their standard memory packaging.

"If Apple switch to a more aggressive version of such a design (disaggregated GPU vs CPU) it will be for OPTIONALITY…"

That said, I'm very hopeful about Apple using these techniques to (eventually) create more flexible chip designs. From my perspective as a consumer, that's the most exciting.

"Not true. Any decent modern SoC is designed for resiliency, so that almost all manufacturing flaws can be worked around."

Not sure why you're trying to disagree with me when everyone has been switching to Tiling because it does in fact reduce losses from chip defects.

If your redundancy isn't enough to let you scavenge an 8 core down to a 6 core, or to work around some other failure, your loss is a small tile-sized area versus potentially a full CPU or SoC, depending on where the failure is.

Packaging losses are there, known, and included in the cost basis, and still everyone and their grandmother is making tiling the de facto standard. Are they also wrong?

I'll see your cite with my own: https://arxiv.org/html/2203.12268v4

So as I said, Apple moving to more of a tile approach in future is likely due to them reaching common limits where defect density is starting to affect the economics they want to see.

And going with tiles instead of monolithic means they will have to do the work on fabric interconnects, interposers, etc., just like AMD and Intel have done.

So far, I don't think I've seen any cases where any of these comms technologies have bested what is available when a design is monolithic. Perhaps Apple, Intel or AMD (TSMC) have some uber inter-tile link in the works; I'm happy to be shown to be wrong if some cheap solution is on the way that will make this point moot.

That being said, I'll reiterate that moving to Tiles en masse as Apple might be doing, is not automatically going to increase performance as I thought I was reading. Apple will however be able to look at all the work previously done by others and avoid a lot of costly mistakes/time either using a newer proven technology or doing some heavy lifting with TSMC or other and adapting one in a uniquely Apple way.

Apple bought PA-Semi years ago and ended up spanking ARM/World, and then lost a lot of 0.1% talent which made the M2/M3 lag quite apparent. I've not heard or read anything indicating they've bought out someone with some new, world-changing interconnect design though, so I would expect them to be using one of the better, probably more expensive technologies.

"Studio M4 max could start from $1999"

Sure, it could. Not in this universe, of course. 😅

"Why not?"

MBP 14" M4 Max 14C 36GB/1TB is 3199 USD. I guess the equivalent Studio setup would be at least 2199, unless they try to match the M2 Max Studio (30C GPU, 32GB/512GB) at 1999 USD, but that seems unlikely.

(Btw, in Europe the prices have always been 600-700 Euros higher than what they should have been based on USD/Euro equivalence. E.g. the above MBP and Mac Studio are priced at 3849 Euros and 2419 Euros, respectively!)

Also, if the Mac Studio is released after tariffs are implemented, the cost will, of course, be shifted onto customers, meaning that in the US people will start to pay European prices and in Europe Apple products will basically become a no-go...

"So far, I don't think I've seen any cases where any of these comms technologies have bested what is available when a design is Monolithic."

I’d think that UltraFusion is quite successful at delivering on-die network performance to a tiled solution.

"So as I said, Apple moving to more of a Tile approach in future is likely due to them reaching common limits where defect density is starting to affect the economics they want to see."

Why this confidence that they are moving to tiles? As I mentioned before, there are much more interesting packaging solutions they can pursue.

"Apple bought PA-Semi years ago and ended up spanking ARM/World, and then lost a lot of 0.1% talent which made the M2/M3 lag quite apparent."

I don’t follow. The velocity of Apple Silicon architectural improvements didn’t really change much since M1, they’ve been delivering consistent year to year improvements and M4 is the widest shipping CPU arch. There is no evidence whatsoever of a design lag or talent loss, just some myths perpetuated by vloggers with unrealistic expectations. In fact, the single-core performance of Apple Silicon went up by 50-60% in four years as measured by popular benchmarks.

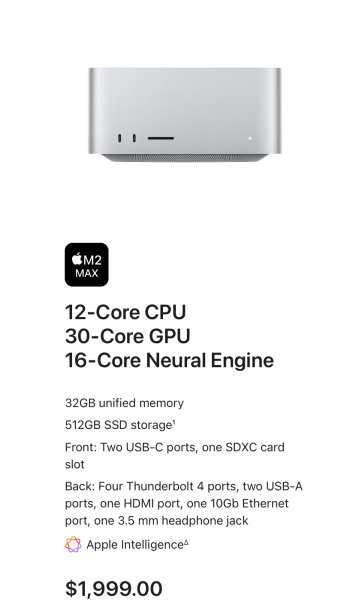

"Sure, it could. Not in this universe, of course. 😅"

It's already happened.

Current M2 Max Mac Studio starting price

"Current M2 Max Mac studio starting price"

Yes, because M2 Max and M4 Max are the same thing.

"(Btw, in Europe the prices have always been 600-700 Euros higher than what they should have been based on USD/Euro equivalence. E.g. the above MBP and Mac Studio are priced at 3849 Euros and 2419 Euros, respectively!)"

That's because EU prices include the VAT and likely additional taxes (recycling etc.). If you subtract the VAT, you end up with a base price of 3180 euros for that MacBook in Germany, or less than $100 USD difference.
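For anyone wanting to redo this kind of comparison, a minimal sketch of backing VAT out of a shelf price follows. The 19% figure is Germany's standard VAT rate; the shelf price is a hypothetical round number rather than any actual Apple price, and other levies (recycling fees and the like) are not modelled.

```python
def net_price(gross: float, vat_rate: float) -> float:
    """Pre-tax price given a VAT-inclusive price and a rate such as 0.19."""
    # VAT is charged on top of the net price, so divide by (1 + rate);
    # multiplying the gross price by (1 - rate) would remove too much.
    return gross / (1.0 + vat_rate)

GERMAN_VAT = 0.19           # standard German rate
example_gross_eur = 1190.0  # hypothetical VAT-inclusive shelf price

print(f"net: {net_price(example_gross_eur, GERMAN_VAT):.2f} EUR")
```

The net figure is what should be compared against a US list price, after currency conversion.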

So the prices listed on Apple's website don't include the VAT? Interesting, I never paid attention to that, since I was not going to buy overseas something that is available locally anyway. Thanks for the info!

"Yes, because M2 Max and M4 Max are the same thing."

The MBP M2 Pro/Max and MBP M4 Pro/Max, which are not the same thing, kept the same starting price.

Hey, even when Apple bumped the base from 8GB to 16GB they didn't raise the price by $1. Again, in what universe do you live?

The starting price of the M4 Max Studio is actually a more interesting question than I originally thought. The complicating issue (beyond possible tariffs, of course) is that the M4 Max laptops have indeed increased in price since the M2 Max (by about $100 for the 14" and $200 for the 16", also dropping the cheaper 512GB tier of the 16" M2 Max ... which I didn't even remember they had; the 14" M2 Max started at 1TB). However, Apple also likes to keep the base models of each product tier as stable as it can, so the M4 Pro still starts at $2000 for the 14" and $2500 for the 16". This is also true of all the other base products as far as I can tell, and the M4 Max is the base chip of the Studio.

As such, it is probable that the M4 Max Studio will start at $2K, BUT we might also see larger price increases as we move up the product sub-tiers than what we saw with the M2 Max Studio. In other words, the Studio M4 Ultra (whatever it ends up being) and the full Studio M4 Max may cost more than the M2 Ultra and full M2 Max did for the Studio. The base price might also increase if they drop the 512GB base Studio tier and move the Studio to start at 1TB. Possible.


MacBook Pro "M2 Max" 12 CPU/30 GPU 14" Specs (14-Inch, M2 Max, 2023, MPHG3LL/A*, Mac14,5, A2779, 8102*): EveryMac.com

Technical specifications for the MacBook Pro "M2 Max" 12 CPU/30 GPU 14". Dates sold, processor type, memory info, hard drive details, price and more.

MacBook Pro "M2 Max" 12 CPU/30 GPU 16" Specs (16-Inch, M2 Max, 2023, BTO/CTO, Mac14,6, A2780, 8103*): EveryMac.com

Technical specifications for the MacBook Pro "M2 Max" 12 CPU/30 GPU 16". Dates sold, processor type, memory info, hard drive details, price and more.

MacBook Pro "M2 Pro" 10 CPU/16 GPU 14" Specs (14-Inch, M2 Pro, 2023, MPHE3LL/A*, Mac14,9, A2779, 8102*): EveryMac.com

Technical specifications for the MacBook Pro "M2 Pro" 10 CPU/16 GPU 14". Dates sold, processor type, memory info, hard drive details, price and more.

MacBook Pro "M2 Pro" 12 CPU/19 GPU 16" Specs (16-Inch, M2 Pro, 2023, MNW83LL/A*, Mac14,10, A2780, 8103*): EveryMac.com

Technical specifications for the MacBook Pro "M2 Pro" 12 CPU/19 GPU 16". Dates sold, processor type, memory info, hard drive details, price and more.


"So the prices listed on Apple's website don't include the VAT? Interesting, I never paid attention to that, since I was not going to buy overseas something that is available locally anyway. Thanks for the info!"

Listed prices in the US never include the tax. It's very confusing from the European perspective.

"I suppose it makes some sense for online shopping, as tax differs by state, but it's the same in supermarkets and restaurants. No idea how it actually works with online purchases, whether the tax gets added to your shopping cart or whether you are supposed to pay it separately."

Both.

"Why this confidence that they are moving to tiles? As I mentioned before, there are much more interesting packaging solutions they can pursue."

I remember seeing a couple of YouTube videos from High Yield and Asianometry discussing "hybrid bonding" - is that in Apple's near future? Can we expect "stacked" ultra pieces in an iPhone/iPad within five years?

"Assuming a 19.5 month refresh cycle

- M1: Q4 2020 5nm

- M2: Q3 2022 5nm

- M3: Q1 2024 3nm (N3)

- M4: Q4 2025 2nm (N2)

- M5: Q2 2027 1.4nm (A14)

- M6: Q4 2028 1.4nm (A14)

- M7: Q3 2030 1nm (A10)

- M8: Q2 2032 0.7nm (A7)

- M9: Q4 2033 0.5nm (A5)

- M10: Q3 2035 0.3nm (A3)"

I think you have it wrong. I think reduction in feature size will be more linear. I see this as the future:

- M1: Q4 2020 5nm

- M2: Q3 2022 5nm

- M3: Q1 2024 3nm (N3)

- M4: Q4 2025 2nm (N2)

- M5: Q2 2027 1nm (A14)

- M7: Q3 2030 0 nm (A10)

- M8: Q2 2032 -1 nm (A7)

- M10: Q3 2035 -2 nm (A3)

So in only 10 years we will have negative feature sizes. Macs will produce more power than they consume.

Seriously. Each new process requires a new and MUCH more expensive production line, and at some point the cost of replacing the line is no longer worth it. Over the last few years Apple has been getting a free ride; they just wait while chips get smaller. Apple does not need to reinvent much. They are basically running the 1970s vintage BSD Unix on a smaller and cheaper computer. The overall idea has been unchanged for 50+ years. Maybe someday AI will push us away from that model. But on the other hand, the same OS has survived and is older than many people reading this.

Feature size reduction can only continue for so long. In reality "zero" is a hard limit and atoms have finite size.

"AMD has said they definitely save money using this approach…"

I would put this in the category of optionality. The way AMD uses chiplets allows them to create a wide range of SKUs from a few base components. This makes economic sense if you don't sell enough in a certain market to justify a dedicated, optimized mask set.

But that does not seem to be what Intel has in mind - they're not (as far as I know) building Xeons from their Lunar Lake components (and they can't because, in their infinite wisdom, they place different IP in Xeons from what they place in Lunar Lake...)

"…everyone has been switching to Tiling because it does in fact reduce losses from chip defects."

Everyone has NOT switched to tiles!

AMD has (as I described above) for optionality reasons.

Intel did for marketing reasons and, this being stupid, it's one more thing killing them.

Meanwhile:

Nvidia and Apple both use chiplets as an idea, but make the chiplets as large as practical before gluing them together, not the crazy Ponte Vecchio level of disaggregation Intel is championing.

Qualcomm, MediaTek, Ampere, Amazon, Google (and anyone I left out) are not using them.

Chiplets are a technology, and like most technologies, have their place. My complaint is that you seem to have absolutely drunk the Intel Kool-Aid that chiplets are the optimal design point for everything going forward, even something as small as an M1 class sort of chip (i.e. something targeting a tablet or low-end laptop).

It may be (unlike Intel I don't claim to predict the future ten years from now) that in ten years chiplets will in fact be the optimal design point for everything from a phone upward. But I doubt it, and that is certainly NOT the case today.

And I'm sorry, I can't take anyone who talks about the "M2/M3 lag" seriously. This, like the claims about Apple's loss of talent, is the sort of copium nonsense you read about on x86-support-group sites, not in genuine technical discussion.
