In Logic Pro, the Mac Pro 2023 M2 Ultra performs worse than the Mac Pro 2019 Intel Xeon 28 core.

Which test/benchmark did you run to get this result?

View attachment 2226928

MP 14,8 Mac Pro 2023 is a scam

- Thread starter Xenobius

Got it, thanks.

So I just ran it on Logic Pro (10.7.8) with a Mac Pro M2 Ultra (24-core CPU, 76-core GPU, 32-core Neural Engine, 192GB RAM).

I ran the test per the directions - https://music-prod.com/logic-pro-benchmarks/

I assume I did it correctly, but I'm not 100% sure. I just kept duplicating tracks (COMMAND + CLICK) and playing it back.

I attached the updated project for you to check.

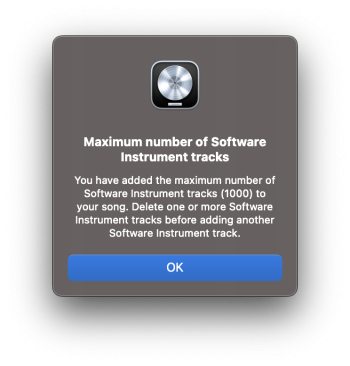

386 tracks was fine (Activity Monitor: 200% CPU). I doubled it to 772 tracks and that was good too (Activity Monitor: 400% CPU). I got up to 1001 tracks before Logic came up with a message about exceeding 1000 tracks (see attached). It was running the 1001 tracks smoothly, and Logic was using between 475% and 550% CPU in Activity Monitor.

Test it. 550 tracks.

Attachments

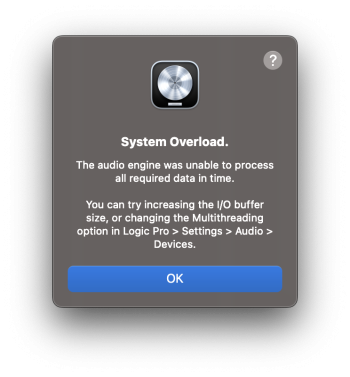

Okay, I must have been doing something wrong...

That sets off the System Overload.

Okay, hol up... I've been reading the past few posts about the Logic benchmark, and I understand how that can be helpful to measure performance. But isn't that Logic benchmark just like one instrument repeated hundreds of times? At least the one I know of is like that. Here's why that's not realistic—when are you EVER going to have 200 pianos, or 200 synths, or 200 whatever, playing at the same time? Different samples consume different amounts of RAM and CPU. I think the Logic benchmarks are good to get an overall idea, but if someone actually tried to write something useful with a lot of tracks (rather than 200 sine waves or whatever), that would be a MUCH better metric of how well it performs on different systems.

Side note, I think Neil Parfitt is absolutely right about how unusable the 2023 Mac Pro is for music production. I get it, everyone's saying he's like 1% of everybody out there, but there are a LOT of other people in his situation. I'm not quite at his stage yet in terms of what he's doing professionally, but I know how this new machine presents a problem for people like him.

We did some tests on a Mac Studio with M2 Ultra. The results were 373 and 374 tracks. In the test I gave you there are 550 tracks. Start with 350.

Thanks for that.

Okay, I got it to 388 consistently; 389 gets the warning a couple of seconds into playback.

So an overall improvement of 2 tracks (0.52%).
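For anyone checking the math, here's a quick sketch of that percentage. It assumes the baseline is 386 tracks (388 minus the 2-track gain); the post doesn't actually name the baseline, and `percent_change` is just a helper name made up for this example.

```python
def percent_change(old, new):
    """Relative change from old to new, expressed as a percentage."""
    return (new - old) / old * 100


# Assumption: baseline inferred as 388 - 2 = 386 tracks.
baseline_tracks = 386
new_tracks = 388

gain = percent_change(baseline_tracks, new_tracks)
print(f"{new_tracks - baseline_tracks} tracks, {gain:.2f}%")  # 2 tracks, 0.52%
```

Either way you slice it, that's well inside run-to-run noise for a benchmark like this.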

I tormented my computer for half an hour with 500 tracks and a 32-sample buffer.

Side note, I think Neil Parfitt is absolutely right about how unusable the 2023 Mac Pro is for music production.

Excepting the fact that his video is all conjecture & supposition, not actual reflections on T&E usage...

This is something I often tell my colleagues, you know? Everyone loves to compare extreme performance. But if they cut back on a little bit of FB, IG, Twitter, or that stupid TikTok, and if they stop stealing time from their bosses or clients, then in most cases, the final completion time of the project would hardly be affected. People can totally disagree with this perspective, but that's just how I see it.

I'd settle for an Apple foldable so I can bridge the iPhone and iPad experience with a single device. I have ZERO belief Apple will take such a step as they see 2 devices sold over 1 as the better 'outcome'. As a consumer though, and similar to the Mac Pro group disappointed but accepting the Mac Pro is no longer for them, I moved to Samsung simply because they did cater for my needs. Apple continues to make money, and impressively so, but unless you're drinking the iPhone, iPad and MacBook Kool-Aid you're not their primary focus!

I know this is a little off-topic, but to address your "Apple foldable" comment, I would like to say that before the Google Pixel Fold was released, I was very enthusiastic about Apple making their own foldable. See my topic here for details.

But after many reports of Google Pixel Fold users sharing how their screens or devices broke within days, I've changed my mind and now believe that Apple should wait as long as possible before releasing their smartphone/tablet hybrid.

The track count doesn't really matter because the new Mac Pro is slower _per track._ Sure, the benchmark is a little extreme (though I don't think it's _that_ extreme). But what the data is actually showing is that the new Mac Pro can handle fewer tracks.

As long as the new Mac Pro and old Mac Pro are running the same tracks, it's totally fair. One track type is not going to be appreciably different - from a processing perspective - than another.

I don't think what's going on is a huge mystery at the end of the day: Logic is heavily multicore, and the M2 Ultra's strength is not so much multicore workloads. It has fewer cores, which lets the 28-core Xeon hold out.

A person in our research group requested more storage and a second GPU to increase our DL experiment workload. I installed an 8TB Sabrent NVMe drive in a PCI Express card as well as an RTX A6000 GPU in our Dell workstation (the other workstation we have is a custom build with a Threadripper). I then set up the Linux drivers and had the A6000 running workloads in PyTorch within an hour, because Dell didn't throw a tantrum/hissy fit at NVIDIA and refuse to sign drivers, thus NOT screwing over all their customers, unlike our favorite company.

Totally off-topic, but how is that Sabrent card? I have a USB-A to SATA dongle (meant for 2.5" SATA drives) made by them and it works fairly well. Was curious as to what a PCIe storage card from them is.

This scenario is apparently too far beyond what Apple can (read: wants to) provide. Which is more sad and pathetic: Apple's behavior, or the Apple apologists stating that this situation isn't "professional" because Apple said so? I'm not quite sure.

Eh...it doesn't take a lot to be labeled an Apple apologist around here. Nor does it take much to upset those that would like to remain on the Mac platform despite Apple not having any roadmap for those particular use cases in the new Apple Silicon era we now find ourselves in.

It's clear that Apple doesn't seem to cater to the scientific community and your use cases by extension. Though, I'd imagine that what you're experiencing is similar to what I feel in having Windows PCs handle the gaming and x86 virtualization grunt that I would've happily had VMware Fusion, Boot Camp, or even the Intel flavor of macOS itself do instead. Seems better to do that on a Windows PC than an Apple Silicon Mac, especially since Intel Macs aren't being made anymore. My guess is that you're in a similar boat with the Mac Pro not really being sufficient at its higher-end configurations (let alone not having GPU expandability).

The Apple apologists by far, impulse462. Apple can claim business strategy, the apologists have no excuse.

Are you an Apple apologist if you merely restate the fact that Apple no longer cares about a given person or group of people's use cases? Apple clearly cares about fewer use cases with the 2023 Mac Pro than they did with the 2019 Mac Pro. But is it apologism to merely point that out?

Because people are getting offended when this is pointed out like it isn't already a given.

I'd agree that anyone saying that those use cases don't exist is squarely in the apologist camp. But, it's very clear that there are people whose needs were not served by anything other than a 2019 Mac Pro that will be forced to either switch to a PC or be fine with the limitations of the 2023 Mac Pro.

Again, as a lot of folks have pointed out, including me, Apple's been aiming at large-scale corporate buyers with the Mac Pro since 2019. IT at a big company doesn't do custom builds like that unless there's a very very very specific reason for it that can't be satisfied by a prebuilt solution. A uni lab or even a small research group within a company isn't the target market here.

I'm not going to claim to be @impulse462's bro or anything like that. I have worked with users that have similar use cases at large organizations. And for those systems, it's not at all unheard of to have a custom PC workstation. At the worst, that PC workstation was likely assembled by a company that an IT department can reach out to for support or will have individual components that will have warranties for parts replacements. What you won't have is Hackintosh systems as no one is providing support for those; not even the parts OEMs ("which version of Windows are you using our Sapphire W6800X on?...Oh, macOS?...Which model of Mac Pro?...Oh, a Hackintosh, you say!...Good luck with that!" is how that will go).

You have 2 workstations; the team of 14 people I'm on has at least 5 desktop workstations for people who need them, MacBook Pros or Dell XPS laptops for everyone, a million or so dollars of racked hardware, and millions of spend on cloud infra. If a designer in an adjacent team asks for a Mac Pro, it's a decent bet that it'll be purchased and provisioned with no questions asked beyond standard approvals, but if they ask for a custom machine IT has to build from parts it would be a nightmare to get.

Usually, IT departments wouldn't build those machines. They'd just source them from somewhere else. It's not unheard of, but you are right in that it's never going to be a standard deployment and will only go to those who really need a machine that isn't merely a Dell Precision or an HP Z6/Z8.

24-core M2 Ultra (no hyperthreading) versus 28-core Xeon (56 threads with hyperthreading) does not seem a fair match-up...

If it's fair for Apple to compare, then I don't see why it's not fair for anyone else to compare.

Just matching threads to threads...?

Maybe 24-core M2 Ultra (no hyperthreading) versus a 12-core (24 threads with hyperthreading) Xeon would be a more reasonable comparison...?

Maybe? It's kind of interesting for the price difference maybe. But in the end I'm not sure what it proves. The slower Xeon is going to be slower. And it's still a five year old Xeon anyway, so it's not really a good indicator of x86 vs Apple Silicon or x86 vs Apple Silicon pricing.

But when was the last time you were working and an Apple Silicon Mac had a memory error?

Dude, without ECC you have NO WAY OF KNOWING when you had a memory error! That's one big point of having it, so that you get an actual warning of bad or failing memory. That's why EVERY device should have ECC. Computers have error detection in multiple places, what makes RAM, of all things, so unimportant to not have it there?

Of course, the more RAM you use, the more important ECC becomes.

Why? If I have a large memory pool and one bad or failing chip then the error rate as seen from the processor could be lower than when a smaller device with fewer chips has one bad or failing chip. And in either case, without ECC you'll have no idea that you have a problem and should stop trusting that device.

RAM tests exist for non-ECC machines. That all being said, the last time I experienced failing RAM on a Mac, said Mac had user-removable RAM and wasn't a 27-inch iMac. And mind you, I come into contact with A LOT of Macs.

Why? If I have a large memory pool and one bad or failing chip then the error rate as seen from the processor could be lower than when a smaller device with fewer chips has one bad or failing chip.

Er... if you have a large memory pool, you have more memory chips to go wrong - and unless you stick 1TB RAM in a machine and then use it to play Minecraft, you've got lots of memory because you need large data sets loaded into RAM (spanning lots of chips) so your chances of hitting a bad one are higher. Anyway, the main purpose of ECC is to catch and correct 1 bit errors so your program doesn't crash and/or give garbage results - such errors happen fairly randomly due to overheating, electrical noise, radiation and cosmic rays and don't necessarily mean a failing chip.
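To make the "catch and correct 1 bit errors" part concrete, here's a toy Hamming(7,4) sketch in Python. It's only an illustration of the principle: real ECC memory does this in hardware with wider codes, and the function names below are made up for this example.

```python
def hamming74_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    # Layout by 1-based position: p1, p2, d1, p3, d2, d3, d4
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_correct(codeword):
    """Return (corrected codeword, 1-based error position or 0 if clean)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3  # the syndrome spells out the bad position
    if position:
        c[position - 1] ^= 1  # flip the faulty bit back
    return c, position


def hamming74_decode(codeword):
    """Extract the 4 data bits from a (corrected) codeword."""
    return [codeword[2], codeword[4], codeword[5], codeword[6]]


word = [1, 0, 1, 1]
stored = hamming74_encode(word)
stored[4] ^= 1  # simulate a single random bit flip in "memory"
repaired, position = hamming74_correct(stored)
print(position, hamming74_decode(repaired) == word)  # 5 True
```

Note that this toy code only handles one flipped bit per word; two flips in the same word would be "corrected" to the wrong value, which is why real ECC adds an extra parity bit to at least detect double errors.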

Nobody is denying that some applications that have to have 24/7/365 availability or where a small error can have a huge cost need ECC - but it also makes no sense on its own. You don't make a high-availability system just by chucking in ECC memory, which only guards against one very specific and narrow type of failure. It is not clear that Apple even has a horse in the high-availability race - certainly since they dumped the Xserve.

@theluggage There's no reason to restrict ECC to high-availability systems. I'd like to know if any of my computing devices have bad or failing RAM chips, are under Rowhammer or similar attacks, or, as you correctly point out, are in environmental conditions that increase the error rate. People saying "I haven't had memory problems" are necessarily lying as, without ECC, there is no way they would have known about bit flips during normal operation.

There's no reason to restrict ECC to high-availability systems.

...apart from extra cost and complexity, needing ~12% more physical memory for the same available RAM, wider data buses for "sideband" ECC or extra read/write operations for "inline" ECC (as would be used on Apple's LPDDR RAM).

Like most things in life it is a cost/benefit calculation. For most personal applications the benefits just don't outweigh the costs. If you're running a mission-critical system where every second of downtime will leave you haemorrhaging cash then, yes, you probably need it - along with a bunch of other server-grade features that Apple hasn't offered since 2010.
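On the ~12% figure above: here's a rough sketch of where it comes from, assuming the classic SECDED (single-error-correct, double-error-detect) Hamming layout over a 64-bit word. Whether any Apple design would use that exact scheme is an assumption, and `secded_check_bits` is a made-up helper name.

```python
def secded_check_bits(data_bits):
    """Check bits for a Hamming code plus one overall parity bit (SECDED)."""
    r = 1
    # Hamming bound: r check bits must address data_bits + r positions, plus "no error".
    while 2 ** r < data_bits + r + 1:
        r += 1
    return r + 1  # +1 overall parity bit for double-error detection


def overhead_percent(data_bits):
    """Extra storage needed, as a percentage of the usable data bits."""
    return secded_check_bits(data_bits) / data_bits * 100


print(secded_check_bits(64), overhead_percent(64))  # 8 12.5
```

That's the familiar 72-bit-wide ECC arrangement: 8 check bits riding along with every 64 data bits.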

People saying "I haven't had memory problems" are necessarily lying as, without ECC, there is no way they would have known about bit flips during normal operation.

Apart from glitches, crashes, the computer (mostly) completely dying if a chip fails, power-on-self-test failures... If a bit flip matters, you'll know.

For most Mac users, ECC is like wearing a Formula 1 crash helmet and a fireproof suit when you drive your Kia to the shops. Sure, it could theoretically save your life in a crash so there's no reason not to, right?
