- Raw performance

- Performance per watt

- BTU/hr
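As a rough sketch of how these three metrics relate, the snippet below computes performance per watt and heat output from a benchmark score and a power draw. The chip names, scores and wattages are made-up placeholders, not measurements of any real part. The only fixed fact is the conversion 1 W ≈ 3.412 BTU/hr.

```python
# 1 watt of dissipated power is roughly 3.412 BTU per hour.
WATTS_TO_BTU_PER_HR = 3.412


def perf_per_watt(score: float, watts: float) -> float:
    """Benchmark score divided by power draw in watts."""
    return score / watts


def heat_btu_per_hr(watts: float) -> float:
    """Heat a chip dumps into the room, assuming all drawn power ends up as heat."""
    return watts * WATTS_TO_BTU_PER_HR


# Hypothetical chips: the scores and wattages are illustrative only.
chips = {
    "efficient_soc": {"score": 1000.0, "watts": 50.0},
    "big_desktop_part": {"score": 1500.0, "watts": 300.0},
}

for name, c in chips.items():
    print(
        f"{name}: raw={c['score']:.0f}, "
        f"perf/W={perf_per_watt(c['score'], c['watts']):.1f}, "
        f"heat={heat_btu_per_hr(c['watts']):.0f} BTU/hr"
    )
```

With placeholder numbers like these, the part with the higher raw score can still lose badly on the other two metrics, which is the point of listing all three.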

Apple's M1 processor often surpasses the graphics performance of desktop GPUs, including the Nvidia GeForce GTX 1050 Ti and AMD Radeon RX 560,...

www.macrumors.com

The 2020 M1 SoC's iGPU matched the raw performance of two dGPUs: the 2016 Nvidia GeForce GTX 1050 Ti and the 2017 AMD Radeon RX 560.

What they did not realize was that the M1 had the highest-performing iGPU of any desktop or laptop at the time of its release.

For PC gamer types this was a joke, because in their view an iGPU is garbage and the Nvidia and AMD parts were already 4 and 3 years old respectively.

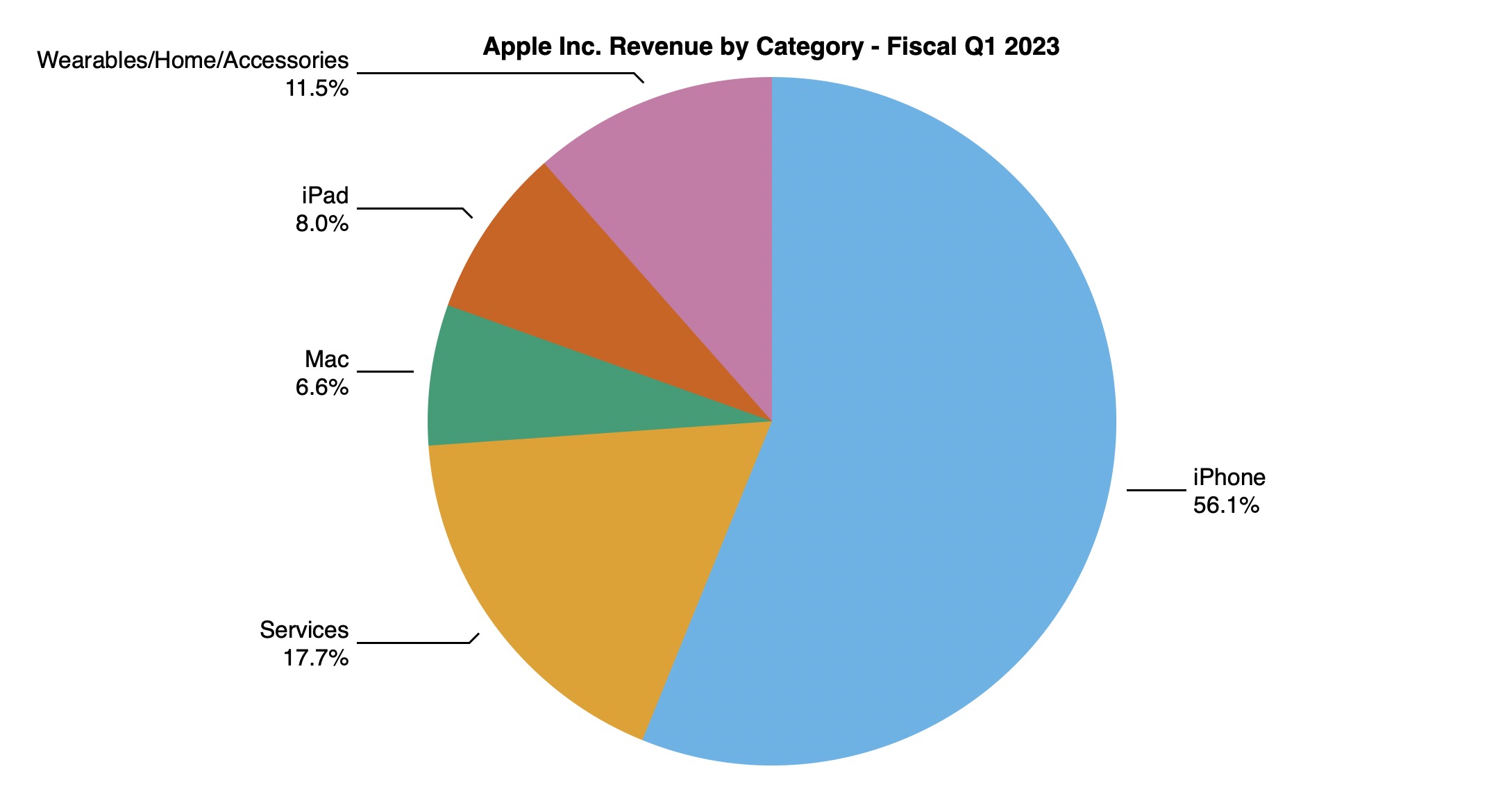

~80% of all PCs are laptops, and laptop users demand battery life. It is easier for a mobile SoC vendor to scale a smartphone/tablet part up to a laptop/desktop than for an x86 parts vendor to scale a desktop part down to a laptop/smartphone/tablet.

PC gamer types who buy RTX 4090 and 24-core Core i9 chips make up ~1% of all PCs shipped.

Then there was talk on MR & other forums about a desktop workstation-class SoC that would deliver RTX dGPU performance.

Almost everyone thought it would never happen because no one had ever done it.

They were correct, as the business model of a parts vendor differs greatly from that of a systems vendor.

That turned out to be the M1 Ultra. Have AMD, Intel or Nvidia ever offered a laptop chip, much less a desktop chip, with its unique feature set? It is an SoC that combines a Threadripper Pro-class CPU with an RTX 3090-class GPU.

Yes, Apple's comparison to the RTX 3090 was specific to certain use cases that are unique to the Mac, which is why performance on other benchmarks did not match up. But in truth, what Mac buyers should be concerned about is their own use case, not a PC use case.

Parts vendors are pressured to sell cheap parts, so they try to make the part's area as small as possible. They design for that goal and make up the performance with electricity, which the user pays the utility company for. Since the power bill does not come from Intel/AMD/Nvidia, it does not affect their sales.

PC master race types are focused on raw performance. They do not care about power consumption or even heat, as the rich countries they live in have cheap power or a cold climate.

But if you live in a country with the highest $/kWh rate in the world, or you do not pay the power bills, then who cares if your PC requires a 1.5 kW PSU?
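To put a number on the power bill argument, here is a back-of-the-envelope sketch of annual running cost. The average draw, hours of use and $/kWh rates are all assumptions picked for illustration. Note that a PSU's rating is its maximum capacity, not what the PC actually draws, so the 1.5 kW figure is an upper bound rather than a typical load.

```python
def annual_energy_kwh(avg_watts: float, hours_per_day: float, days: int = 365) -> float:
    """Energy used per year in kWh for a given average draw."""
    return avg_watts / 1000.0 * hours_per_day * days


def annual_cost(avg_watts: float, hours_per_day: float, rate_per_kwh: float) -> float:
    """Yearly electricity cost: energy in kWh times the price per kWh."""
    return annual_energy_kwh(avg_watts, hours_per_day) * rate_per_kwh


# Assumed scenario: 4 hours of gaming per day at two illustrative average draws,
# priced at a cheap and an expensive hypothetical rate.
for label, watts in [("efficient SoC", 60.0), ("high-end gaming PC", 600.0)]:
    for rate in (0.10, 0.40):
        cost = annual_cost(watts, hours_per_day=4.0, rate_per_kwh=rate)
        print(f"{label} at ${rate:.2f}/kWh: ${cost:.2f} per year")
```

The cost scales linearly with both the draw and the rate, so the gap between the two machines only matters to a buyer when the rate is high and that buyer is the one paying the bill.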