Remember, you are impacting professional workflows with your recommendations. This is not a gamer forum. If professionals take your advice and then find slow performance and compute bugs they really should make an example of you.

Well, I would assume that "professionals" know that the GeForce line is an enthusiast-level offering from nVidia, i.e., gamer hardware. Those looking for professional-level graphics and support would want to look at the Quadro line.

nVidia hasn't offered a Quadro for the Mac since the K5000, and I don't see anything from nVidia that backs up your claim that their drivers for official Mac retail products are beta.

nVidia's drivers for Maxwell and Pascal PC cards, which enable these cards to work under OS X, have never been a professional offering, nor has nVidia ever claimed to support them on any level.

While I'm less than thrilled with the "professional" support in the drivers for my Quadro card under MacOS, nVidia never claimed that it would have parity with its Windows or Linux drivers and support.

I don't really understand why MacOS is such a dog-slow pig with some of the Quadro line's professional features, but AMD's FirePro D300/D500/D700 isn't any faster at these features under MacOS either. So much for AMD's "professional" level drivers for MacOS.

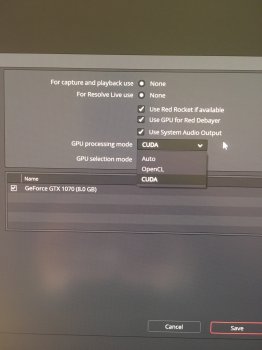

While I admit that AMD has a decided edge in certain "pro" applications under MacOS that use OpenCL, other "pro" apps use CUDA, and we all know that dog won't hunt on AMD cards.

Anyone using beta drivers with PC hardware under MacOS does so fully understanding that nVidia makes no claim that any software or hardware combination they use will receive any real level of support from nVidia.

I didn't see anyone here claiming to offer professional-level support for any nVidia product. MVC does offer some level of support for his flashed nVidia products, but anyone who buys from him should understand that he has no official relationship with nVidia and no access to the driver codebase. He is therefore unable to fix bugs in nVidia's drivers or to work with Apple to better optimize support for features such as, but not limited to, OpenCL.