2013 Mac Pro Price (Range?)

- Thread starter icanhazapple

Except that the bulk of their computers sell probably at around the $1,200-$1,500. Apple has steep drop off in sales at $2,500, proof? That's why they dropped the 17" MBP and want to drop the old Mac Pro.

Not much proof. First, the rMBP 15" dropped onto the market at roughly the same price points as the MBP 17". Apple wasn't trying to make the rMBP 15" the bulk of the computers they sell. It is, however, trying to deliver as high a value (number of pixels not going down) in a smaller package.

Second, the desire to want to drop the Mac Pro likely came from slumping growth rather than purely a volume consideration. Shrinking toward 1% (or less ) of Macs sold begs the question of 'why bother'. Mac Pro doesn't have to out sell the iMac/Mini/MBP siblings but it does have to roughly keep up.

, but I will not be at all surprised if it comes in at $1,999.

Doubtful as Apple already has a $1,999 product and this isn't exactly a high growth or bulk market price point to offset the fratricide.

Just the buzz Apple wants, and will turn the Mac Pro into a healthy production line.

They've got buzz and haven't even dropped the price yet. If they can stick a price differential between mini-tower workstation + 2 Pro GPU cards they will still have buzz.

Whether the Mac Pro is a healthy product line depends far more upon trends the customers are following outside the device. If more groups are doing centralized storage and/or extensive "sneaker net" data transfers, then this has legs. Likewise an increasing number of titles leveraging GPGPU for computation. Standard allocated resources typically mean software will be written to consume/leverage them over time.

Would the entry Mac Pro sell better closer to the $2,000 border with the iMac? Yes. But Apple can work on that over time. If in 2-3 generations the equivalent of the W5000 card can drive 6 screens , SSDs are substantially cheaper , and DDR4 RAM has gotten to volume levels so the price doesn't carry quite as large a premium then they can move the price down a couple hundred if start around $2,499.

----------

.... I added my total solid state storage needs together... which is currently around 500GB (even though I have 1TB).

Total storage needs has little to do with what minimal drive needs to be in the Mac Pro entry level specs; hence price of Mac Pro.

If the 'common usage' is that the OS/Apps/basic Home directory drive is under 256GB then using a 256GB drive fits. How much folks need for a "working space" drive is highly variable. So let folks just add whatever amount they need later outside of the standard config. That gets solved either with a BTO option or a 3rd party product(s).

For example if there was a Mac Pro BTO configuration with two SSDs then folks could drive the total SSD capacity internal higher. Likewise with just bigger ( opt for 512GB option instead of 256GB ). A single SSD can fill the role; just not a necessity.

The notion that the entry and/or standard Mac Pro configs should fulfill a broad spectrum of total SSD capacity is just off. If the user base has highly varying needs and price constraints there is little chance Apple will make a broad spectrum happy with standard configurations.

If I'm going to buy a 2013 Mac Pro, I will get it configured with between 500GB and 1TB depending on the price.

I don't think the post you were replying to was even remotely trying to say there should be one and only one SSD capacity in all Mac Pro BTO configurations. It is far more pointed at what you are conditionally adapting to above: the price point you start from before add-ons and customization. Driving that starting point too high will drive away customers.

Would the entry Mac Pro sell better closer to the $2,000 border with the iMac? Yes. But Apple can work on that over time. If in 2-3 generations the equivalent of the W5000 card can drive 6 screens , SSDs are substantially cheaper , and DDR4 RAM has gotten to volume levels so the price doesn't carry quite as large a premium then they can move the price down a couple hundred if start around $2,499.

That's unlikely, when does Apple lower prices over time? AFAIK they pick a price tier and stick with it. Whatever price they put on this machine at the beginning is the one it's going to have. Regardless, sure $1,999 is entirely possible, but a bit of a stretch. However starting above $2,499 isn't going to happen. A desktop computer with no PCI, 4 slots and no drive expansion that starts at $3k? Two graphics chips and a Xeon aren't that expensive.

Google the prices of the 2 GPU's and a 6 Core Xeon from the latest ranges, plus the RAM, Storage etc, it could easily start at $3,000.

That's unlikely, when does Apple lower prices over time? AFAIK they pick a price tier and stick with it.

Market dynamics change over time. If the Mac growth stays in the crapper for 4-6 more quarters the whole line up is going to move. ( not radically just shift down a bit. )

The Mac mini has oscillated a bit.

2006-09: $599

2010: $699

2011: $599

So has the Mac Pro.

2006-08: $2199

2009-12: $2499

Part of that is performance shifts and/or component costs. In Mac Pro case dumping front side bus and having far more linear scalable performance for core count increase meant more bang-for-buck. But sales volume was likely better back when at $2,100. The middle 2,299 is probably a healthier point if can meet the targeted minimal functionality.

Right now the W5000 is too weak in output channel ability. DDR4 required for next generation is likely going to drive BOM prices up a bit next year. If Apple can sell enough at $2,499 they can stick with it. But frankly there isn't a good track record for being able to. So long term, they should have some plan with future parts to get back to hugging the $2K border a bit more closely. Frankly, if the E5 1620 v3 and v4 sticks with 4 cores (with higher base clock rates) going closer to $2k is going to be far more viable. The $2,499 would be in the expected 6 core zone in 2014-15.

Whatever price they put on this machine at the beginning is the one it's going to have.

This round. (unless sales tank... e.g.. rMBP 13" early in 2013. )

I think we're going to see movement in the laptop line up as other Mac products vacate certain price points (e.g., retired MacBook slot taken over by MBA 11").

Regardless, sure $1,999 is entirely possible, but a bit of a stretch. However starting above $2,499 isn't going to happen.

$2,599 is plausible. There will be complaints ( as if there is a shortage of complaints by some subset of users ), but +/- $100 has appeared before ( Mini case above.. iMac 2012 on entry model , MBP 15" when needed discrete GPU after shifting one year to iGPU entry point, etc. )

A desktop computer with no PCI, 4 slots and no drive expansion that starts at $3k?

There is PCIe. A legacy physical socket connect format is not PCIe. Unknown on drive expansion. First, there likely will be 3rd party cards. Second, may have option for two SSD if second GPU card is enabled with a drive slot.

Starting at $3K price point is whacked. There is little rational reason to open up a $1K gap between iMac and Mac Pro pricing. That is a hole large enough to drive a truck through. Mac unit numbers are already down. Competitors will only use a disconnect like that to beat the crap out of Apple over the long run.

Two graphics chips and a Xeon aren't that expensive.

It is more than just the graphics chips and yes there will be competing workstations and Mac Pros priced at the $3K price point. Six x86 cores and two GPU functionality will probably start at around $3K. (like 6 core + one GPU does now.)

you want some insight into how the price of this computer is going to be determined? go to 8 mins in this video and watch for a little bit..

YouTube: video

3 months into incorporating next, they already have the price figured out and it's determined via polls/interviews on how much people are willing to pay. next didn't even have a prototype at this stage in development.

that's all there is to it.. this new mac? they had the price figured out long before..

and seemingly, any time this dude is involved with the computer, that target price is always around $3000.. for the past 25 years

the nExt computer ended up being something like $6000 when it finally hit the shelves.. and we all know the fate of next computers.. i think they have it figured out by now how to set a 3g target price and stick to it because they also know what happens when the price goes over that amount.

regardless, i guarantee they're not designing/prototyping the new mac then adding up the cost of it's parts then determining the sales cost.. the cost was already figured out at the very beginning of the process.

At that point in time they were attempting to establish a new paradigm in equipment and create a market for it. Literally tap into the folks who were falling between the cracks. They wanted a machine which was 5 to 10 times the computing power but at the same cost - which at the time was $2k to $3k. So it was decided as you saw, that the NEXT needed to be $3k or under. In that case they were developing to a specific price target.

That's still part of the design phase (like you saw) but it's not as rigid as you're maybe thinking - from what you saw in the video. Sticker price is part of the earliest phases of design but it's flexible and based off of some preliminary formal/informal meetings with the part manufacturers. So in a sense it's both a preliminary target price range and then also "designing/prototyping the new mac then adding up the cost of its parts".

Here's an interesting Jobs quote to something relevant to what we're discussing:

http://www.youtube.com/watch?v=2nMD6sjAe8I&feature=player_detailpage&t=2897

And of course this echoes and reaffirms all that:

For me, the problem is that the iMac comes with a large, high quality monitor built into it that Apple sells on its own for 1 grand (Thunderbolt display). Sure, there are cheaper options, but I think people would struggle to find 27" 1440p monitors for much under 500.

So even selling at 2 grand, the new Mac Pro would have to be a fair bit more powerful than the top level iMac. Otherwise, why would people buy it?

Based on the top tier iMac, with quad CPUs, and rather expensive graphics cards (I would be surprised if those mobile 675/680s are much if at all cheaper than their desktop counterparts) that it would be reasonable for Apple to sell a quad-core Mac Pro with two reasonable graphics cards (each at least as good as the 680MX) for around 2 grand, if they are going to do that.

I should think for 3 grand, you would want at least 6-8 cores, and two pretty good GPUs. Even if for that price, it comes with a very small amount of RAM and SSD space.

For 4 grand, I think you would be expecting 12 cores, and two top-end GPUs. With a nice amount of RAM and SSD.

For me, the problem is that the iMac comes with a large, high quality monitor built into it that Apple sells on its own for 1 grand...

Speaking of monitors... with all the repeated mentions of simultaneous 4K display support in the announcement and on the apple website I'm bracing myself for this purchase alongside the new Mac Pro when they launch.

My seat of the pants guess is that a nicely-specced (bump RAM and SSD sizing up) BTO "better" new Mac Pro will come in just a hair shy of $4,000 with the "best" configs being appealing but landing closer to $5,000. Add on top of that another $4,000 for the Apple-branded 4K display that I'll want. Plus a thunderbolt external enclosure. I figure after taxes and shipping I'm in for a solid $10K on launch day to buy in and drink the Kool Aid. That's my best guess based on the speculation in this thread and my sense of Apple's historical pricing approach.

This will replace my early 2008 Octomac and 30" ACD which have both long-since been depreciated and amortized to nothingness since I bought five years ago. I'm eager to buy.

Speaking of monitors... Add on top of that another $4,000 for the Apple-branded 4K display that I'll want. Plus a thunderbolt external enclosure. ....

Is Apple going to sell $4K 4K monitors? The price of 4K monitors is likely going to crater over the next 12-18 months. Right now it is one of those super bleeding edge items that has super high mark-ups attached to them. As much as people ride Apple about their margins they are typically not crazy high for standard products.

If Apple jumped into 4K market now, they'd probably have to do a major price cut in a year or so. Far better for their "set the price and don't waver" pricing policy to jump in after the super bleeding edge mark-ups disappear.

Once the more steady state price is clear then jump in with a product ( if it looks like there is enough).

Besides, the FAR more effective 4K monitor is going to be one that is native DisplayPort v1.2 (DPv1.2), not a Thunderbolt one. In a three-display 4K set-up with a Mac Pro (if you had gobs of money to blow) you'd kill the bandwidth of all three TB controllers if you tried to pump that video data over a TB network. If you plug in a DPv1.2 device it uses no TB network bandwidth. Similarly, the device is actually usable with a broad array of current PCs with modern GPU cards which have DPv1.2 sockets on them. In short, the 4K monitor, not the 4K docking station, is probably going to dominate the market.
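For a rough sense of the numbers behind that, here is a back-of-envelope sketch. It assumes 3840x2160 at 60 Hz and 24 bits per pixel, and it ignores blanking and protocol overhead, so real streams need somewhat more. The link figures are nominal rates (10 Gbit/s per original Thunderbolt channel, 20 Gbit/s for Thunderbolt 2, 17.28 Gbit/s of payload for DisplayPort 1.2), and the function and dictionary names are made up for illustration.

```python
# Back-of-envelope only: raw pixel payload, no blanking or protocol overhead.
# Resolution, refresh rate and bit depth are assumptions, not Apple specs.

def raw_video_gbps(width, height, hz, bits_per_pixel=24):
    """Uncompressed pixel data rate in Gbit/s (decimal giga)."""
    return width * height * hz * bits_per_pixel / 1e9

# Nominal link rates in Gbit/s.
LINKS = {
    "Thunderbolt 1 channel": 10.0,
    "Thunderbolt 2": 20.0,
    "DisplayPort 1.2 (HBR2 payload)": 17.28,
}

if __name__ == "__main__":
    need = raw_video_gbps(3840, 2160, 60)
    print(f"one 4K60 stream: {need:.2f} Gbit/s")
    for name, cap in LINKS.items():
        print(f"{name}: {need / cap:.0%} of nominal capacity")
```

On those assumptions a single 4K 60 Hz stream is already more than one original Thunderbolt channel can carry and well over half of a Thunderbolt 2 link, which is the kind of load being described above.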

In other words, Apple has left the monitor business ( just sells docking stations with integrated displays now), so why would they jump back in?

What Apple FAR more needs is a sub $999 docking station/monitor to go with this new Mac Pro. Like a 21.7" scaled down iMac tweak (like the 27" tweak the current docking station/display is) that is in the $599 range. There are going to be Mac Pro users who need more than just 4 USB ports. Who need at least one FW port. All the bulk data storage is outside the box so another Ethernet port isn't going to hurt (e.g., bond dual Ethernet ports and place on NAS/SAN network. Hook the other Ethernet port to general internet network.)

When 4K gets down to 21.7" with higher, but affordable densities then Apple can tag a future version as "Retina" and keep the higher price.

The 4K monitor situation doesn't seem all that different to me than the 30" 2560x1600 situation was in 2007/2008. Yes, I think Apple will release their own flavor of 4K monitor alongside the new Mac Pro.

For me, the problem is that the iMac comes with a large, high quality monitor built into it that Apple sells on its own for 1 grand (Thunderbolt display). Sure, there are cheaper options, but I think people would struggle to find 27" 1440p monitors for much under 500.

Doesn't have to be under $500 to replace the Thunderbolt display.

A $525 27" 1440p monitor

$299 Belkin Express dock

$824 total (over $100 cheaper than the Apple combo).

If a $199 dock shows up ( don't need FW e.g., http://www.caldigit.com/thunderboltstation/ ) gap is even wider.
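Spelling out that arithmetic as a quick sketch. The prices are just the ones quoted in this thread (with the Thunderbolt display taken as $999), and the constant and function names are only for illustration.

```python
# Prices are the ones quoted in this thread (USD), not current street prices.
THUNDERBOLT_DISPLAY = 999   # Apple's display/dock combo
MONITOR_27_1440P = 525      # third-party 27" 1440p monitor
BELKIN_DOCK = 299
CHEAPER_DOCK = 199          # hypothetical dock without FireWire

def savings(monitor, dock, apple=THUNDERBOLT_DISPLAY):
    """Return (combo total, amount saved versus the Apple display)."""
    total = monitor + dock
    return total, apple - total

if __name__ == "__main__":
    for label, dock in (("Belkin dock", BELKIN_DOCK), ("$199 dock", CHEAPER_DOCK)):
        total, saved = savings(MONITOR_27_1440P, dock)
        print(f"{label}: ${total} total, ${saved} under the Apple display")
```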

So even selling at 2 grand, the new Mac Pro would have to be a fair bit more powerful than the top level iMac. Otherwise, why would people buy it?

What is "power"? The myopic view that 'power' is solely x86 core count is off. The Mac Pro has higher I/O bandwidth. More computational headroom. Non-"downclocked" GPUs. A higher upper bound on RAM (iMac capped at 32GB). More flexibility in display choices.

All of that will 'offset' the $500+ display that the iMac has, even if the CPUs were about even, for folks who need those features. So I doubt pricing it right on top of the iMac will happen.

For 4 grand, I think you would be expecting 12 cores, and two top-end GPUs. With a nice amount of RAM and SSD.

One or the other ( 12 core or top-end GPUs ), but not both at $4K. That is more likely in the $6K range. 12 core CPU is around $2K all by itself straight from Intel (without Apple's mark up).

I have a feeling it's not going to be cheap... Obviously. However, I'm hoping Apple pulls a FCPX on us and surprises everyone with a nice price tag. Again, wishful thinking.

Is Apple going to sell $4K 4K monitors?

You must be forgetting the ACD 30" display that sold for $3300 for the first two or three years!

This is a rant, and I sure don't plan this to be taken too seriously. I just need to vent a little.

My first thoughts were pretty much that this thing looks good. 12 cores sounds good - not terribly much these days, but sure, that ought to be good enough for most tasks. Two GPU's? Okay, that's a little strange, but why not, OpenCL is cool and all. Good connectivity and fast internal SSD... count me in!

And then I started to think about it for a bit about what was not told, price, and what I personally could use this for, application wise.

It seems like 12 cores is going to cost a lot. So, if we downgrade to 6 cores and assume a base price of 2500$(or as it so often translates to) it puts the whole thing in a completely different perspective:

- OpenCL is quite nice, but many apps use CUDA. If they use GPU power at all. Apps that do use OpenCL to the extent that would benefit from two FireGL based ATI cards are far more rare. For me... none?

- Speaking of that, I wonder how much premium there is to have FireGL branded Radeons in there. FireGL is a fluff, overpriced cards with perhaps a bit more memory and different drivers and better customer support. On Windows - in OS X they'd be the same drivers anyhow and it's Apple's support anyway.

- So, what about OpenGL? Yeah, if you do games. Not too many apps can use two GPU's for 3D. Not everybody needs that either these days since many of the cards are good-enough for displaying geometry on the screen for rather many purposes. And in high-end, you have always had ways to get around that.

- What about that 4-6 core CPU then and memory? It's all good, actually. It's enough for many purposes. Could I use more cores, sure - even without app support, on occasions one can launch multiple instances of the same app to do the bidding - for example, instance 1 renders odd frames and instance 2 the even frames. Or whatever, encode multiple H264s at the same time. But it's not cool to pay 2500$ for a six core headless desktop when iMac sells for 2000$ with a large display.
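The odd/even trick in that last point can be sketched like this. It only plans which instance gets which frames; actually launching the renderer depends on the app, so that part is left out, and `split_frames` is a made-up helper name.

```python
# Sketch of the "one instance per slice of frames" trick. It only plans the
# split; launching the renderer is app-specific and left out.

def split_frames(first, last, instances):
    """Interleave frames first..last (inclusive) across `instances` workers."""
    if instances < 1:
        raise ValueError("need at least one instance")
    buckets = [[] for _ in range(instances)]
    for frame in range(first, last + 1):
        buckets[(frame - first) % instances].append(frame)
    return buckets

if __name__ == "__main__":
    for i, frames in enumerate(split_frames(1, 10, 2), start=1):
        print(f"instance {i}: {frames}")
```

Interleaving rather than cutting the range in half tends to keep the instances finishing at about the same time when later frames are heavier, though that depends on the scene.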

I would really love to find a use for this machine for me, any excuse I could get one for around 2500-3000$ since it's rather sexy. But with that cost I do need to think what it offers with the current software - future software is speculative and there's no point in getting a machine like this before you can use it properly.

So it comes down to price. With the prices speculated over here this seems to force me to look elsewhere or suffer with laptops even longer.

Anyhow, I rant because I care. Otherwise I wouldn't be here. Feels better already.

- So, what about OpenGL? Yeah, if you do games. Not too many apps can use two GPU's for 3D. Not everybody needs that either these days since many of the cards are good-enough for displaying geometry on the screen for rather many purposes. And in high-end, you have always had ways to get around that.

i'm not a code wiz or anything like that and i'm speaking strictly from an end user pov but the dual gpu setup isn't aimed at openGL type improvements..

from what i see, a modest single gpu can handle all the openGL tasks just fine because what happens is the cpu maxes out long before openGL does.. if you're working on a large 3d model, it will become sluggish due to cpu reasons first at which point, the openGL tasks have no problem keeping up with the sluggish model.

where openCL comes into play isn't really anything to do with displaying graphics and/or effects.. it can do the same type of calculations on gpus as the cpus are doing only much faster and more efficient.. in essence, the 2nd gpu can be viewed as the second cpu only a helluva lot faster than you'll get by added another set of cores..

..if your developers are tapping into it that is.

The big question that I have concerning the GPUs in the nMP is whether Apple did some magic to make the two 6GB GPUs pool their memory together, making the system see it as 12GB, or not.

On the 3D/VFX forums that I hang around, people are getting close to the 6GB limit of VRAM with some of their scenes when rendering really complex objects. Crossfire by itself doesn't pool the VRAM, neither does SLI for that matter. In Blender, for example, the rendering will crash if you run out of VRAM.
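To make the pooling point concrete, a tiny sketch, assuming sizes in GB and assuming (as described above) that without pooling each card needs its own full copy of the scene. Both function names are hypothetical.

```python
# Illustration only: sizes in GB, and the "no pooling" rule is the one
# described in the post (each card needs its own full copy of the scene).

def fits_without_pooling(scene_gb, vram_per_gpu_gb):
    """True if the scene fits on every card individually."""
    return all(scene_gb <= vram for vram in vram_per_gpu_gb)

def fits_if_pooled(scene_gb, vram_per_gpu_gb):
    """True if the scene would fit in the combined VRAM (hypothetical)."""
    return scene_gb <= sum(vram_per_gpu_gb)

if __name__ == "__main__":
    cards = [6, 6]
    for scene in (5, 8):
        print(scene, fits_without_pooling(scene, cards), fits_if_pooled(scene, cards))
```

So an 8GB scene would only be workable on two 6GB cards if the memory really were pooled, which is exactly the open question.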

NVidia just announced a 12gb Quadro... Too bad it won't be available for the nMP.

further-

it seems (to me) that cpu assigned tasks are more or less topped out and the only way to get better performance is to add more cores (and even then, we're talking about a relatively small amount of applications that will benefit from more cores).. the developers have been refining cpu coding for 30 years or whatever and they can't really streamline the code or refine the algorithms much further..

the real speed enhancements in applications we'll see over the next decade are going to come from the gpu side of things.. with the computer config'd as the new mac, apple engineers believe (i assume they believe this) they're giving the devs much more potential energy to play with..

i also think, looking down the road, apple is expecting the ability to be able to plug additional gpus in via thunderbolt on an as needed basis.. it's arguable that tbolt is too slow for this-- even if it again doubles in throughput.. but even then, i'd expect someone to get more computing power for a lot cheaper if plugging in a gpu module as opposed to a cpu module.. because as far as i can gather, you can't simply plug in additional cpus via pcie and expect any sort of anything whereas you can with gpus.. right now, if you want more cpu power, you have to plug in another complete computer or cram more cpus into a single computer but in a way that isn't customizable.. you can only customize that at time of purchase and not expandable down the road.

----------

really? wow.. i mean i've definitely run out of ram before when rendering which has led to a crash but i didn't realize vram could top out in the same way..

but then again, i really try not to dive too much into coding/technomumbojumbo.. that's a trap i'd pretty much rather avoid

Actually you could plug a CPU in via PCIe... They did this in the 90's with plain old PCI. We had some i960-based boards from Intel in our servers as co-processors. Even before that, and with more primitive interfaces, both Atari and Amiga had Transputer boards available for parallel processing.

Most of those boards are in fact headless computers with their own RAM.

----------

It comes down to the good old quality vs. quantity principle. If you go too deep into the details of your scene, with many millions or billions of quads, then yes, you can blow the VRAM limits when rendering. It's all in knowing how to turn high polys into low polys with the least quality loss before rendering the scene. With enough RAM we wouldn't have to bother, just like we don't really bother with file size anymore since we are now playing in the terabytes-of-disk-space realm.

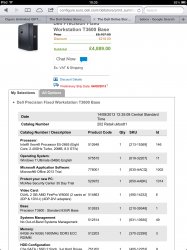

Based on an equivalent Dell how do we think the nMP will compare?

Priced this yesterday as I'm also waiting for the nMP pricing!

I know the argument over slots etc, but it doesn't apply to me as I use a combo of Dropbox and a 3TB Thunderbolt drive for active storage and a Time Capsule for backup!

This is a Dell T3600 workstation in a config that I'd need for work, but no way top end!

8 core 2.4 - 64gb of 1600mhz ECC RAM, a 512 SSD with Dual Firepro 2gb W5000 - price is about £4900 about $7600.

RAM and SSD aren't up to quoted nMP 768gb SSD & RAM at 1800mhz. And no TB obviously!

Do you think this will be below the price of a comparable nMP? Opinions?

Have to work out what OSX is worth to me as well?


...If you go too deep into the details of your scene, with many millions or billions of quads, then yes, you can blow the VRAM limits when rendering. It's all in knowing how to turn high polys into low polys with the least quality loss before rendering the scene. With enough RAM we wouldn't have to bother, just like we don't really bother with file size anymore since we are now playing in the terabytes-of-disk-space realm.

With multi-GB VRAM sizes, that is in the billions of bytes and hundreds of millions of 32-bit words. The W9000 FirePro card comes with 6GB of VRAM. The standard configurations of the current Mac Pro are in the 3GB to 6GB range. (6GB being over a billion 32-bit words.) Pretty much already at the point that the VRAM on GPGPU/GPU cards is as large as what was in most workstations several years ago.

Even super-duper-sized screens are only in the 10's of millions of pixels while the local storage capacity is in the 100's of millions of words. If you are careful about caching textures, there is room for even highly complex scenes.

I'm sure you can blow out regular mainstream cards/VRAM (e.g., in most iMacs), but in the 4-6GB zone (the range covered by the W7000, W8000, and W9000) it is in the practical-to-do range for a pretty broad range of rendering tasks.
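
For anyone who wants to check the arithmetic, here is a rough sketch. It assumes decimal gigabytes (10^9 bytes), 4-byte words, and a 3840x2160 panel as the "4K" example; those specifics are my assumptions, not figures from any spec sheet.

```python
# Rough check of the VRAM-versus-screen numbers; all constants are assumptions.
BYTES_PER_GB = 10**9      # decimal gigabytes (assumed)
BYTES_PER_WORD = 4        # one 32-bit word


def words_in_vram(gigabytes):
    """Number of 32-bit words that fit in the given amount of VRAM."""
    return gigabytes * BYTES_PER_GB // BYTES_PER_WORD


def pixels(width, height):
    """Pixel count of a screen with the given resolution."""
    return width * height


vram_words = words_in_vram(6)          # a 6GB card such as the W9000
screen_pixels = pixels(3840, 2160)     # assumed 4K panel

print(vram_words)                      # 1500000000
print(screen_pixels)                   # 8294400
print(vram_words // screen_pixels)     # 180 words of VRAM per pixel
```

So under those assumptions a 6GB card holds roughly 180 32-bit words for every pixel of a 4K screen, before counting anything else that has to live in VRAM.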

You must be forgetting the ACD 30" display that sold for $3300 for the first two or three years!

Not really because that was a different era. A 20" monitor in that era was in the $1000 range. Can buy one for way less than that now. Customers are price anchored to lower price points now. Besides the 30" cratered multiple times over the first couple of years. Look at the "Summary" section here:

"Apple lowered the price of this 30" display from $3,000 in October, 2005.

Mine cost me $2,500 in January 2006 ...

As of April 2007, Apple is giving them away at $1,800. "

http://www.kenrockwell.com/apple/30-inch-cinema-display.htm

Unlike 2004-2007, Apple has money coming out of their eyeballs. Chasing this product for some relatively minor bump for 12-18 months isn't really worth it. They can let the 3rd parties take a stab at it. When it transitions to stabilized prices, then step in and put a floor under the price. If they try to put a floor under the price now they'll just get run over. When things stabilize we will see who is a quality, volume screen supplier. Sharp seems to be still shooting itself in the foot by some reports.

At a reasonable viewing distance the current 27" models are already in the "Retina" range. If working with 4K content it is a big differentiator. If working on most other things it isn't going to help as much. And with 4K still lacking a more comprehensive 10-bit color stack, the market reach gets very questionable.

The other problem is that they will likely saddle it with docking station duties and overhead. Even though, as pointed out, it is far more effective and portable to more systems (non-Apple ones) as just a plain DisplayPort monitor, it seems likely that the Cupertino kool-aid is going to narrow it. If Apple jumps back into the monitor business then perhaps. So far though their track record says that they are out (like printers, rack servers, etc.).

Seems pretty straightforward to me...

I just don't think Apple would be boasting about 4K display support without plans to release their own 4K display. It seems very un-Apple like to release the new Mac Pro with all the marketing highlighting 4K support and then telling their customers to go buy an ASUS display if they want to take advantage of the capability. Pricing on competitor displays is about $3500 right now, so adding the usual Apple tax, $4K seems like a decent guess.


With multi-GB VRAM sizes, that is in the billions of bytes and hundreds of millions of 32-bit words. The W9000 FirePro card comes with 6GB of VRAM. The standard configurations of the current Mac Pro are in the 3GB to 6GB range. (6GB being over a billion 32-bit words.) Pretty much already at the point that the VRAM on GPGPU/GPU cards is as large as what was in most workstations several years ago.

Even super-duper-sized screens are only in the 10's of millions of pixels while the local storage capacity is in the 100's of millions of words. If you are careful about caching textures, there is room for even highly complex scenes.

I'm sure you can blow out regular mainstream cards/VRAM (e.g., in most iMacs), but in the 4-6GB zone (the range covered by the W7000, W8000, and W9000) it is in the practical-to-do range for a pretty broad range of rendering tasks.

You are talking about screen resolution and I'm talking about complex 3D objects. There isn't a relation between the two. I can use an 800x600 screen and still design a very complex scene with millions of quads in it. All of those quads are in relation to each other when it comes to rendering and they ALL have to be taken into account when you run your render pass. In other words, it fills up your VRAM pretty quickly. Of course if you use a CPU-bound renderer then you can make use of your RAM instead of your VRAM at the expense of speed. This is also why the NVidia Titan is a popular video card for 3D modellers since it comes with 6GB also. But some are hitting really close to that limit already and dream about getting their hands on the new 12GB Quadro.
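
To put a rough number on "fills up your VRAM pretty quickly", here is a back-of-envelope sketch. Every constant in it is an assumption for illustration (a 32-byte vertex layout, 32-bit indices, about one unique vertex per quad in a regular mesh, decimal gigabytes), and it ignores textures and whatever acceleration structures a real renderer builds, so actual usage would likely be higher.

```python
# Back-of-envelope VRAM estimate for a quad mesh; every constant is an assumption.
BYTES_PER_VERTEX = 32    # position (12) + normal (12) + UV (8), assumed layout
BYTES_PER_INDEX = 4      # 32-bit indices
INDICES_PER_QUAD = 6     # each quad split into two triangles
VERTICES_PER_QUAD = 1    # regular grid: vertices are shared between neighbours


def mesh_bytes(quads):
    """Approximate geometry footprint in bytes for the given quad count."""
    per_quad = (VERTICES_PER_QUAD * BYTES_PER_VERTEX
                + INDICES_PER_QUAD * BYTES_PER_INDEX)
    return quads * per_quad


def fits(quads, vram_gb):
    """True if the geometry alone fits in vram_gb decimal gigabytes."""
    return mesh_bytes(quads) <= vram_gb * 10**9


for quads in (10_000_000, 100_000_000, 1_000_000_000):
    print(quads, mesh_bytes(quads) / 10**9, fits(quads, 6))
```

Under these assumptions 100 million quads already come to about 5.6GB of geometry, which is right up against a 6GB card, and a billion quads is far past it.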

You are talking about screen resolution and I'm talking about complex 3D objects.

Those are not 100% decoupled from one another. Just as you alluded to lowering the polygon count to a level that keeps most of the quality with lower requirements, just cranking up the polygons without limit doesn't necessarily increase the quality of the render. It just makes it take longer.

If you have 10 polygons per pixel on the screen, who is going to see them? They can't all be presented on the screen. Sure, some might be clipped from view, but 9 for every pixel?

There isn't a relation between the two. I can use an 800x600 screen and still design a very complex scene with millions of quads in it.

You tell me how you get 1.5 billion polygons on an 800x600 screen.
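
As a quick sketch of the ratio in question (the function names are mine, purely for illustration, and it assumes every polygon lands somewhere on the screen):

```python
def polygons_per_pixel(polygons, width, height):
    """Average number of polygons competing for each screen pixel."""
    return polygons / (width * height)


def polygons_for_density(density, width, height):
    """Polygon count needed to reach a given polygons-per-pixel density."""
    return density * width * height


print(polygons_per_pixel(1_500_000_000, 800, 600))   # 3125.0
print(polygons_for_density(10, 800, 600))            # 4800000
```

So 1.5 billion polygons on an 800x600 screen averages out to about 3,125 per pixel, while 10 per pixel would only take 4.8 million polygons.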

All of those quads are in relation to each other when it comes to rendering and they ALL have to be taken into account when you run your render pass.

If no one can see them, what impact does it have on the final result? Polygons that are completely obscured from just about all angles aren't going to contribute much.

Can play the game of "I need 100 million polygons"... "too small? then need 1 Billion" ... "too small? then need 10 billion" ... All the while the human eye has constant constraints on what it can see.

This is also why the NVidia Titan is a popular video card for 3D modellers since it comes with 6GB also.

Right, because it mostly works for a sizable group of people now.

But some are hitting really close to that limit already and dream about getting their hands on the new 12GB Quadro.

I'm sure there are some folks rendering for 4K video, IMAX, or a tiny few for 8K prototype systems. 12GB may not necessarily be the path as much as more uniform access to main RAM, better coherence between RAM and VRAM, and a bigger (capacity) L3 on GPGPU cards.

Cards will probably hit waypoints at 8 and 10 before getting anywhere close to 12. Could get to the point where VRAM is just treated as a humongous L4 cache of main RAM. That would mean needing only enough to work on the current computational fronts/waves, and just paging in as necessary to complete the whole picture.

You tell me how you get 1.5 billion polygons on an 800x600 screen.

zoom out?

(for instance, you might detail a cornice on a building.. if you render the building as a whole, the detail might not be apparent in that particular image but the polys are still in the model and are being tracked.. it's (generally) entirely impractical to model multiple different versions with differing amounts of detail depending on the viewing distance in a render.. and that's just a simple example)

for example: here's 200,000 entities:

(that little black dot in the bottom right)
