Here's my 10 cents or points on whether Titans are overpriced.

When you pull out that kind of render-time/$ comparison, a $1000 GPU seems like a bargain.

Now in more detail: I agree that a $1K Titan is truly a bargain, as shown more fully below.

If one's into 3d animation rendering, keep reading -

This, in detail, is how I do the CPU vs. Tesla vs. Titan GPU comparison.

1) One Tesla K20X = ten E5-2687w v1. That Tesla GPU costs in the $3,400 range or above.

Also, please keep in mind that rendering with CPUs does not yield linear increases in performance even when the additional CPUs are in the same system, whereas rendering with GTX GPUs in OctaneRender does yield linear increases in performance when the GPUs are in the same system.

2) One E5-2687w v1 = 8 cores that run at 3.1 GHz at base; 6 to all cores at 3.4 GHz at stage 1 turbo; 4 or 5 cores at 3.5 GHz at stage 2 turbo; 2 or 3 cores at 3.6 GHz at stage 3 turbo; and 1 core at 3.8 GHz at stage 4 turbo. Otherwise the others are still running at base of 3.1 GHz.

3) Therefore, one Tesla K20X can deliver performance equal to that of 80 CPU cores that run at 3.1 GHz at base; 60 to all cores at 3.4 GHz at stage 1 turbo; 40 or 50 cores at 3.5 GHz at stage 2 turbo; 20 or 30 cores at 3.6 GHz at stage 3 turbo; and 10 cores at 3.8 GHz at stage 4 turbo; with the others running at base of 3.1 GHz.

4) One Titan, w/o overclocking by EVGA Precision X but with full double precision floating point peak performance enabled in the Nvidia Control Panel, is faster than a Tesla K20X. See the chart in my earlier post.

5i) Therefore, one Titan in Windows w/o overclocking by EVGA Precision X, but with all double precision floating point peak performance enabled by Nvidia Control panel, can deliver performance better than that of 80 CPU cores that run at 3.1 GHz at base; 60 to all cores at 3.4 GHz at stage 1 turbo; 40 or 50 cores at 3.5 GHz at stage 2 turbo; 20 or 30 cores at 3.6 GHz at stage 3 turbo; and 10 cores at 3.8 GHz at stage 4 turbo; with the others running at base of 3.1 GHz.

5ii) Comparison: 836 / 735 = 1.13741496598639 (see my chart - compare the base speed of the K20X with the Titan's). Check: 836 / 735 * 3950 = 4492.789 ≈ 4,500; 1.137 * 1310 = 1489.47 ≈ 1,500 (see my chart - single & double precision floating point peak performance values for Titan and K20X).
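The check in 5ii can be reproduced with a few lines of Python. This is only a sketch: the numbers are the chart figures quoted in 5ii, the MHz and GFLOPS units are assumptions about what those chart values mean, and the variable names are made up for illustration.

```python
# Figures quoted in 5ii (from the chart referenced there).
K20X_BASE_MHZ = 735    # assumed to be MHz
TITAN_BASE_MHZ = 836   # assumed to be MHz
K20X_SP_PEAK = 3950    # single precision peak, assumed to be GFLOPS
K20X_DP_PEAK = 1310    # double precision peak, assumed to be GFLOPS

# Ratio of the Titan's base clock to the K20X's base clock.
clock_ratio = TITAN_BASE_MHZ / K20X_BASE_MHZ

# Scale the K20X peaks by the clock ratio to estimate the Titan's peaks.
titan_sp_estimate = clock_ratio * K20X_SP_PEAK
# 5ii uses the ratio rounded to 1.137 for the double precision check.
titan_dp_estimate = round(clock_ratio, 3) * K20X_DP_PEAK

print(f"clock ratio:       {clock_ratio:.3f}")
print(f"Titan SP estimate: {titan_sp_estimate:.1f}")
print(f"Titan DP estimate: {titan_dp_estimate:.1f}")
```

The estimates land close to the rounded 4,500 and 1,500 used in the rest of the comparison.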

6) At the Titan's factory setting, one Titan has single precision floating point performance 1.137 times that of one Tesla K20X. So the Titan effect can be expressed this way:

a) 3.1 GHz x 1.137 = 3.50 GHz, all eight cores at base;

b) 3.4 GHz x 1.137 = 3.86 GHz, all eight cores at stage 1 turbo;

c) 3.5 GHz x 1.137 = 3.98 GHz, 4 or 5 cores at stage 2 turbo;

d) 3.6 GHz x 1.137 = 4.09 GHz, 2 or 3 cores at stage 3 turbo; and

e) 3.7 GHz x 1.137 = 4.20 GHz, 1 core at stage 4 turbo.

f) Otherwise the remaining cores are still running at base - 3.50 GHz.

You'd have to have a CPU with these characteristics to approximate 1/10th of the Titan's effect. The closest you'd get to this is one of Dave's overclocked E5-2687W v2s (see post no. 774 and http://www.cpu-world.com/CPUs/Xeon/Intel-Xeon E5-2687W v2.html ).

7) But that equivalency to overclocked E5-2687W v2s is just a small part of the comparison, because I've overclocked my Titan(s) by a factor of 1.49. So you'd then have to overclock those overclocked E5-2687Ws by another 1.49 times. Here's the next stage of the math:

a) 3.50 GHz x 1.49 = 5.20 GHz, all eight cores at base;

b) 3.86 GHz x 1.49 = 5.75 GHz, all eight cores at stage 1 turbo;

c) 3.98 GHz x 1.49 = 5.93 GHz, 4 or 5 cores at stage 2 turbo;

d) 4.09 GHz x 1.49 = 6.09 GHz, 2 or 3 cores at stage 3 turbo; and

e) 4.20 GHz x 1.49 = 6.26 GHz, 1 core at stage 4 turbo.

f) Otherwise, the remaining cores are still running at base - 5.20 GHz.

You'd have to have a CPU with these characteristics to approximate 1/10th of the overclocked Titan's effect.
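The two scaling passes in steps 6 and 7 can be sketched in Python as below. The clock steps are the ones used in the step 6 list (including the 3.7 GHz figure for stage 4), the two factors come from steps 6 and 7, and the `scale` helper and dictionary names are hypothetical. The sketch does not round between the two passes, so its results can differ from the listed figures by a few hundredths of a GHz.

```python
# Xeon E5-2687W clock steps as used in the step 6 list (GHz).
cpu_clocks_ghz = {
    "base": 3.1,
    "stage 1 turbo": 3.4,
    "stage 2 turbo": 3.5,
    "stage 3 turbo": 3.6,
    "stage 4 turbo": 3.7,
}

TITAN_VS_K20X = 1.137  # stock Titan relative to a K20X (step 6)
OVERCLOCK = 1.49       # overclock factor reported in step 7


def scale(clocks, factor):
    """Multiply every clock by a dimensionless factor."""
    return {stage: ghz * factor for stage, ghz in clocks.items()}


stock_titan = scale(cpu_clocks_ghz, TITAN_VS_K20X)
overclocked_titan = scale(stock_titan, OVERCLOCK)

for stage, ghz in cpu_clocks_ghz.items():
    print(f"{stage}: {ghz:.2f} -> {stock_titan[stage]:.2f} "
          f"-> {overclocked_titan[stage]:.2f} GHz")
```

Note that 1.137 and 1.49 are plain multipliers, so the result stays in GHz.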

8) But to be kind, let's not cut the Titan into tenths; rather, let's keep it whole. So one overclocked Titan has the compute potential of ten 8-core CPUs of a kind that doesn't exist yet, and we have no idea when one will drop. Put another way, one whole overclocked Titan has the compute potential of a CPU with these characteristics:

a) all eighty cores at base - 5.20 GHz;

b) all eighty cores at stage 1 turbo - 5.75 GHz;

c) 40 to 50 cores at stage 2 turbo - 5.93 GHz;

d) 20 or 30 cores at stage 3 turbo - 6.09 GHz; and

e) 10 cores at stage 4 turbo - 6.26 GHz.

f) Otherwise, the remaining cores are still running at base - 5.20 GHz.

9) Extending Nvidia's comparison of 10 particular CPUs to one Tesla K20X, let's use GHz equivalency and take the CPU with the highest total GHz output. That is the Xeon E5-2697 v2, which has a base speed of 2.7 GHz (12 cores * 2.7 GHz = 32.4 GHz) and a turbo speed of 3.5 GHz (12 cores * 3.5 GHz = 42 GHz). Let's see how one Titan stacks up to that:

a) One Titan has the total base GHz equivalency of 80 cores * 5.2 GHz = 416 GHz.

b) 416 GHz / 32.4 GHz = 12.84; So one fully tweaked $1,000 Titan is equal to approximately thirteen $2,600+ Xeon E5-2697s. 13 * 2,600 = $33,800+ for 13 Xeon E5-2697.

c) If you spent $4,000 for 4 Titans to put on a motherboard with 4 double-wide, x16 PCIe slots, you'd have the equivalent of (416 GHz * 4 = 1,664 GHz) / 32.4 GHz = 51.36 or 51 Xeon E5-2697s. 51 E5-2697s would cost you about $132,600. $4K vs. $132K: Um- which route would you take?

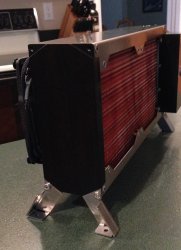
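The GHz-equivalency arithmetic in step 9 can be sketched as follows. All figures are the ones quoted in step 9 (the $2,600 Xeon price is the post's "2,600+" floor); the function and constant names are hypothetical and only there for illustration.

```python
# Figures from step 9.
TITAN_EQUIV_CORES = 80       # CPU-core equivalents per overclocked Titan
TITAN_EQUIV_BASE_GHZ = 5.2   # equivalent base clock per core
XEON_CORES = 12              # Xeon E5-2697 v2
XEON_BASE_GHZ = 2.7
XEON_PRICE_USD = 2600        # lower bound quoted in the post
TITAN_PRICE_USD = 1000


def xeon_equivalents(num_titans):
    """E5-2697 v2 CPUs needed to match num_titans on summed base GHz."""
    titan_ghz = num_titans * TITAN_EQUIV_CORES * TITAN_EQUIV_BASE_GHZ
    xeon_ghz = XEON_CORES * XEON_BASE_GHZ
    return titan_ghz / xeon_ghz


for n in (1, 4):
    equiv = xeon_equivalents(n)
    gpu_cost = n * TITAN_PRICE_USD
    cpu_cost = round(equiv) * XEON_PRICE_USD
    print(f"{n} Titan(s) = {equiv:.2f} Xeons: "
          f"${gpu_cost:,} in GPUs vs. about ${cpu_cost:,} in CPUs")
```

Summed GHz is a rough yardstick rather than a benchmark, so treat the output as the same back-of-the-envelope estimate as the step itself.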

10) My WolfPackAlphaCanisLupus has, in one case, 8 Titans that have a very high CPU core rendering equivalency.

a) all 640 cores at base - 5.20 GHz;

b) all 640 cores at stage 1 turbo - 5.75 GHz;

c) 320 to 400 cores at stage 2 turbo - 5.93 GHz;

d) 160 or 240 cores at stage 3 turbo - 6.09 GHz; and

e) 80 cores at stage 4 turbo - 6.26 GHz.

f) Otherwise, the remaining cores are still running at base - 5.20 GHz.

g) 640 cores * 5.2 GHz = 3,328 GHz; 3,328 GHz (8 Titans) / 32.4 GHz (Xeon E5-2697) = ~102.72. 103 x $2,600 = $267,800. What would it cost to house and power 102 Xeon E5-2697s? Two thousand dollars to house a pair would be on the low end. So let's say, forgetting the electrical power issue, $102,000 for housing (102 / 2 = 51; 51 * $2,000 = $102,000) + $267,800+ for CPUs (about $370,000 total) vs. $8,000 for GPUs + about $6,000 for housing (about $14,000 total). Um- did I take the wrong route by choosing the 1/26 path ($370,000 / $14,000 = 26.43)? Not!
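The cost comparison in 10g can be sketched the same way. The prices and housing figures are the rough ones from 10g, the constant names are hypothetical, and the sketch keeps the unrounded CPU total, so its ratio comes out slightly under the one computed from the rounded $370,000.

```python
import math

NUM_TITANS = 8
GHZ_PER_TITAN = 80 * 5.2           # core equivalents * base GHz (step 9a)
GHZ_PER_XEON = 12 * 2.7            # Xeon E5-2697 v2 at base
XEON_PRICE_USD = 2600              # lower bound quoted in the post
TITAN_PRICE_USD = 1000
HOUSING_PER_XEON_PAIR_USD = 2000   # "low end" figure from 10g
GPU_HOUSING_USD = 6000             # approximate figure from 10g

# How many Xeons match 8 Titans on summed base GHz.
xeons_needed = NUM_TITANS * GHZ_PER_TITAN / GHZ_PER_XEON
cpu_count = math.ceil(xeons_needed)

# 10g prices all the CPUs but houses them in whole pairs.
cpu_cost = cpu_count * XEON_PRICE_USD
cpu_housing = (cpu_count // 2) * HOUSING_PER_XEON_PAIR_USD
cpu_total = cpu_cost + cpu_housing

gpu_total = NUM_TITANS * TITAN_PRICE_USD + GPU_HOUSING_USD

print(f"Xeons needed: {xeons_needed:.2f} (buy {cpu_count})")
print(f"CPU route:    ${cpu_total:,}")
print(f"GPU route:    ${gpu_total:,}")
print(f"Ratio:        {cpu_total / gpu_total:.2f}")
```

Power costs are left out here, as they are in 10g.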

Now, doesn't a Titan seem dirt cheap as a 3d animation rendering tool and justify a desire, if not an obsession, for more double wide, x16 PCIe slots perceived to be in one Windows system? If not, then one's most likely not into 3d animation rendering.