All We Know About Maximizing CPU Related Performance

... . Now I did what I thought was a crude comparison a few posts back comparing GHz & cores as a total number - is this an accurate enough representation of actual CPU power? - - - edit: and of course in my case at work there is quite a range of generations here in which each architecture version will perform differently.

A CPU based renderfarm lacks the linearity that you get from identical GTX cards and OctaneRender, so long as the additional GTX(s) are perceived by OctaneRender to be in one system. Thus, even if, in the analysis that I gave above, the total GHz of the CPUs was equal to that of a Titan (or two or three, etc.), the Titan system would still be the faster render tool.

JasonVP mentioned there is a loss when 'context switching' with multiple threads which I hadn't heard of.

Here're two videos that should give you some background to understand Jason's point. I suggest that you watch them in the following order:

1) http://www.youtube.com/watch?v=_Y7DABKAYjg and

2) http://www.youtube.com/watch?v=0qgbu_xnKaY .

Pasting a spreadsheet text in here didn't format well so I've inserted it as an image.

Image

Anyway the overall total is 229.20

Does not seem very impressive next to those Titan figures you posted

So, it looks like this mass of power consuming steel performs right underneath the stock settings of a single Titan under Windows. Can we approximate the difference between this and how the card functions under OSX?

At Titan's factory setting (and adequately powered), one Titan has the compute potential of eighty CPU cores running at a 3.50 GHz base clock: 80 * 3.50 GHz = 280 GHz. Your netgang has a raw equivalency of about 229 GHz from 76 total cores. So one Titan would render faster, particularly since you're adding OctaneRender. Even if you use the compute potential of a Tesla K20X, which is that of ten eight-core E5-2687W v1s with a base speed of 3.1 GHz (a level that I'd expect a Titan in a Mac, or under OSX, to have no problem attaining), you get 80 * 3.1 GHz = 248 GHz, which is still greater than the GHz equivalency of the netgang.
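To make that comparison easy to rerun with other numbers, here is a minimal Python sketch of the same "cores times base clock" arithmetic. The function name `ghz_equivalent` is just an illustrative choice, and the figure is a crude proxy rather than a benchmark; the inputs are the ones quoted in this thread.

```python
# Crude "total GHz" proxy from the thread: core count times base clock.
# It ignores architecture differences, so treat it as a rough yardstick only.

def ghz_equivalent(cores: int, base_ghz: float) -> float:
    """Return the raw GHz-equivalency figure for a group of cores."""
    return cores * base_ghz

titan_stock = ghz_equivalent(80, 3.5)    # Titan at factory settings
titan_k20x = ghz_equivalent(80, 3.1)     # K20X-style estimate: ten 8-core E5-2687W v1s
netgang = 229.20                         # spreadsheet total quoted for the 76-core netgang

for label, value in [("Titan (stock)", titan_stock),
                     ("Titan (K20X estimate)", titan_k20x)]:
    print(f"{label}: {value:.1f} GHz equivalent, {value / netgang:.2f}x the netgang")
```

Both estimates come out ahead of the netgang total, which is the point being made above.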

Ok I just couldn't get my head around a single card on my home machine outperforming every mac combined at work, so I have just bought the full version of Octane for C4D - better go back and remember how to use those lights and materials. A new Titan will soon be alongside that GTX570 in my SR-2.

Crazy how I needed a realistic point of reference to convince me - sometimes numbers on a screen are hard to put in perspective

Congratulations on those purchases. How many PCIe slots will you have empty after you add the Titan? If that number is only one, then the Bad Man suggests that you spring for an EVGA 04G-P4-2647-KR GeForce GT 640 4GB 128-bit DDR3 PCI Express 3.0 x16 HDCP Ready Video Card (for $99 after rebate from Newegg [ http://www.newegg.com/Product/Product.aspx?Item=N82E16814130818 ]). Use it for the display so that both the GTX Titan and the GTX570 work solely for Octane while you're tweaking/building your scene; that also gives your system better interactivity [ Page 9 of the OctaneRender User Manual states, "For the smoothest user experience with OctaneRender™, it is recommended to dedicate one GPU for the display and OS to avoid slow and jerky interaction and navigation. The dedicated video card could be a cheap, low powered card since it will not be used for rendering and it should be unticked (off) in CUDA devices in the Device Manager/Preferences." ]. That GT 640 could be ticked on for final render when you're no longer tweaking the scene. Then you'd get the compute performance of all three cards at the same time. However, if you have space for another double wide card, then wait until you can get another fast GTX.

Congratulations on those purchases. How many PCIe slots will you have empty after you add the Titan?

Unfortunately the answer is less than none at this point!

It will look like:

Slot 1: Titan

Slot 2: Titan

Slot 3: GTX 570

Slot 4: GTX 570

Slot 5: Ethernet

Slot 6: Areca 1213-4i RAID

Slot 7: Firewire

Leaving me having to remove my PCIe OCZ revodrive and move to a much slower SATA SSD on a 3Gb/s port for Windows OS.

Or maybe I can find a USB ethernet adapter - I just had another quick look and found one that does 1000 Base T GbE - all the others I saw in the past would only run 100 Base T. Could free up another slot if it runs with OSX.

If I were to run the GTX570 as the display card does that mean my scenes would be limited to its 1280MB memory? Might start asking around at the octane forums as well

Thanks for your help Tutor!


How about outfitting and setting it up like one of these:

I.

Slot 1 (x16 ) : GTX 570-1 (off for use in build/tweaking)

Slot 2 (empty) : GTX 570-1 (off for use in build/tweaking)

Slot 3 (x8 ) : Dynapower USA NetStor NA211A TurboBox PCI Express Expansion Box for Desktop [ $724 from http://www.bhphotovideo.com/c/produ...NA211A_DT_3_SLOT_PCIe_EXPANSION_ENC_PCIe.html ] holding (i) Firewire card, (ii) Ethernet card & (iii) PCIe OCZ revodrive card

Slot 4 (x8 ) : Areca 1213-4i RAID

Slot 5 (x16 ) : Titan-1

Slot 6 (empty) : Titan-1

Slot 7 (x16 ) : Titan-2 ($1K)

edge of mobo : Titan-2

(Adds about $1,724 US)

OR

II.

Slot 1 (x8 ) : GT 640 4 gig (off for use in build/tweaking) ($100)

Slot 2 (x8 ) : PCIe OCZ revodrive card

Slot 3 (x8 ) : Dynapower USA NetStor NA211A TurboBox PCI Express Expansion Box for Desktop [ $724 from http://www.bhphotovideo.com/c/produ...NA211A_DT_3_SLOT_PCIe_EXPANSION_ENC_PCIe.html ] holding (i) Firewire card and (ii) Ethernet card

Slot 4 (x8 ) : Areca 1213-4i RAID

Slot 5 (x16 ) : Titan-1

Slot 6 (empty) : Titan-1

Slot 7 (x16 ) : Titan-2 ($1K)

edge of mobo : Titan-2

(Adds about $1,824 US)

OR

III.

Slot 1 (x16 ) : Titan-1

Slot 2 (empty ) : Titan-1

Slot 3 (x8 ) : Dynapower USA NetStor NA211A TurboBox PCI Express Expansion Box for Desktop [ $724 from http://www.bhphotovideo.com/c/produ...NA211A_DT_3_SLOT_PCIe_EXPANSION_ENC_PCIe.html ] holding (i) Firewire card, (ii) Ethernet card and (iii) PCIe OCZ revodrive card

Slot 4 (x8 ) : Areca 1213-4i RAID

Slot 5 (x16 ) : Titan-2 ($1K)

Slot 6 (empty) : Titan-2

Slot 7 (x16 ) : Titan-3 ($1K)

edge of mobo : Titan-3

(Adds about $2,724 US)
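As a quick check on the three totals above, here is a small Python sketch that adds up only the newly purchased parts in each option. The dictionary keys and the `added_cost` helper are illustrative names; the prices are the rounded ones used in the lists ($724 expansion box, $1K per additional Titan, $100 for the GT 640).

```python
# Prices as used in the option lists above (US dollars, rounded).
PRICES = {"expansion_box": 724, "titan": 1000, "gt640": 100}

# Only the parts that would have to be bought for each option.
OPTIONS = {
    "I": {"expansion_box": 1, "titan": 1},
    "II": {"expansion_box": 1, "titan": 1, "gt640": 1},
    "III": {"expansion_box": 1, "titan": 2},
}

def added_cost(parts: dict) -> int:
    """Sum price times quantity over the new parts in one option."""
    return sum(PRICES[name] * qty for name, qty in parts.items())

for name, parts in OPTIONS.items():
    print(f"Option {name}: adds about ${added_cost(parts):,} US")
```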

If I were to run the GTX570 as the display card does that mean my scenes would be limited to its 1280MB memory?

The GTX570 would display the same size scenes that it displays now, but if you tick the GTX570 "on" for rendering, then the excess memory of the Titan(s) would be wasted because the rendering card with the least amount of memory would set the max for them all [p.10 of OctaneRender User Manual says, "In multi-GPU setups, the amount of RAM available to OctaneRender™ is not equal to the sum of the RAM on the GPUs, but it is restricted to the GPU with the smallest amount of RAM." ]. Note (1) that for my CUDA rigs I keep cards with similar memory amounts together and (2) that the GT 640 card that I suggested for interactivity has 4 gigs of memory. That's enough ram for it to participate in rendering many scenes. The minimum recommended for Octane is 1.5 gigs [p.6 of OctaneRender User Manual says, "There are several things to consider when purchasing a new GPU. You'll want to purchase a video card with the largest amount of RAM (we recommend a minimum of 1.5 GB video RAM), with the most amount of CUDA Cores for your budget. Make sure your Power Supply can handle the new card as well." ]. Of course, you could sell the GTX570 or put it to use in another system; then you could put three Titans in the EVGA, with one Titan turned "off" for build/tweaking if interactivity suffers, but have them all "on" for final render.
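The smallest-card rule quoted from the manual can be sketched in a few lines of Python. This is only an illustration of the rule as stated, not Octane's actual code; `usable_vram_mb` is a made-up helper name, and the 6144 MB figure for the Titan assumes its standard 6 GB configuration.

```python
# Memory per card in MB. The GTX 570 and GT 640 figures are from this thread;
# the Titan figure assumes the standard 6 GB card.
CARDS_MB = {"GTX Titan": 6144, "GTX 570": 1280, "GT 640": 4096}

def usable_vram_mb(enabled: list) -> int:
    """Scene memory available when the listed cards are ticked on for rendering.

    Per the manual's wording, the limit is the smallest enabled card,
    not the sum of all enabled cards.
    """
    if not enabled:
        raise ValueError("at least one card must be enabled for rendering")
    return min(CARDS_MB[name] for name in enabled)

print(usable_vram_mb(["GTX Titan", "GTX 570"]))  # limited by the GTX 570
print(usable_vram_mb(["GTX Titan", "GT 640"]))   # limited by the GT 640
print(usable_vram_mb(["GTX Titan"]))             # the full Titan memory
```

Unticking the GTX 570 for rendering (and using it only for display) is what lifts the limit back up to the next-smallest enabled card.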


When the wikipedia Nvidia charts aren't enough - for the GPU Techie in you

Here's an illuminating article on GPU Performance Analysis and Optimization for you GPU techies [ http://on-demand.gputechconf.com/gtc/2012/presentations/S0514-GTC2012-GPU-Performance-Analysis.pdf ] . It's got lots of pictures and is a highly educational but relatively easy read for software developers. It's also a recommended read if you want to develop CUDA applications or need insight into their development. Here're additional video and written resources to help develop your GPU knowledge base: http://www.gputechconf.com/gtcnew/on-demand-gtc.php .


How about outfitting and setting it up like one of these...

Hey Tutor thanks for the config suggestions, although I may hold off on any extra expansion boxes until my upcoming (wedding sized) expenses are all covered

As an additional update I've been running some tests on how After Effects scales with increased threads when enabling Multi-processing within the preferences. I created a custom benchmark scene and repeated the render while incrementally increasing threads from 1 to 24.

All I can say so far is CPU cores/threads definitely don't behave linearly like adding CUDA cards

It could already be common knowledge about how CPUs behave but this info can be used to specifically set up After Effects for maximum performance - something which has been somewhat a mystery to a lot of designers without extra background knowledge.

Hey Tutor thanks for the config suggestions, although I may hold off on any extra expansion boxes until my upcoming (wedding sized) expenses are all covered

Congratulations. I understand - first things first.

As an additional update I've been running some tests on how After Effects scales with increased threads when enabling Multi-processing within the preferences. I created a custom benchmark scene and repeated the render while incrementally increasing threads from 1 to 24.

All I can say so far is CPU cores/threads definitely don't behave linearly like adding CUDA cards

It could already be common knowledge about how CPUs behave but this info can be used to specifically set up After Effects for maximum performance - something which has been somewhat a mystery to a lot of designers without extra background knowledge.

Can't wait 'til you have the time to reveal details. I should be able to finish the GPU testing of Premiere soon and I'll post the details here.

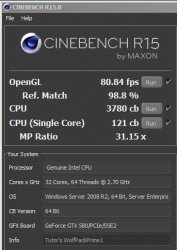

Maxon has released Cinebench 15.

Here's a pic showing how one of my 32 core WolfPackPrimes scored on Cinebench 15. Also, note the xCPU and the CPU (one core) scores and how they compare. While GPU additions under OctaneRender are as close to perfect linearity as possible, there is a slight shortfall with Cinebench 15 (and presumably Cinema 4d 15): the MP ratio, which compares 32 core vs. 1 core performance, is 31.15x rather than 32x. Still, software has a whole lot to do with linear scaling as compute units are added, and Maxon's Cinema 4d and Otoy's OctaneRender are two software packages that scale well. They do it best for 3d animators. OctaneRender, of course, works with the much faster computing unit, because a GTX Titan is over 10 times faster at rendering than an E5-2687W. Thus, a formidable 3d rendering combination consists of EVGA GTX Titan(s), tweaked in Windows with EVGA Precision X and Nvidia Control Panel, inside a multi CPU system running Cinema 4d together with OctaneRender and its Cinema 4d plugin. With that combo of combinations, you can render on the CPUs while you render, separately, on the GPUs. I call that combo of combinations the ultimate in maximizing CPU related performance.
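To put the 31.15x MP ratio in perspective, here is a short Python sketch that turns it into a parallel efficiency and, assuming Amdahl's law is a fair model here (my assumption, not something Cinebench reports), an implied serial fraction. The function names are illustrative.

```python
def parallel_efficiency(speedup: float, cores: int) -> float:
    """Fraction of ideal linear scaling actually achieved."""
    return speedup / cores

def amdahl_serial_fraction(speedup: float, cores: int) -> float:
    """Serial fraction s implied by Amdahl's law, S = 1 / (s + (1 - s) / N),
    solved for s given a measured speedup S on N cores."""
    return (cores / speedup - 1.0) / (cores - 1.0)

mp_ratio, cores = 31.15, 32   # Cinebench 15 MP ratio and core count from the post

print(f"efficiency: {parallel_efficiency(mp_ratio, cores):.1%}")
print(f"implied serial fraction: {amdahl_serial_fraction(mp_ratio, cores):.3%}")
```

Under that model, a serial portion of under a tenth of a percent is enough to account for the gap between 31.15x and a perfect 32x.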


Looks great, would love to see some pics of the whole build when it's done.

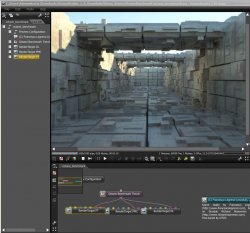

It's done, along with a transfer of the guts to the Cooler Master Cosmos II case. What. A. BEAST! I've attached the images for your perusal. Again, this is a gaming PC and I'm hesitant to share the info and images here, since we're in the Mac Pro section of the forums. But, we're also talking Hacks, and some of these lessons learned may help...

Anyway:


Hey this thread is generally about all CPU related performance, so go right ahead

You could definitely apply this to machines running osx obviously not real macs though hehe

When you said separate unit, I didn't realise this rad needed its own pedestal outside the case!

Looks like one serious block of cooling gear, congrats on the setup.


When you said separate unit, I didn't realise this rad needed its own pedestal outside the case!

The pedestal was a last minute decision based on a design flaw in the radiator, as well as stupidity of yours truly. I'm not sure I'd buy this exact radiator setup again, because the input and output holes in it are threaded through plastic, without any metal inserts in the threads. The fittings you generally buy with BSPP G1/4 threads are: metal.

Metal vs plastic usually ends one way: badly. I originally had the radiator standing on its edge so the fans could push/pull air easily, side to side. The unit has input and output holes easily accessible if the radiator is sitting in that position. Unfortunately, I finger-tightened the input metal fitting a little too tight, and stripped the plastic threads in the radiator.

Much cursing ensued.

Rolling the radiator over so that the fans are facing up was really the only solution. It has another pair of input and output holes as shown in the pics. But it's a sub-optimal airflow; the fans are trying to suck air off the desk top and exhale it upward. Given the thickness of the fans (and the pump motor itself), I had no choice but to raise it up off the desk a bit. Thus the pedestal.

Oh, and for what it's worth: I strongly recommend investing in a small box of puppy pee pads like I have underneath the rad. They catch spills while you're filling the reservoir, and they make any leaks very obvious.

Thanks to habitullence for triggering some of my neurons to fire.

Habitullence recently brought to my attention this video on dual Titans vs dual 780s - http://www.youtube.com/watch?v=7rS88DG9TJo and asked for my take on their conclusions.

My takes are:

Those results are for gaming w/SLI configurations. If I were building a gaming rig for single or SLI configuration, I'd get 780s over Titans (or as suggested, below, I'd wait just a bit). But gaming won't pay my bills.

For CUDA 3d rendering in OctaneRender one should not use SLI. Using SLI only impairs the process [ http://render.otoy.com/faqs.php - "Does Octane Render take advantage of SLI? No, but it can use multiple video cards for rendering (see above). It is also recommended to disable the SLI option in your Nvidia control panel to maximize Octane's rendering performance." ].

The 780s, at stock, are clocked higher than regular Titans [the non-EVGA SuperClocked ("OC") versions]. But if a gamer overclocked two regular Titans to run at the same speed as the two 780s at factory (a feat easily doable in Windows with regular Titans), then those Titans would most likely excel at those gaming oriented benchmarks. One could spring for the EVGA "OC" version to get a more powerful Titan card if he/she didn't want to do any overclocking him/herself or was using a Mac Pro. All of my Titans are the EVGA "OC" versions and I have over 300 MHz of overclocking headroom still left. The Titan, at stock, has twice the amount of ram as a 780 (and about eight times the double precision floating point peak performance of the 780 when run in Windows, if one uses Nvidia Control Panel to release that ability; and "no," you can't do that with a 780). Ram size and increased double precision capability are the two main reasons for Nvidia's Titan vs. 780 pricing differential. For 3d rendering a Titan can render a scene so large, with so many objects and textures, as to cause a 780 to choke, i.e., the 780 couldn't render the scene at all. And with the additional double precision floating point peak performance, a Titan has the potential to render that larger/more complex scene extremely fast.

However, if you haven't already purchased GPGPU cards, you might want to wait for about two months. Nvidia has hinted that it may soon be releasing a $1,000ish real monster - the GTX 790 (that's dual 780s) [ http://videocardz.com/nvidia/geforce-700/geforce-gtx-790 ]. But note - it may require dual eight pin power connectors. If the specs are as shown by videocardz, then this is the card (with 4,992 CUDA cores running at stock speeds of 1,100/1,150 MHz) that I would most likely recommend over any Titan1 (with 2,688 CUDA cores running at stock speeds of 837/876 MHz for the regular version and at stock speeds of 876/928 MHz for the EVGA "OC" version). The 780 has 2,304 CUDA cores running at stock speeds of 863/900 MHz. The 780's memory bus is the same as the Titan's - 384-bit with a memory bandwidth of 288 GB/sec and an effective memory clock of 6,008 MHz. The 790 is projected to have 6 gigs of ram and a 768-bit memory bus, but an effective memory clock of only 6,000 MHz, 8 MHz lower than that of the 780 and the Titan. The ultimate configuration of that memory in the 790 will matter greatly, i.e., whether the 6 gigs is per GPU or the total for both GPUs. The latter, which is more likely the case, would leave each GPU with the same 3 gigs as a single 780. Hopefully, there's a Titan2 waiting in the wings. What if the Titan2 is the GTX version of the recently announced Tesla K40(X) with a GK180 GPU [ http://videocardz.com/46388/nvidia-launch-tesla-k40-atlas-gk180-gpu ], just as the Titan1 is the GTX version of the Tesla K20(X)? That would mean that the Titan GK180 might be a more specially binned GK110 chip, with 12 gigs of ram, 2,880 CUDA cores, higher core speeds, higher memory bandwidth and thus greater single and double precision floating point performance, all while still maintaining the same TDP and $1,000 price tag.
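For anyone who wants to see where figures like 288 GB/sec come from, here is a rough Python sketch of the usual back-of-the-envelope formulas: memory bandwidth is bus width in bytes times the effective memory clock, and theoretical peak single precision throughput is 2 operations (one fused multiply-add) per CUDA core per clock. The function names are illustrative, the GTX 790 numbers are the unconfirmed videocardz figures quoted above, and theoretical peaks are not render speeds.

```python
def memory_bandwidth_gb_s(bus_bits: int, effective_mhz: float) -> float:
    """Peak memory bandwidth: (bus width in bytes) x (effective clock)."""
    return bus_bits / 8 * effective_mhz * 1e6 / 1e9

def peak_sp_gflops(cuda_cores: int, core_mhz: float) -> float:
    """Theoretical peak single precision GFLOPS at the given core clock,
    counting one fused multiply-add (2 FLOPs) per core per clock."""
    return 2 * cuda_cores * core_mhz / 1000

# (CUDA cores, base MHz, bus bits, effective memory MHz) as quoted in the post.
cards = {
    "GTX Titan": (2688, 837, 384, 6008),
    "GTX 780": (2304, 863, 384, 6008),
    "GTX 790 (rumored)": (4992, 1100, 768, 6000),
}

for name, (cores, mhz, bus, mem_mhz) in cards.items():
    print(f"{name}: {peak_sp_gflops(cores, mhz):,.0f} GFLOPS peak, "
          f"{memory_bandwidth_gb_s(bus, mem_mhz):.1f} GB/s")
```

The 384-bit bus at 6,008 MHz works out to about 288.4 GB/s, matching the figure in the post.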

My guess is that current 780 and Titan1 prices may soon let go of the handles on top of the price sliding board. But Titan1s have been so limited in sales quantities that the effect on them will be extremely limited. Moreover, if there's no Titan2, Titan1s will still have those ram size and double precision floating point advantages to act as glue on their backsides or, at least, to soften their little fall.

Isn't Nvidia's battle with AMD wonderful? Competition - I love it.


No problem, thanks for the insight

For CUDA 3d rendering in OctaneRender one should not use SLI. Using SLI only impairs the process [ http://render.otoy.com/faqs.php - "Does Octane Render take advantage of SLI? No, but it can use multiple video cards for rendering (see above). It is also recommended to disable the SLI option in your Nvidia control panel to maximize Octane's rendering performance." ].

So SLI doesn't give you anything for workstation 3D, in fact it reduces performance.

eight times the double precision floating point peak performance of the 780s when run in Windows if one uses Nvidia Control Panel to release that ability

Can this be unlocked in Mac OS?

Ram size and double precision increased capability are the two main reasons for Nvidia's Titan vs. 780 pricing differential. For 3d rendering a Titan can render a scene so large with so many objects and textures as to cause a 780 to choke, i.e., the 780 couldn't render the scene at all. And with the additional double precision floating point peak performance, a Titan has the potential to render that larger/more complex scene extremely fast.

Thought you must be getting something for the extra coin. It seems to me like the difference is similar to comparing an i7 with a Xeon. At stock both will perform roughly the same, in fact the i7 will perform better on a per $ basis, until you start getting into workstation applications.

Components for my build start arriving next week but CPUs won't be available here until month end at the earliest. Guess I'll take my time with the build and hope that NVIDIA release new card(s) in November. Funny how technology seems to move so fast and yet we're always playing the waiting game.

So SLI doesn't give you anything for workstation 3D, in fact it reduces performance.

That's the situation for now and the way it has been.

Can this be unlocked in Mac OS?

Not until Nvidia releases for the Mac an equivalent to Nvidia Control Panel for Windows, unless someone like the folks at Netkas.org or MacVideoCards comes up with an alternative.

Thought you must be getting something for the extra coin. It seems to me like the difference is similar to comparing an i7 with a Xeon. At stock both will perform roughly the same, in fact the i7 will perform better on a per $ basis, until you start getting into workstation applications.

That's it.

Components for my build start arriving next week but CPUs won't be available here until month end at the earliest. Guess I'll take my time with the build and hope that NVIDIA release new card(s) in November. Funny how technology seems to move so fast and yet we're always playing the waiting game.

I believe that the release will be set to around the time that AMD releases its new video cards, to steal AMD's thunder. That could take us into early next year for the release of the AMD RADEON R9 290X, although videocardz has the release set for sometime in the 4th quarter of this year [ http://www.techspot.com/news/53743-nvidia-allegedly-working-on-dual-gpu-gk110-based-gtx-790.html and http://videocardz.com/amd/radeon-r200/radeon-r9-290x ].


What does GPU computing have to offer? Topping it off - remote graphics via LAN/WANs.

At GTC 2013, Nvidia showcased the diversity of problems being tackled by CUDA GPUs [ http://www.youtube.com/watch?v=A84v7lbdcYg&list=SPZHnYvH1qtOY0ZrWQgnQlj4dwGZ1pVgoj ]. Video 10 of 11 highlights Otoy's OctaneRender and how it is radically changing the 3d animation industry. Those of you who are interested in other fields may find some of the other presentations valuable.

Also of particular note are videos 7 to 10, which showcase Nvidia's Grid VCA [ http://develop3d.com/hardware/nvidia-grid-vca ], a visual computing appliance that is targeted toward small and midsize businesses. With this 4U rack appliance, individuals can work with Windows-only heavy duty applications even from their Windows and Mac portables (and soon iPads and Android tablets) at unrivaled speeds via virtualized graphics, both over LANs and remotely. The VCA features up to four Xeon processors and 16 GRID GPUs, plus all the system software, including the hypervisor that creates and runs up to 16 virtual machines [ http://develop3d.com/features/nvidia-gtc-2013 ]. Can't innovate my ... .


What does GPU computing have to offer? Topping it off - remote graphics via LAN/WANs.

This is exactly what I thought when the new Mac Pro was released... Apple want everything to move outside the box into the cloud (or central on-site business server) but my question was - is it really going to happen right now? Thus my un-excitement for the nMP, as it's a solution without a problem - or supporting infrastructure. However this nVidia cluster may prove it's coming sooner than expected.

- - - - - - - - - - -

In other news, although I've been browsing the site at work, I finally got around to finishing off the AE CS6 thread count tests.

Test machine is as listed in my sig, running at stock speeds of 3.2GHz and 1333MHz RAM. Despite slight CPU differences it falls almost exactly in line with the top of the line real Mac Pro according to average stock GB scores.

Disk cache was disabled in AE. Memory per background CPU was set at 3GB where possible; towards the top end of the core count, however, this had to be reduced so that all CPU threads would register for rendering.

Here's a shot of the settings where cores were incrementally added:

And here are the results:

So it's quite clear how quickly the performance benefit of extra cores drops off!

Keep in mind Adobe have often stated that AE is most effective with actual physical cores, not threads. But even if you chop the scale off at only 12 threads, the results show much the same rapid fall-off in gains; render times simply plateau from 12 to 24 threads.

***Please note: if you have a 4, 6 or 8 core machine, do not assume your render times will match my results with the extra threads/cores simply chopped off. For example, on a 6 core/12 thread machine, the 1 and 2 thread results may initially follow a similar scale, but performance will drop off far sooner, at around the 4 core mark, and level out completely from 6 to 12 threads. I just don't want anyone misinterpreting this and saying "see, there's no benefit to more cores". There's definitely a benefit, and the more cores the better, but the gains just don't scale linearly.
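To put some shape on that kind of drop-off, here is a minimal sketch using Amdahl's law. This is a textbook model, not something measured from the AE test, and the 0.90 parallel fraction is an arbitrary assumption picked only for illustration. The function name is made up for this example.

```python
def amdahl_speedup(threads, parallel_fraction):
    """Ideal speedup over 1 thread when only part of the job can run in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / threads)


if __name__ == "__main__":
    ASSUMED_PARALLEL_FRACTION = 0.90  # assumption, NOT taken from the AE results
    for threads in (1, 2, 4, 6, 12, 24):
        speedup = amdahl_speedup(threads, ASSUMED_PARALLEL_FRACTION)
        # Efficiency = how much of each added thread actually turns into speed.
        efficiency = speedup / threads
        print(f"{threads:>2} threads: {speedup:5.2f}x speedup, {efficiency:6.1%} efficiency")
```

Under that assumption each extra thread still helps, but the speedup per thread keeps shrinking, which is the general pattern described above. Real AE behaviour also depends on memory per background process and on physical versus logical cores, which this model ignores.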

There are also a few seconds here and there out of place but this is due to time being taken while AE is "initializing background processes" at the start of the render. Sometimes this process would randomly take between 2 and 10 seconds, so I did up to 4 passes of each thread count and took the best speed of each.
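For anyone scripting their own timings, the "several passes, keep the best" approach can be sketched like this. It is only an illustration: `best_of` and the dummy workload are hypothetical names, and AE itself is not being driven here.

```python
import time


def best_of(task, passes=4):
    """Run task() `passes` times and return the fastest wall-clock time in seconds."""
    timings = []
    for _ in range(passes):
        start = time.perf_counter()
        task()
        timings.append(time.perf_counter() - start)
    # Taking the minimum discards passes slowed by one-off startup delays.
    return min(timings)


if __name__ == "__main__":
    def dummy_render():
        # Stand-in workload; replace with whatever actually launches a render.
        sum(i * i for i in range(200_000))

    print(f"best of 4 passes: {best_of(dummy_render):.4f} s")
```

Taking the best pass rather than the average is a deliberate choice here, since the random 2 to 10 second initialization delay only ever adds time.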

Here's a link for anyone wanting to check the project for themselves. It's nothing special, I just created a few layers of varying effect types to give AE a bit of a workout. No linked footage, plugins or fonts are required, it should run on anyone's machine.

https://www.hightail.com/download/OGhmQ1ZqTStCSnJyZHNUQw

So I hope this gives you guys an insight into setting up your machines for best performance in AE

Nvidia's response to the AMD RADEON R9 290X won't be the GTX 790 or Titan2.

Nvidia's response to the AMD RADEON R9 290X will be the GTX 780 Ti, aka The Titan Killer [ http://hothardware.com/News/NVIDIAs...s-Leaked--Could-be-a-Touch-Faster-Than-Titan/ ]; well, almost. The GeForce GTX 780 Ti may sport a GK110 Kepler GPU with, among other features, 2,496 CUDA cores. Its core clock speed may be 902MHz base and 954MHz boost, and it will sport 3GB of GDDR5 memory on a 384-bit bus that will likely be clocked at 1502MHz (6008MHz effective). In price, it'll likely sit between the GTX 780 and the Titan, selling for around $650. This means that regular GTX 780 prices will take the fall. Moreover, the Titan will still have the RAM size and double precision floating point advantages*/, which will likely maintain Titan's current pricing of $1k for the standard model. The GTX 780 Ti will most likely hit the streets in mid-to-late November of this year (but after AMD releases the R9 290s) to capture holiday shoppers by being competitive with, if not beating, the R9 290's prices.

*/ BTW - An EVGA Titan OC edition can still be massively overclocked to maintain its vast superiority, by attaining a single precision floating point peak of 6.728 TFlops and a double precision floating point peak of 2.243 TFlops.
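As a rough check on those peak figures, here is a small sketch of the usual back-of-envelope formula: peak FLOPS = CUDA cores x 2 (one fused multiply-add per core per cycle) x clock. The 2,688 core count and the 1/3 double precision rate are the commonly published Titan specs and are assumptions as far as this post is concerned. The post does not state the overclocked frequency, so the code only derives the clock that the 6.728 TFlops figure would imply.

```python
TITAN_CUDA_CORES = 2688        # assumption: published GTX Titan spec, not from the post
FLOPS_PER_CORE_PER_CYCLE = 2   # assumption: one fused multiply-add counted as 2 flops
DP_TO_SP_RATIO = 1.0 / 3.0     # assumption: Titan's full-rate double precision mode


def peak_sp_tflops(cores, clock_mhz):
    """Theoretical single precision peak in TFlops for a given core count and clock."""
    return cores * FLOPS_PER_CORE_PER_CYCLE * clock_mhz * 1e6 / 1e12


def implied_clock_mhz(sp_tflops, cores):
    """Clock (MHz) that would be needed to reach a quoted single precision peak."""
    return sp_tflops * 1e12 / (cores * FLOPS_PER_CORE_PER_CYCLE) / 1e6


if __name__ == "__main__":
    quoted_sp = 6.728  # TFlops, from the post
    clock = implied_clock_mhz(quoted_sp, TITAN_CUDA_CORES)
    print(f"implied core clock: {clock:.0f} MHz")
    print(f"implied DP peak:    {quoted_sp * DP_TO_SP_RATIO:.3f} TFlops")
```

These are theoretical peaks only; sustained throughput in a renderer will be lower.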


So it's quite clear how quickly the performance benefit of extra cores drops off!

This is fantastic testing! Thank you for taking the time to put that together and post it. I really do wish Premiere Pro had the same controls for number of threads like AE does. Alas, it doesn't. That's the application I'm most interested in.

Either way, well done!

Thanks again habitullence for your questions.

Because of the questions that you last asked me, when I went to my CUDA rig with the GTX 690, I remembered to try something new, and did it pay off. A GTX 690 is essentially a two-GPU card. So I went into Nvidia Control Panel (currently Windows only) to do some exploring. There I found a parameter that lets me turn off the default treatment of the two GPUs within that double-wide card as a multi-GPU (i.e., SLI) setup and treat each GPU separately.

Doing so allowed me to render the OctaneRender Benchmark scene in 79 seconds (compare that time with the ones in this shootout done by Barefeats - http://www.barefeats.com/gputitan.html ). The GTX 690 even beat the time taken there by the Titan (95 sec.), rendering that demo scene 16 seconds faster. I did overclock just the memory of the GTX 690 to achieve that result, but the GTX 690 untweaked still consistently beat that Titan's time by at least 8 seconds. I might never have thought about treating those GPUs separately but for your inquiry.

Before I went into Nvidia Control Panel to break up the SLI treatment, the GTX 690 rendered the scene in 101-102 seconds. So the overall gain for the GTX 690 with its memory untweaked was to render the scene about 14 seconds faster. Although that might not seem like much, an animation needs 24 to 30+ frames for each second of video, depending on its format, and a one minute video multiplies that by 60. So that could require rendering 1440 to 1800+ frames for a one minute video. Obviously that 14 seconds per frame quickly adds up: 14 sec. * 1440 = 20,160 sec.; 20,160 sec. / 60 = 336 minutes saved, or (/60 again) 5.6 hours saved. When time is of the essence, every minute saved is important. So here is a perfect example of why one should not render in OctaneRender with GPUs set to SLI mode, even if the card is one of those two-GPU wonders.
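The time-saved arithmetic above can be wrapped in a few lines so you can plug in your own frame rate and clip length. This is just a sketch; the function name is made up, and the 14 seconds per frame is the figure from the GTX 690 test, not a general constant.

```python
def render_time_saved(seconds_saved_per_frame, fps, video_seconds):
    """Return (frames, minutes saved, hours saved) for a clip of the given length."""
    frames = fps * video_seconds
    total_seconds = seconds_saved_per_frame * frames
    return frames, total_seconds / 60, total_seconds / 3600


if __name__ == "__main__":
    for fps in (24, 30):
        frames, minutes, hours = render_time_saved(14, fps, 60)
        print(f"{fps} fps, 1 minute of video: {frames} frames, "
              f"{minutes:.0f} min ({hours:.1f} h) saved")
```

At 24 fps this reproduces the 1440 frames, 336 minutes and 5.6 hours worked out by hand; at 30 fps the saving grows to 7 hours.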

So SLI doesn't give you anything for workstation 3D, in fact it reduces performance. ... .Can this be unlocked in Mac OS?


Further curiosity didn't kill this cat. Picking up on my last post -

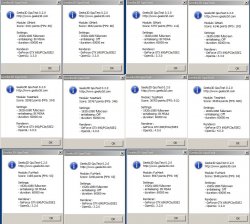

Curious about how multi (SLI) and non-multi modes on the GTX 690 affected other performance metrics, I ran GpuTest in each mode (other settings were unchanged from those employed in post # 845). The two columns on the left were run in SLI and the two columns on the right were run in non-multi mode, with the first column of each pair showing the results at the highest anti-aliasing setting and the second column of each pair showing the results with no anti-aliasing. To me, the results were shocking, to say the least, especially in non-multi mode at the highest anti-aliasing setting. What paradoxes? The highest scores were in non-SLI mode with the highest anti-aliasing setting.


This is fantastic testing! Thank you for taking the time to put that together and post it. I really do wish Premiere Pro had the same controls for number of threads like AE does. Alas, it doesn't. That's the application I'm most interested in.

Either way, well done!

Thanks, and let me know if there's any test you have in mind for Premiere that I can run to compare to your system. I may have some control in the BIOS over the CPUs that is unavailable on a real Mac Pro.

I think part of what you are seeing here is exactly what it looks like: CUDA is a more efficient way to split load across multiple GPUs than SLI.

I have used SLIPatch on my 2009 and run multiple GPUs. I was amazed that it literally meant like 90% added to frame rates.

But that is what SLI was for, initially.

CUDA and OpenCL are a different beast, and they apparently can better coordinate the GK104 cores than the BRO4 bridge on the card.

(You can see BRO4 using NVFlash. It has its own EEPROM and little world it lives in.)

As far as why the AA is BOOSTING frame rates, I can only guess.

I have noticed inconsistencies using the Unigine benchmarks where AA doesn't seem to slow down the speeds, whereas it used to be a boat anchor that would sink fps.

Conceptually it feels like the part of the core that handles this is unused unless you turn this function on.

Also, how do you have the global settings for FSAA etc?

The Nvidia control panel can override anything set in the renderer, or let the program choose.


Curious about how multi (SLI) and non-multi modes on the GTX 690 affected other performance metrics, I ran GpuTest in each mode (other settings were unchanged from those employed in post # 845). The two columns on the left were run in SLI and the two columns on the right were run in non-multi mode, with the first column of each pair showing the results at the highest anti-aliasing setting and the second column of each pair showing the results with no anti-aliasing. To me, the results were shocking, to say the least, especially in non-multi mode at the highest anti-aliasing setting. What paradoxes? The highest scores were in non-SLI mode with the highest anti-aliasing setting.

That's unbelievable, it's as if the second half of the card does almost nothing when in SLI mode!

All this talk of how much better GPUs run in Windows has me doubting whether I'll bother with OSX for the video/AE/C4D workstation for much longer... MacVidCards is uncovering evidence that the 'workstation' cards are running on the same drivers as gamer cards so it seems there's no real optimization for pro apps. Still love OSX though argh!!

+1 That's unbelievable, it's as if the second half of the card does almost nothing when in SLI mode!

All this talk of how much better GPUs run in Windows has me doubting whether I'll bother with OSX for the video/AE/C4D workstation for much longer... Still love OSX though argh!!
