Confirmed and Possible Flashable GTX 680 Models

- Thread starter xcodeSyn

Read the excellent post Step-by-Step Instructions for Flashing GTX680, and you are golden.

Thanks for the information. I will flash the card in the next few days.

You can set the clocks back up using Kepler BIOS Tweaker in Windows.

Thanks!

Another success. GTX-680 Zotac ZT-60101-10P flashed and installed in my Mac Pro 5,1 very quickly. I booted into Windows 7 and followed the instructions in this tutorial; it took me about five minutes. Works like a charm.

ZOTAC GTX 680 AMP! Dual-Silencer (ZT-60105-10P) ... SUCCESS!!!!

I put the Kepler utility, the ROM, and nvflash in the same folder.

I read the original BIOS of the Zotac card with the Kepler utility and backed it up.

I tweaked the EVGA BIOS to my card's specs as instructed in this thread.

The only problem was that I could not flash the ROM with the Kepler utility, so I downloaded the latest nvflash (5.513.0) and flashed with the following command (suggested by @dosdude1 here): nvflash64 -6 custom-name.rom

Flashing was done in Windows 10 installed in legacy mode, and it was a very easy process!
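For anyone who wants to script the two nvflash steps, here is a minimal sketch that only builds and prints the command lines; it does not run anything. Only the `nvflash64 -6 custom-name.rom` command comes from the post above. The `--save` backup step, the backup file name, and the `build_flash_plan` helper are my own assumptions (the poster backed up with the Kepler utility instead), so check them against the help output of your nvflash version before relying on them.

```python
import shlex
from pathlib import Path


def build_flash_plan(new_rom, backup_rom="original-backup.rom", exe="nvflash64"):
    """Return the nvflash command lines for a backup-then-flash sequence.

    Hypothetical helper: it only assembles argument lists, it never
    executes nvflash.
    """
    # Cheap sanity check so a wrong file is not passed by accident.
    if Path(new_rom).suffix.lower() != ".rom":
        raise ValueError(f"expected a .rom file, got {new_rom!r}")
    return [
        # Assumption: --save dumps the card's current BIOS to a file.
        [exe, "--save", backup_rom],
        # From the thread: -6 lets the flash proceed despite the ID mismatch.
        [exe, "-6", new_rom],
    ]


if __name__ == "__main__":
    for argv in build_flash_plan("custom-name.rom"):
        print(shlex.join(argv))
```

Printing the plan first makes it easy to confirm the backup step comes before the flash step; run the printed commands by hand from an elevated prompt in the folder that holds nvflash and the ROM.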

Hello everybody,

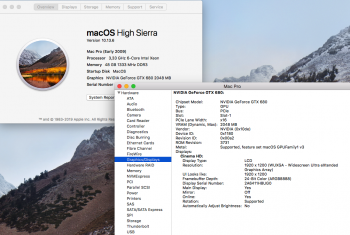

I recently purchased an ASUS GTX 680 DirectCU II TOP and flashed it with the usual EVGA BIOS. Everything works well under both OSX and Windows 7, except that the clock speed under OSX (OceanWave and LuxMark) is shown as 1411 MHz instead of the usual 1084 MHz, while under Windows 7 it is reported correctly.

CUDA-Z, on the other hand, reports the right speed but shows strange behavior in the Performance tab: in Windows 7 the Device-to-Device speed is steady at above 70 Gb/s, while under OSX it fluctuates between 55 and 69 Gb/s.

Has anybody in the forum with this particular card experienced this issue?

After that I also modified the clocks and the voltage of the ROM to match those of the original GTX 680 TOP version using Kepler BIOS Tweaker. I carefully double-checked every difference between the ASUS and EVGA Mac ROMs, and every ASUS parameter value has been copied into the EVGA ROM.

The only thing that does not match perfectly is the Boost Table, where the shift point between the two ROMs was hard-coded differently and I could not edit it. Only three values in the middle (around position 21) are different; all the others match perfectly.

I successfully flashed this modded ROM back to the card. The speed increase is noticeable, there is no higher fan activity than before, and the benchmarks confirm the new speed is effective. The strange 1411 MHz value is still there after the reflash with the new clock speeds.

So far I am really happy with this ASUS card, but I would like the reported speed to be fixed. Any ideas?

Many thanks in advance.

Please, can someone who has flashed an ASUS GTX 680 DirectCU II with the standard EVGA BIOS tell me what clock speed OpenCL OceanWave and LuxMark report in OSX?

Got a Gainward GeForce GTX 680 really cheap (dude thought it was faulty as it gave him the old BSOD). Gave it a quick blast with some compressed air, cleaned the PCIe fingers with some isopropyl alcohol, and chucked it in my cMP. Worked sweet, so I flashed it and now I have boot screens. Cheers for all the info in this thread!

would this card work.. ?

I think it would...

https://www.amazon.com/MSI-N680GTX-...e=UTF8&qid=1371469552&sr=8-7&keywords=GTX+680

See post 322 in this thread.

Gigabyte Reference GTX680 aka "lightsaber edition" => GV-N680D5-2GD-B*

* https://www.gigabyte.com/us/Graphics-Card/GV-N680D5-2GD-B#ov

HW: MacPro 5.1 mid2010

SOFT: NVflash v5.134 => download: https://forums.macrumors.com/thread...-rates-pci-e-2-0-5gt-s.1603260/#post-17504407

FW: EVGA 2GB => download:

https://forums.macrumors.com/thread...-680-mac-edition.1565735/page-5#post-17132316

INSTRUCTION: step-by-step => https://forums.macrumors.com/thread...-pci-e-2-0-5gt-s.1603260/page-4#post-17832428

I also confirm that the Gigabyte GV-N680D5-2GD-B works great (boot screen, Mojave, etc.)!

+ https://forums.macrumors.com/thread...e-gtx-680-models.1578255/page-2#post-17501849

Your card is very similar, maybe identical, to my original eVGA GTX 680 Mac Edition. Nice to know that this one works.

I flashed my new Gainward GeForce® GTX 680 2GB today, and it works just fine.

I'm all happy now with a working boot screen again; it makes life easier sometimes...

Anyway, I was wondering how I can read the GPU die temperature within macOS?

Within Boot Camp it's easy: I just run GPU-Z, for example, and it gives me all the temps I want to see.

Unfortunately, neither iStat Menus nor Macs Fan Control can read the GPU so far.

Anybody got a fix for me?

My Gainward GTX 680 can run a little hot, I think due to a very low GPU fan speed setting.

The fans also only ramp up at around 78 degrees, which in my opinion is a little late and will slowly bake the GPU over time.

Maybe tools like the Kepler BIOS editors can set a fan speed or something?

How can I fix this? I don't know what the best way is for me.

When I run Macs Fan Control on my 5,1, there's a reading for PCIe Ambient. Boost A is for the PCIe cooling, so try setting this to a higher rpm to reduce the GPU temp. The Ambient temp will rise if the card is running hotter. There's no Mac app I know of to monitor GPU temps, unfortunately, so this would be a good workaround. Maybe one of the wizards on here can offer a better solution?

This is a good solution, but you suggested the wrong fan. If you want the PCIe Ambient temp to run lower, then the "PCI" fan needs to be ramped up. It is the first fan at the top of the list. The "Boost A" fan is the fan in the CPU heatsink. Ramping up the Boost A fan will lower CPU temps, and likely the IOH temps.

Thanks skizzo for that correction... Evacuating the heat here is key!

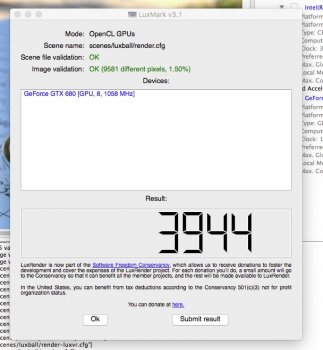

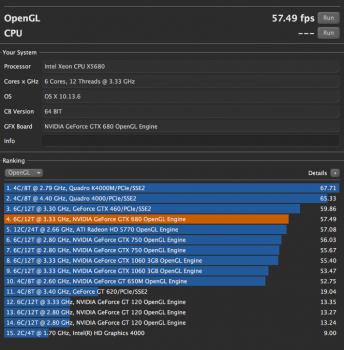

I am puzzled. My first GTX 680 was an ASUS DirectCU II TOP, flashed with the EVGA Mac Edition BIOS and with the clock speeds corrected using Kepler BIOS Tweaker. The speed reported by LuxMark was 1411 MHz, which I thought was due to the difference between the card and the BIOS. But now I have purchased a genuine EVGA card with the same layout as the Mac version, flashed it with the original BIOS, and LuxMark still reports 1411 MHz instead of 1058 MHz as it should...

My Mac Pro is a 4,1 upgraded to 5,1, with Mojave 10.14.3 installed. Am I missing something? No one here seems to have reported this before. Could it be an issue with 4,1 Mac Pros only?

My flashed Gainward GTX 680 also shows 1411 MHz in LuxMark.

I'm running a Mac Pro 4,1 flashed to 5,1 here, with Mojave 10.14.3 as well.

Maybe a 1411 MHz core clock is what the original EVGA Mac BIOS uses?

I don't know.

And perhaps tuning it with Kepler BIOS Tweaker does not work on some cards for some reason?

Small update:

It should be 1058 MHz, I think, according to the spec list from EVGA.

Should we worry that our core is running at 1411 MHz all the time?

Or is this just a temporary speed boost when starting LuxMark, for example?

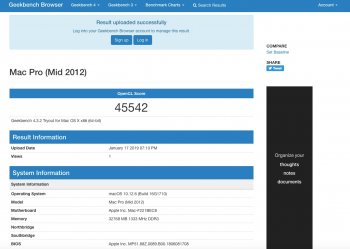

I have flashed my Palit GTX 680 Jetstream 2 GB (P/N NE5X680H1042-1040J). I flashed it on a Windows 10 PC using NVFlash 5.134 for Windows. I tried four newer versions first, but I couldn't get them to work.

I used the -6 modifier to force flashing (otherwise an error would prevent flashing).

I used the standard EVGA ROM from here.

As this is my first flash, I haven't done any post-flash tweaking. I believe the Cinebench score is pretty low, but I get the feeling Cinebench may not be the best benchmarking tool. The fans run perfectly for now: they speed up at boot, turn off at the desktop, and speed up under load. The fans are a bit tired; the middle fan bearing is actually shot, so that fan is disconnected until a replacement arrives. I'll need to research this Kepler tweaker and run some LuxMark benchmarks.

OK, sorry for the delay in answering, but I have now verified what is really happening.

The OpenCL framework reports GPU speeds correctly up to OSX Sierra; starting with High Sierra and Mojave, the reported speed is totally wrong. When you see screenshots of LuxMark reporting the right speed, on eBay and elsewhere on the net (and also here in various threads), you can bet you are looking at a Mac Pro running OSX 10.12.6 or earlier.

If you have an nVidia GPU and 10.13 installed, you can read the correct clock speed using the nVidia Web Drivers and CUDA-Z: because CUDA-Z polls the nVidia drivers (and not the OpenCL framework), the reported speed is real and correct.

Of course, the effective speed of the graphics card is what is written in the firmware, not what the OpenCL (or OpenGL) drivers report. If you are seeing poor performance in Cinebench, for example, keep in mind that Apple's OpenGL stopped at 4.1 even on recent cards; for this reason only Metal benchmarks are meaningful from High Sierra on.
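If you want to see the number the OpenCL framework itself hands out, without going through LuxMark, a rough sketch along these lines should do. It assumes the third-party pyopencl package is installed and that the benchmark's figure comes from the device's maximum clock frequency property, which I have not verified for LuxMark specifically. The `check_reported_clocks` helper and the fallback sample are made up for illustration; the 1411 and 1058 MHz figures are the ones quoted in this thread.

```python
from types import SimpleNamespace


def check_reported_clocks(devices, expected_mhz):
    """Return (name, reported MHz, matches spec) for each device.

    `devices` can be any objects exposing `.name` and
    `.max_clock_frequency`, which is what pyopencl devices provide.
    """
    rows = []
    for dev in devices:
        reported = int(dev.max_clock_frequency)
        rows.append((dev.name, reported, reported == expected_mhz))
    return rows


def opencl_devices():
    """List every OpenCL device on every platform (needs pyopencl)."""
    import pyopencl as cl  # third-party; imported lazily on purpose

    return [dev for platform in cl.get_platforms() for dev in platform.get_devices()]


if __name__ == "__main__":
    try:
        devices = opencl_devices()
    except Exception:
        # pyopencl missing or no OpenCL platform: use a made-up sample
        # that mirrors the values reported in this thread.
        devices = [SimpleNamespace(name="GeForce GTX 680", max_clock_frequency=1411)]
    for name, mhz, ok in check_reported_clocks(devices, expected_mhz=1058):
        status = "matches spec" if ok else "differs from spec"
        print(f"{name}: {mhz} MHz ({status})")
```

A mismatch here only tells you what the framework reports, not what the card is actually running at, so compare it against a driver-level tool such as CUDA-Z before worrying.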

For info, this is the LuxMark result from my Sapphire PULSE RX580 in High Sierra. The speed of 1340 MHz is correctly reported.

Only after upgrading to Mojave did the reported speed become 300 MHz (the base clock for the low-power state).

Guys, I got a K5000 reference PC card and I wonder how to flash it. I tried the K5000 Mac ROM, but NVFlash just gives me errors such as ID not matching and board ID mismatch. I don't know what is wrong. Please help. Has anyone successfully flashed a K5000? Will nvflash 5.134 work for the K5000? Should I use -4, -5, or -6? Thanks.
