If Apple did not care about having the single-core performance crown, they would have stuck to stock ARM chips and not designed their OWN ARM chips.

Sure, but now it isn't, and honestly, only some geeks here care. Nobody else does. They've given up the single-core crown and Macs will sell as well as ever. I'm sure they could create some boutique chips that ran at super high power to get that crown back, but they won't in the main consumer and pro M-series chips because it just doesn't make much sense from their perspective, from both a technical and a business point of view.

But who knows, maybe they'll have a Mac Pro SKU that wins back the crown, since those are boutique flagship machines anyway, likely selling less than 0.1% of all Macs.


First M2 benchmarks

- Thread starter EugW



Intel does not care about performance per watt, as their laptop chips are nowhere near as efficient because of a range of factors.

Intel didn't give up the single-core laptop CPU speed crown. They're just 10 years behind schedule.

I’m not sure that works out.

If Apple did not care about having the single-core performance crown, they would have stuck to stock ARM chips and not designed their OWN ARM chips.

Apple clearly does care about performance, but not to the point where they’ll sacrifice efficiency for it. It’s not an “either/or” argument so much as it’s “we’re going to get as much performance as we can without clocking the nuts off the processor for dickwaving”.

Saying they’d just use off-the-shelf designs if they “didn’t care about being the fastest” is looking at it in black and white.

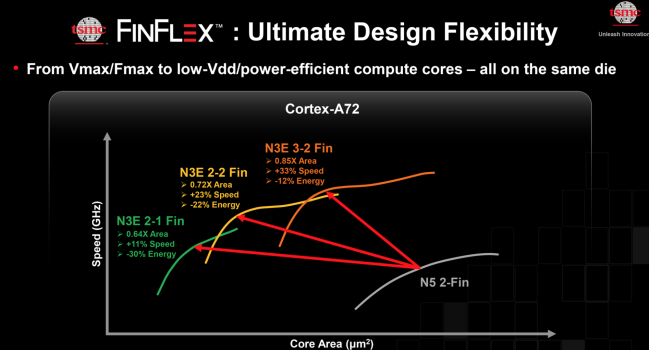

My god, isn’t the purpose of graphs to make information easier to understand?

I wonder what the M3 will be like compared to the M3 Pro/Max/Ultra when FinFlex N3 arrives.

This one makes me feel like I’m brain damaged.

You guys are completely missing the point. Apple's target here is to be able to sell super fast machines at low power usage at high volume, to make the most money. Buying Qualcomm or Samsung ARM chips obviously is not going to achieve that. The chips Apple has designed, with their chosen clock rates and power specs, meet those criteria, and in spades.

If Apple did not care about having the single-core performance crown, they would have stuck to stock ARM chips and not designed their OWN ARM chips.

That's the target. The target is not simply to achieve the single-core speed crown. If they do, that's great, and Apple will brag about it. If they don't, that's fine too, and Apple just won't talk about it.

I think M2 is a prime example of this. They didn't push the envelope here regarding single-core CPU speed. Instead, they chose to focus on GPU improvements, hardware ProRes acceleration, Neural Engine improvements, memory improvements, and efficiency. In fact, regarding the latter, Apple said it themselves: they have a "relentless focus on power-efficient performance".

Apple clearly does care about performance, but not to the point where they’ll sacrifice efficiency for it.

Exactly. Apple is greedy. They want both performance and efficiency.

Yes, I do agree with that. Notice how M2 did not get its own event because it was not a good CPU increase...



A12Z to M1 was 50% faster.

M1 to M2 was 18% faster.

I think M3 will get its own event in 2023 like M1 did, because of the new node and new architecture.

They didn't push the envelope here regarding single-core CPU speed.

Of course they did. Just not as much as with some other iterations. Apple has always operated on a shifted cadence, focusing on different areas every year. But M2 brings around a 7-10% increase in single-core CPU performance, which is consistent with what Intel or AMD have been delivering in their generational improvements. In fact, M2 is currently among the fastest, if not the fastest, mobile CPUs in terms of single-threaded performance.


Let's be honest, M2 was underwhelming by Apple standards. M3 should be more exciting.

Of course it was underwhelming. I do believe that they are behind schedule. M2 was probably supposed to debut early last autumn, but it was around 8 months late. COVID and friends have really messed things up, and now Russia and China are creating more uncertainty. It will probably take years until Apple's intended schedule becomes clear.

The M2 alone is still a good evolution over the M1 thanks to its LPDDR5, higher memory bandwidth, and 30-40% faster GPU.

But yes, when it comes to the M2 Pro/Max, IF they are just scaled-up M2s, that will be less of an upgrade.

In the PC world, I don't see 30-40% GPU improvements over the course of 18 months.

So for now, the M2 is good enough for the new MacBook Air; it's a complete package.


Never said you were. Just that it's the direction that kind of comment typically comes from.

Since when am I an x86 apologist? 🤪

Apple producing something good, arguably better than x86, while also leaving performance on the table for the sake of design flexibility and ergonomics, is anathema to some people.

The policy is not arbitrary. It is prioritizing the right things, and it brings Apple financial benefits as well.

And yes, there is both direct and indirect evidence that the maximal clock of M-series is an inherent limitation of their design rather than arbitrary policy.

Of course the Apple SoCs are unlikely to scale as high in clock speed as, say, an Alder Lake desktop chip! But it is also extremely unlikely that they are fundamentally unable to extend further along the frequency vs. power curve than what Apple ships in all their devices.

I agree with everything you say here. But none of it proves that M1 clock speed is an arbitrary policy. Apple doesn’t just choose to operate on a more efficient segment of the power curve; they actually deliver comparable peak performance at 2-3 times lower power consumption than their x86 competitors. That’s not something one can explain with node advantage alone; this has to be a fundamental property of their CPU design. And it’s unlikely that this design comes with no drawbacks. Is it entirely unreasonable to speculate that a limited maximal clock might be among those drawbacks?

That the design decisions behind M1 make it impossible to systematically achieve stable operation at clocks higher than 3.2 GHz.

I contend that this assertion is false, and I have seen no supporting evidence. None.

And I probably won't, because it doesn't make sense.

What Andrei showed was, if you stop to think about it, simply that Apple's SoCs work just like pretty much every damn CPU and GPU manufactured: they have a frequency-to-power curve. We know that, and it has been demonstrated, not that it needed to be. That knowledge actually leads to further conclusions. Apple does not play a binning game with their chips. For reasons that fundamentally depend on lithographic stochastics, individual chip performance follows something vaguely like a normal distribution. (How wide that distribution is, and its precise shape, depend not only on lithography but also to some extent on circuit design, but we are entering deep waters there, where I'd prefer that someone who has done actual work in those trenches speak about the particulars.)

Direct evidence: tests done by AnandTech some years ago showed a sharp exponential increase in power consumption when approaching the maximal operating clock. If I remember correctly, this was done for A12, but if Apple's basic design didn't change, it could suggest that the current chips already operate at or close to their limit.
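To make the frequency-to-power point concrete, here is a toy Python sketch. It assumes the textbook dynamic-power relation P = C * V^2 * f plus a made-up linear voltage-vs-frequency relation (the constants `v_min`, `f_at_vmin` and `volts_per_ghz` are hypothetical, not Apple's), and it treats performance as proportional to clock. It only illustrates why power climbs much faster than clock near the top of the curve; it is not a fit to the AnandTech measurements, and it ignores leakage.

```python
# Toy model of a CPU frequency-to-power curve (all constants are hypothetical).
# Dynamic power is modelled as P = C * V^2 * f, and the voltage needed to
# sustain a clock is assumed to rise linearly once f exceeds a floor.

def required_voltage(f_ghz, v_min=0.6, f_at_vmin=1.0, volts_per_ghz=0.15):
    """Voltage needed to run at f_ghz under an assumed linear V-f relation."""
    return v_min + max(0.0, f_ghz - f_at_vmin) * volts_per_ghz


def dynamic_power(f_ghz, c_eff=1.0):
    """Dynamic switching power in arbitrary units: C_eff * V^2 * f."""
    v = required_voltage(f_ghz)
    return c_eff * v * v * f_ghz


if __name__ == "__main__":
    base_f = 3.2
    base_p = dynamic_power(base_f)
    for f in (2.0, 3.2, 3.6, 4.0, 4.5):
        # Performance is naively taken to scale with clock.
        print(f"{f:.1f} GHz: {f / base_f:.2f}x perf, "
              f"{dynamic_power(f) / base_p:.2f}x power")
```

Because both V and f rise together, power grows roughly with the cube of the clock in this model, so each extra percent of clock costs several percent of power.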

Likewise, the exact behavior of the frequency-to-power curve is not going to be the same across processes, or necessarily across designs. But there is nothing to suggest that Apple's curve would be truncated at exactly the frequency they ship all their products with.

Now, since Apple doesn't ship product tiers based on chip binning, in order not to have to discard a very large share of the SoCs coming from the fab, they need to put the cut-off pretty far down the normal distribution curve. All the SoCs delivered to customers will meet the minimum criteria, but they will differ individually. (Quite a lot, actually, judging by the normal distribution curve, which on the other hand doesn't tell us much since we don't know how wide that curve is.)
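A minimal sketch of the single-cut-off argument, assuming (purely for illustration) that each die's maximum stable clock is normally distributed with an invented mean of 3.6 GHz and sigma of 0.12 GHz; Apple publishes no such data. It picks the one clock spec that lets a target fraction of dies pass and reports how much headroom the median die leaves unused, which is the point about individual chips differing.

```python
from statistics import NormalDist

# Hypothetical spread of the highest stable clock per die, in GHz.
# Mean and sigma are invented for illustration only.
FMAX_DIST = NormalDist(mu=3.6, sigma=0.12)


def yield_at(spec_ghz, dist=FMAX_DIST):
    """Fraction of dies whose max stable clock is at least spec_ghz."""
    return 1.0 - dist.cdf(spec_ghz)


def spec_for_yield(target_yield, dist=FMAX_DIST):
    """Highest single clock spec that still lets target_yield of dies pass."""
    return dist.inv_cdf(1.0 - target_yield)


if __name__ == "__main__":
    for target in (0.90, 0.99, 0.999):
        spec = spec_for_yield(target)
        headroom = FMAX_DIST.median - spec
        print(f"yield {target:.1%}: spec {spec:.2f} GHz, "
              f"median die has {headroom:.2f} GHz unused headroom")
```

The higher the yield you demand from a single SKU, the further down the curve the spec has to sit, and the more headroom typical chips carry.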

Because Apple wants to sell a compact and quiet Studio. They, quite correctly in my book, make the call that pushing further up the frequency vs. power curve provides little benefit. Unless you are a forum benchmark warrior, who cares about 10-15% performance in absolute terms? It would make a molecular dynamics run take 9 hours instead of 10, or apply a Photoshop filter in 0.45 s rather than 0.5 s, neither of which makes any practical difference whatsoever. Having the freedom to design small, quiet computers is way, WAY more important to Apple and arguably their customers, and not pushing the envelope on clocks has the added benefit that they don't have to do complex binning, with the associated product-matrix clutter, in order to get good SoC/product yield! (Financial win: Tim is happy.)

Indirect evidence: just a few considerations. First, if Firestorm could support higher clocks at the expense of higher power consumption, it is likely that Apple could have squeezed out at least 10-20% more performance at 100-150% higher power consumption. That would still place them comfortably below desktop Intel or AMD in power, by a very wide margin, while delivering higher performance. So why is the M1 in the Studio still limited to 3.2 GHz? Surely it has a budget of 20 W or so for single-core operation? Second, it has been observed that Firestorm has relatively long pipeline stages, which would limit the maximal attainable clock.
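The arithmetic behind both paragraphs can be checked with a couple of small helpers. The percentages are the ones quoted above; the function names are just illustrative, and performance is treated as simple throughput.

```python
def runtime_after_speedup(runtime, speedup):
    """New runtime when throughput improves by `speedup` (0.10 = 10% faster)."""
    return runtime / (1.0 + speedup)


def perf_per_watt_ratio(perf_gain, power_gain):
    """Relative perf/W after gaining perf_gain at the cost of power_gain."""
    return (1.0 + perf_gain) / (1.0 + power_gain)


if __name__ == "__main__":
    # 10-15% faster applied to a 10-hour run and a 0.5 s filter.
    for s in (0.10, 0.15):
        print(f"{s:.0%} faster: 10 h -> {runtime_after_speedup(10, s):.1f} h, "
              f"0.5 s -> {runtime_after_speedup(0.5, s):.2f} s")
    # +10-20% performance for +100-150% power, as suggested in the thread.
    for perf, power in ((0.10, 1.00), (0.20, 1.50)):
        print(f"+{perf:.0%} perf at +{power:.0%} power: "
              f"perf/W x{perf_per_watt_ratio(perf, power):.2f}")
```

Either way you slice it, the extra clock roughly halves efficiency for a gain that is hard to notice in practice.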

I'd say that the effort lies on the x86 side of the playing field. They want to be able to capitalize on their designs over a very wide range of products. Considering the challenge of serving an extremely diverse ecosystem of devices, I'd say they do pretty well, but the competitive advantage here lies with Apple in the relatively narrow spaces where they choose to compete.

The overall idea is that Apple's design is probably very different from Intel's or AMD's. Modern x86 CPUs are designed to achieve very high clock rates while remaining scalable and operational across wide clock ranges. Apple CPUs are designed to do as much work as possible with as little energy as possible, which is not the same as just running a CPU at a lower clock to save energy.

I, on the other hand, don't think they care much. The marketers may mention it if it falls into their lap, of course, but Johny Srouji has been super clear that they prioritize efficient performance, not performance alone. That's the story they reinforce both directly in their presentations and implicitly with their very compact enclosures with long battery lives. Apple's silicon team has also stated clearly that they feel it's a great advantage to design for a target product, as opposed to selling silicon in bulk to a variety of customers with varying needs. At the end of the day, Apple sells finished products, not chips, so the actual product story is what they focus on, and we get presentations that make us tech heads groan in frustration. 😀

See above. I don't believe that Apple would miss the chance to claim the absolute performance crown in single-threaded operation.

@EntropyQ3 I suppose we will have to agree to disagree on this topic, then. Let's revisit this in a couple of years when Apple's plan is clearer.


AMD is eating their market thanks to that delay.

Intel didn't give up the single-core laptop CPU speed crown. They're just 10 years behind schedule.

@EntropyQ3, I agree with most of what you said in your last post, except the part about Apple not binning. They are in fact binning, just not by clock speed. There are two tiers of M1, two tiers of M2, three tiers of M1 Pro, and two tiers of M1 Max, based on the number of GPU cores and sometimes the CPU cores as well. (I'll ignore M1 Ultra for this discussion.)
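Binning by enabled core count can be sketched with a simple binomial model. This assumes each GPU core is independently defect-free with a made-up probability of 98%; real defect statistics are not public. A die with 9 working cores out of 10 gets sold in the 8-core tier, which is how partially defective dies are salvaged instead of scrapped.

```python
from math import comb


def prob_at_least(k_needed, n_cores, p_core_ok):
    """P(at least k_needed of n_cores are defect-free), cores independent."""
    return sum(comb(n_cores, k) * p_core_ok**k * (1 - p_core_ok)**(n_cores - k)
               for k in range(k_needed, n_cores + 1))


def tier_shares(n_cores, tiers, p_core_ok):
    """Split dies into tiers by enabled core count; tiers sorted high to low.

    A die goes into the highest tier it qualifies for; the rest is scrap.
    """
    shares, taken = {}, 0.0
    for enabled in tiers:
        p = prob_at_least(enabled, n_cores, p_core_ok)
        shares[enabled] = p - taken
        taken = p
    shares["scrap"] = 1.0 - taken
    return shares


if __name__ == "__main__":
    # A 10-core GPU sold as 10-core or 8-core parts (like the two M2 tiers);
    # the 98% per-core yield is a hypothetical figure.
    for tier, share in tier_shares(10, [10, 8], 0.98).items():
        print(tier, f"{share:.4f}")
```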

It's not that they don't care. They became complacent, and their designs barely improved because they thought they'd dominate the market. Then came Zen, then came Apple.

Intel does not care about performance per watt, as their laptop chips are nowhere near as efficient because of a range of factors.

You aren't going to see 20% jumps every single generation.

Let's be honest, M2 was underwhelming by Apple standards. M3 should be more exciting.

That the design decisions behind M1 make it impossible to systematically achieve stable operation at higher clocks than 3.2

It is not the design.

You just do not present any evidence. You just have to look at the facts. At the current operating voltages, Apple is not anywhere close to the limit (contrary to the AMD/Intel desktop cores). Any circuit in the same technology scales similarly with voltage. So the relative scaling from 0.9 V to, say, 1.4 V is similar between, say, AMD and Apple. In other words, it is impossible that one architecture stops scaling at 0.9 V and the other does not.

Second, Apple is using low-leakage/high-Vt cells and fewer gates/fins, as you do for any ultra-mobile design. This is absolutely required to get good efficiency, and in particular long battery life in low-utilization use cases. This is the second factor that limits your frequency.

Finally, of course, the maximum also depends on the architecture. However, with the current architecture, the maximum is much higher than you expect: easily 4.5+ GHz, if I had to estimate.

See above. I don't believe that Apple would miss the chance to claim the absolute performance crown in single-threaded operation.

Apple makes products, which happen to be much more power efficient than the competition. They are not going to throw this advantage out of the window just to claim "the absolute single threaded performance crown". That title is totally irrelevant for Apple.


I don't see why this is relevant. They're not comparing Apple to Intel, but M2 to M1.

Cinebench is not a valid basis for comparison. As has been explained ad nauseam, it depends on the Intel Embree library. It isn’t optimised well for non-Intel chips.

M2 is just an overclocked and bigger M1, as the M2 apparently thermally throttles in the MacBook Pro with a fan.

Better wait for the M3 MBA next year which will be much cooler on 3nm.

Guess this also answers all the complaints about why Apple kept the 13” MBP around: the M2 needs a fan.


You just do not present any evidence. You just have to look at the facts. At the current operating voltages, Apple is not anywhere close to the limit (contrary to the AMD/Intel desktop cores).

There should be an easy way to determine whose opinion is more correct. We need to look at the power consumption of M2. If it stays within the same ballpark (5W) as M1, then it’s the design. If it is higher than M1, then it’s voltage/tweaking of the efficiency curve.

Any circuit at the same technology scales similarly with voltage. So the relative scaling from 0.9V to say 1.4V is similar between say AMD and Apple.

In other words, it is impossible that one architecture stops scaling at 0.9 V and the other does not.

Can you elaborate a bit more on this? In my - admittedly amateur - understanding it’s not about the capability to run at a certain voltage/frequency but about circuit synchronization and signal propagation. If the variation in signal propagation time between functional units is high, increasing the frequency past certain limit can’t mask it anymore, leading to synchronization errors. Or would increasing the frequency reduce the variation proportionally?


M2 is just an overclocked and bigger M1 as the M2 apparently thermal throttles in the MacBook Pro with a fan.

According to what data?

There should be an easy way to determine whose opinion is more correct. We need to look at the power consumption of M2. If it stays within the same ballpark (5W) as M1, then it’s the design. If it is higher than M1, then it’s voltage/tweaking of the efficiency curve.

Not sure what you are trying to say. Voltage tweaking is always at play; what I said is that they will stay far away from the limit. That said, I would expect a larger and inherently faster core (from an IPC perspective) to consume more power at iso-voltage and iso-technology.

Can you elaborate a bit more on this? In my - admittedly amateur - understanding it’s not about the capability to run at a certain voltage/frequency but about circuit synchronization and signal propagation. If the variation in signal propagation time between functional units is high, increasing the frequency past certain limit can’t mask it anymore, leading to synchronization errors. Or would increasing the frequency reduce the variation proportionally?

Just to give an example (I do not know if the numbers are correct, and they probably are not): let's assume an M1 achieves 3.2 GHz at its maximum voltage of, say, 0.9 V, and an AMD design achieves 4 GHz at the same voltage. We do know that the AMD design can go up to 5 GHz at 1.4 V. That's a 25% increase in performance (at more than double the power). So it is reasonable to assume that an M1 could also increase its frequency by 25% when running at 1.4 V, which would be 4 GHz in this example.
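The scaling argument, and the propagation-delay question it answers, can be sketched in Python. The first function just applies the reference design's relative gain, using the illustrative numbers from the post. The second uses the textbook alpha-power-law delay model, where max frequency goes roughly as (V - Vth)^alpha / V; the values of `v_th` and `alpha` are assumptions, and `k` stands in for everything design-specific (critical-path depth, load capacitance). What it shows is that `k` cancels in the ratio, so under this model the relative gain from 0.9 V to 1.4 V is set by the process, not the design. The gain it prints will not match the 25% in the example, since it depends entirely on the assumed constants.

```python
def scale_like_reference(f_target_base, f_ref_base, f_ref_high):
    """Give a design the same relative clock gain a reference design gets."""
    return f_target_base * (f_ref_high / f_ref_base)


def alpha_power_fmax(v, k, v_th=0.35, alpha=1.3):
    """Alpha-power-law estimate: fmax proportional to (V - Vth)**alpha / V.

    k lumps together design-specific factors (critical-path depth, load
    capacitance). v_th and alpha are assumed, process-level constants.
    """
    return k * (v - v_th) ** alpha / v


if __name__ == "__main__":
    # Illustrative numbers from the post: AMD goes 4 -> 5 GHz, so M1 3.2 -> ?
    print(f"scaled M1 clock: {scale_like_reference(3.2, 4.0, 5.0):.2f} GHz")
    # Under this model the 0.9 V -> 1.4 V gain is identical for any k.
    for k in (1.0, 2.5):
        gain = alpha_power_fmax(1.4, k) / alpha_power_fmax(0.9, k)
        print(f"k={k}: fmax gain from 0.9 V to 1.4 V = {gain:.2f}x")
```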

The other option you have is to use higher-performance cells. The thing with high-performance cells is that the dynamic power does not increase much, but the leakage takes a big toll. And leakage is the most important thing if you are shooting for long battery life under partial-load scenarios (like web browsing). Therefore leakage is king in mobile designs; however, you do not care about leakage when you put your design into a desktop.
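A toy model of the leakage trade-off, assuming average power is utilization times full-load dynamic power plus a constant leakage term. The two cell-library profiles are invented numbers chosen to match the description above (slightly higher dynamic power, much higher leakage for the fast cells). It shows the high-performance cells costing proportionally far more at light load, which is where battery life is decided.

```python
def total_power(utilization, dynamic_full, leakage):
    """Average power: dynamic part scales with utilization, leakage does not."""
    return utilization * dynamic_full + leakage


# Hypothetical cell-library profiles in arbitrary units.
LOW_LEAK = {"dynamic_full": 10.0, "leakage": 0.2}
HIGH_PERF = {"dynamic_full": 11.0, "leakage": 1.5}

if __name__ == "__main__":
    for u in (0.05, 0.25, 1.0):
        lo = total_power(u, **LOW_LEAK)
        hi = total_power(u, **HIGH_PERF)
        print(f"utilization {u:.0%}: low-leak {lo:.2f}, "
              f"high-perf {hi:.2f}, ratio {hi / lo:.2f}")
```

At full load the two libraries are close; at web-browsing-like loads the leakage term dominates and the fast cells look much worse.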

To finalize my argument, we also have to look at process variation. Your lowest-performing SKU has to be signed off at the slow/slow corner when doing timing closure at design time. This implies that your fastest chips can run quite a bit faster at the same voltage, but you do not make use of this because you do not bin your chips into separate SKUs. You can be certain that Intel is using binning extensively.

In any case, to show you how much headroom there is between actual mobile hardware and the theoretical limit, take the Cortex-A72 as an example. In contemporary ultra-mobile designs, this CPU was clocked at a maximum frequency of around 2.4 GHz (and often lower). TSMC took the Cortex-A72 IP and tweaked both the voltage and the cells used (7.5T, 3p+3n) to see what is possible. The result was that they could clock the A72 IP at 4.2 GHz.
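For scale, the headroom figures mentioned in the thread work out as follows. The A72 numbers are from the post above; the 4.5 GHz M1 figure is the earlier personal estimate, not a measurement.

```python
def headroom(shipping_ghz, demonstrated_ghz):
    """Relative clock headroom between a shipping and a demonstrated clock."""
    return demonstrated_ghz / shipping_ghz - 1.0


if __name__ == "__main__":
    # TSMC's tuned A72 vs. a typical ultra-mobile shipping clock.
    print(f"Cortex-A72: {headroom(2.4, 4.2):.0%}")
    # 4.5 GHz is the poster's estimate, not a measured value.
    print(f"M1 (estimate): {headroom(3.2, 4.5):.0%}")
```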

You can read about it here


Thanks for the explanation, it seems I will have to revisit my beliefs about how these things work. According to notebookcheck it also appears that M2 is indeed using more power, although I would like to see more detailed tests.

Also, thanks for the link, very interesting! They write that they had to redesign the cache as well as use higher-powered cells to achieve these frequencies. Wouldn’t that mean that the efficiency at lower frequencies is likely to have diminished? What I am curious about is what clock they could have reached without making these changes. After all, is it still the same A72 if you change the hardware implementation details like that? For instance, I can certainly imagine that Firestorm is in principle able to reach much higher frequencies if one tweaks things like that, but will it still retain its unmatched efficiency at 3.2 GHz? After all, isn’t that what this is all about?
