@PowerHarryG4 Hi, at the moment I have everything running, but not working as it should; maybe you can take it further. I did change the formula for building Squid, but I don't think it makes a difference.

Generate the certificates the same way @Wowfunhappy's installer does (thank you!). I put them in a separate folder:

cd

mkdir SquidConf

cd SquidConf

openssl req -x509 -newkey rsa:4096 -subj '/CN=Squid' -nodes -days 999999 -keyout squid-key.pem -out squid.pem
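
Before pointing Squid at the new files, it can help to confirm that the certificate and the key really belong together. This is a minimal sketch, not part of the original steps: `cert_matches_key` is a hypothetical helper name, and it assumes the file names used above.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only when the certificate ($1) and the
# private key ($2) contain the same public key.
cert_matches_key() {
    cert_pub=$(openssl x509 -in "$1" -noout -pubkey 2>/dev/null) || return 1
    key_pub=$(openssl pkey -in "$2" -pubout 2>/dev/null) || return 1
    [ -n "$cert_pub" ] && [ "$cert_pub" = "$key_pub" ]
}

# Run from the SquidConf folder; does nothing if the files are not there.
if [ -f squid.pem ] && [ -f squid-key.pem ]; then
    # Show who the certificate is for and when it expires.
    openssl x509 -in squid.pem -noout -subject -enddate
    cert_matches_key squid.pem squid-key.pem && echo "certificate and key match"
fi
```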

Edit /etc/squid/squid.conf. Mine looks like this:

http_port 3128 ssl-bump generate-host-certificates=on cert=/home/pi/SquidConf/squid.pem key=/home/pi/SquidConf/squid-key.pem

tls_outgoing_options cafile=/etc/ssl/certs/ca-certificates.crt

sslcrtd_program /usr/lib/squid/security_file_certgen

#sslcrtd_children 10 startup=5 idle=1

acl localnet src 0.0.0.1-0.255.255.255

acl localnet src 10.0.0.0/8

acl localnet src 100.64.0.0/10

acl localnet src 169.254.0.0/16

acl localnet src 172.16.0.0/12

acl localnet src 192.168.0.0/16

acl localnet src fc00::/7

acl localnet src fe80::/10

acl excluded_domains ssl::server_name .pypi.org .pythonhosted.org

acl apple_domains ssl::server_name_regex ess\.apple\.com$ ^sw.*\.apple\.com$

acl excluded any-of excluded_domains apple_domains localnet

ssl_bump splice excluded

ssl_bump bump all

acl fetched_certificate transaction_initiator certificate-fetching

cache allow fetched_certificate

http_access allow fetched_certificate

sslproxy_cert_error deny all

http_access allow localhost

http_access deny to_localhost

http_access allow localnet

http_access deny all

cache_log /dev/null

access_log none

logfile_rotate 0
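
Since this configuration sends both logs to nowhere, a syntax check before starting the service is the easiest way to see problems. The sketch below reuses the `squid -k parse` call that the init script further down relies on; `squid_parse_problems` is a hypothetical filter name, and the assumption is that Squid labels problem lines with FATAL, ERROR, or WARNING.

```shell
#!/bin/sh
# Hypothetical filter: keep only the lines of parser output that flag a problem.
squid_parse_problems() {
    grep -E '(FATAL|ERROR|WARNING):' || true
}

# Only runs where Squid is installed at the path the init script uses.
if [ -x /usr/sbin/squid ]; then
    sudo /usr/sbin/squid -k parse -f /etc/squid/squid.conf 2>&1 | squid_parse_problems
fi
```

No output means the parser found nothing it considers a problem; it does not prove the proxy behaves as intended.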

For some reason the script that starts the service, /etc/init.d/squid, was missing, so I added it. If you have the same problem, here is the content. I did this step quite differently myself, but I think this should work.

sudo nano /etc/init.d/squid

#! /bin/sh

#

# squid Startup script for the SQUID HTTP proxy-cache.

#

# Version: @(#)squid.rc 1.0 07-Jul-2006 luigi@debian.org

#

# pidfile: /var/run/squid.pid

#

### BEGIN INIT INFO

# Provides: squid

# Required-Start: $network $remote_fs $syslog

# Required-Stop: $network $remote_fs $syslog

# Should-Start: $named

# Should-Stop: $named

# Default-Start: 2 3 4 5

# Default-Stop: 0 1 6

# Short-Description: Squid HTTP Proxy version 4.x

### END INIT INFO

NAME=squid

DESC="Squid HTTP Proxy"

DAEMON=/usr/sbin/squid

PIDFILE=/var/run/$NAME.pid

CONFIG=/etc/squid/squid.conf

SQUID_ARGS="-YC -f $CONFIG"

[ ! -f /etc/default/squid ] || . /etc/default/squid

. /lib/lsb/init-functions

PATH=/bin:/usr/bin:/sbin:/usr/sbin

[ -x $DAEMON ] || exit 0

ulimit -n 65535

find_cache_dir () {

w=$(printf ' \t') # space tab

res=`$DAEMON -k parse -f $CONFIG 2>&1 |

grep "Processing:" |

sed s/.*Processing:\ // |

sed -ne '

s/^['"$w"']*'$1'['"$w"']\+[^'"$w"']\+['"$w"']\+\([^'"$w"']\+\).*$/\1/p;

t end;

d;

:end q'`

[ -n "$res" ] || res=$2

echo "$res"

}

grepconf () {

w=$(printf ' \t') # space tab

res=`$DAEMON -k parse -f $CONFIG 2>&1 |

grep "Processing:" |

sed s/.*Processing:\ // |

sed -ne '

s/^['"$w"']*'$1'['"$w"']\+\([^'"$w"']\+\).*$/\1/p;

t end;

d;

:end q'`

[ -n "$res" ] || res=$2

echo "$res"

}

create_run_dir () {

run_dir=/var/run/squid

usr=`grepconf cache_effective_user proxy`

grp=`grepconf cache_effective_group proxy`

if [ "$(dpkg-statoverride --list $run_dir)" = "" ] &&

[ ! -e $run_dir ] ; then

mkdir -p $run_dir

chown $usr:$grp $run_dir

[ -x /sbin/restorecon ] && restorecon $run_dir

fi

}

start () {

cache_dir=`find_cache_dir cache_dir`

cache_type=`grepconf cache_dir`

run_dir=/var/run/squid

#

# Create run dir (needed for several workers on SMP)

#

create_run_dir

#

# Create spool dirs if they don't exist.

#

if test -d "$cache_dir" -a ! -d "$cache_dir/00"

then

log_warning_msg "Creating $DESC cache structure"

$DAEMON -z -f $CONFIG

[ -x /sbin/restorecon ] && restorecon -R $cache_dir

fi

umask 027

ulimit -n 65535

cd $run_dir

start-stop-daemon --quiet --start \

--pidfile $PIDFILE \

--exec $DAEMON -- $SQUID_ARGS < /dev/null

return $?

}

stop () {

PID=`cat $PIDFILE 2>/dev/null`

start-stop-daemon --stop --quiet --pidfile $PIDFILE --exec $DAEMON

#

# Now we have to wait until squid has _really_ stopped.

#

sleep 2

if test -n "$PID" && kill -0 $PID 2>/dev/null

then

log_action_begin_msg " Waiting"

cnt=0

while kill -0 $PID 2>/dev/null

do

cnt=`expr $cnt + 1`

if [ $cnt -gt 24 ]

then

log_action_end_msg 1

return 1

fi

sleep 5

log_action_cont_msg ""

done

log_action_end_msg 0

return 0

else

return 0

fi

}

cfg_pidfile=`grepconf pid_filename`

if test "${cfg_pidfile:-none}" != "none" -a "$cfg_pidfile" != "$PIDFILE"

then

log_warning_msg "squid.conf pid_filename overrides init script"

PIDFILE="$cfg_pidfile"

fi

case "$1" in

start)

res=`$DAEMON -k parse -f $CONFIG 2>&1 | grep -o "FATAL: .*"`

if test -n "$res";

then

log_failure_msg "$res"

exit 3

else

log_daemon_msg "Starting $DESC" "$NAME"

if start ; then

log_end_msg $?

else

log_end_msg $?

fi

fi

;;

stop)

log_daemon_msg "Stopping $DESC" "$NAME"

if stop ; then

log_end_msg $?

else

log_end_msg $?

fi

;;

reload|force-reload)

res=`$DAEMON -k parse -f $CONFIG 2>&1 | grep -o "FATAL: .*"`

if test -n "$res";

then

log_failure_msg "$res"

exit 3

else

log_action_msg "Reloading $DESC configuration files"

start-stop-daemon --stop --signal 1 \

--pidfile $PIDFILE --quiet --exec $DAEMON

log_action_end_msg 0

fi

;;

restart)

res=`$DAEMON -k parse -f $CONFIG 2>&1 | grep -o "FATAL: .*"`

if test -n "$res";

then

log_failure_msg "$res"

exit 3

else

log_daemon_msg "Restarting $DESC" "$NAME"

stop

if start ; then

log_end_msg $?

else

log_end_msg $?

fi

fi

;;

status)

status_of_proc -p $PIDFILE $DAEMON $NAME && exit 0 || exit 3

;;

*)

echo "Usage: /etc/init.d/$NAME {start|stop|reload|force-reload|restart|status}"

exit 3

;;

esac

exit 0

I believe that you have to change the permissions of the file.

sudo chmod 4755 /etc/init.d/squid

Check that Squid starts and check its status (or reboot the RPi). As I said, it should work, but I'm not sure; I did this differently, in a more time-consuming way.

sudo systemctl start squid.service

sudo systemctl status squid.service
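
If systemctl reports that squid.service does not exist, one likely cause is that systemd has not yet generated a unit for the newly added init script; on Debian-based systems that normally happens at boot or after `systemctl daemon-reload`. The sketch below assumes that behaviour. `init_script_ready` is a hypothetical helper name, and it only checks that the script is executable and carries the LSB header shown above before reloading.

```shell
#!/bin/sh
# Hypothetical helper: the init script must be executable and should carry
# the "### BEGIN INIT INFO" header so its dependencies can be read.
init_script_ready() {
    [ -x "$1" ] && grep -q '^### BEGIN INIT INFO' "$1"
}

if init_script_ready /etc/init.d/squid; then
    # Ask systemd to rescan, then start via systemd; fall back to the script.
    sudo systemctl daemon-reload
    sudo systemctl start squid.service || sudo /etc/init.d/squid start
fi
```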

For some reason Squid reported that pinger was closing, and I found that I had to change its permissions to fix it:

sudo chmod 4755 /lib/squid/pinger

Copy squid.pem to your Mac and add it to Keychain Access, then set the proxy in Network preferences to the IP address of the RPi.

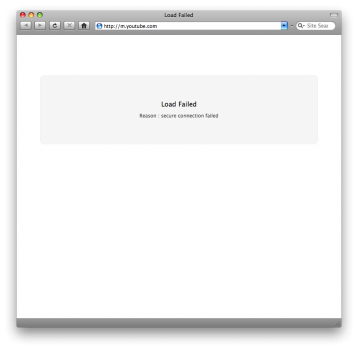

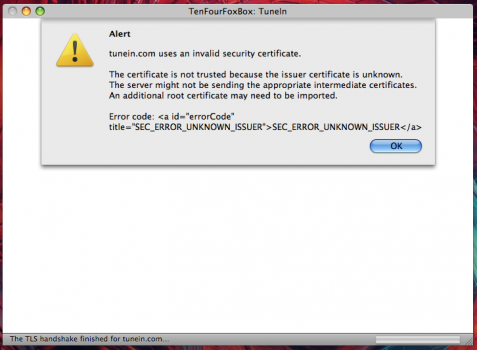

At this point I have Squid running and the Mac using the proxy, but I can't reach Wikipedia.
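
One way to separate "the proxy is unreachable" from "the proxy is not re-encrypting traffic" is to fetch a page through it from any machine on the network, trusting only squid.pem. This is a sketch under assumptions: `proxy_fetch_status` is a hypothetical helper, 192.168.1.10 in the comment is a placeholder for the RPi's address, and curl is assumed to be built against OpenSSL so that `--cacert` replaces the default trust store.

```shell
#!/bin/sh
# Hypothetical helper: fetch a URL through the proxy and print the HTTP status.
# $1 = proxy host, $2 = proxy port, $3 = CA certificate file, $4 = URL
proxy_fetch_status() {
    curl --silent --show-error --max-time 15 \
         --proxy "http://$1:$2" --cacert "$3" \
         --output /dev/null --write-out '%{http_code}\n' "$4"
}

# Example call (placeholder address, replace with the RPi's IP):
# proxy_fetch_status 192.168.1.10 3128 squid.pem https://en.wikipedia.org/
```

Under those assumptions, a normal status code suggests Squid is bumping the connection and signing it with squid.pem, a certificate error suggests the connection is passed through untouched, and a connection error points at the network or the http_access rules.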

As far as I can tell the Mac is connected to the proxy, but I can reach almost the same pages as before (I don't see the LWK message "the proxy can't be reached"). Maybe it's something in the configuration file or my IP assignment (my devices are assigned from xx.xx.xx.43 to .60), or the subnet mask, which here is 255.255.255.0 and differs from the ones specified in squid.conf. I could be wrong; I'm still learning and it's a "work" in progress.
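
On the subnet-mask worry: the `acl localnet src` lines are match ranges, not interface masks, so a client whose own mask is 255.255.255.0 still matches 192.168.0.0/16 as long as its address starts with 192.168. The sketch below checks membership with plain shell arithmetic. `ip_to_int` and `ip_in_cidr` are hypothetical helpers, and 192.168.1.43 is an assumed stand-in for the elided xx.xx.xx.43.

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeeds when address $1 lies inside CIDR range $2.
ip_in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Assumed example address; substitute the Mac's real one.
if ip_in_cidr 192.168.1.43 192.168.0.0/16; then
    echo "client matches localnet"
fi
```

If the Mac's address does match `localnet`, note that `localnet` is also a member of `excluded` in the configuration above, so `ssl_bump splice excluded` would, as far as I can tell, splice every connection from that client instead of bumping it.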

As a curious aside, Pi-hole seems to be affected by the connection through Squid, as if it were bypassed when the proxy is in use (I don't know if this is expected).

Thank you for writing all that. I've been following along, and for some reason when I run 'sudo systemctl start squid.service' it says 'Failed to start squid.service: Unit squid.service not found.'. I must have missed a step at some point, but I'm not sure which one.

Edit: I managed to get Squid to run with 'sudo /etc/init.d/squid start'. I have no idea if this is right, but it's working as a proxy now. I haven't gotten pages such as Wikipedia to work yet.
