We are allowed to speculate about why there is a delay and why Nvidia needs Apple’s help. Why so defensive? I'm a shareholder and I don't get defensive like that.

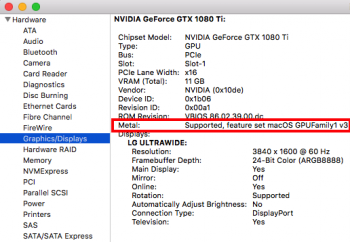

Kepler won't support apps that use 2_v1 features. It's an old card that also doesn't support DirectX 12 on Windows. We are talking about new (and very expensive) cards, and they shouldn't need to target Metal 1. Hopefully, with Apple's help, they will still meet the Metal 2 specs.

We should not need to spend more than $2K to get 10-bit color from Nvidia. That’s indefensible. They could enable it on their mainstream cards.

The BIG issue is that Nvidia needs to list which cards they officially support and end this perpetual beta, which you are trying to deny. I'm a shareholder. I need to stay educated about the companies I invest in. Those drivers are still not out of beta for Maxwell, Pascal, Turing, and Volta, and no board partners have been told to state 'macOS support' on the retail box or been given the green light for Mac editions (if needed).

When Nvidia tells its board partners they can print 'macOS support' on the retail box, and the driver download page lists the 9, 10, and 20 series, then it is out of beta. At that point Nvidia and its board partners have to provide tech support.

That's good business for them. They will sell more GPUs, especially if there is plug-and-play GPU support and 10-bit color.

I'm not sure why you think I'm being defensive. I've politely asked that if you are posting speculation, particularly in this thread, you clearly indicate that it is your theory or opinion rather than a well-known fact. I really don't think that's too much to ask, because it avoids a lot of confusion. Maybe it's just me, but when I see these things posted as fact, I assume I've missed some news, because I don't follow these things as closely as I used to.

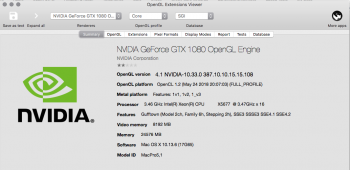

I haven't looked at the Metal 2 specs/features in detail, but I would imagine that Pascal+ could support it. That is, of course, assuming that Apple has provided all the necessary information to NVIDIA (internal frameworks and kexts, documentation, and so on). Nobody outside of Apple and NVIDIA knows the real reason why there hasn't been a Mojave web driver, and I've posted my theories in the past.

If you want 10-bit color on cheap consumer hardware, buy AMD. I've been saying this for years. NVIDIA believes this is a premium feature, and thus includes it only with their professional Quadro line. If you don't like that, buy an AMD card instead.

When have I ever tried to deny the beta status (at best) of the Maxwell+ GPUs? Six years ago, in the first version of my FAQ post, I included information about how the cards should work but noted that they are not officially supported. I've been saying that ever since, even after NVIDIA officially acknowledged that PC cards would work on macOS with their web driver, albeit with beta support. It sounds like you're asking for them to be officially supported as they are on Windows and Linux, and quite frankly I just don't see that happening anymore. Given that the Turing cards ship with a UEFI that provides a boot screen on the cMP, one might assume NVIDIA was working towards every card supporting the cMP, which would be an important step towards all cards being officially supported under macOS. However, the lack of a Mojave web driver suggests that this is either no longer the case, or that we are at the very least a long way off from such a situation (perhaps because Apple doesn't want it). If you don't like this, then just buy an AMD card, which in my opinion is very clearly the message Apple is sending right now.

I also really don't think it's as simple as saying "oh, we should just end the beta status and provide full customer support on macOS". I would imagine there's a huge difference between the number of support staff NVIDIA has for Windows and for macOS. They'd have to either train or hire a ton of people to provide the same level of support as on Windows. They'd likely have to include Hackintosh support, which opens up a whole new can of worms. It's been pretty clear to me that NVIDIA simply does not want to do this. For a long time, until several years ago at least, you could basically buy any Maxwell card and it would just work. Then Apple started revving their OS build numbers every couple of weeks with security updates, which meant the web driver had to be updated more than once a quarter. It was around this time that the relationship between the two companies started to go downhill, and the macOS driver quality has gotten steadily worse. My take has always been that NVIDIA simply does not care as much about macOS as it used to, and is devoting fewer resources to supporting it.

As I've been saying clearly for many years now, the end result is pretty simple: don't buy an NVIDIA GPU for use under macOS. Personally, games were always my focus and I've just switched to Windows 10 on my Hackintosh and haven't even booted macOS in a few years now.

It would be really good business for Apple and Nvidia to finally resolve this. Apple should take control of the driver situation and roll it into macOS.

Why do you think this? Apple already has a driver in macOS that supports the NVIDIA GPUs that Apple cares about (i.e. Kepler). I can't imagine Apple actually wants to make it easy for people to keep using their nearly decade-old cMP machines; they would much rather sell them a new iMac Pro, or perhaps the new modular Mac Pro when it comes out. They already have an eGPU solution that uses AMD cards. Why exactly would Apple care about NVIDIA GPUs at this point? Sure, there are vocal groups of people who post on internet forums like this one, but I'd imagine that's a tiny fraction of Apple's customer base.