And from TSMC N4 to TSMC N3B as Apple has done?

Isn't N4 just a marketing name for N5 version 1.2? Wikipedia lists the A16 Bionic as a 5 nm (N4P).

M3 Chip Generation - Discussion Megathread

- Thread starter scottrichardson

A12 Bionic 7 nm (TSMC N7)

A13 Bionic 7 nm (TSMC N7P)

A14 Bionic 5 nm (TSMC N5)

A15 Bionic 5 nm (TSMC N5P)

A16 Bionic 5 nm (TSMC N4P)

A17 Pro 3 nm (TSMC N3B)

The "downside" − if you want − of doing a tiny die shrink every year, is that there isn't a bigger leap from the most advanced 5 nm to the least advanced 3 nm process. The full potential has partially been realised over the last three years. 😇

Benchmarks are a sore point with me and I strongly distrust them. I think most processors (and many other things too) are designed and optimized to make benchmark results look better, and those results are not reflective of actual performance gains or user experience. As a result, benchmarks are typically biased toward a particular architecture or manufacturer.

I am interested in basic chip characteristics (clock speed, cache size, codec encoders/decoders), but forget benchmark results. And I’m interested in other features too: battery size, interfaces, size, weight, cost.

I think you have it backwards. Certain benchmarks are crafted to favor one CPU and/or GPU over others, rather than the CPUs being designed to crush a specific benchmark. 3DMark got caught crafting some benchmark tests to favor Intel several years ago, and there are multiple examples of benchmarks being crafted to favor either Nvidia or AMD on the GPU side over the years. Even the tests run on UserBenchmark are questionable, because that site has an overt bias against all things AMD.

Isn't N4 just a marketing name for N5 version 1.2? Wikipedia lists the A16 Bionic as a 5 nm (N4P).

Not sure what significant point you are trying to make when '5nm' is also a made-up marketing term. So a marketing term made up over a marketing term is what?

N4 isn't 'made up'. N4 is incrementally denser than N5. That is all these 'made up' numbers are indicating anyway. "more dense". NONE OF THEM are any actual measurement. The 'nm' stuck on the end of '5nm' is relatively far more disingenuous than N5 vs N4.

Something I'd like to know is how everyone has suddenly come to the consensus conclusion that the N3B process is a failure. So far as I know, there hasn't been a detailed breakdown of the A17 yet to determine whether or not it is impressive. I mean, sure, we have some guesses from the performance, but we don't have any truly detailed analysis yet.

It is rumored to have poor yield, but it is a solid improvement.

TSMC's 3nm update: N3P in production, N3X on track

The last and final FinFET node from TSMC lives on.

Let's say TSMC told Apple in 2017 that the real N3 node will be ready by 2023 and Apple is given rough design guidelines so they can start A17 design. Suppose that in 2019, TSMC says the real N3 node won't be ready, but instead, they will release a stop-gap node called N3B in 2023. Apple is given brand-new specs.

A bit of revisionist history.

" ... “On N3, the technology development progress is going well, and we are already engaging with the early customers on the technology definition,” said C.C. Wei, CEO and co-chairman of TSMC, in a conference call with investors and financial analysts. “We expect our 3-nanometer technology to further extend our leadership position well into the future.” ..."

AnandTech Forums: Technology, Hardware, Software, and Deals

Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.

In July 2019, TSMC had not fully finished defining 3nm. So any looney-tunes, crazy schedule they could have pulled out of their butt in 2017 wasn't worth toilet paper. You need to know what the process is before you can throw up a credible schedule.

N3B likely isn't a stopgap node. Most likely N3E was designed AFTER N3B was mostly done and the projected costs in time and wafers caused a number of customers to 'walk away' from it. Customers told TSMC they'd wait longer for 'more affordable'. The pandemic arrived and that group of customers just got longer and longer and longer, to the point that everybody exited. Staying on derivatives of the N5 family was just way safer (lower risk when fab capacity availability was a very big unknown; stuff already working has a far better chance of continuing to work in a disruptive context than something that hasn't worked yet).

N3B takes more complicated manufacturing techniques than N3E (it has a longer 'bake' time and requires more steps; all of those raise costs and risks). N3E eases up on complexity. If there is any stopgap, it would likely be the one EASIER to do. If you have to rush to get something out the door to fill a gap, you typically don't pick a HARDER task to accomplish that.

N3B is likely an incremental adjustment to what a few customers (or perhaps just one) asked them to do originally in 2019, but that doesn't really make it a 'stopgap'. If the adjustments were made to make it workable, then they were pretty much necessary.

N3B probably isn't long term, mainly because a process with just one customer isn't viable. As much as fanboys posture Apple as a mega customer that could solely support a TSMC process all by itself... it can't. Apple's buys are spread across a range of fab processes at TSMC. In any one process, Apple's support varies over time.

Apple just lost 2 years of design and planning.

In some alternative universe.

Something I'd like to know is how everyone has suddenly come to the consensus conclusion that the N3B process is a failure,

This really hasn't been 'suddenly'. Early on in the process maturity phase, most 'first of the generation' process nodes have substantially higher defect rates. For example, N5 vs N7 vs N6 below.

AnandTech Forums: Technology, Hardware, Software, and Deals

The first instance of N5 plots better than N7 , but worse than N7+ and N6 ( these last two are refinements of N7).
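For anyone wondering why defect density gets so much attention, here is a rough sketch using the simple Poisson yield model, Y = exp(-A * D0), where A is die area and D0 is defects per unit area. The die area and defect densities below are made-up illustration values, not TSMC data, and real fabs use more elaborate models.

```python
import math


def poisson_yield(die_area_cm2, defects_per_cm2):
    """Fraction of dies expected to have zero defects under a Poisson model."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


# Hypothetical numbers for illustration only (not measured values).
DIE_AREA_CM2 = 1.0
for label, d0 in [("mature process", 0.1), ("early process", 0.3)]:
    y = poisson_yield(DIE_AREA_CM2, d0)
    print(f"{label}: D0={d0}/cm^2 -> yield ~{y:.0%}")
```

Under this model the same defect density hurts more as the die gets bigger, which is one reason large dies are expensive on a young process.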

N3 is a more substantial change than N5 vs N7. N3 was likely up over N7 defect rates at the -2Q, -1Q phase (most rumors in 2022 basically supported that). N3B is far, far more at the limits of what the first generation EUV fab machines can do. TSMC has to resort to more extensive multipatterning because it is trying to 'print/draw' smaller than what the machine can 'naturally' do. N5 and N7 far more hit the sweet spot of what the first gen EUV fab machines can do (that is in part why there was a generation-to-generation drop at the start).

N3E eases back toward N5. The SRAM/Cache density is exactly the same.... so back to those 'old' alignments with the EUV machine sweet spot. ( N3B is trying to do better density by adding complexity. )

The other problem with N3B is that it takes longer to make (+1 or so months). So getting from -2Q, -1Q to HVM is likely going to take longer. Quality improves as more wafers are processed. But if it takes substantively longer to process wafers, then the QA feedback cycle is longer, so progress is likely going to be slower.

N3B probably went HVM at a different defect density than N5 or N7 did. The 'failure' drumbeat has been about it not being the same, as opposed to whether it is making relative progress or not. (N3B is slower, but it is also slower to make.)

N3E is making the same kind of 'early' start at a lower defect density that N7+ and N6 made, because N7 took most of the 'experience learning curve' lumps. (It is also backtracking to loop in more N5 learning curve knowledge.)

N3B hasn't been getting better as fast as N5, N7, and N6 did, so there is a herd jumping on the bandwagon to declare it a 'failure'. There seems to be a decent overlap with the same folks who heaped 'failure' on Intel "10nm" even after it moved to '10 nm SuperFin' (and to Enhanced SuperFin, which got relabeled Intel 7). Once the early HVM is 'bad yields', then it is 'bad yields' forever. That isn't necessarily true.

so far as I know, there hasn't been a detailed breakdown of the A17 yet to determine whether or not it is impressive or not. I mean, sure, we have some guesses from the performance but we don't have any truly detailed analysis yet.

The recent 'shade' being thrown at N3B is coming from some of the folks who hyped up how N3 was going to bring 'miraculous', 'huge', 'mind blowing' jumps in performance. Instead of admitting their hype wasn't well grounded, it is far easier to throw misdirection at the fab process.

Apple's designs are relatively cache/SRAM heavy. N3B only got an incremental improvement there and N3E will have NONE. Apple could do refinements on cache access and internal networking to make a cache of the same capacity better, but they were not going to be able to take a larger 'hammer' to problems with an even larger cache this time.

N3B and N3E are going to be more expensive than N5/N4 were. So even bigger die sizes (the crutch they have leaned on for the last couple of N5 family iterations) were likely out the window also. (A17 is likely going to turn out the same size or smaller than A16; basically a retreat back to 'normal' A-series die sizes.)

The other problem is a monomaniacal obsession with narrow-dimension benchmarks. If single thread doesn't jump xx%, then it is a fail. Most likely Apple has multi-dimensional, holistic system metrics they are evaluating on. The singular P core may not be at the pinnacle of the priorities list. The single-thread, hot rod drag racing crowd is fixated on the peak clock rate jumps that N3E would bring, not paying much attention to the negative that SRAM stagnation also brings. They are just focused on brute force overclocking their way out of issues.

P.S. N3B may not be a 'print money' fab process for TSMC , but it also seems on track to getting to break even. So throwing 'failure' at something that is at , or above , breakeven is at least somewhat misplaced.

Not sure what significant point you are trying to make when '5nm' is also a made-up marketing term. So a marketing term made up over a marketing term is what?

Nah, 😂 5nm is a specific type of ASML extreme ultraviolet lithography (EUVL) machine. N5, N5P and N4P are all made on the same machines in the same factory. That’s why N4P is referred to as a second generation of "5nm technology". N3B and beyond are made on completely new machines in entirely new factories. For the first time ZEISS had to replace their lenses with a system of mirrors, because the wavelength of the light is becoming so small that the glass would’ve absorbed the EUV light entirely. So from here on it’s all "3nm technology" even if it goes down to 2nm or 1nm. Only when a new generation of lithography machines is invented will there be a new nanometer name for that technology.

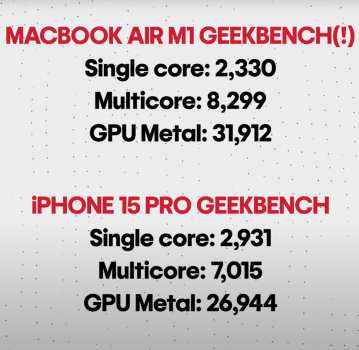

Looks like the GPU improvements are between 20 and 50%, depending on the specific metric used.

165 fps at Aztec offscreen. That’s not far from the M1!

Apple iPhone 15 Pro and iPhone 15 Pro Max GFXBench Scores Reveal A17 Pro GPU Performance Improvements - MySmartPrice

Apple iPhone 15 Pro and iPhone 15 Pro Max have made appearance on GFXBench benchmark database. Take a look at the details.

But it is a die shrink to 3 nanometers. If reality doesn't match with your expectations, check your assumptions! N3E promised an 18% speed improvement at the same power draw OR a 32% power reduction at the same speed over N5. Not both and not on a different chip with a lot more features. Naturally Apple can't achieve the full 18% when they want to save energy for other new tasks.

"I do not think it means what you think it means"... You are using "die shrink" incorrectly. What you mean to say is that they're using a process with smaller features. But a "die shrink" or "optical shrink" refers to a chip that is the same design as a previous chip, just with reduced feature sizes. There is no question: the A17 is NOT a die shrink of the A16.

Let's say TSMC told Apple in 2017 that the real N3 node will be ready by 2023 and Apple is given rough design guidelines so they can start A17 design. Suppose that in 2019, TSMC says the real N3 node won't be ready, but instead, they will release a stop-gap node called N3B in 2023. Apple is given brand-new specs.

Apple just lost 2 years of design and planning.

Just all speculation of course. But this could have happened and we have an A17 that was designed with less time than a typical generation.

I kind of doubt any of these things work like that. And I am also not quite sure that Apple would target finalising a design years before the commercial availability of a process. When you look at the timing of their patents, they are usually submitted 18-24 months before the design shows up in a commercial product. There is not much difference here between A17 and any earlier SoC. The only thing I suspect is that the new GPU design was already supposed to show up in A16.

Nah, 😂 5nm is a specific type of ASML extreme ultraviolet lithography machines (EUVL). N5, N5P and N4P are all made on the same machines in the same factory.

That is all pretty detached from the facts about the ASML scanners.

" ...Currently, the most sophisticated EUV scanners in various fabs are ASML's Twinscan NXE:3400C and NXE:3400D. These scanners are equipped with 0.33 numerical aperture (NA) optics, delivering a 13 nm resolution. Such a resolution is suitable to print chips on manufacturing technologies featuring metal pitches between 30 nm and 38 nm. However, when pitches drop below 30 nm (at nodes beyond 5 nm), 13 nm resolution will not be enough and chipmakers will have to use EUV double patterning and/or pattern shaping technologies. Given that double patterning EUV can be both costly and fraught with risks, the industry is working on High-NA EUV scanners, which have a 0.55 NA, to achieve an 8nm resolution for manufacturing technologies intended for the latter half of the decade. ..."

AnandTech Forums: Technology, Hardware, Software, and Deals

Nothing there is "5nm". None of it. The High-NA scanner nobody has in production use yet. The first one is going to an Intel R&D lab... not to make common end user product at all. And even it won't be 5nm resolution.
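The resolution numbers in that quote line up with the Rayleigh criterion, resolution = k1 * wavelength / NA. A quick sketch, assuming the 13.5 nm EUV wavelength and a process factor k1 of 0.32. The k1 value is my assumption, picked so the 0.33 NA case reproduces the quoted 13 nm; it is not a figure from the article.

```python
EUV_WAVELENGTH_NM = 13.5  # wavelength of the EUV light source


def rayleigh_resolution_nm(numerical_aperture, k1=0.32,
                           wavelength_nm=EUV_WAVELENGTH_NM):
    """Smallest printable feature per the Rayleigh criterion.

    k1 is an assumed process factor, not a published ASML number.
    """
    return k1 * wavelength_nm / numerical_aperture


low_na = rayleigh_resolution_nm(0.33)   # current NXE scanners
high_na = rayleigh_resolution_nm(0.55)  # High-NA scanners

print(f"0.33 NA: ~{low_na:.1f} nm")
print(f"0.55 NA: ~{high_na:.1f} nm")
```

Either way, neither number is anywhere near "5nm" or "3nm", which is the point: the node names are not scanner resolutions.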

This is why TSMC, Intel, and Samsung are trying to get folks to shift to N5, "Intel 20A", SF3E (Samsung Foundry 3E). There is no direct, physical measurement being made here. The "3nm", "2nm", "5nm" are 'composed' measurements to suggest what a 3D composition is providing (with a one-dimensional unit system). It is a weaker and weaker and weaker attempt to provide continuity with the measurements made decades ago (100nm, 60nm, 50nm, etc.). It is not even completely objective, consistent 'smoke', because each vendor has their own formulation (although there is some attempt to make it somewhat close to meaning the same thing).

There is a long list of multipatterning, pattern shaping, 'bank shot' tricks that work under 13nm for a subset of features.

That’s why N4P is referred to as a second generation of "5nm technology". N3B and beyond are made on completely new machines in entirely new factories.

Basically a half-truth. It is still mostly generation one EUV machines in play. Same article.

The 'tricks' to limbo to lower limits are 'new'. Some of the supplementary tools are new. The new factories are primarily because TSMC bought MORE EUV machines and they need lots more floor space.

The 3400C and 3400D have adjustments in throughput and some resolution differences, but they work with the same baseline light source.

The floor space thing is an issue. Three generations here:

ASML to Ship First High-NA EUV Tool This Year: $300 Million per Scanner

ASML on track to ship its first Twinscan EXE scanner this year.

Bigger and bigger 'machine' to make smaller and smaller stuff. ( above the 0.55 NA are people at scale. )

TSMC is buying more EUV scanners than everyone else. If buy lots, then need more room for lots. These are NOT going to fit in the same footprint as the "old stuff" DUV scanners. Not even close.

For the first time ZEISS had to replace their lenses with a system of mirrors, because the wave length of the light is becoming so small that the glass would’ve absorbed the EUV light entirely. So from here on it’s all "3nm technology" even if it goes down to 2nm or 1nm. Only when a new generation of lithography machines is invented, there will be a new nanometer name for that technology.

Intel was going to wait for 0.55 High NA to roll out Intel 18A. But they are going with the EUVs they can get 'sooner rather than later'. There are some pattern shaping tools they are going to use to wiggle around the limitations. Is it going to be more expensive to make ? Yes. Are they going to be able to do massive wafer volumes ? No (at least at first ). But it should end up being very bleeding edge.

There is coupling between new machines of substantively different base light sources and new fab processes over an extended amount of time, but it is not as tight as you are proposing.

I've been away since Friday but I've caught up, and I want to point out something that a lot of people are confused about.

Several people posted about "overclocking", as if Apple could arbitrarily boost the A17's clockspeed if they were willing to pay the price in efficiency. That would then suggest that Apple could, for example, just crank the clocks on the Studio or Pro, to get better performance. There is an apparent assumption that this is relatively easy to do.

That's not true.

Someone else posted about lengthening the pipeline in order to accommodate higher clocks. Yes, you may well need to do that, but that's not necessarily enough either.

There are a lot of ways in which a design can be fundamentally incompatible with a higher clock. In that case not even liquid cooling will get you meaningfully past that limit.

For example, you may design a circuit which works great at 4GHz, but which will fail completely at 4.1GHz, because it depends on signal propagation across a certain path of transistors and wires to complete within a single clock cycle, and the path is simply too long for that to happen due to physics (speed of electrons in silicon, roughly). That's why when I was spitballing last month about how fast the M3 could get, I used 4.5GHz as an arbitrary number, but qualified that by noting that it might not be possible to run the M3 that fast no matter how much power Apple was willing to throw at it. We just don't know.
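A toy version of that timing argument: a path meets timing only if its delay fits inside one clock period. The 245 ps critical path below is a hypothetical number chosen to sit between the 4.0 GHz and 4.1 GHz periods. It is not a measurement of any Apple core, and real timing closure also involves setup margins, clock skew, and voltage.

```python
def clock_period_ps(freq_ghz):
    """Length of one clock cycle in picoseconds."""
    return 1000.0 / freq_ghz


def meets_timing(critical_path_ps, freq_ghz):
    """True if the slowest path settles within a single clock cycle."""
    return critical_path_ps <= clock_period_ps(freq_ghz)


# Hypothetical critical-path delay, for illustration only.
CRITICAL_PATH_PS = 245.0

for freq in (4.0, 4.1):
    ok = meets_timing(CRITICAL_PATH_PS, freq)
    print(f"{freq} GHz: period {clock_period_ps(freq):.1f} ps, "
          f"meets timing: {ok}")
```

More power or better cooling does not change the outcome here; only shortening the path (or splitting it across pipeline stages) does.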

If I had to bet (note, my bets about the M3 this year so far have been pretty bad), I'd guess that part of the work Apple's been doing in this generation of cores is increasing headroom for higher clocks.

I kind of doubt any of these things work like that. And I am also not quite sure that Apple would target finalising a design years before the commercial availability of a process. When you look at the timing of their patents, they are usually submitted 18-24 months before the design shows up in a commercial product. There is not much difference here between A17 and any earlier SoC. The only thing I suspect is that the new GPU design was already supposed to show up in A16.

An Apple exec was quoted saying they start planning and design roughly 4 years before the release of a new SoC. Since TSMC had delays and N3B is only a temp node while N3E comes online, I don't think this idea is farfetched.

Since N3B isn't design-compatible with N3E, I doubt Apple would put all of its eggs on a node that can't be extended in the future.

An Apple exec was quoted saying they start planning and design roughly 4 years before the release of a new SoC. Since TSMC had delays and N3B is only a temp node while N3E comes online, I don't think this idea is farfetched.

4 years huh? 2019 + 4 is ...... 2023. Not 2022 (when A16 would have needed to arrive). Again the 'math' on A16 on TSMC N3 doesn't add up at all.

Since N3B isn't design-compatible with N3E, I doubt Apple would put all of its eggs on a node that can't be extended in the future.

There is no 'egg' stuck on N3B. Design compatible is more an indication of just how "cheap" you can do the transition, not whether it can be done at all. At one point Apple had an A-series SoC on both Samsung and TSMC at the same time! Serially moving the A17 from N3B to N3E over a 12-month period would not be hard if Apple wanted to.

Even in the N5 family there was a 'cheaper' way to get to N4/N4P and a more expensive way to get to N4/N4P that better optimized the improvements. Mediatek used both. The first to beat Apple into N4 production. And the second to avoid spending even more money on first generation N3.

An Apple exec was quoted saying they start planning and design roughly 4 years before the release of a new SoC. Since TSMC had delays and N3B is only a temp node while N3E comes online, I don't think this idea is farfetched.

Since N3B isn't design-compatible with N3E, I doubt Apple would put all of its eggs on a node that can't be extended in the future.

You are incorrect. N3 (aka N3B) was not a temporary node. It was the first "3nm" generation node meant to be used widely by TSMC's customers.

In the end it may work out that way because TSMC got a lot of pushback from customers who didn't like the costs associated with the node's complexity (a *lot* of EUV layers, double-patterning, etc.). In the end only Apple was willing to invest in a high-volume product on that line (there are a few other customers with relatively low quantities of small chips on N3). But N3B *is* the initial "3nm" process that was planned.

Also, "design-compatible" covers a lot. The actual layout of the A17 Pro on N3B can't match a future A17 on N3E, but lots of the design will carry over.

"I do not think it means what you think it means"... You are using "die shrink" incorrectly. What you mean to say is that they're using a process with smaller features. But a "die shrink" or "optical shrink" refers to a chip that is the same design as a previous chip, just with reduced feature sizes. There is no question: the A17 is NOT a die shrink of the A16.

I know, I know! But ... you falsely assume that, only because the A17 is 3nm and a new design, it must realize the total max possible 18% speed increase from the die shrink plus some extra speed increase from design changes. You do not take into account that new GPU features and power reduction are also valuable design targets.

Only an idiot would choose CPU speed increase above all else. Apple always prides themselves on leading the competition at performance per watt, which can even mean lowering raw performance a little bit while lowering energy consumption by a lot. That's exactly what they did with the introduction of so-called high-efficiency cores. And every time Apple introduces a new media engine (for example RTX) they take a little bit of chip area away from the CPU cores, so that one highly specific task (like raytracing) can run much faster at much higher energy efficiency. The goal is always to build a system with a good balance between all demands, never one that excels at one metric only.

So when TSMC predicts that the 3nm transition will bring up to 18% CPU speed increase, you should expect less not more. Not because Apple engineers are too stupid to achieve the full potential of this technology, but because they have better things to do than winning a meaningless benchmark race.

When you are worried about a benchmark score...

In Maya projects the M2 Ultra is on the same level as the RX 6900 XT. People should evaluate the GPU based on their needs and where they get their money from.

I am seriously concerned about Apple Silicon's GPU performance, since the M2 Ultra is barely close to the RTX 3060 Ti according to Cinebench R24. I really think each chip requires more GPU cores because the power consumption is really low. At this point, Apple Silicon's GPU performance sucks for sure.

Nevermind, I’m an idiot and skipped over R24.

It’s basically a test of Redshift, so it’s a fair comparison of Redshift performance in Cinema 4D. Hardly comprehensive to everything.

And the funniest part is that, again, it appears that those chips are less efficient than the previous generation.

Page 205 - Discussion - Apple Silicon SoC thread

TSMC's N3 process is still a steaming pile of garbage.
