Don't know if this was already posted (GPU comparison of the top-range 27" iMac, 2015 vs. 2014 vs. Mac Pro): http://barefeats.com/imac5k13.html

Here are additional benchmarks and articles: http://barefeats.com/index.html

Just as I expected. What's unexpected is that it runs just as hot as the 2014 model. I was expecting it to be at least a smidgen cooler.


M380 M390 M395 M395X Thread

- Thread starter fob


That test was done with the 4.0GHz i7, which is a bit too hot a chip for the iMac. I would like to see test results with the 3.3GHz i5 and the M395X.


Wow - you should let Apple know about that. They've sold loads of them already, but maybe they could do a recall.

They don't care. "Let it boil at 100C," they say, so it does.

The 3.3GHz i5 consumes 26W less than the 4.0GHz i7 at 100% utilization.

I wouldn't be surprised if Apple has calculated that these chips can run at 100C for 37 months and then die, just long enough to outlast AppleCare. Then they can sell these suckers their next computer, and there will be fewer machines on the second-hand market.

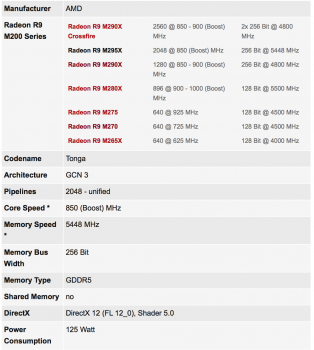

Thanks for the excellent link. That's exactly what we were looking for: a decent benchmark of the 395X vs. the 295X.

It shows barely a 5% increase in graphics benchmarks, and I, for one, will assume that increase is purely down to the 395X being in a newer iMac with a 6th-generation i7.

You're welcome.

And the tests at http://barefeats.com/imac5k13.html were done under OS X. Gaming, for instance, should be tested under Windows.

Nope, the core clock of the M395X is 50 MHz higher than that of the M295X: 850 MHz vs. 900 MHz.

Well, I thought it was impossible for something to suck and blow at the same time, but the 395 obviously does.

If they had just kept the old 295 graphics chip, I would expect a bigger increase than 5% from the two-generation processor upgrade alone: going from Haswell, skipping Broadwell, and straight to Skylake. Intel claims about a 20% increase in chip performance, which should translate to roughly a 10% overall system increase on an iMac, a tad less on a regular PC. So a meagre 5% increase suggests to me there was no GPU improvement whatsoever, and maybe even a very slight degradation.
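The reasoning above can be sketched as a crude linear model, assuming (as the "20% CPU gain becomes about 10% system gain" step implies) that the CPU accounts for about half of the benchmark result and the GPU for the rest. The 0.5 weight and the helper name `implied_gpu_gain` are illustrative assumptions, not anything the benchmark site publishes, and real workloads do not combine this linearly.

```python
# Crude linear model of the argument above: system gain is a weighted sum of
# CPU and GPU gains. The 0.5 CPU weight is an assumption implied by the
# "20% CPU -> ~10% system" step; it is not a measured value.


def implied_gpu_gain(observed: float, cpu_gain: float, cpu_weight: float) -> float:
    """Solve observed = cpu_weight*cpu_gain + (1 - cpu_weight)*gpu_gain for gpu_gain."""
    gpu_weight = 1.0 - cpu_weight
    return (observed - cpu_weight * cpu_gain) / gpu_weight


if __name__ == "__main__":
    cpu_gain = 0.20   # Intel's claimed Haswell -> Skylake uplift (from the post)
    cpu_weight = 0.5  # assumed share of the benchmark that depends on the CPU
    observed = 0.05   # "barely a 5% increase" in the graphics benchmarks
    print(f"expected from CPU alone: {cpu_weight * cpu_gain:.0%}")
    print(f"implied GPU change: {implied_gpu_gain(observed, cpu_gain, cpu_weight):+.0%}")
```

With these inputs the model leaves nothing for the GPU (the implied change comes out negative), which is the direction of the post's conclusion; the magnitude swings heavily with the assumed CPU weight.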

So Apple could have cut power usage by more than 50 watts by going with the i5, run a lot cooler, and at the same time blown away the performance of the three-year-old M395X if they had gone with the much more modern GTX 980M, which uses 25 watts less power than the AMD chip?
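The "more than 50 watts" figure appears to add the CPU saving quoted earlier in the thread (26W at full load) to a GPU saving. A minimal sketch, using the 125W (M295X) and 100W (GTX 980M) ratings another poster cites from Notebookcheck; the thread only gives a rating for the M295X, so it stands in for the M395X here as an assumption, and these ratings are disputed in the thread, so the result is illustrative only.

```python
# Rough power-saving estimate behind the "more than 50 watts" claim.
# Figures are the ones quoted in this thread, not measurements:
#   CPU: 3.3GHz i5 draws 26 W less than the 4.0GHz i7 at 100% load.
#   GPU: M295X rated 125 W vs. GTX 980M rated 100 W (disputed figures;
#        the M295X rating is assumed to stand in for the M395X).

CPU_SAVING_W = 26
GPU_RATINGS_W = {"M295X": 125, "GTX 980M": 100}


def total_saving_w(cpu_saving: int, current_gpu_w: int, alternative_gpu_w: int) -> int:
    """Sum of the CPU saving and the difference between two GPU ratings."""
    return cpu_saving + (current_gpu_w - alternative_gpu_w)


if __name__ == "__main__":
    saving = total_saving_w(CPU_SAVING_W, GPU_RATINGS_W["M295X"], GPU_RATINGS_W["GTX 980M"])
    print(f"estimated saving: {saving} W")  # 26 + 25
```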


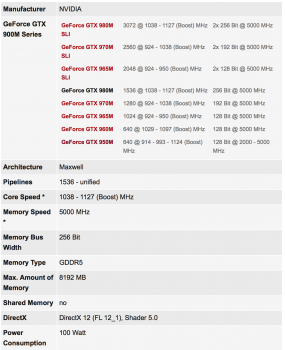


Not sure where you got the '25 watts less' figure from. From everything I've read, the 980M draws more power, load for load, than the AMD chips, though it does deliver more performance for the power it draws.

The problem is that when it comes to pushing a 5K screen, the 980M is worse than the AMD cards, which I assume is why Apple chose AMD. They don't particularly market their iMacs to gamers.

I suspect the "built to die just after AppleCare" theory is closer to the truth than many might imagine. Building for longevity and reliability, I fear, no longer appears to be a priority for many of the world's manufacturers, particularly over the past few years. With quality control lacking and value for money fiercely eroded, it will be little surprise as reputations for integrity slowly decline in the pursuit of greed. How sad if Apple are to join them.


This is right about the 980M.

I just dug around a little. See this link, for example: it clearly says that the GTX 980 for laptops needs over 160 watts, up to 200, which is way beyond what the iMac is built for. And this article says its maximum resolution is 3840x2160. Those seem to be the reasons Apple went with AMD this time.

Is the M395 a mobile version of the R9 380? I guess it is a Tonga (GCN 1.2) with 1792 cores. Can anyone confirm this?

The M390 is likely a rebranded M290X with Pitcairn/Curacao (GCN 1.0) and 1280 cores.

The M380, according to the AMD website, is a Cape Verde (GCN 1.0) with 640 cores. It is both old and weak.

But I've also seen some sources claim that it is a Bonaire (GCN 1.1) with 768 cores. That would be much better than a five-year-old Cape Verde...

The M395X should definitely be Tonga (GCN 1.2) with 2048 cores (source: AMD website).


From your very same link on Notebookcheck, the Tonga M295X is rated for 125 watts and the GTX 980M for 100 watts. I think the text in those articles is off; more likely it's calculating total system draw, with the laptop's CPU and GPU combined. These 100/125 watt ratings can also be found in other sources.

Nope, it's 59 MHz higher (909 MHz vs. 850 MHz), and Desmond is right about the M395 having 1792 cores.

So:

M395 = 1792 shaders, 834 MHz, 2 GB GDDR5

M395X = 2048 shaders, 909 MHz, 4 GB GDDR5
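One way to compare these configurations is theoretical single-precision throughput. A minimal sketch, assuming the usual GCN rule of thumb that each shader completes one fused multiply-add (2 FLOPs) per clock. The 850 MHz M295X clock is the figure quoted earlier in the thread; the M295X's 2048 shaders (the same full Tonga configuration listed for the M395X) is added here as background, not taken from the thread. Peak FLOPS say nothing about memory bandwidth, thermals, or sustained clocks.

```python
# Theoretical peak FP32 throughput for GCN GPUs, assuming each shader
# (stream processor) completes one fused multiply-add (2 FLOPs) per clock.

SPECS = {
    # name: (shader count, core clock in MHz)
    "M295X": (2048, 850),
    "M395": (1792, 834),
    "M395X": (2048, 909),
}


def peak_gflops(shaders: int, clock_mhz: float) -> float:
    """Peak single-precision GFLOPS = shaders * 2 FLOPs * clock (MHz) / 1000."""
    return shaders * 2 * clock_mhz / 1000.0


if __name__ == "__main__":
    for name, (shaders, mhz) in SPECS.items():
        print(f"{name}: {peak_gflops(shaders, mhz):.0f} GFLOPS")
    ratio = peak_gflops(*SPECS["M395X"]) / peak_gflops(*SPECS["M295X"])
    print(f"M395X vs. M295X on paper: {ratio - 1:+.1%}")
```

On paper the M395X comes out roughly 7% ahead of the M295X, entirely from the clock bump, which is in the same ballpark as the 5% benchmark difference mentioned earlier.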


I agree with you when it comes to the GTX 980M, but for the actual GTX 980 for Notebooks (or 990M or whatever), it seems like wccftech is on to something.

They say

The GeForce GTX 980 has a TDP of 165W, the GTX 980M has a TDP of 125W while the GeForce GTX 980 (Laptop SKU) will have a TDP around 125W – 150W (configurable by OEM).

My point still stands: if there is some sort of 100-125 W TDP (thermal design power) barrier that Apple won't break because of the thermals, we won't see a hungrier card anytime soon. TDP doesn't define how many watts the card actually draws; it defines how much heat the cooling around the card must be designed to dissipate. Throttling a GTX 980 for Notebooks down until it's usable won't give a clear advantage over AMD's current offerings, which are also better suited to 5K.
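The thermal-budget argument can be sketched as a simple check of each card's TDP range against a fixed ceiling. The 125 W ceiling is an assumption taken from the upper end of the 100-125 W range mentioned above, the TDP figures are the ones quoted in this thread (wccftech and Notebookcheck), and the helper name `classify` is hypothetical.

```python
# Classify GPUs against an assumed thermal ceiling for the iMac chassis.
# TDP ranges (min, max) in watts are the figures quoted in this thread.

THERMAL_CEILING_W = 125  # assumed: upper end of the 100-125 W range discussed

TDP_RANGES_W = {
    "GTX 980 (desktop)": (165, 165),
    "GTX 980 (laptop SKU)": (125, 150),  # configurable by OEM, per wccftech
    "GTX 980M": (100, 125),              # 100 W per Notebookcheck, 125 W per wccftech
    "M295X": (125, 125),                 # per Notebookcheck
}


def classify(ceiling_w: int, tdp_min_w: int, tdp_max_w: int) -> str:
    """Say whether a card fits the ceiling, fits only when configured down, or exceeds it."""
    if tdp_max_w <= ceiling_w:
        return "fits"
    if tdp_min_w <= ceiling_w:
        return "fits only if configured down"
    return "exceeds"


if __name__ == "__main__":
    for card, (lo, hi) in TDP_RANGES_W.items():
        print(f"{card}: {classify(THERMAL_CEILING_W, lo, hi)}")
```

The middle category corresponds to the throttling scenario in the post: the laptop GTX 980 only fits by running at the bottom of its configurable range.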

I know we're going back and forth here, but no one is planning on gaming at 5K, short of Blizzard games built on decade-old graphics engines. When gaming at 1440p (the old 27" iMac resolution, and what most iMac 5K gamers usually play at), the 980M kills the M295X/M395X. The gap narrows at higher resolutions, but the 980M keeps its advantage. I know the GTX 990M is too power-hungry for the iMac...
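The 1440p choice works out neatly because the 5K panel (5120x2880) is exactly twice 2560x1440 in each dimension. A quick sketch of the pixel arithmetic, with illustrative helper names: at native 5K the GPU has to render four times as many pixels per frame as at 1440p, and 1440p maps onto the panel at an exact 2x scale. This is pixel counting only; it does not model actual game performance.

```python
from typing import Optional, Tuple

Resolution = Tuple[int, int]

RES_1440P: Resolution = (2560, 1440)  # old 27" iMac panel
RES_5K: Resolution = (5120, 2880)     # 27" iMac with Retina 5K display


def pixels(res: Resolution) -> int:
    """Total pixels per frame."""
    width, height = res
    return width * height


def integer_scale(src: Resolution, dst: Resolution) -> Optional[int]:
    """Whole-number factor mapping src onto dst in both axes, or None if none exists."""
    sx, rx = divmod(dst[0], src[0])
    sy, ry = divmod(dst[1], src[1])
    return sx if rx == 0 and ry == 0 and sx == sy else None


if __name__ == "__main__":
    print(f"5K / 1440p pixel ratio: {pixels(RES_5K) / pixels(RES_1440P):.1f}x")
    print(f"1440p -> 5K integer scale: {integer_scale(RES_1440P, RES_5K)}")
```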


I don't see why someone would want to spend an extra 300€ on the M395X.

https://forums.macrumors.com/thread...s-computer-line.1928218/page-14#post-22135918

Here you have the real answer to why Apple picked AMD this time around: GCN cards simply have much higher compute performance in OpenCL.

That too, but the main reason for AMD was the 5K iMac.

Great find!

DisplayPort 1.2 can support video resolutions of up to 3840 x 2160 pixels (4K) at a refresh rate of 60 Hz, and it supports all common 3D video formats. In terms of bandwidth, it can carry 17.28 Gbps. Version 1.3 supports up to 8192 x 4320 (8K), with 32.4 Gbps of raw bandwidth (25.92 Gbps of effective payload, the figure comparable to 1.2's 17.28 Gbps).

http://www.anandtech.com/show/8533/...rt-13-standard-50-more-bandwidth-new-features

Nvidia's Maxwell GPUs are DP 1.2 and AMD's R9 M**** are DP 1.3. That's why Apple chose AMD.
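The bandwidth premise can be checked with a lower-bound calculation: active pixels × refresh rate × bits per pixel. A minimal sketch, assuming 8-bit RGB (24 bits per pixel) and ignoring blanking intervals, so real requirements are somewhat higher. The 17.28 Gbps DisplayPort 1.2 figure is the post's and is already the payload rate after 8b/10b encoding; the post's 32.4 Gbps for version 1.3 is the raw rate, so it is scaled by the same 8/10 factor for a like-for-like comparison. The sketch only tests link bandwidth; it says nothing about how the iMac's internal panel is actually wired.

```python
# Lower-bound link bandwidth for an uncompressed video mode: active pixels only,
# 8-bit RGB (24 bits per pixel), blanking intervals ignored.

DP12_EFFECTIVE_GBPS = 17.28                   # figure from the post (after 8b/10b)
DP13_RAW_GBPS = 32.4                          # figure from the post (raw line rate)
DP13_EFFECTIVE_GBPS = DP13_RAW_GBPS * 8 / 10  # 8b/10b encoding: 8 data bits per 10

MODES = {
    "4K UHD": (3840, 2160),
    "5K": (5120, 2880),
}


def required_gbps(width: int, height: int, refresh_hz: int, bpp: int = 24) -> float:
    """Active-pixel data rate in Gbps; real timings with blanking need more."""
    return width * height * refresh_hz * bpp / 1e9


if __name__ == "__main__":
    for name, (w, h) in MODES.items():
        need = required_gbps(w, h, 60)
        print(
            f"{name} @ 60 Hz needs at least {need:.2f} Gbps | "
            f"DP 1.2 ({DP12_EFFECTIVE_GBPS} Gbps): {need <= DP12_EFFECTIVE_GBPS} | "
            f"DP 1.3 ({DP13_EFFECTIVE_GBPS:.2f} Gbps): {need <= DP13_EFFECTIVE_GBPS}"
        )
```

On these assumptions 4K at 60 Hz fits within DisplayPort 1.2, while 5K at 60 Hz (about 21.2 Gbps before blanking) does not, which is the premise of the post; whether a given GPU actually exposes DisplayPort 1.3 is a separate question the sketch cannot answer.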
