

MP 7,1 Mac Pro 8.1 - WHAT IF...

- Thread starter maikerukun


- Tags

- 7.1 mac pro next generation


Mac Pro as of spring 2023: an overclocked M2 Ultra, max RAM 192 GB, maybe an IO chip and some extra Thunderbolt bandwidth, a lot better cooling than the Studio, and some huge internal storage options via Apple-proprietary NAND modules, all controlled by the SoC itself, obviously. It's gonna be a 20% speed upgrade compared to the M1 Ultra, but advertised as a 50% speed-up using some carefully chosen metrics, and presented to us in charts that are pretty difficult to comprehend and impossible to read right.

Suppose you are right; how can you be so sure about this? Are you assuming, or what? Just wondering...

It will probably be June 20th, the same time that Ventura 13.3 drops and that's what the new Mac Pro will ship with.

Gurman is saying this morning:

"Aside from the iMac, Apple is scheduled to launch about three new Macs between late spring and summer, I’m told. Those three models are likely to be the first 15-inch MacBook Air (codenamed J515), the first Mac Pro with homegrown Apple chips (J180) and an update to the 13-inch MacBook Air (J513)."

The part about an update to the 13" MacBook Air, the M2 MacBook Air debuted July 2022...

Kinda makes me think these will all be M3-based products; M2 for the 13" MBA, M2 (and M2 Pro...?) for the 15" MBA, and M3 Ultra / M3 extreme for the Mac Pro...!

Mac Pro as of spring 2023: an overclocked M2 Ultra, max RAM 192 GB, maybe an IO chip and some extra Thunderbolt bandwidth, a lot better cooling than the Studio, and some huge internal storage options via Apple-proprietary NAND modules, all controlled by the SoC itself, obviously. It's gonna be a 20% speed upgrade compared to the M1 Ultra, but advertised as a 50% speed-up using some carefully chosen metrics, and presented to us in charts that are pretty difficult to comprehend and impossible to read right.

I am hoping Apple decides to introduce the ASi Mac Pro with the M3 Ultra / M3 Extreme SoCs...

As for "extra thunderbolt bandwidth", not needed; the current M1 Ultra has the ability to run eight discrete TB ports, but only utilizes six...

I doubt the M2 has much overclocking headroom. The M1 Max Studio is clocked at the same speed as the M1 Max laptops, despite having a massive heatsink and a mains power supply. One would think it would be clocked a bit higher if that was an option.

ASi SoCs are designed for specific clock speeds to ensure stability under load, with zero provisions for overclocking; but we do see the M2 Max in the top model 16" MacBook Pro laptops with a clock speed bump over the M2 Max with the 30-core GPU...

If the Mac Pro is on M2 gen skip it. First gen Apple SoC Mac Pro will suck.

An M3- or M4-based Mac Pro should be the one to get.

As for "extra thunderbolt bandwidth", not needed; the current M1 Ultra has the ability to run eight discrete TB ports, but only utilizes six...

2x TB ports = 8 lanes of PCIe 3.0. Not exactly a bandwidth monster for a workstation.
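For anyone who wants to put rough numbers on that, here is a minimal sketch of the arithmetic. It assumes each TB port tunnels 4 lanes of PCIe 3.0 (the figure used in the post above) and only accounts for line encoding, so real-world throughput would be somewhat lower. The function and variable names are made up for illustration.

```python
# Approximate one-direction PCIe bandwidth, accounting only for line encoding.
# Assumption (from the post above): each Thunderbolt port carries 4 PCIe 3.0 lanes.

GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}  # giga-transfers per second, per lane
ENCODING = 128 / 130                    # 128b/130b line encoding (Gen3 and later)


def pcie_gb_per_s(gen: int, lanes: int) -> float:
    """Return approximate one-direction bandwidth in GB/s for a PCIe link."""
    return GT_PER_S[gen] * ENCODING / 8 * lanes


two_tb_ports = pcie_gb_per_s(gen=3, lanes=8)   # 2 ports x 4 lanes each
gen3_x16 = pcie_gb_per_s(gen=3, lanes=16)      # a full-width Gen3 slot
gen5_x16 = pcie_gb_per_s(gen=5, lanes=16)      # a full-width Gen5 slot

print(f"2x TB ports (Gen3 x8): {two_tb_ports:.1f} GB/s")
print(f"Gen3 x16 slot:         {gen3_x16:.1f} GB/s")
print(f"Gen5 x16 slot:         {gen5_x16:.1f} GB/s")
```

Under these assumptions, two TB ports' worth of lanes comes to half of a single Gen3 x16 slot, and a Gen5 x16 slot is four times a Gen3 x16 slot.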

Sure, the TB bandwidth is what it is; but increasing it without increasing the actual TB spec (currently TB4, a future of TB5) does nothing...

The point was that the current M1 Ultra can run up to eight discrete TB4 ports, but only runs six; so any TB bandwidth increase would really do nothing unless the TB was also bumped to TB5...?

Where the real bandwidth questions come into play is when we see the ASi Mac Pro with actual PCIe slots...

I'm confused - how would you increase the TB bandwidth without upgrading to TB5? They're directly related.

There would be no increase in TB bandwidth unless you upgraded to TB5, unless I'm missing the point here.

In any case, this wasn't the point I was making.

This is what I assumed we were talking about - using the 'spare' / currently unconnected TB channels of the Ultra to provide PCIe lanes in a Mac Pro tower. This is what I referred to when I said 2x TB4 = 8 lanes of PCIe 3.0.

The part about an update to the 13" MacBook Air, the M2 MacBook Air debuted July 2022...

Kinda makes me think these will all be M3-based products; M2 for the 13" MBA, M2 (and M2 Pro...?) for the 15" MBA, and M3 Ultra / M3 extreme for the Mac Pro...!

I am hoping Apple decides to introduce the ASi Mac Pro with the M3 Ultra / M3 Extreme SoCs...

As for "extra thunderbolt bandwidth", not needed; the current M1 Ultra has the ability to run eight discrete TB ports, but only utilizes six...

ASi SoCs are designed for specific clock speeds to ensure stability under load, with zero provisions for overclocking; but we do see the M2 Max in the top model 16" MacBook Pro laptops with a clock speed bump over the M2 Max with the 30-core GPU...

That is a very interesting speculation. If the M3 is ready and is mostly a 3nm upgrade, that would be an interesting weird occurrence to basically skip the rest of the M2 roll out. One reason this seems more doubtful is you'd basically be releasing the M3 extreme and ultra first, and then trickling that down. Although now that I type that, maybe that's not so crazy.

Right?

They literally just released M2-based products in January 2023, and are now suddenly going to also release M3?

🤣

I'm confused - how would you increase the TB bandwidth without upgrading to TB5? They're directly related.

There would be no increase in TB bandwidth unless you upgraded to TB5, unless I'm missing the point here.

In any case, this wasn't the point I was making.

I was thinking the poster who mentioned the TB ports was saying Apple needed more bandwidth dedicated to TB ports for the ASi Mac Pro; I would think the eight possible ports from the M1 Ultra would be enough...

With the jump from the M1 Ultra to a Mn Ultra, I would hope for a change to TB5...

This is what I assumed we were talking about - using the 'spare' / currently unconnected TB channels of the Ultra to provide PCIe lanes in a Mac Pro tower. This is what I referred to when I said 2x TB4 = 8 lanes of PCIe 3.0.

But I was talking about a MPX 2.0, where both primary & secondary slots go from Gen3 x16/Gen3 x8 to Gen5 x16/Gen5 x16, with no TB provisioning...

That is a very interesting speculation. If the M3 is ready and is mostly a 3nm upgrade, that would be an interesting weird occurrence to basically skip the rest of the M2 roll out. One reason this seems more doubtful is you'd basically be releasing the M3 extreme and ultra first, and then trickling that down. Although now that I type that, maybe that's not so crazy.

Not so crazy, we all know the ASi Mac Pro needs to be an absolute powerhouse, both from the CPU & the GPU perspectives...

What better way than to jump straight to M3 Ultra / M3 Extreme SoCs & ASi (GP)GPUs...?!?

And @deconstruct60 has mentioned in the past about how a lower volume high margins SoC could be used to "clear the pipe" for 3nm production ramp-up...?

Right?

They literally just released M2-based products in January 2023, and are now suddenly going to also release M3?

Just Apple getting back on track after the assorted issues that caused the overall delay with the "two year transition"; seems a no-brainer that Apple should have been on 3nm, and most likely on the M3-family of SoCs by this point in time...

Right?

They literally just released M2-based products in January 2023, and are now suddenly going to also release M3?

🤣

I agree with all of that. But here is a somewhat philosophical and marketing question.

If you introduce a product line that scales from roughly a phone to a tower of power, which way should the introductions move? Now, Apple so far has started with the phone and worked up to iPads and entry-level laptops (Air), on up the ladder to the Studio. Fair way to do it.

But did they go that way because it was their strategy, or just because they were in a rush and had to, i.e., that's the way the initial set of products came out and no Mac Pro would partake in the M1?

What is fundamentally wrong starting with the mega uber super extreme chip, and showing how it scales downward as a philosophy? I guess you remove the chances for "surprises" of new features as you go up, but I would argue those surprises are still surprises for the high end, and would not be a part of the lower end anyway (eg ECC memory).

I may be missing something obvious, but I'm trying to do the exercise in my pea brain, and nothing is jumping out at me as to why it's a fundamentally wrong way to go.

SOME may even argue going from extreme to mobile can make more sense, in that if you have some architectural bottlenecks (i.e., enough PCIe lanes for cards etc.), you can plan out your mega chip to deal with that, and when you scale that down, you won't be bitten by having 'too many' PCIe lanes available for mobile applications.

Then again, I could see a counter argument that if you start from the mega chip, you could lose sight of your biggest money maker products, the phones, and you want to optimize those before all else.

Interesting question of strategy, but on the whole, considering where they make most of their money, I guess it makes more sense to optimize on the iPhone first.

Here is one more interesting question. While optimizing on the iPhone first makes sense, does it not also make sense that they may break up the lines more and more over time and have multiple optimized lines that spread out to become more their 'own thing'? Sure, it makes sense that the iPhone A series was the seed chip, but does it not make sense to have multiple teams designing and optimizing off that for a server, desktop, laptop, tablet, phone, watch, and wearables series of chips, each becoming more and more its own unique thing? For example, the watch does not really need GPU modules with much punch relative to the rest of the line.

WHAT IF...?!?

What if the ASi Mac Pro has the Mn Ultra / Mn Extreme SoC on a MPX 2.0 card; might allow an upgrade or two, at least until MPX 3.0 (dual Gen6 x16 slots) rolls around...

What if the ASi Mac Pro has three of these MPX 2.0 slots; one for the SoC card & two for ASi (GP)GPU cards...?

Obviously, a handful of "regular" PCIe slots for everything else that is not a SoC card or a (GP)GPU card...


Just putting it out there, does anyone else remember that ‘leak’ of a prototype MP with an octagonal enclosure? Think it was early last year. I promise I’m not going crazy haha!

From a 2017 TechCrunch interview:

"Craig Federighi: I wouldn’t say we’re trying to paint any picture right now about a shape. It could be an octagon this time [laughter]. But certainly flexibility and our flexibility to keep it current and upgraded. We need an architecture that can deliver across a wide dynamic range of performance and that we can efficiently keep it up to date with the best technologies over years."


WHAT IF...?!?

What if the ASi Mac Pro has the Mn Ultra / Mn Extreme SoC on a MPX 2.0 card; might allow an upgrade or two, at least until MPX 3.0 (dual Gen6 x16 slots) rolls around...

What if the ASi Mac Pro has three of these MPX 2.0 slots; one for the SoC card & two for ASi (GP)GPU cards...?

Obviously, a handful of "regular" PCIe slots for everything else that is not a SoC card or a (GP)GPU card...

That would be cool, but I wonder why everyone assumes there will be no 3rd party GPUs. Why is it assumed Apple simply cannot write a freak'n driver for an AMD video card? This is so banal and easy for Apple to do. Who cares if you haven't seen eGPU drivers for a long time. It would take a few people maybe 3 months' work to work up a driver. Apple can EASILY afford this and accomplish this. This attitude of difficulty around 3rd party GPUs is totally baffling to me.

Maybe in the end they won't, but it would just prove they are inept spiteful jerks more than some fundamental difficulty in getting 3rd party PCIe graphics cards working on an ASi machine.

Something to do with ASi using TBDR (tile-based deferred rendering), whereas AMD/Intel/Nvidia use IMR (immediate-mode rendering); or maybe the whole "switching to Apple silicon" thing...?

It's not Apple that writes the drivers, it's AMD. If Apple won't use AMD cards anymore in ARM macOS, then AMD won't do it.

AMD cards are not great for 3D workloads anymore. Nvidia just made a huge jump with the 4000 series, it's not even funny.

Apple and Intel target Nvidia in their GPU marketing because, frankly, they are the market leaders. I do hope that Apple releases a PCIe GPU for the Mac Pro, since it needs to be expandable over time.

If Apple adds hardware-based Metal RT for Blender (which looks more than likely), that would be a good start. Think Nvidia OptiX.

That's why if the 8,1 has no hardware RT, then skip it. Actually, any SoC that Apple releases that does not have hardware RT, skip it.

Something to do with ASi using TBDR (tile-based deferred rendering), whereas AMD/Intel/Nvidia use IMR (immediate-mode rendering); or maybe the whole "switching to Apple silicon" thing...?

I don't buy that that difference prevents support of the GPU. Switching to Apple silicon is also not the issue. Apple used to support 3rd party graphics on PowerPC. Again, nothing stops them from writing a driver for an AMD GPU other than the will to do so.

It's not Apple that writes the drivers, it's AMD. If Apple won't use AMD cards anymore in ARM macOS, then AMD won't do it.

AMD cards are not great for 3D workloads anymore. Nvidia just made a huge jump with the 4000 series, it's not even funny.

Apple and Intel target Nvidia in their GPU marketing because, frankly, they are the market leaders. I do hope that Apple releases a PCIe GPU for the Mac Pro, since it needs to be expandable over time.

If Apple adds hardware-based Metal RT for Blender (which looks more than likely), that would be a good start. Think Nvidia OptiX.

That's why if the 8,1 has no hardware RT, then skip it. Actually, any SoC that Apple releases that does not have hardware RT, skip it.

AMD has already written the drivers. I *believe* Apple takes their existing drivers and tidies them up a bit for macOS. Nothing prevents Apple from doing that here. It would likely be more work this time with the Apple hardware being different, but so what, it's well within their ability to do so. The first time would be hardest, and whatever bridge scheduling/delegating code they put in to get off Apple silicon and out to the GPU would thereafter be usable for most, if not all, future 3rd party cards.

Agreed that AMD are in many ways more limited than Nvidia, but at this point beggars can't be too choosy.

I guess I really have been out of the loop -- I hadn't realized the M2 was actually released in June 2022, so releasing the M3 around June 2023 now makes sense.

www.macrumors.com

www.macrumors.com

^per this, it looks like we should see something by the 2nd half of this year, or early 24'

/Resume WHAT IF(fing)....

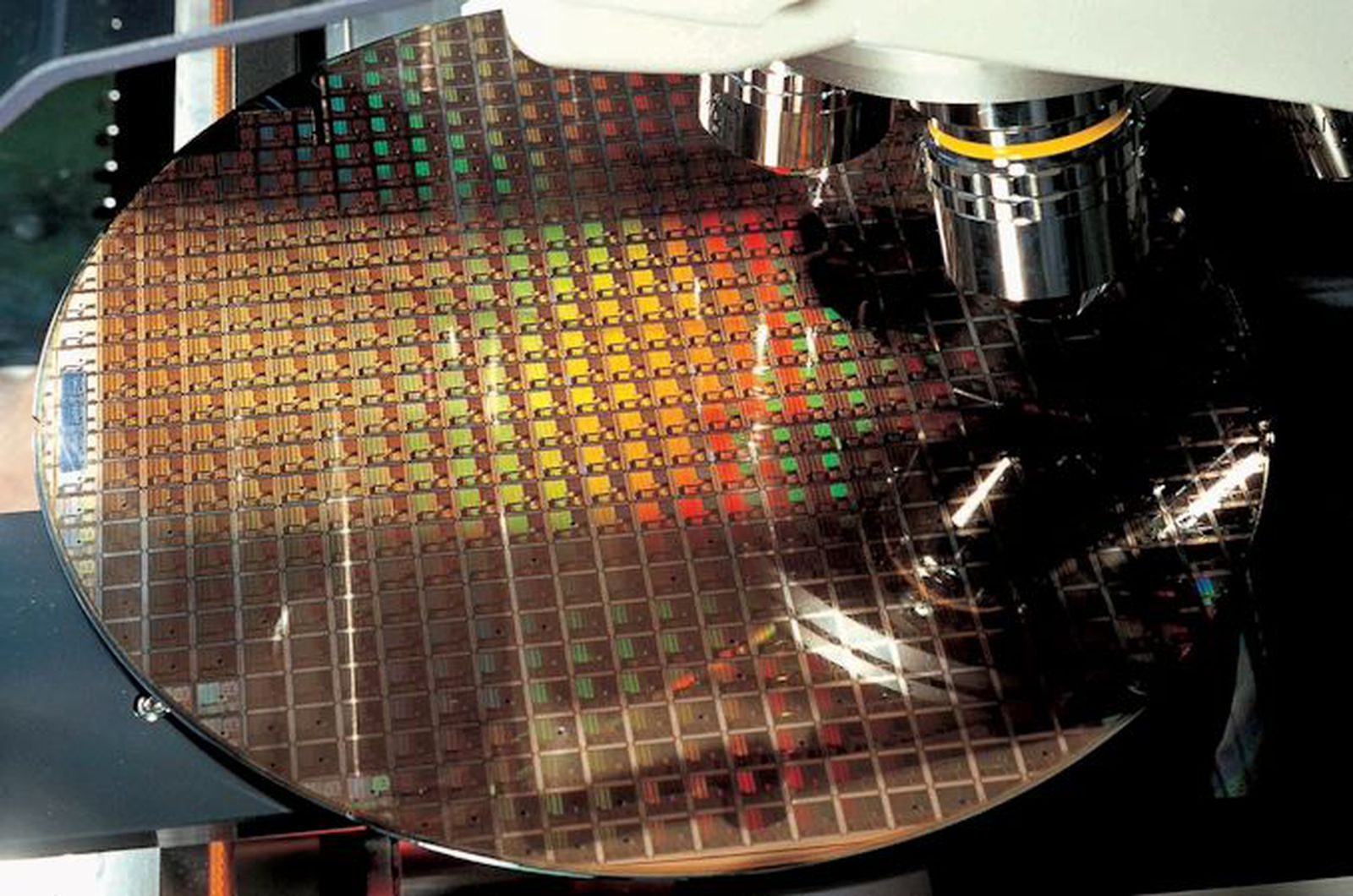

Apple Orders Entire Supply of TSMC's 3nm Chips for iPhone 15 Pro and M3 Macs

Apple has reportedly secured all available orders for N3, TSMC's first-generation 3-nanometer process that is likely to be used in the upcoming iPhone 15 Pro lineup as well as new MacBooks scheduled for launch in the second half of 2023. According to a paywalled DigiTimes report, Apple has...

^per this, it looks like we should see something by the 2nd half of this year, or early 24'

/Resume WHAT IF(fing)....

it looks like we should see something by the 2nd half of this year, or early '24

3nm wafers have been in production since late December 2022, so I could see M3 Ultra / M3 Extreme Mac Pros any time in the next six months...?

But I was talking about a MPX 2.0, where both primary & secondary slots go from Gen3 x16/Gen3 x8 to Gen5 x16/Gen5 x16, with no TB provisioning...

Are you talking about this running off an Ultra chip? It seems not, because that has very few TB (PCIe) lanes spare, none of which are Gen 5. I think we must be talking at cross purposes.

And @deconstruct60 has mentioned in the past about how a lower volume high margins SoC could be used to "clear the pipe" for 3nm production ramp-up...?

It's only high margin when you're selling them to someone else. For Apple, big chips just = high cost.

What is fundamentally wrong starting with the mega uber super extreme chip, and showing how it scales downward as a philosophy? I guess you remove the chances for "surprises" of new features as you go up, but I would argue those surprises are still surprises for the high end, and would not be a part of the lower end anyway (eg ECC memory).

I may be missing something obvious, but I'm trying to do the exercise in my pea brain, and nothing is jumping out at me as to why it's a fundamentally wrong way to go.

I think the issue is that when working with a new process node, you tend to get more imperfections with transistors. Small chips = more chips per wafer, so even with a bunch of imperfections, you still get a good amount of usable chips. If you go in with the big ones first, most of them could be duds, which is a lot of waste and makes the few working ones very costly. Basically - yield issues.
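The yield argument can be sketched with a simple Poisson defect model. This is only an illustration: the defect density and the two die areas below are hypothetical numbers picked to show the trend, not figures for any real TSMC process or Apple chip, and the dies-per-wafer formula is a common textbook approximation.

```python
import math

WAFER_DIAMETER_MM = 300.0  # standard 300 mm wafer


def dies_per_wafer(die_area_mm2: float) -> int:
    """Common approximation for gross dies on a round wafer (edge loss included)."""
    d = WAFER_DIAMETER_MM
    return int(math.pi * (d / 2) ** 2 / die_area_mm2
               - math.pi * d / math.sqrt(2 * die_area_mm2))


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model."""
    return math.exp(-defects_per_cm2 * die_area_mm2 / 100.0)


DEFECT_DENSITY = 0.5  # defects per cm^2; hypothetical value for an immature node

# Hypothetical die sizes: a small phone-class chip vs a large desktop-class chip.
for name, area in [("small die", 100.0), ("large die", 450.0)]:
    gross = dies_per_wafer(area)
    y = poisson_yield(area, DEFECT_DENSITY)
    print(f"{name} ({area:.0f} mm^2): {gross} gross dies, "
          f"yield {y:.0%}, about {int(gross * y)} good dies per wafer")
```

With the same defect density, the large die loses out twice: fewer candidates fit on the wafer, and each one is more likely to contain a defect. In practice, partially defective dies can often be sold as binned-down parts, which this sketch ignores.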

While optimizing on the iPhone first makes sense, does it not also make sense that they may break up the lines more and more over time and have multiple optimized lines that spread out to become more their 'own thing'?

The same CPU and GPU core designs could be used for all the different chips. I'm no expert, but I'd imagine that would contain some of the costs. You'd likely get greater economies of scale from making large numbers of a few different designs though. Currently, the Mac just uses two - Mn and Mn Max - with the Pro being a cut-down Max and the Ultra being 2x Max.

Why is it assumed Apple simply cannot write a freak'n driver for an AMD video card? This is so banal and easy for Apple to do. Who cares if you haven't seen eGPU drivers for a long time. It would take a few people maybe 3 months' work to work up a driver. Apple can EASILY afford this and accomplish this. This attitude of difficulty around 3rd party GPUs is totally baffling to me.

As far as I can see, an eGPU is fundamentally a PCIe GPU connected by 4 PCIe lanes rather than 16. If Apple intended future Mac Pros to use PCIe GPUs, they could have enabled eGPUs with ASi from the start. By now, there would be a robust, well-tested ecosystem of AMD GPUs with ASi. The fact Apple hasn't done this - and has briefed developers to do otherwise - is clear evidence it was never their intention. The difficulty or otherwise of writing GPU drivers is therefore moot.

Agreed that AMD are in many ways more limited than Nvidia, but at this point beggars can't be too choosy.

I guess, though it's odd being a beggar when you have $10K to spend on a Mac.
