The RX 480's 150W TDP is the maximum board power it can draw from the power connector and the PCIe slot combined. The GPU itself does not draw 150W; under a gaming load it draws between 90 and 100W. So yes, dual RX 480s might beat a single GTX 1080 on efficiency, price, and performance.

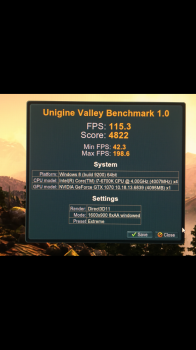

The TDP of an RX 480 (150W) matches the TDP of a GTX 1070 (150W), yet the latter is nearly twice as fast. By the standard performance-per-watt metric, that makes the 1070 nearly twice as efficient as the 480. You can't just shave 1/3 off the RX 480's TDP and claim the AMD chip is more efficient, sorry.
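To make the perf-per-watt arithmetic concrete, here is a rough sketch. The inputs are assumptions, not benchmarks: RX 480 performance is normalized to 1.0, the GTX 1070 is taken as 1.9x as fast (standing in for "nearly twice as fast"), and 100W is used as the RX 480's measured gaming draw from the quoted post. It compares like-for-like TDP figures, the mixed comparison (one card's measured draw against the other's TDP), and the case where both cards draw the same fraction of their TDP.

```python
def perf_per_watt(relative_perf: float, watts: float) -> float:
    """Return performance per watt (relative performance / power in W)."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return relative_perf / watts


# Assumed inputs: RX 480 normalized to 1.0, GTX 1070 assumed 1.9x as fast.
RX480_PERF, GTX1070_PERF = 1.0, 1.9
TDP_W = 150.0        # both cards, as stated in the thread
RX480_DRAW_W = 100.0  # assumed measured gaming draw (upper end of 90-100W)

# Like-for-like: TDP vs TDP.
tdp_ratio = perf_per_watt(GTX1070_PERF, TDP_W) / perf_per_watt(RX480_PERF, TDP_W)

# Mixed metrics: RX 480 at its measured draw, GTX 1070 at its full TDP.
mixed_ratio = (perf_per_watt(GTX1070_PERF, TDP_W)
               / perf_per_watt(RX480_PERF, RX480_DRAW_W))

# Like-for-like again: both cards assumed to draw 2/3 of their TDP.
scaled_ratio = (perf_per_watt(GTX1070_PERF, TDP_W * 2 / 3)
                / perf_per_watt(RX480_PERF, TDP_W * 2 / 3))

print(f"TDP vs TDP:            {tdp_ratio:.2f}")
print(f"480 measured vs TDP:   {mixed_ratio:.2f}")
print(f"both at 2/3 of TDP:    {scaled_ratio:.2f}")
```

Scaling both cards' power by the same factor cancels out of the ratio, so discounting only one card's power changes the comparison rather than the underlying efficiency gap.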

At the end of the day, if you want to buy a Polaris GPU, go for it. Personally, I'm waiting for a 1080 Ti before I upgrade.