This is Apple just getting started. Imagine the beast of a desktop CPU they will have for the iMac and the Mac Pro. They won't be restricted to low wattage, but they will still be low wattage compared to comparable Intel and AMD CPUs.


So M1 Max with 32-core GPU matches RTX 3080 Mobile.

- Thread starter iBug2


How about we start discussing real-world usage?

This needs to be confirmed by the community: is he running the free version, which doesn't use GPU acceleration, and are the settings configured properly to use CUDA? There have been other comparisons where the person didn't set up the test environment properly, made a video claiming the MacBook Pro was faster, and then had to put out an update retracting it.

Could you give me a reference to these fake comparisons?

He is a professional editor and tested with both Premiere and Resolve (if you watched the video), and to my knowledge there isn't a free version of Premiere.


Look back above. The free version of DaVinci Resolve doesn't utilize the GPU. Premiere Pro has a reputation for being a pig, so that's not surprising.

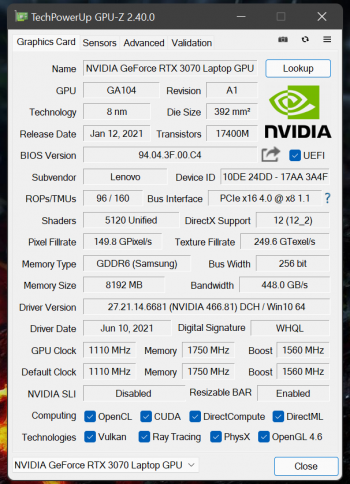

Correct. Here is the GPU-Z readout from my laptop's 3070. Both the mobile 3080 and the mobile 3070 have 448 GB/s of memory bandwidth. The mobile 3060 has 336.0 GB/s.

NVIDIA GeForce RTX 3070 Mobile Specs

NVIDIA GA104, 1560 MHz, 5120 Cores, 160 TMUs, 80 ROPs, 8192 MB GDDR6, 1750 MHz, 256 bit (www.techpowerup.com)
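The 448 GB/s and 336 GB/s figures follow from bus width times effective data rate. A minimal sketch, assuming GDDR6 moves 8 bits per pin per listed memory clock (so the 1750 MHz above is 14 Gbps per pin), that the mobile 3080 uses the same clock, and that the mobile 3060 has a 192-bit bus; those last two points are assumptions, not stated in the thread:

```python
def gddr6_bandwidth_gbs(bus_width_bits: int, memory_clock_mhz: float) -> float:
    """Peak memory bandwidth in GB/s (decimal) for a GDDR6 card.

    Assumes 8 bits per pin per listed memory clock, the way GPU-Z and
    TechPowerUp report GDDR6 (1750 MHz -> 14 Gbps per pin).
    """
    gbps_per_pin = memory_clock_mhz * 8 / 1000  # effective data rate per pin
    return bus_width_bits * gbps_per_pin / 8    # total bits/s -> bytes/s

# 256-bit / 1750 MHz comes from the TechPowerUp entry; the other rows are assumed.
cards = {
    "RTX 3070 Mobile": (256, 1750),
    "RTX 3080 Mobile": (256, 1750),
    "RTX 3060 Mobile": (192, 1750),
}

for name, (bus, clock) in cards.items():
    print(f"{name}: {gddr6_bandwidth_gbs(bus, clock):.1f} GB/s")
```

This prints 448.0 GB/s for the two 256-bit parts and 336.0 GB/s for the 192-bit one, matching the numbers quoted above.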


Apple has just turned the whole PC market on its ear. It has proved that you can have both performance and energy efficiency at the same time. A question for you: when you were rendering video in DaVinci Resolve on both systems, did you hear fan noise on both, and how loud was it?

By the way, congrats on your new MacBook Pro M1 Max.

I heard no fans on the Mac. Also, I'm playing LoL right now with a friend, with the Mac plugged into a 144 Hz monitor and the built-in screen open too, and it's getting about 130 fps at 1440p. I have Discord running, I'm streaming through Discord, I have other programs open, and the fans ARE NOT EVEN ON.

I just saw the video you referenced above, thanks.

From watching it, it referred to Blender, not Premiere or Resolve.

In the conclusion he stated that the Mac doesn't have GPU support in Blender.

You complained that people were cheating in their Resolve benchmark tests by not enabling GPU acceleration on the PC. You then posted a video about an entirely different application, which has GPU acceleration on the PC but not on the Mac.

I genuinely don’t know what to say about this.

And that is probably running through Rosetta.

It is. I would love to see more games getting native Apple Silicon ports.

Are you kidding me? That guy does video editing for a living and you think he is using a free version? You are a laugh riot. Maybe try harder. I know it is hard for you to accept. It's too bad you want to stay in denial land.


From what I have seen, even the apps that run through Rosetta run like they are native. Not all apps run well under Rosetta, though; music plug-ins are one example.

Watch this:

Since you brought up Blender: this is an M1 Pro versus an AMD Ryzen 9 5900HS. Not even the M1 Max, but the M1 Pro.

Some people gotta keep moving the goalposts whenever a macOS product performs well...?!?

But this is even more fundamental: this is about Apple Silicon vs. Intel and AMD. macOS just happens to go along for the ride.

The existing "open" PC architecture is full of bottlenecks.

The RAM to CPU bottleneck results in the CPU having to crank up to ridiculous frequencies to compensate.

The PCIe bottleneck results in dGPUs having to use ridiculously high-bandwidth, expensive, and power-hungry VRAM to compensate.

Apple's approach is a departure from this architecture, which frankly is quite refreshing. You get power and save on energy usage at the same time, resulting in longer battery life for mobile applications and less cooling and noise for desktop solutions.

Can't wait to see what the 27" iMac and Mac Pro replacement will look like.

The only downside with the Apple architecture is upgradability. If you think about it, what Apple has managed to accomplish is to simplify the architecture: hard-wire everything to the CPU. And having 32-channel RAM is unheard of in a laptop, let alone in PC desktops. You need to go to something like the AMD EPYC processors, and those use 225 watts of power.
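The "32-channel" point is easier to see as arithmetic: peak DRAM bandwidth is channels × bits per channel × transfer rate. A rough sketch, assuming 16-bit LPDDR5-6400 channels for the M1 Max and 8 channels of 64-bit DDR4-3200 for an EPYC; these configurations are my assumptions, not figures from the thread:

```python
def dram_bandwidth_gbs(channels: int, bits_per_channel: int,
                       mega_transfers_per_s: int) -> float:
    """Peak DRAM bandwidth in GB/s (decimal): channels x width x transfer rate."""
    bytes_per_transfer = channels * bits_per_channel / 8
    return bytes_per_transfer * mega_transfers_per_s / 1000

# Assumed configurations, for illustration only:
# M1 Max: 32 LPDDR5 channels x 16 bits = 512-bit bus at 6400 MT/s
# AMD EPYC (Rome/Milan): 8 DDR4 channels x 64 bits = 512-bit bus at 3200 MT/s
m1_max = dram_bandwidth_gbs(32, 16, 6400)
epyc = dram_bandwidth_gbs(8, 64, 3200)
print(f"M1 Max: {m1_max:.1f} GB/s")
print(f"EPYC:   {epyc:.1f} GB/s")
```

Under these assumptions both have a 512-bit total bus, so the gap comes from the transfer rate; the M1 Max result (409.6 GB/s) is close to the 400 GB/s Apple advertises.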


With the M1 Pro and Max, you can get peak performance running on battery alone.

And Apple is just getting started.

You have a point there, but frankly, IMHO, with technology advancing at the current pace, by the time I'm ready to upgrade my supposedly upgradeable computer, a part upgrade probably doesn't buy much in terms of speed. It probably makes more sense to upgrade the entire widget and recycle or repurpose the old hardware.

Intel and Apple Silicon both have the same RAM to CPU bottleneck. There are some small quantitative differences, but you can safely ignore them most of the time, even if you are writing performance-critical software. The high frequencies of Intel CPUs are unrelated to the bottleneck. Intel simply does it because it's possible and beneficial in some consumer applications.


The primary effect of the PCIe bottleneck is the programming model where the CPU and the GPU are effectively separate computers connected over a fast network. That's not a real problem in many applications, because they have to scale to multiple systems anyway. Other applications would benefit from the simplicity and performance of shared memory. The bottleneck doesn't increase the need for memory bandwidth, but it may increase the need for the amount of memory.

Upgradeability is more about providing cost-effective devices to many different use cases rather than upgrading systems that are already in use. Tight integration makes sense when you are an average user or close to it. A modular architecture quickly becomes more competitive when one user requires a lot of RAM, another needs several high-end GPUs, and yet another needs a lot of local disk space.

I agree both have bottlenecks. Intel's is a lot smaller compared to AS's, and Intel's problem is that their CPUs have to cater to a lot of variations, so they cannot reduce the bottlenecks as freely as AS. Apple can go wild, limited only by physics and cost. Increasing CPU clock frequencies only benefits workloads that fit into the L1, L2 and L3 caches. Once you need more, you're hit with the RAM-to-CPU (i.e. RAM-to-L3-cache) bottleneck. When a 3.2 GHz processor can process more data than a CPU running at 5 GHz, it shows that there is a bottleneck with the 5 GHz CPU.
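The clock-speed argument can be illustrated with a roofline-style model, a standard way to reason about this rather than anything from the thread: attainable throughput is the lower of the cores' peak compute and memory bandwidth × arithmetic intensity (FLOPs per byte fetched from DRAM). Only the 3.2 GHz and 5 GHz clocks echo the post; every other number is hypothetical:

```python
def attainable_gflops(clock_ghz: float, flops_per_cycle: float,
                      bandwidth_gbs: float, flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    compute_roof = clock_ghz * flops_per_cycle    # GFLOP/s the cores can issue
    memory_roof = bandwidth_gbs * flops_per_byte  # GFLOP/s DRAM can keep fed
    return min(compute_roof, memory_roof)

# Hypothetical chips: same work per cycle, different clocks and DRAM bandwidth.
fast_clock = dict(clock_ghz=5.0, flops_per_cycle=32, bandwidth_gbs=50)
wide_memory = dict(clock_ghz=3.2, flops_per_cycle=32, bandwidth_gbs=200)

# Low intensity = streaming from RAM; high intensity = lots of reuse from cache.
for intensity in (0.25, 1.0, 8.0):
    a = attainable_gflops(**fast_clock, flops_per_byte=intensity)
    b = attainable_gflops(**wide_memory, flops_per_byte=intensity)
    print(f"{intensity:>5} FLOP/B  5.0 GHz: {a:6.1f}  3.2 GHz: {b:6.1f} GFLOP/s")
```

With these made-up numbers the 3.2 GHz chip wins at 0.25 and 1.0 FLOP/B, where both are memory-bound, while the 5 GHz chip only pulls ahead at 8 FLOP/B, once the workload reuses enough data from cache to become compute-bound.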

Engineering is always about trade offs.

Programming models can be abstracted from the underlying implementations. APIs can be written to mask away the RAM-to-PCIe transfer, but that doesn't mean the bottleneck isn't there. Let's take a hypothetical scenario: say we load a 10 MB texture that the GPU needs. In the UMA case, it's loaded into RAM, and the SLC already has a copy of that texture. The GPU doesn't have to wait for a transfer and can access it directly. The higher AS RAM bandwidth goes, the better the performance. And again, as in the CPU case, Apple's GPU doesn't need to clock as high to maintain the same performance. Now with the M1 Max, Apple's GPU has access to more than 40 GB of data to process.

In the case of a dGPU with VRAM, the transfer is limited by PCIe's 32 GB/s limit. To mitigate this, the VRAM has to be as fast as possible to feed data to the GPU. PCIe is great for expansion, but not so good for extreme-bandwidth data transfers.
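The 10 MB texture scenario can be put in numbers. A back-of-the-envelope sketch that uses the post's ~32 GB/s PCIe figure and deliberately ignores latency, driver overhead, and the GPU's own read time:

```python
def transfer_ms(size_bytes: float, link_gbs: float) -> float:
    """Time in milliseconds to move size_bytes over a link of link_gbs (decimal GB/s)."""
    return size_bytes / (link_gbs * 1e9) * 1e3

TEXTURE_BYTES = 10e6  # the 10 MB texture from the scenario above
PCIE_GBS = 32         # the ~32 GB/s PCIe figure quoted above

copy_ms = transfer_ms(TEXTURE_BYTES, PCIE_GBS)
print(f"dGPU: copy over PCIe first, {copy_ms:.4f} ms before the GPU can read it")
print("UMA:  no copy; the GPU reads the same physical memory the CPU wrote")
```

Under these assumptions the dGPU pays about 0.31 ms per 10 MB before it can start. What UMA saves is that copy, not the read itself: the GPU still pulls the texture through its own memory path in both cases.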

Which is why Apple doesn't go into every market there is. They choose to play in the markets where they think they can make a profit. Apple most likely decided that the market they want to play in doesn't value upgradeability. I don't see anything wrong with that.

We still have not seen what Apple's AS Mac Pro looks like, but again, Apple is playing in a niche segment of the market here. Definitely not the enthusiast market.

I just tested another game, Metro Exodus. I was getting 90-110 fps at 1440p with high settings.

Was that running through Rosetta?

Yes, I just launched the Mac version through Steam.


Buy Mn Max-powered Mac minis with 10Gb Ethernet; every time you upgrade to a new unit, your personal render farm increases in size...?!? ;^p

That's exactly what I meant. All good abstractions are leaky. Everything can be abstracted away, but often you have to understand how things really work beneath several abstraction layers if you want to make informed decisions. Especially if you care about performance.

Faster VRAM doesn't help if the bottleneck is getting the data to the GPU in the first place. More VRAM may help if it allows you to keep the data there for later use.
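A toy model of that point, with hypothetical sizes: upload a working set once over PCIe at ~32 GB/s, then read it from VRAM some number of times. The 1 GB working set and the doubled 896 GB/s VRAM are made up for illustration:

```python
def dgpu_time_ms(size_gb: float, passes: int, pcie_gbs: float, vram_gbs: float) -> float:
    """Upload the data once over PCIe, then read it from VRAM `passes` times."""
    upload_s = size_gb / pcie_gbs
    reads_s = passes * size_gb / vram_gbs
    return (upload_s + reads_s) * 1e3

SIZE_GB = 1.0  # hypothetical working set
for passes in (1, 100):
    base = dgpu_time_ms(SIZE_GB, passes, pcie_gbs=32, vram_gbs=448)
    faster = dgpu_time_ms(SIZE_GB, passes, pcie_gbs=32, vram_gbs=896)
    print(f"{passes:>3} pass(es): 448 GB/s VRAM {base:7.1f} ms, "
          f"2x faster VRAM {faster:7.1f} ms")
```

For a single pass the PCIe upload dominates and doubling VRAM bandwidth saves only about 3%; with 100 passes over data that stays resident in VRAM, it cuts the total by roughly 44%.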

The workstation market is just a collection of tiny niches. If Apple takes the easy "4x M1 Max" approach, the new Mac Pro may be underwhelming to many current users. Not enough GPU power, not enough RAM, and so on. It may also lose some of the conceptual simplicity of a true unified memory architecture if accessing the RAM behind another chiplet is slower than accessing local memory.
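The chiplet concern is the classic NUMA trade-off. A minimal sketch of expected memory latency, assuming data is spread evenly so that an access is local with probability 1/N for N chiplets; the 100 ns and 150 ns latencies are hypothetical, not measurements of any Apple part:

```python
def average_latency_ns(local_fraction: float, local_ns: float, remote_ns: float) -> float:
    """Expected memory latency when some accesses go to another chiplet's RAM."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns

# Hypothetical latencies for a multi-chiplet package.
LOCAL_NS, REMOTE_NS = 100.0, 150.0
for chiplets in (1, 2, 4):
    # With data spread evenly, only 1/chiplets of accesses stay local.
    frac = 1.0 / chiplets
    print(f"{chiplets} chiplet(s): {average_latency_ns(frac, LOCAL_NS, REMOTE_NS):.1f} ns")
```

Under these assumptions the average climbs from 100 ns to 137.5 ns at four chiplets, unless the OS and applications keep data near the chiplet that uses it, which is exactly the kind of detail a "true" unified memory model would otherwise let you ignore.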
