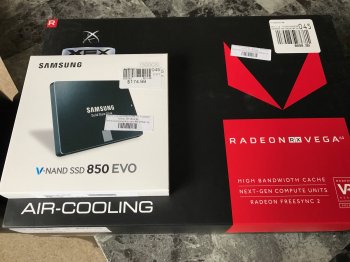

AMD is losing the battle but winning the war? Maybe a war with itself? I am all for open solutions, alternatives to CUDA, and alternatives to data crunchers like Volta, but AMD seems to be losing to its own hype. Raja Koduri has VFX, GPU rendering, CG, and visual effects all over his Twitter page. It feels like an advertisement for The Art Institute, Full Sail, or Gnomon. Except for a few case studies, I don't really understand AMD's end game other than trying to pretend to be relevant as long as possible and not die. Their engineers seem lazy and seem to enjoy pretending they have some relevance to the VFX industry more than actually coding and programming their way into it. They just seem like fans of film and not much else. Show us real solutions for farm rendering in Nuke and Maya, or how OpenCL is a better solution than CUDA. Other than a bunch of self-hype, I don't see any ideas trickling down. Show me the better solution, AMD. There needs to be a lot more software-level integration. A lot!! I don't see it happening. Stability, software integration, and market saturation are all more important than saving 30% on a less efficient card. If I can only render using Blender or some other one-off app and not a full pipeline, there is no end-user advantage. Nvidia and CUDA, though more expensive and closed, seem like they are worth the money. This is AMD's worst launch ever, IMHO. This might be the end of any high-end GPU from AMD. Raja's Twitter feed is a lot of explaining why his platform isn't dominant and how it will be in the future. Raja should just scrub any VFX-related words from his Twitter feed, since his place in that industry is more of a wish for his chip than a reality. I would love to be proved wrong... tick.. tock.. still waiting.