I'd like to use a touchpad. I have many virtual desktops, and with external keyboards it's too difficult.

But thanks for the answer!! 👍🤝

The genuine Apple external touchpad costs something like $50, hmm?

(2019) 16" is HOT & NOISY with an external monitor! :(

- Thread starter Appledoesnotlisten

So, guys, 220+ pages. Sorry, one question: will an eGPU solve the noise and heat problem? If I use an eGPU, will anything change? I'm ready to buy one, but only if it solves the problem.

I have a 16" i9/64GB and I get noise with any monitor.

It works. I've been using an eGPU for about 18 months now. It's not without its own issues:

- Some apps will crash when you disconnect the eGPU, as they were using it to render their application window.

- If your GPU has only HDMI/DisplayPort and your displays are Thunderbolt, HDMI to Thunderbolt cables are expensive.

- Good GPUs are expensive right now. My Radeon 5700T was around $480 before the pandemic. I'm scared to look at current prices.

Something to keep in mind: the eGPU needs to be physically connected to the displays to prevent the overheating problem. You can connect the eGPU and the displays in parallel, and macOS will offload graphics processing tasks to the eGPU, but the AMD 5300M/5500M will still overdraw power and overheat.

*** To people who recommend buying an M1 ***

Keep in mind that some of us have employer-provided MacBooks that we had to beg management for months to approve. Trying to convince the company to buy another one a little more than a year after the last purchase is likely to fall on deaf ears.

Most companies I've worked for have a 3-5 year computer replacement policy. Some are very frugal and will recycle computers as long as they still power on, lol.

Anyway, I could purchase an M1 for myself, but for 40+ hours a week, I'd still need my eGPU.

I agree. Stop recommending the M1. The M1 does not support Boot Camp, Nvidia, CUDA, or eGPUs, and has half the speed of the Intel i9 on scientific compute tasks like matrix multiplication due to its lack of AVX. On my i9 Mac, I can reboot into Windows, plug in a Titan V eGPU, and do scientific compute and machine learning. You can't do that on an M1. For anyone doing scientific computing it is a huge downgrade. Apple put an iPad processor in a laptop; it is more power efficient and can encode certain videos faster, but that does not make it the best CPU for everything.

Where can I find out more about maths benchmarks?

I know Intel pushed hard to help maths libraries exploit their CPUs.

Most reviewers on YouTube are biased: they just test video editing and Geekbench and call it a day. They think everyone is a YouTuber like them, and they do not even know how to test math. The only exception I have found is this objective reviewer, who did a proper review, tested MATLAB, and found results very similar to those I found with Mathematica.

The Intel Math Kernel Library is highly optimized for scientific compute and makes use of AVX, which Apple Silicon lacks.
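On the maths benchmark question, here is a minimal sketch of how one might time a dense matrix multiply on one's own machine instead of relying on reviews. It is not the test used by anyone in this thread; the helper names (`naive_matmul`, `matmul_gflops`, `best_time`) and the matrix sizes are illustrative assumptions. If NumPy is installed, the sketch times `numpy.matmul`, which typically hands the work to whatever BLAS library that NumPy build links against, so the numbers depend heavily on the build. Without NumPy it falls back to a slow pure-Python multiply.

```python
import random
import time


def naive_matmul(a, b):
    """Pure-Python reference multiply of two square lists-of-lists."""
    columns = list(zip(*b))  # transpose b so columns can be walked directly
    return [[sum(x * y for x, y in zip(row, col)) for col in columns]
            for row in a]


def matmul_gflops(n, seconds):
    """An n x n by n x n multiply costs about 2 * n**3 floating-point ops."""
    return 2 * n**3 / seconds / 1e9


def best_time(fn, *args, repeats=3):
    """Best wall-clock time of fn(*args) over a few repeats."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        best = min(best, time.perf_counter() - start)
    return best


if __name__ == "__main__":
    try:
        import numpy as np  # optional; sizes below are arbitrary choices
        n = 1000
        a = np.random.rand(n, n)
        b = np.random.rand(n, n)
        elapsed = best_time(np.matmul, a, b)
        backend = "numpy.matmul"
    except ImportError:
        n = 120
        a = [[random.random() for _ in range(n)] for _ in range(n)]
        b = [[random.random() for _ in range(n)] for _ in range(n)]
        elapsed = best_time(naive_matmul, a, b)
        backend = "pure Python"
    print(f"{backend}: n={n}, {elapsed:.4f} s, "
          f"{matmul_gflops(n, elapsed):.2f} GFLOP/s")
```

Comparing the same script on two machines is only meaningful if both runs use a native build of Python and NumPy, and it says nothing about workloads other than dense matrix multiplication.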

Try reading AnandTech's M1 Pro/Max review for an in-depth look at the power of these SoCs.

For non-Xcode software development, the support for ARM libraries and tools is not there yet.

We have collected more than 50 signatures in less than a week. If everybody from this discussion signs the petition, we could easily reach more than 500. It's not that hard.

Some update:

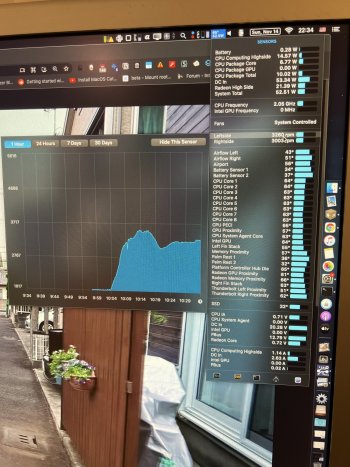

The VRM and temperature mod (re-paste, VRM on thermal pads, one heatpipe added to connect to the fans) seems to have some effect on fan speed. My fans now run at no more than 3500 rpm (audible, but not annoying).

I'm playing 2K video with some other tabs open in the browser (Chrome) and Reeder running, with the GPU driving two external screens (plus the internal one), and it's hovering around 3200-3500 rpm. That is an improvement from the 4500-5000 rpm I had been getting.

(For context, Radeon High Side is ~20 W.)

If you are curious, the vapor chamber is a Surface Book part I got on eBay (the same as the one I used in my A1398).

The 2nd picture was from planning; the finished product has thermal pads on the VRM, and the components are secured with Kapton tape.

I'll probably have some more progress at some point. I'm planning to add another heatpipe to the GPU die and some more thermal pads to the VRMs. It still throttles at 3.4 GHz+. Temperature-wise this system should be able to sustain 3.8+ GHz now, but it still VRM throttles (verified with the limit reasons in ThrottleStop).

Also, the increase in fan noise is correlated with GPU die temperature and PCH temperature. Can someone verify?

PS: Additional info: running two screens (the internal one + an Apple Cinema 30) gives 2700-2800 rpm with less than 20 W on the high side. Sorry for all the edits.

I fully agree with you that the current compiler for the M1 is missing most of the optimizations for scientific computing. But the MATLAB test was done under Rosetta, so it is strongly biased! We should wait at least one year to get non-Apple software optimized for Apple Silicon. Just have a look at the Python world: Pandas is highly optimized for the M1, but several NumPy modules are not. And finally, it looks like nowadays people buy laptops only for gaming or producing videos... so sad.
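Since the Rosetta point matters for any benchmark comparison, here is a minimal sketch for checking whether a Python process is being translated. It assumes the `sysctl` command is on the PATH and relies on the macOS key `sysctl.proc_translated`, which Apple documents for detecting Rosetta. The helper names `parse_translated` and `running_under_rosetta` are made up for illustration, and the check assumes the child `sysctl` process runs in the same translation mode as the Python process that starts it.

```python
import platform
import subprocess


def parse_translated(output):
    """Interpret the text printed by `sysctl -n sysctl.proc_translated`."""
    value = output.strip()
    if value == "1":
        return True   # the process is being translated by Rosetta
    if value == "0":
        return False  # the process is running natively
    return None       # unexpected output, so the answer is unknown


def running_under_rosetta():
    """Return True or False on macOS, or None when the answer is unknown."""
    if platform.system() != "Darwin":
        return None  # not macOS, so Rosetta does not apply
    try:
        result = subprocess.run(
            ["sysctl", "-n", "sysctl.proc_translated"],
            capture_output=True, text=True, check=False,
        )
    except OSError:
        return None  # sysctl could not be started
    if result.returncode != 0:
        return None  # key not available on this system
    return parse_translated(result.stdout)


if __name__ == "__main__":
    print("machine:", platform.machine())
    print("under Rosetta:", running_under_rosetta())
```

A result of `True` would mean the interpreter, and any numeric libraries it loads, are x86_64 builds running through translation, so timings from that process are not a fair measure of native Apple Silicon performance.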

Hi there. I couldn't follow the thread recently but I have some good news.

I contacted engineers at Apple a couple of months ago, and the behavior has already improved in macOS Monterey. The GPU power consumption was 18 watts before the update; now it is only 15 watts with an external monitor connected. Enabling Low Power Mode under the Battery settings also reduces the GPU power consumption to 5-8 watts. That allows me to use my Mac at 55-60 degrees with an external monitor connected. I believe there is nothing more that can be done on Apple's side.

Hope it resolves the issues for everyone.

EDIT: It is also worth mentioning that my fans run at 1600-1800 rpm, which is quite silent.

5-8 watts with the lid open?

Yep, I see 5-8 watts with the lid open in Low Power Mode.

"We should wait at least one year" -- you mean in addition to the year we've already waited? I'm sorry to report that Apple has successfully pushed themselves out into the cold on this one. Until ARM processing is more widespread than it is now (mobile devices, Macs, and throughput-intensive data center deployments), improvements to these aspects of the Clang/LLVM compiler are going to be on Apple alone. And if the optimizations needed are not general to ARM but rather specific to the M1, they will always be on Apple alone. And judging by Apple's behavior in the past, if it doesn't speed up Final Cut Pro or Photos, it is not going to happen. The best we can hope for is that eGPU support is eventually added and computations of interest can be done externally.

Moderator Note:

A link to a petition has been removed from the thread, since the forum rules do not allow linking to petitions. Please don't repost the link.

I agree, I am disappointed too. I hope that the optimization is ARM generic. Anyhow, I guess that Apple cares only about selling more machines, so if losing scientific users is not an issue, they will not care. I am not sure that this is the case, though: scientists and data scientists are a non-negligible fraction of macOS users. This happened because macOS offered a Linux-like environment, without the need to spend time compiling kernels and tweaking configurations, plus solid hardware. Most of these conditions are not true anymore, and we (people doing science) should probably look around for alternatives. Still, I believe that ARM could be the best solution for laptops (if compilers are actually optimized), and I am a bit skeptical that Linux distros will ever be as laptop-friendly as one would like.

The problem with Low Power Mode is that it also throttles the CPU.

Apple should provide an LPM option to only limit GPU idle power without affecting CPU performance.

I guess you are trying to transfer the CPU/GPU heatsink heat to the back plate of the machine.

I tried that before, but the issue is that the back plate gets dangerously hot, and the heat also transfers back to the VRM module via the back plate.

It only works if you use a laptop cooler that directly cools the bottom plate.

Yeah, that's what some other guys are trying to achieve by themselves. It would be the perfect fix, or what I would call "expected behaviour" from a $3k+ laptop. And this thread could finally be closed, after 2 years and almost 6000 posts.

Look closely: I only thermally coupled the back plate to the VRM. The CPU and GPU heatpipes connect only to the added vapor chamber (notice that no thermal pad was added in that area) and the fan cage (with the heatpipe).

There is also a thermal pad from the vapor chamber to the fan cage on the right (where the vapor chamber overlaps the fan housing).

Can we get this thread renamed to "2019 16"...."?

Cheers!

Adding a vapor chamber is an innovative solution.

I can confirm the fans kick in when the CPU temperature rises above 93 °C. Throttling the CPU at 93 °C greatly reduces fan noise.

I used the Mathematica version specially compiled for Apple Silicon. It's not much better than Rosetta.

If fan noise and heat are the main concern for some, remember to open up the back case and clean it out from time to time. I cleaned mine after about a year, and the fan speed dropped ~300 rpm when doing light work. I do have a dog, so my airflow could have been more blocked than most. This chassis is unnecessarily difficult to open up though...

So far I'm fine with the 5 W drop (from 20 W to 15 W Radeon High Side), though the Variable Refresh Rate does come back from time to time. As mentioned in a previous post, LPM feels too sluggish for me.

You should really get an M1, dude. They're great!
